This is my next step after the BeagleBone AI-64 based Bot in Go.

All the code can be found on GitHub. I don’t even think I can explain everything :)

So… yeah… the steps:

Thanks to Yasuhiro Matsumoto, we can install the Go bindings for TensorFlow Lite with:

```
go get github.com/mattn/go-tflite
```

Now we need to cross-compile libtensorflowlite_c.so for aarch64 on an Ubuntu PC, following the steps from Build TensorFlow Lite for ARM boards.

Let’s clone the TensorFlow repo:

```
git clone --depth 1 --branch r2.9 https://github.com/tensorflow/tensorflow.git tensorflow_src_2.9
```

Then we need to add one more dependency line to the tensorflow_src_2.9/tensorflow/lite/c/BUILD file to get external delegate support:

```
cc_library(
    name = "c_api_without_op_resolver",
    ...
    deps = [
        ...
        "//tensorflow/lite/delegates/external:external_delegate",
        ...
    ],
```

Then install Bazel and start the build:

```
wget https://github.com/bazelbuild/bazel/releases/download/5.0.0/bazel-5.0.0-installer-linux-x86_64.sh
chmod +x bazel-5.0.0-installer-linux-x86_64.sh
sudo ./bazel-5.0.0-installer-linux-x86_64.sh
cd tensorflow_src_2.9/
bazel build --config=monolithic --config=elinux_aarch64 -c opt //tensorflow/lite/c:libtensorflowlite_c.so
```

Eventually, after installing a few missing Python packages with pip, we should get the desired libtensorflowlite_c.so file under bazel-bin/tensorflow/lite/c/.

TI TFlite Delegate

So what’s all the fuss about this “delegate”? It is TI’s crucial libtidl_tfl_delegate.so library for the TensorFlow Lite runtime. It offloads computation from the CPU to the C7x/MMA for accelerated execution with the TI Deep Learning Library, which gives a performance boost of about 30x in tasks such as image classification, object detection and image segmentation. Without it, the dual-core TDA4VM can barely squeeze out 6–7 frames per second (if the model has not been properly quantized).

Long story short… My ti_tfl_delegate.go wrapper was born after I scavenged through TI’s repositories and combined everything I found with external_delegate.h from TFlite until something started to work. Thanks to cgo, I could keep my C helper functions and the TFlite function prototypes together with the Go bindings in one file.

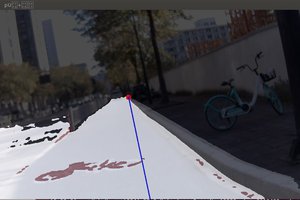

And… after quite some time, I finally finished my image_classification and object_detection examples, which show how to run the mobileNetV1 and ssdLite-mobDet models (precompiled by TI) on the TFlite runtime using libtidl_tfl_delegate.so. The inference can be watched as an MJPEG stream through classification.html and detection.html, respectively, in a browser on any machine in the local network:

```
make build-image-classification-tflite
make build-object-detection-tflite
make run-image-classification-tflite
make run-object-detection-tflite
```

Custom models

Here TI leaves you on your own because the only runtime supported by their Edge AI Studio Model Composer is ONNX.

So after many hours of watching YouTube, copy-pasting Python code and dealing with Python’s “dependency management”, I finally got my image_classification and object_detection training scripts working.

The last obstacle was compiling the resulting TFlite models into the artifacts needed by libtidl_tfl_delegate.so, using this Python example (from the edgeai-tidl-tools repository) of how to do it on an Ubuntu PC.

After more copy-pasting and “dependency managing”, I wrote my own version of the compilation scripts, as well as edgeai-tidl-tools.Dockerfile, to build everything on one machine. So finally I get my desired object detection performance of ≈6.5 ms per frame using Go:

```
make train-image-classification
make train-object-detection
make build-edgeai-tidl-tools-docker-container
...
```

hypnotriod