The Human Connection - 1st Impression

What do the oceans under the ice of Europa sound like? The Europa mission is the "big" vision. What is under the ice? What kinds of sounds are down there? Who is making those sounds, and can we communicate with those beings?

[Inspired by Star Trek IV: The Voyage Home]

Prologue

“Connected” is an often misunderstood and misused requirement in design. Adding a network port to a machine does not make it connected. That is like putting clothing on a monkey and calling it human. True connection means adding pathways between independent systems that allow the “network” to become more than the sum of its parts. The independent systems in a “connected” project become more than they could ever be alone because of their connections to one another.

For example, humans seek out the internet because it allows connection. What draws us is not the ability to move data, but the way we are extended and transformed by pathways to other independent entities on the network. It allows each of us, along with our knowledge, thoughts, and emotions, to be bridged. While this connection is still somewhat primitive, it lets us connect with other information, personalities, thoughts, and emotions that make us more than we were without it. I believe this is what draws people to “the cloud”: we have a connection to the world that we simply did not have before.

We currently stand on the precipice of a fundamental human transformation. We have the technology to extend our being into the universe, to sense and understand it to greater depths than our biology allows. We have the ability to micro-machine and micro-etch physical structures at scales of billionths of a meter. We have the ability to program these devices with abstract rule sets. We have the ability to measure virtually every known physical quantity. These technologies are cheaper than ever and will allow virtually anyone to contribute. Combine this with the capability of connecting human thought across geographically dispersed areas, and we now have the raw materials to make truly remarkable advances in the human condition. With open platforms, we will be able to overcome biological limitations and push into both the world of the small and the universe at large.

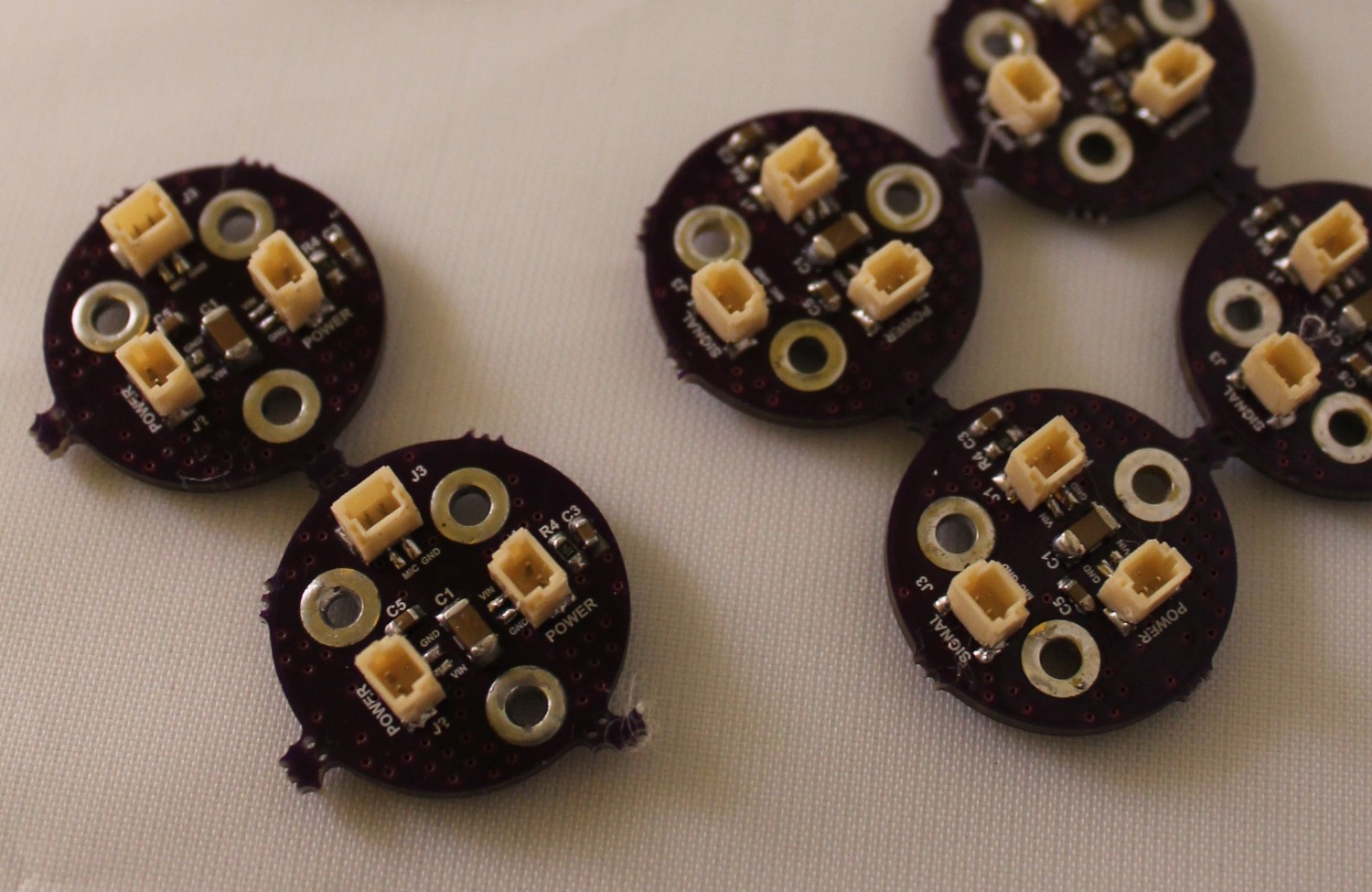

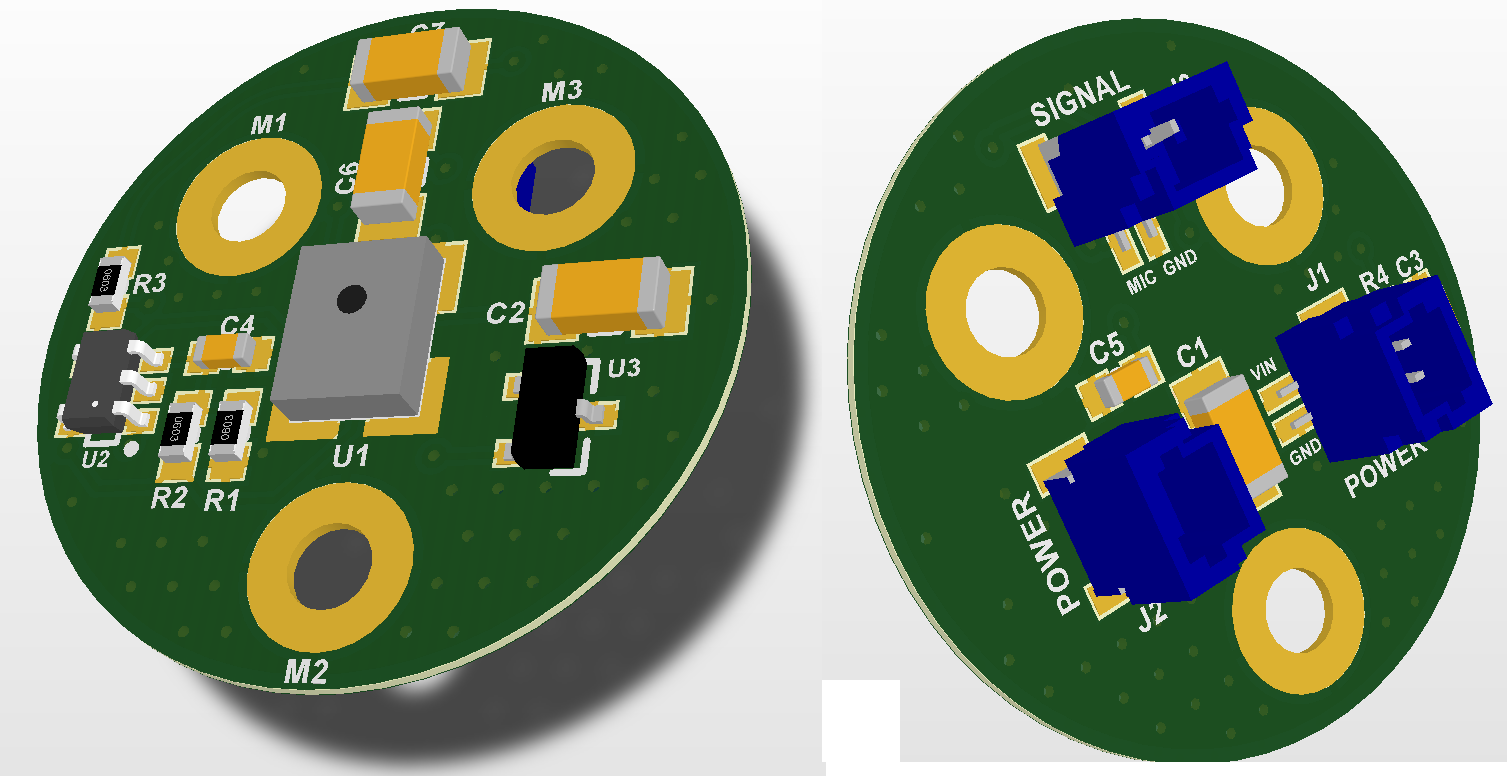

The Human Connection - 1st Impression is an experiment in developing open technologies that leverage the latest low-cost, state-of-the-art electronics to extend human connection and perception into the universe in which we coexist. This concept can span many disciplines, including biology, physics, acoustics, electronics, and engineering. For the Hackaday Prize, this project will look at extending human interaction with fluidic oscillations (a.k.a. sound).

Please start by looking at the eight pages of “conceptual” drawings for the 1st Impression (see Part 1 below). You will see that in this 1st experiment we will synthesize concepts from acoustics, electronics, mathematics (signal processing), and embedded systems. The ideas presented here will be the starting point.

The next step will be a video introduction, followed by the actual documentation of the design process. The design process will be open to all. All software, design drawings, schematics, etc. will be available via GitHub, and instructional videos will be available on YouTube. As much as possible, the electronics will use low-cost development tools (e.g. a $20 LPC-Link or a $35 FRDM-K64F for high-end signal processing, OSH Park for PCBs, etc.). Everything will be available for anyone to learn from, hack, and extend.

Part 1: What exactly is The Human Connection - 1st Impression?

I have always loved the Star Trek movies. The concepts of molecule-sized microprocessors (Star Trek I), planet forming (Star Trek II), and biological communication (Star Trek IV) are “big picture” ideas that keep people dreaming. There is a scene in Star Trek...

ehughes

Paul Bruno

Sophi Kravitz

Valentin

Pierros Papadeas
