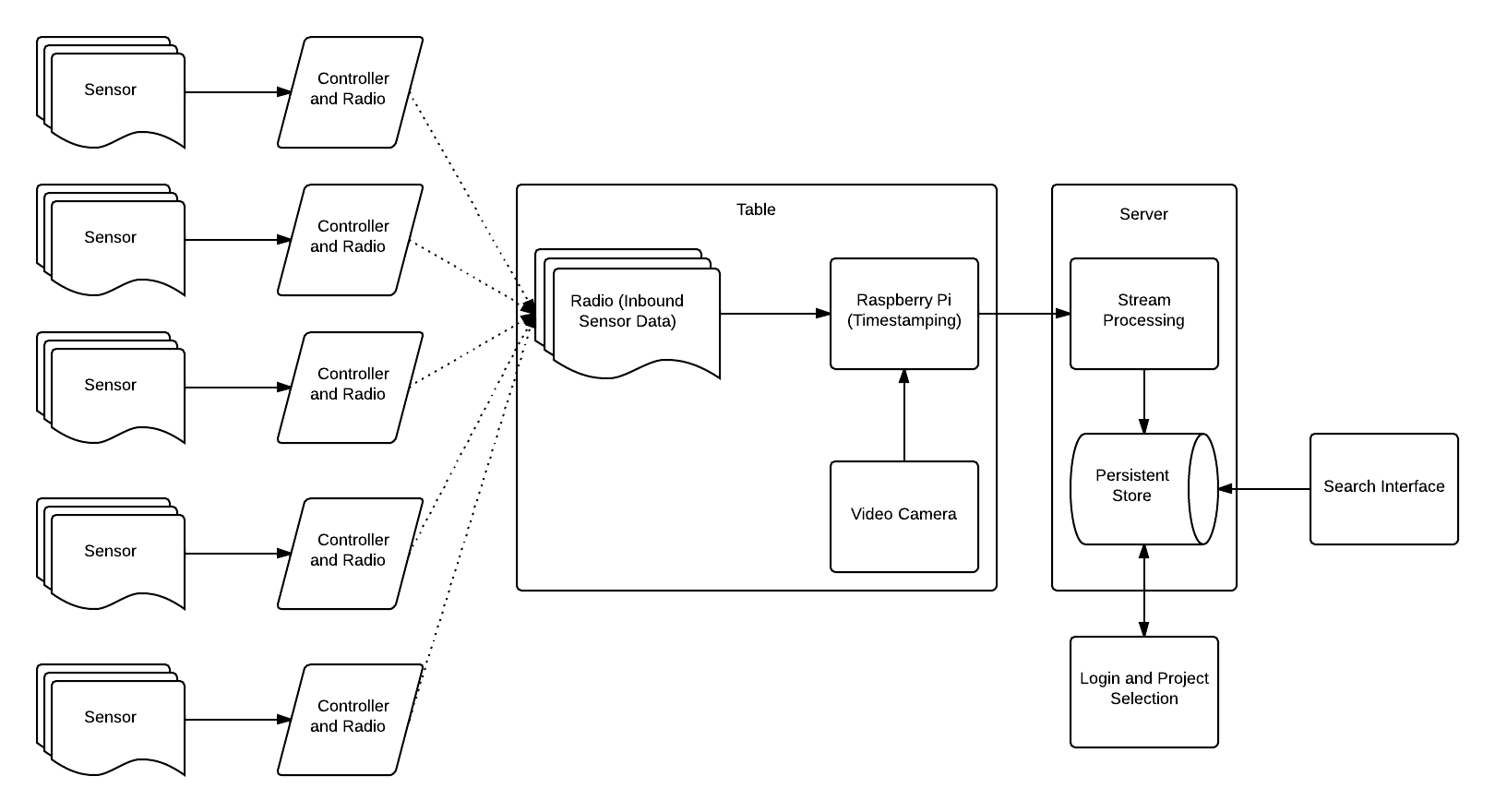

There are three main parts to the system: the sensors, the table, and the server.

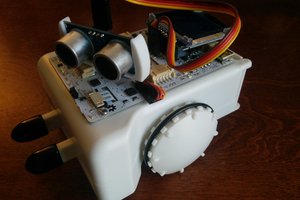

The current goal is to support 10 sensors, 5 motors, and a video feed with automatic detection of what moved. Hopefully that's enough for most mechatronic systems. All this data will feed into the table. Raw sensor data will be transmitted over a simple radio link, while the video feed will most likely use a wired connection.

A Raspberry Pi controls the receiver radios, timestamps the sensor data, and sends it off to the server over WiFi. Timestamping at the table is important for three reasons: the data will not all arrive at the same time, a sensor might drop out for an unpredictable length of time, and the server has no way of knowing when each reading actually arrived at the table.
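A minimal sketch of the Pi-side timestamping step, assuming each radio packet decodes to a numeric sensor ID and a single float value. The names `TimestampedReading`, `stamp`, `to_wire`, and `find_gaps`, the use of JSON for the upload, and the gap threshold are all hypothetical illustrations, not the project's actual code. The key idea is that the receive time is recorded on the Pi, so the server can reorder readings and spot sensor dropouts no matter when the batch reaches it.

```python
import json
import time
from dataclasses import dataclass, asdict


@dataclass
class TimestampedReading:
    sensor_id: int      # hypothetical ID, e.g. 0-9 for the ten radio sensors
    value: float        # raw reading as decoded from the radio packet
    received_at: float  # Unix time (seconds) when the Pi received the packet


def stamp(sensor_id: int, value: float, clock=time.time) -> TimestampedReading:
    """Attach the Pi's receive time to a raw radio reading."""
    return TimestampedReading(sensor_id, value, clock())


def to_wire(readings: list[TimestampedReading]) -> str:
    """Serialize a batch for upload to the server (JSON is an assumption)."""
    return json.dumps([asdict(r) for r in readings])


def find_gaps(readings, sensor_id, max_interval):
    """Return (start, end) pairs where one sensor was silent longer than max_interval."""
    times = sorted(r.received_at for r in readings if r.sensor_id == sensor_id)
    return [(a, b) for a, b in zip(times, times[1:]) if b - a > max_interval]
```

Passing `clock` as a parameter keeps the stamping testable with a fake clock. On the real device it defaults to `time.time`, which assumes the Pi's clock is reasonably synchronized (for example via NTP).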

The server will act as both the indexer and the primary data store.
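One way to picture the server's two roles is a store that keeps every reading and maintains a per-sensor index sorted by the table's timestamps. The sketch below is a hypothetical in-memory illustration (`ReadingStore`, `insert`, and `query` are made-up names). A real deployment would more likely use a database with an index on `(sensor_id, timestamp)`. Because uploads can arrive out of order, each insert goes to its sorted position, and time-range queries use binary search.

```python
import bisect
from collections import defaultdict


class ReadingStore:
    """Hypothetical in-memory store: holds every reading (data store) and
    keeps each sensor's readings sorted by timestamp (index)."""

    def __init__(self):
        self._times = defaultdict(list)   # sensor_id -> sorted timestamps
        self._values = defaultdict(list)  # sensor_id -> values, parallel to _times

    def insert(self, sensor_id, timestamp, value):
        # Readings may arrive out of order, so insert at the sorted position.
        times = self._times[sensor_id]
        i = bisect.bisect_right(times, timestamp)
        times.insert(i, timestamp)
        self._values[sensor_id].insert(i, value)

    def query(self, sensor_id, start, end):
        """Return (timestamp, value) pairs with start <= timestamp < end."""
        times = self._times[sensor_id]
        lo = bisect.bisect_left(times, start)
        hi = bisect.bisect_left(times, end)
        return list(zip(times[lo:hi], self._values[sensor_id][lo:hi]))
```

Sorting by the Pi-assigned timestamp rather than by arrival order is what lets the server reconstruct the true sequence of events even after a delayed or out-of-order upload.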

Immediately below is a system diagram of how the various pieces communicate.

minifig404

sparks.ron

hornig

Alex

brnd4n