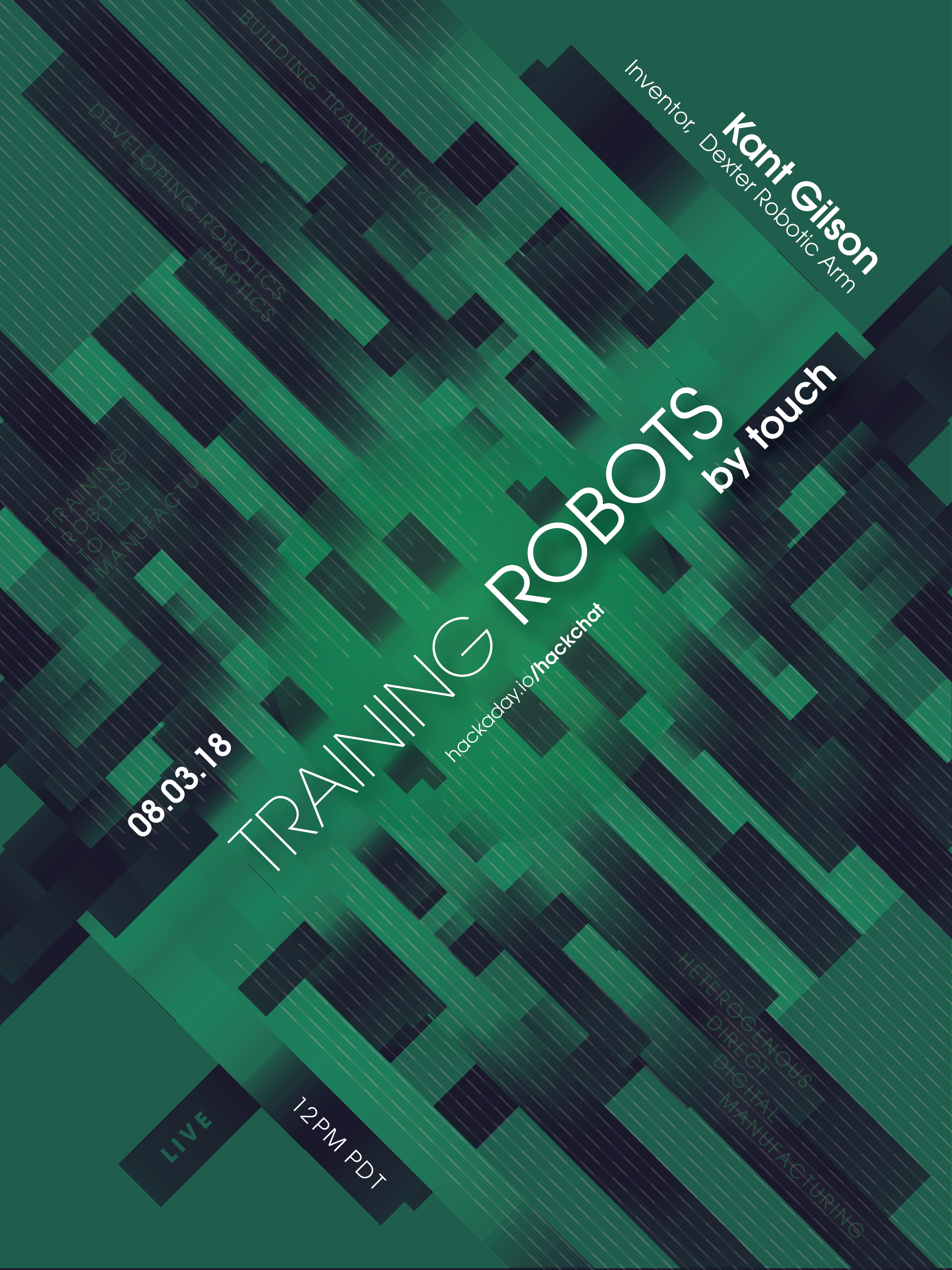

Kent Gilson will be hosting the Hack Chat on Friday, August 3rd, 2018 at noon PDT.

Time zones got you down? Here's a handy time converter!

Kent Gilson is a prolific inventor, serial entrepreneur and pioneer in reconfigurable computing (RC).

Since programming his first computer game at age 12, Kent has launched eight entrepreneurial ventures, won multiple awards for his innovations, and created products and applications used in numerous industries across the globe. His reconfigurable recording studio, Digital Wings, won Best of Show at the National Association of Music Merchants and received the Xilinx Best Consumer Reconfigurable Computing Product Award.

Kent is also the creator of Viva, an object-oriented programming language and operating environment that, for the first time, harnessed the power of field-programmable gate arrays (FPGAs) for general-purpose supercomputing.

In this chat Kent will be answering questions about:

- Building trainable robots.

- Developing robotics haptics.

- Training robots to manufacture.

- Heterogeneous direct digital manufacturing.

Learn more about Dexter in the video below!

Okay! Let's get started. Welcome to Hack Chat

Hi Kent. First of all great work on the dextra hand!

We have been working on our own and are currently in a sensor predicament; we even made our own sensor in this project: https://hackaday.io/project/159728-pulse-sensor-to-actuate-a-robotic-hand. We would love your opinion on the matter, or if you could recommend an off-the-shelf component better than the one we have made.