To understand the basics of how a Perceptoscope works, it's first important to recognize that there are two primary functional components when it comes to augmented and virtual reality devices beyond the computer that powers them: optics and tracking.

In many ways, the optics are the most obvious bit people notice when they put on a headset. VR researchers came to find that magnifying a small screen could provide a wide field of view to the end user. By having the screen render two side-by-side images, each magnified by a lens in front of one eye, the modern VR headset was born.

However, tracking is really the unsung hero of what makes modern VR and AR possible, and the ways in which a particular headset does its tracking are critical to its effectiveness.

Early modern HMDs like the Rift DK1 relied solely on accelerometers and gyros to track the movement of the headset through space. This provided a decent understanding of a headset's rotation around a fixed point, but could not really track a person as they leaned forward or backward. Current holdouts still using this approach are mobile-phone-based devices like Google Cardboard and Gear VR.
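To make the rotation-only idea concrete, here is a minimal sketch of how an angle can be estimated from gyro readings by integrating angular rate over time. This is not the DK1's actual tracking code; the function name `integrate_gyro`, the sample rate, and the bias value are all assumptions made up for illustration. It also shows why such an estimate is relative rather than absolute: any small constant error in the readings accumulates.

```python
def integrate_gyro(rates_dps, dt, bias_dps=0.0):
    """Estimate an angle (degrees) by summing angular-rate samples.

    rates_dps: true angular rates in degrees per second (hypothetical data)
    dt:        time between samples in seconds
    bias_dps:  constant sensor error added to every reading (assumed)
    """
    angle = 0.0
    for rate in rates_dps:
        # Each sample contributes (rate * dt) degrees of rotation.
        angle += (rate + bias_dps) * dt
    return angle


# One second of turning at 90 degrees per second, sampled at 100 Hz.
samples = [90.0] * 100
ideal = integrate_gyro(samples, dt=0.01)
drifted = integrate_gyro(samples, dt=0.01, bias_dps=0.5)
print(ideal, drifted)
```

Note that nothing in this calculation says where the headset is in the room, only how far it has turned, which is why leaning forward or backward goes untracked.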

The next generation of devices combined those standard motion sensors with computer vision techniques to track positional movement of the headset within a volume of space. The Rift DK2 uses infrared LEDs on the headset and an IR camera attached to your computer, similar in concept to a WiiMote in reverse. Headsets like the HTC Vive use an array of photosensors on the HMD itself, which take note of how synchronized lasers sweep across them from emitters called "lighthouses" mounted throughout a space.

Perceptoscopes take an entirely different approach to tracking, one based on a human sense known as "proprioception". Essentially, proprioception is the inner image we all have of our bodies' orientation through touch. It's the way we can stumble through a bedroom in the dark, or touch a finger to our nose with our eyes closed.

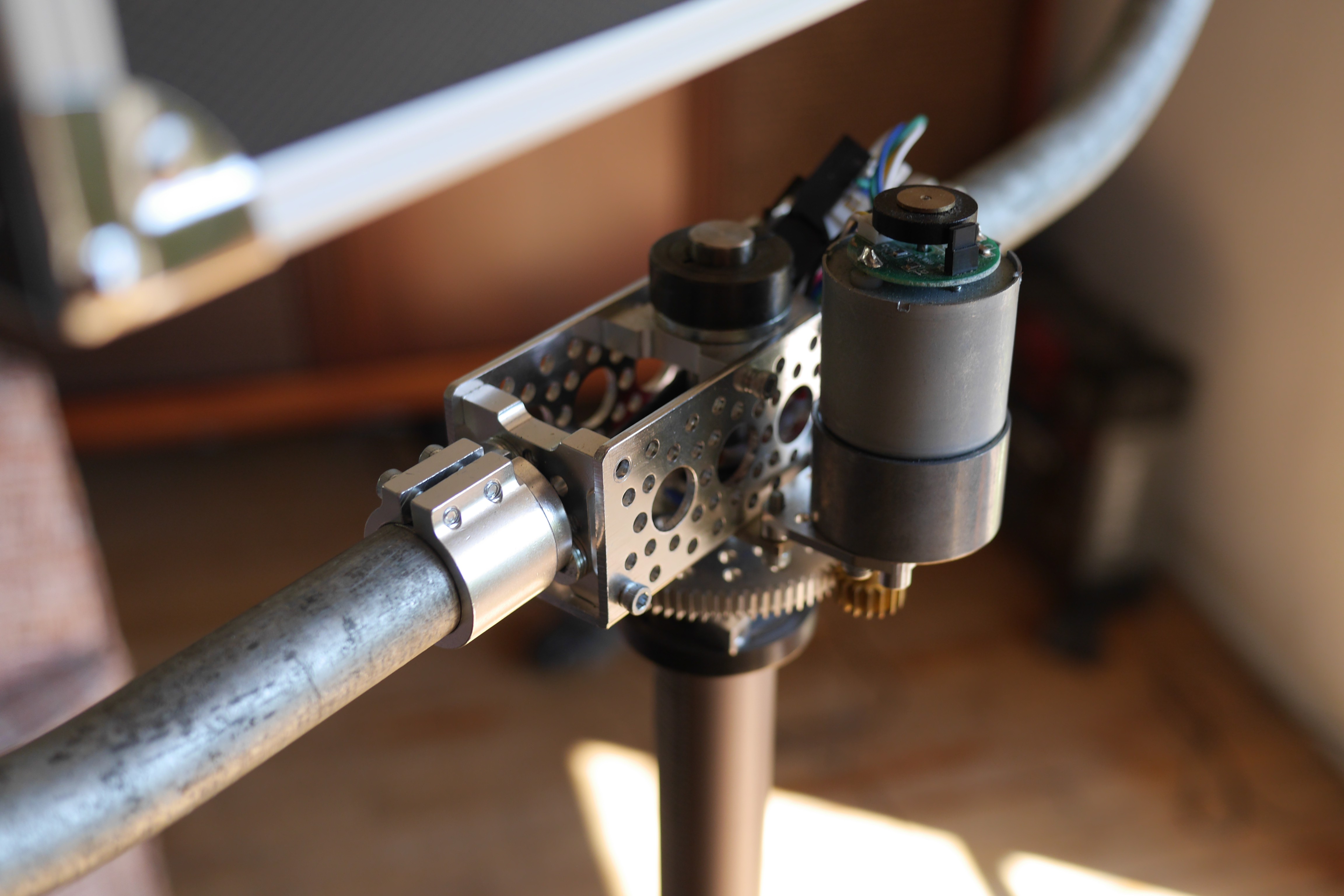

Perceptoscopes are not a wearable extending the human body, but a physical object present in the space. This fixed and embodied form factor, with a limited range of motion along two axes (pitch and yaw), allows us to use rotary encoders geared to each axis to record the precise angle at which a scope is pointing.
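As a rough sketch of the idea, the conversion from encoder counts to a pointing direction might look like the following. The counts-per-revolution figure, the gear ratio, and the function names are hypothetical placeholders, not the actual Perceptoscope hardware values or firmware.

```python
import math


def counts_to_degrees(counts, counts_per_rev, gear_ratio=1.0):
    """Convert a raw encoder count to an axis angle in degrees.

    counts_per_rev: encoder counts per full encoder turn (assumed value)
    gear_ratio:     encoder turns per one turn of the axis (assumed value)
    """
    encoder_revs = counts / counts_per_rev
    return encoder_revs / gear_ratio * 360.0


def view_direction(yaw_deg, pitch_deg):
    """Unit vector for a yaw/pitch pair (y up, z forward at yaw = 0)."""
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    x = math.cos(pitch) * math.sin(yaw)
    y = math.sin(pitch)
    z = math.cos(pitch) * math.cos(yaw)
    return (x, y, z)


# Hypothetical hardware: 4096 counts per encoder turn, geared 4:1 to each axis.
yaw = counts_to_degrees(4096, counts_per_rev=4096, gear_ratio=4.0)
pitch = counts_to_degrees(0, counts_per_rev=4096, gear_ratio=4.0)
print(yaw, pitch, view_direction(yaw, pitch))
```

Because each reading maps directly to an angle, there is no accumulated history to drift: the same count always means the same direction.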

So while traditional HMD tracking techniques can be thought of as an analog to the inner ear and eyes working in combination, Perceptoscope's sense of orientation is more like how dancers understand their bodies' shape and position in space.

We've since gone on to incorporate other sensor fusion and computer vision techniques in addition to this proprioceptive robotic sense, but this core approach significantly reduces computational overhead while simultaneously providing an absolute (rather than predictive) understanding of a Perceptoscope's view.

As for what makes our optics special, that's for another post entirely.
