Introduction and aims

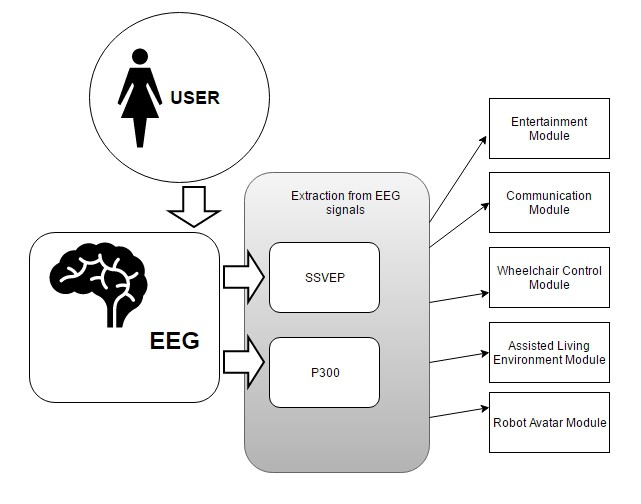

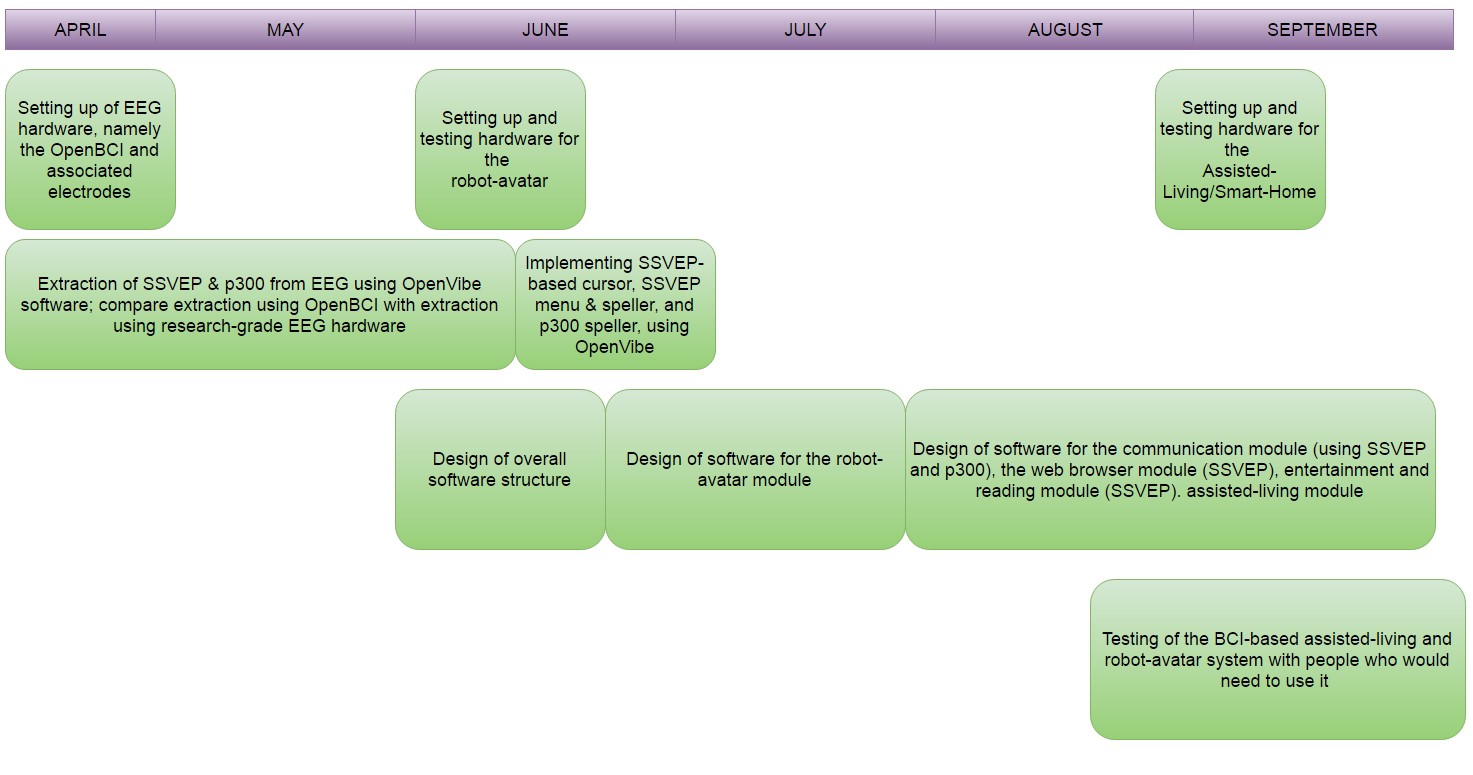

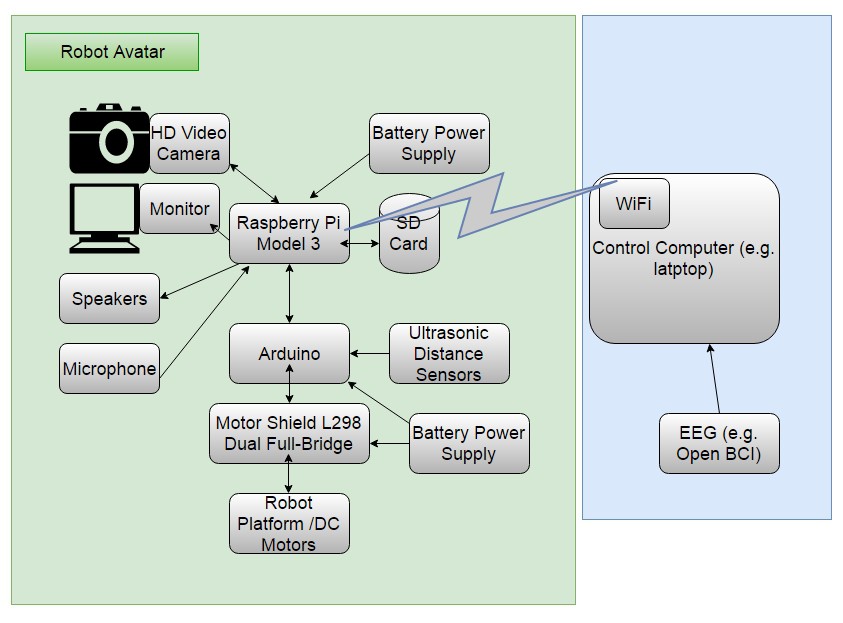

The aim here is to replace the traditional mouse/keyboard interface with something a little more direct, since locked-in people can't use their limbs and, in some cases, can't even move their eyes significantly (which rules out eye-tracking). We will use an EEG device, initially the OpenBCI, to acquire signals from the surface of the scalp. These signals will undergo amplification, analogue-to-digital conversion (ADC), and software-based pre-processing and signal analysis in order to extract two signals that are widely used in the field of brain-computer interfacing: P300 and SSVEP (steady-state visually evoked potential).
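As a rough illustration of the software analysis stage, here is a minimal sketch of one common way to spot an SSVEP: measure the signal power at each candidate flicker frequency and pick the strongest. It is not this project's actual pipeline. The function names (`power_at`, `detect_ssvep`), the 250 Hz sampling rate, the candidate frequencies, and the synthetic test signal are all assumptions made for the example.

```python
import math
import random


def power_at(signal, fs, freq):
    """Power of `signal` at `freq` Hz, computed as a single-bin DFT
    (correlation with a cosine and a sine at that frequency)."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal) ** 2


def detect_ssvep(signal, fs, candidate_freqs):
    """Return the candidate frequency with the most power, plus all powers."""
    powers = {f: power_at(signal, fs, f) for f in candidate_freqs}
    return max(powers, key=powers.get), powers


# Synthetic demo (assumed values): 2 s of noisy "EEG" in which the user is
# looking at a target flickering at 12 Hz.
fs = 250                                  # assumed sampling rate in Hz
candidates = [8.0, 10.0, 12.0, 15.0]      # hypothetical flicker frequencies
rng = random.Random(0)                    # fixed seed so the demo is repeatable
signal = [
    math.sin(2 * math.pi * 12.0 * n / fs) + rng.gauss(0.0, 1.0)
    for n in range(fs * 2)
]

target, powers = detect_ssvep(signal, fs, candidates)
print("Most likely attended frequency:", target)
```

In a real system each candidate frequency would correspond to one on-screen target (e.g. a cursor direction), and the raw signal would first be band-pass filtered and cleaned of artefacts.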

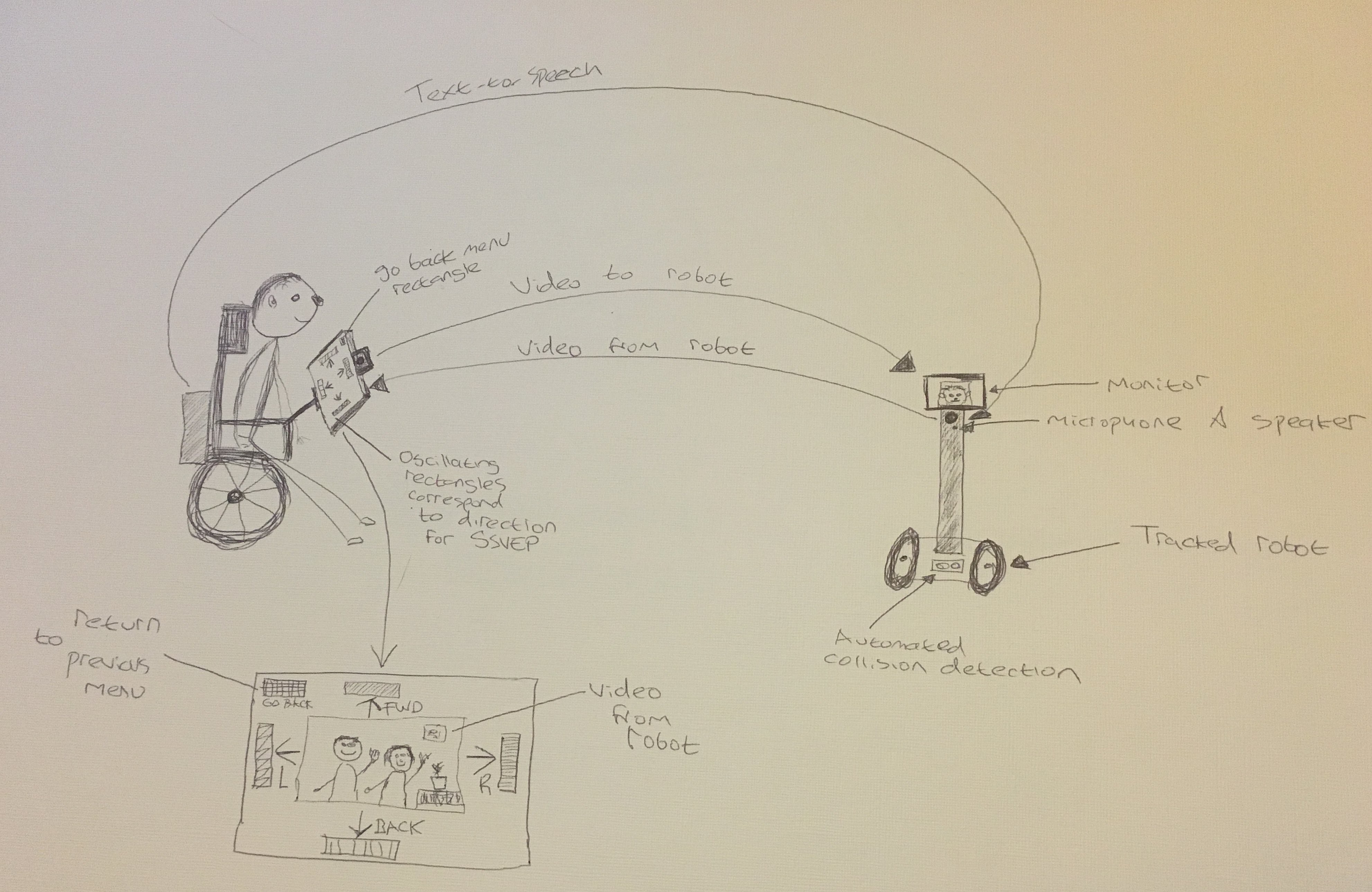

These signals will be used to control cursors (SSVEP), select characters for text input (SSVEP and P300), and select from image-based menus (P300). You can see examples of these in other people's projects by looking at this project log. These input functions, in place of the traditional mouse/keyboard, will allow the user to:

- communicate via text-to-speech (both locally and remotely via a robot avatar)

- control a web browser

- read books

- select and control video and audio playback

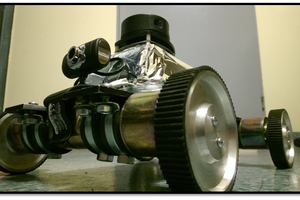

- control motor functions of a wheelchair

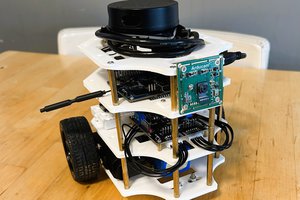

- control a robot avatar

- control a smart-home (e.g. lights on/off, temperature control)

All of this has been accomplished within academia, but our aim is to accomplish it with a low-cost, extensible, open-source system (<£1500) that the people affected can actually afford to make or buy!

Locked-in Syndrome

Locked-in syndrome is caused by damage to specific portions of the lower brain and brainstem. "Locked-in syndrome was first defined in 1966 as quadriplegia, lower cranial nerve paralysis, and mutism with preservation of consciousness, vertical gaze, and upper eyelid movement. It was redefined in 1986 as quadriplegia and anarthria with preservation of consciousness."

Neuroscience involved: EEG, ERPs, P300 and SSVEP

What is an ERP?

Event-related potentials (ERPs) are voltage fluctuations in the ongoing EEG (electroencephalogram) that are time-locked to an event (e.g. the onset of a stimulus). The ERP manifests on the scalp as a waveform comprising a series of positive and negative peaks that vary in amplitude and polarity over time. ERP researchers often assume that a peak represents an underlying ERP component, but this is not actually the case: it is the underlying components, not the peaks themselves, that reflect neural processes.

The scalp voltage fluctuations recorded by EEG reflect the summation of postsynaptic potentials (PSPs) occurring in cortical pyramidal cells within the brain. The PSPs themselves result from changes in electrical potential when ion channels on the postsynaptic cell membrane open or close, allowing ions to flow into or out of the cell. If a PSP occurs at one end of a cortical pyramidal neuron, the neuron becomes an electrical dipole, with one end positive and the other negative. If PSPs occur in many neurons whose dipoles all point in the same direction, the dipoles sum to form one large dipole, called an equivalent current dipole. Thousands of neurons are required to produce such a summed dipole, which is then large enough to detect on the scalp surface! This is most likely to occur in the cerebral cortex, where groups of pyramidal cells are lined up together perpendicular to the cortical surface.

The distribution of positive and negative voltages manifested on the scalp for a given equivalent current dipole will be determined by the location and orientation of the dipole in the brain. So it’s important to note that each dipole will produce both positive and negative voltages on the scalp. (Oxford Handbook of ERPs, Luck & Kappenman, 2013)
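Because an ERP is time-locked to its event while the rest of the ongoing EEG is not, a standard way to make it visible is to cut a window (an "epoch") around each event and average the epochs, so that unrelated activity tends to cancel out. The sketch below illustrates that idea on synthetic data. The function names (`extract_epochs`, `average_epochs`), the 250 Hz sampling rate, the noise level, and the shape of the injected deflection are all assumptions made for the example, not measurements from this project.

```python
import math
import random


def extract_epochs(eeg, event_samples, pre, post):
    """Cut a window from `pre` samples before to `post` samples after each
    event, skipping events too close to the edges of the recording."""
    return [
        eeg[e - pre:e + post]
        for e in event_samples
        if e - pre >= 0 and e + post <= len(eeg)
    ]


def average_epochs(epochs):
    """Point-by-point mean across epochs: the averaged ERP waveform."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


# Synthetic demo (assumed values): 60 s of random "EEG" with one event per second.
fs = 250                                         # assumed sampling rate in Hz
rng = random.Random(1)                           # fixed seed for repeatability
eeg = [rng.gauss(0.0, 5.0) for _ in range(fs * 60)]
events = list(range(fs, fs * 59, fs))            # event onsets, in samples

# Inject a hypothetical positive deflection peaking 300 ms after each event.
peak_offset = int(0.3 * fs)
for e in events:
    for k in range(-25, 26):
        eeg[e + peak_offset + k] += 10.0 * math.exp(-((k / 10.0) ** 2))

pre, post = 50, 200                              # 200 ms before, 800 ms after
epochs = extract_epochs(eeg, events, pre, post)
erp = average_epochs(epochs)

# Latency of the largest value in the averaged waveform, relative to event onset.
peak_index = max(range(len(erp)), key=erp.__getitem__)
peak_latency_ms = (peak_index - pre) * 1000 / fs
print("Epochs averaged:", len(epochs))
print("Peak latency (ms):", peak_latency_ms)
```

In a single epoch the injected deflection is hard to pick out from the noise; after averaging, the noise shrinks roughly with the square root of the number of epochs while the time-locked deflection stays the same size.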

What is P300?

P300 is an event-related potential (ERP) that is elicited within the framework of the oddball paradigm. In the oddball paradigm, a participant is presented with a sequence of events that can be classified into one of two categories. Events in one of the categories are rarely presented to the participant, whilst events in...

Neil K. Sheridan

Open FURBY

igorfonseca83

TripleL Robotics

Hi!

Wonderful project!

Did you make some progress since, for example connecting the BCI to a wheelchair or a robotic arm?