At this point, we're getting great images from our device, and multiple retina specialists agree that they can see the features they need to make a diagnosis. But clinical experts may not always be available to give their opinion on the large number of patients seen during field testing or a mass screening program. We want our device to have built-in automated algorithms that can "grade" each image by the severity of the condition (diabetic retinopathy).

Our starting point was to study the solutions posted by the winners of the 2015 Kaggle competition on diabetic retinopathy. The winners used convolutional neural networks (CNNs), which give excellent results on image recognition problems.

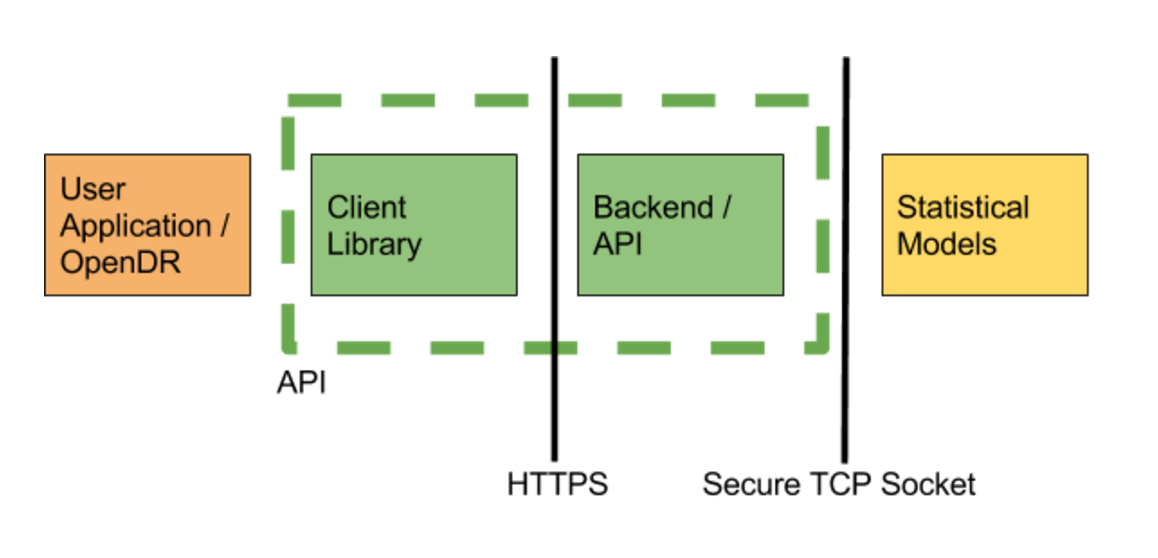

We implemented versions of some of the winning networks from the Kaggle challenge in Torch and trained them on a rack-based training machine with two Nvidia Titan X GPUs (24 GB of VRAM in total), 64 GB of RAM, and a 24-core CPU (similar to machines available from Amazon Web Services). Training took about 2 days. After training, we converted the model so that it can run on either CPU- or GPU-based machines.

Running the service requires far less computing power. Our service currently runs on a desktop machine with 16 GB of RAM, 8 cores, and an Nvidia GTX 760 graphics card (2 GB of VRAM), which comfortably handles 12 image requests per second. For context, if everyone in the world (about 7 billion people) were scanned once a year, we would need only about 20 prediction machines, or roughly $20k (plus supporting infrastructure).

Finally, to complete the service, we built an API to serve image requests. The service consists of the predictor, written in Torch, and a web server, written with the Python Flask framework. The server receives images, verifies the files, and sends a pointer to the image data over a TCP socket to the predictor, which responds with the predicted grade. This configuration allows us to run the predictors and the web server on different machines to scale up, although our service currently runs on a single desktop. In the future, our CPU-based models could run on embedded ARM hardware such as a Raspberry Pi.
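The figure of 20 machines for scanning the whole world once a year can be checked with some quick arithmetic. The sketch below assumes that scans are spread perfectly evenly over the year and that each machine sustains the 12 images per second quoted above; it ignores peak hours and redundancy.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def average_rate(scans_per_year: float) -> float:
    """Average number of images per second if scans are spread evenly over a year."""
    return scans_per_year / SECONDS_PER_YEAR


def machines_needed(scans_per_year: float, images_per_second_per_machine: float) -> int:
    """Smallest number of prediction machines that can sustain the average rate."""
    return math.ceil(average_rate(scans_per_year) / images_per_second_per_machine)


rate = average_rate(7e9)
print(f"{rate:.0f} images/s on average")  # prints "222 images/s on average"
print(machines_needed(7e9, 12))           # prints 19
```

The bare minimum works out to 19 machines, so 20 leaves a small margin. A real deployment would need extra headroom for daily peaks and hardware failures. At $20k for 20 machines, the estimate implies roughly $1,000 per machine.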

To interact with the web service, we created client libraries in JavaScript and Python. A user creates a profile and receives an API key from our web server. Using this key, the client sends the processed JPEG image as a multipart form. The server processes the image and returns a JSON object. The grade is returned as a float between 0 and 4, with the following interpretation:

0 - No Diabetic Retinopathy (DR)

1 - Mild

2 - Moderate

3 - Severe

4 - Proliferative DR

The OWL client receives this data and converts it into a simple UI object that a field worker or layperson can easily interpret.
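A minimal Python sketch of what a client call might look like is shown here. It is not the project's client library: the endpoint URL, the form-field names (`api_key`, `image`), and the `grade` key in the JSON response are hypothetical placeholders. Rounding the continuous grade half up to the nearest class is also an assumption; the service may use different cut-offs. The sketch uses only the standard library to build the multipart form, send it, and map the returned float onto the 0 to 4 scale.

```python
import json
import os
import urllib.request
import uuid

# Labels for the 0-4 grading scale, indexed by integer grade.
DR_LABELS = ["No Diabetic Retinopathy (DR)", "Mild", "Moderate", "Severe", "Proliferative DR"]


def build_multipart(fields, file_field, filename, data):
    """Encode text fields plus one JPEG file as a multipart/form-data body."""
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append((f"--{boundary}\r\n"
                      f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
                      f"{value}\r\n").encode())
    parts.append((f"--{boundary}\r\n"
                  f'Content-Disposition: form-data; name="{file_field}"; filename="{filename}"\r\n'
                  "Content-Type: image/jpeg\r\n\r\n").encode() + data + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode())
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


def make_request(url, api_key, filename, jpeg_bytes):
    """Build a POST request; "api_key" and "image" are hypothetical field names."""
    body, content_type = build_multipart({"api_key": api_key}, "image", filename, jpeg_bytes)
    return urllib.request.Request(url, data=body,
                                  headers={"Content-Type": content_type}, method="POST")


def grade_to_label(grade):
    """Clamp the continuous grade to [0, 4], round half up, and return its label."""
    clamped = min(max(float(grade), 0.0), 4.0)
    return DR_LABELS[int(clamped + 0.5)]


def grade_image(url, api_key, path):
    """Upload one JPEG and return the raw grade plus a human-readable label."""
    with open(path, "rb") as f:
        request = make_request(url, api_key, os.path.basename(path), f.read())
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    grade = float(result["grade"])  # "grade" is a hypothetical response key
    return {"grade": grade, "label": grade_to_label(grade)}
```

Keeping the raw float alongside the label lets a client show a simple category to a field worker while still passing the continuous score on to a specialist. With this rounding rule, a returned grade of 2.3 would be shown as "Moderate".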

A live demo of the grading interface is available at https://theia.media.mit.edu/, and the code is continuously updated at https://github.com/OpenEye-Dev/theiaDR. The algorithm was trained on several thousand images sourced from partner hospitals around the world, including the LV Prasad Eye Institute in Hyderabad, India.

Dhruv Joshi