To interact with the environment, I'll be using fiducial markers. The markers will tell the robot (1) what an object is and (2) where it is relative to the robot.

Point 2 is very important. The SLAM system is only accurate to about 10 cm, which gives us a general idea of where we are on the map but is not enough for grasping. To grasp objects, we need line of sight to the fiducial marker throughout the grasping process.
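To make "where it is relative to the robot" concrete: a tag detector gives the tag's pose in the camera frame, and that has to be chained with the camera's mounting pose to get the tag's pose relative to the robot base. Below is a minimal sketch of that chaining with 4x4 homogeneous transforms. The mounting offsets, the detection, and the helper names `yaw_pose` and `compose` are all hypothetical, and camera optical-frame conventions are ignored; on a real ROS robot this bookkeeping is normally handled by tf.

```python
import math
from typing import List

# 4x4 homogeneous transform: rotation in the upper-left 3x3, translation in column 3.
Mat4 = List[List[float]]


def yaw_pose(yaw: float, x: float, y: float, z: float) -> Mat4:
    """Hypothetical helper: rotation about z by `yaw` radians plus a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a: Mat4, b: Mat4) -> Mat4:
    """Matrix product a @ b: express frame b's pose inside frame a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


# Hypothetical mounting: camera 0.1 m ahead of and 0.3 m above the base, yawed 90 degrees.
base_T_camera = yaw_pose(math.pi / 2, 0.1, 0.0, 0.3)
# Hypothetical detection: tag 0.5 m along the camera's x axis.
camera_T_tag = yaw_pose(0.0, 0.5, 0.0, 0.0)

# Chain the transforms: base <- camera <- tag.
base_T_tag = compose(base_T_camera, camera_T_tag)
tag_xyz = [row[3] for row in base_T_tag[:3]]  # roughly (0.1, 0.5, 0.3)
```

Because the camera is yawed 90 degrees, a tag straight ahead of the camera ends up to the side of the base. This is also why line of sight matters: if the tag drops out of view, `camera_T_tag` stops updating and the grasp has only the coarse SLAM estimate to go on.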

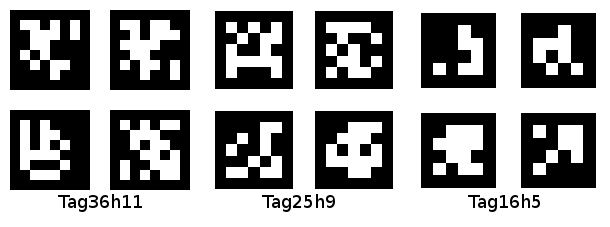

The particular type of fiducial marker I'm using is called an AprilTag.

Why are they called AprilTags? No idea, might be a person's name.

I had intended to write a full GPU implementation of AprilTags, but I don't think I'll have enough time before the Hackaday Prize deadline, so instead I'll use an existing library that runs on the CPU.

There are a bunch of AR and other fiducial marker nodes for ROS, but strangely none of them seem to support nodelets.

For those not familiar with ROS: inter-node communication is a rather heavy process, especially for video. When a camera node sends images to the fiducial marker node, each frame is first encoded by image_transport, then serialized and sent over TCP, even if both nodes are on the same machine! Nodelets are a special kind of node that avoid this by running in the same process and passing messages by pointer; the kicker is that nodes and nodelets don't share the same API.
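A toy model of that difference, in Python purely for illustration: the `Image` class and both `send_*` functions are hypothetical stand-ins, not the real sensor_msgs/Image message or ROS transport code. The node-style path copies the pixel data into a wire buffer and back out again; the nodelet-style path just hands the subscriber a reference to the same object. The last function does the raw-bandwidth arithmetic, with the camera frame rate as an assumed input.

```python
import struct
from dataclasses import dataclass


@dataclass
class Image:
    """Hypothetical stand-in for an image message (not sensor_msgs/Image)."""
    width: int
    height: int
    data: bytes  # packed pixels, e.g. 3 bytes per pixel for bgr8


def send_between_nodes(msg: Image) -> Image:
    """Node-style hop: serialize to a byte buffer, then deserialize a copy.

    A real TCP hop would also push the buffer through a socket; this only
    models the serialize/deserialize round trip.
    """
    header = struct.pack("<II", msg.width, msg.height)
    wire = header + msg.data  # concatenation copies all pixel bytes
    width, height = struct.unpack_from("<II", wire, 0)
    return Image(width, height, wire[8:])  # slicing copies them again


def send_within_process(msg: Image) -> Image:
    """Nodelet-style hop: the subscriber receives the very same object."""
    return msg


def bytes_per_second(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Raw pixel bytes a node-style hop must serialize each second."""
    return width * height * bytes_per_pixel * fps
```

For example, `bytes_per_second(1920, 1080, 3, 30)` gives 186,624,000: at an assumed 30 fps, uncompressed 1080p bgr8 video means roughly 187 MB of pixel data per second to serialize for each subscriber, before any socket overhead.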

I think because ROS is used primarily for research, trivial stuff like this is easily neglected. You don't have to worry about CPU load when you have a PR2, after all : ]

Anyway, not having found a suitable nodelet implementation of AprilTags, I ported the code for an existing node into a nodelet container.

Check it out on GitHub: https://github.com/Jack000/apriltags_nodelet

I stress-tested it with some 1080p video on the TK1. At that resolution the node runs at 2 fps and the nodelet at 3 fps, while also showing a 1.1 decrease in CPU usage.

For actual use on the robot, I think I'll scale down the video so that detection runs at a reasonable framerate. Thankfully, the scaling can be offloaded to the GPU.
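A minimal sketch of the kind of downscaling meant here, assuming 8-bit grayscale input (what tag detectors typically work on) and a simple 2x2 box filter. It is a pure-Python CPU illustration of the idea, not the GPU path, and `downscale_2x` is a hypothetical helper. Halving each dimension of a 1080p frame leaves 960x540 pixels, a quarter of the original count.

```python
from typing import Tuple


def downscale_2x(gray: bytes, width: int, height: int) -> Tuple[bytes, int, int]:
    """Halve a row-major 8-bit grayscale image by averaging 2x2 blocks.

    An odd trailing row or column is dropped.
    """
    out_w, out_h = width // 2, height // 2
    out = bytearray(out_w * out_h)
    for y in range(out_h):
        row0 = 2 * y * width  # start of the top row of this block row
        row1 = row0 + width   # start of the bottom row
        for x in range(out_w):
            x2 = 2 * x
            total = gray[row0 + x2] + gray[row0 + x2 + 1] + gray[row1 + x2] + gray[row1 + x2 + 1]
            out[y * out_w + x] = total // 4  # integer mean of the 4 pixels
    return bytes(out), out_w, out_h
```

The trade-off is that each tag covers fewer pixels after scaling, so small or distant tags may stop being detected; the scale factor has to be chosen against the distances the robot actually grasps from.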

Jack Qiao