I was trying to go to sleep after a day of laying out VLSI (my day job) when this thought came into my head.

What is AI? It isn't some pre-programmed set of mimicry. What is a true robot? Does a true robot have any sort of human input? When I say true, and I know this is a debate for some (much like "Mac vs PC" or "Coke vs Pepsi"), I mean a robot should be like a mechanical animal: it has a base set of "instincts" and uses those to overcome obstacles or puzzles in its environment. It should see the world and learn using its sensors. It should try random solutions when no known solution exists, then, when one is found, save that solution to try first the next time a similar situation occurs.

This is how animals learn, and it is what I want to do with the OS of P.A.L.

PAL should be able to sense a stimulus and respond accordingly. Whether that stimulus is being stuck in a fenced area or hearing a spoken command, it should be able to respond in a way that makes sense. The initial OS will be a set of "instincts", or actions to try. These actions will not be tied to anything; they will just be that... actions, such as go forward, go backward, turn left, turn right, reach an arm out, reach the other arm out, reach both arms out, try different angles, yell for help, etc. There should also be a "baby duck" syndrome included, where PAL sees humans as caretakers, at least at first. PAL will also have a place to "eat", that is, a charging station. I have no intention of showing PAL where this station is; instead, I intend to have the instinct algorithms include a value that is "good", "indifferent", or "bad". Charging would be one of the good things, as would being content.
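To make the "try random actions, remember what worked" idea concrete, here is a rough sketch in Python. It is only an illustration, not PAL's actual OS: the action names, the `InstinctLearner` class, and the `works` callback (standing in for whatever PAL's sensors would report) are all made up for this example.

```python
import random

# Hypothetical instinct names, loosely based on the list above.
INSTINCTS = ["forward", "backward", "turn_left", "turn_right",
             "reach_arm", "yell_for_help"]


class InstinctLearner:
    """Tries a remembered action first, then falls back to random instincts."""

    def __init__(self, actions, rng=None):
        self.actions = list(actions)
        self.rng = rng or random.Random()
        self.known = {}  # situation label -> action that worked last time

    def solve(self, situation, works, max_tries=50):
        """Return the list of actions attempted, in order.

        `works(action)` is a stand-in for sensor feedback: it returns True
        when the action got PAL out of the situation.
        """
        attempts = []
        remembered = self.known.get(situation)
        if remembered is not None:
            attempts.append(remembered)
            if works(remembered):
                return attempts
        for _ in range(max_tries):
            action = self.rng.choice(self.actions)
            attempts.append(action)
            if works(action):
                self.known[situation] = action  # save it to try first next time
                return attempts
        return attempts  # nothing worked within max_tries


learner = InstinctLearner(INSTINCTS, random.Random(0))
escape = lambda action: action == "turn_left"  # pretend only this frees PAL
first = learner.solve("fenced_in", escape, max_tries=1000)
second = learner.solve("fenced_in", escape, max_tries=1000)
print(len(first), "attempts the first time,", len(second), "the second time")
```

The second call should go straight to the remembered action. A real version would need a way to decide that two situations are "similar", which this sketch dodges by using a plain label.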

This could also be used as a reward system for PAL when a question is asked and a web search performed. If the answer is correct, you can tell PAL "Good job" and the good value will rise, to the point where PAL likes answering questions. If an incorrect response is given, such as a wrong answer to a math problem, you can let PAL know that as well.
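One possible way to track that "good / indifferent / bad" value is a running score per behaviour that drifts toward whatever feedback PAL keeps getting. This is just a sketch under my own assumptions: the numeric mapping, the `rate` parameter, and the `Valence` class are invented for illustration, and the update is a simple exponential moving average rather than anything PAL is committed to.

```python
# Assumed numeric mapping for the three feedback labels.
FEEDBACK = {"good": 1.0, "indifferent": 0.0, "bad": -1.0}


class Valence:
    """Keeps a running 'how much do I like this' score per behaviour."""

    def __init__(self, rate=0.2):
        self.rate = rate   # how quickly new feedback shifts the score
        self.score = {}    # behaviour -> value between -1.0 and 1.0

    def feedback(self, behaviour, label):
        """Nudge the behaviour's score toward the feedback value."""
        old = self.score.get(behaviour, 0.0)
        target = FEEDBACK[label]
        self.score[behaviour] = old + self.rate * (target - old)
        return self.score[behaviour]

    def likes(self, behaviour):
        """True once the behaviour has a net positive score."""
        return self.score.get(behaviour, 0.0) > 0.0


pal = Valence()
pal.feedback("answer_question", "good")   # "Good job"
pal.feedback("answer_question", "good")   # "Good job" again
pal.feedback("wrong_math_answer", "bad")  # told the answer was incorrect
print(pal.likes("answer_question"), pal.likes("wrong_math_answer"))
```

Repeated "Good job" feedback pushes the score toward the top of the range, so behaviours that keep getting praised end up preferred, while a single correction only moves the score part of the way.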

In the end, I do not want PAL to be some glorified remote control toy. I want to create a true, even if simple, AI learning algorithm that tries things out and discovers on its own what is successful. I want PAL to learn from its mistakes.

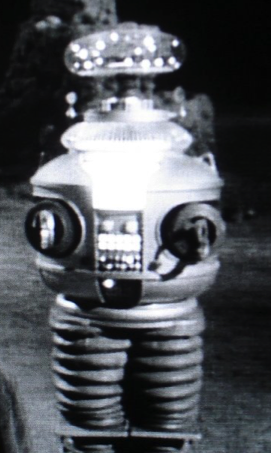

As a side thought, I am also going to include a panel on the front of PAL that contains an LED for every system, for each servo, etc. This will let me know with a visual inspection which systems are being activated and when. I know it is a bit old fashioned having a robot with a chest full of blinking lights, but I did grow up with the sci-fi of those eras.

Sorry for the late night rambling, but I wanted to get these thoughts onto paper before I slipped into a sleep and they were forgotten.

ThunderSqueak

Discussions

Something that might help the robot learn is a concept called "empowerment" - there's a bit of research out there on it, but it requires the robot to try actions a lot and learn like a baby (as well as a fair bit of storage and processing). The idea is that the base instinct for the robot is to try to control its surroundings. Therefore, it will pick actions that lead to the best possibility of control. Other instincts might further shape this, but the basic idea of wanting to affect the environment (and predict the effects) might be useful.

That is an interesting idea, and one that I wonder if PAL will learn on its own. It will certainly be capable :)
