Due to job and family obligations, I was only able to work on the project occasionally. However, I have finished the servo control via the Raspberry Pi:

For controlling the servos via the Raspberry Pi, I decided to use Richard Hirst's excellent ServoBlaster driver. After installation (requires Cython and Numpy), it creates a /dev/servoblaster device file in the Linux file system, which lets the user generate a PWM signal on one or several of the Raspberry Pi's GPIO pins. Controlling the servos is as easy as echoing the servo number and the desired pulse width to the driver:

```shell
echo 3=120 > /dev/servoblaster
```

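In the example above, a pulse width of 1.2 ms (120 steps of ServoBlaster's default 10 µs step size) is set on servo channel 3, which ServoBlaster maps to one of the GPIO pins.

Multithreading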
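From Python, sending the echo command shown above amounts to writing one line of text to the device file, which is the kind of call a servo-control class can be built on. The sketch below is a minimal illustration, not the author's ServoControl code: the helper names servoblaster_command and set_pulse are hypothetical, and it assumes ServoBlaster runs with its default step size of 10 µs.

```python
# Assumption: ServoBlaster uses its default step size of 10 µs,
# so a pulse width of 1200 µs is written as 120 steps.
STEP_US = 10


def servoblaster_command(servo, pulse_us, step_us=STEP_US):
    """Return the line ServoBlaster expects, e.g. 120 steps for 1200 µs."""
    steps = round(pulse_us / step_us)
    return f"{servo}={steps}\n"


def set_pulse(servo, pulse_us, device="/dev/servoblaster"):
    """Hypothetical helper: Python equivalent of `echo 3=120 > /dev/servoblaster`."""
    with open(device, "w") as dev:
        dev.write(servoblaster_command(servo, pulse_us))


if __name__ == "__main__":
    # Only builds the command; set_pulse() needs the driver to be running.
    print(repr(servoblaster_command(3, 1200)))  # '3=120\n'
```

Working in microseconds and converting to driver steps in one place keeps the rest of the code independent of the step size the driver was started with.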

Based on the device driver, I wrote a Python class (see 'servo_control/ServoControl' on GitHub) to control the servos from within my software framework. Because the Raspberry Pi 2 has four CPU cores and face detection is computationally quite demanding, I implemented the class to run as a separate process using Python's multiprocessing module. Running different tasks (e.g. face detection and servo control) in separate processes lets the operating system schedule them on different cores, so the multiple cores of the Raspberry Pi 2 are used efficiently.

To exchange data between the processes, I decided to use Sturla Molden's sharedmem-numpy library. Python's multiprocessing module can share memory between processes using either Value or Array objects. However, as I would like to pass camera images stored as Numpy arrays between the processes later on, sharedmem-numpy currently seems to be the way to go. Although its author states that the library is not functional on Linux and Mac, it seems to work quite well on the Raspberry Pi running Raspbian Jessie. I did, however, have to write a small patch to make it work (it can be found in the project's GitHub repo).
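The setup described above relies on sharedmem-numpy plus a patch. As a point of comparison, the sketch below swaps in a different technique: the standard-library multiprocessing.shared_memory module (Python 3.8+). It shows the same idea of placing a Numpy frame in shared memory and letting a separate worker process attach to it by name without copying the pixels. The frame size, the brightest-pixel "detector" standing in for face detection, and the function names are hypothetical; the fork start method is assumed, as on Raspbian/Linux.

```python
import multiprocessing as mp
from multiprocessing.shared_memory import SharedMemory

import numpy as np

# Hypothetical frame geometry; a real frame would come from the camera.
FRAME_SHAPE = (240, 320)
FRAME_DTYPE = np.uint8


def detector(shm_name, results):
    """Worker process: attach to the shared frame by name and analyse it."""
    shm = SharedMemory(name=shm_name)
    frame = np.ndarray(FRAME_SHAPE, dtype=FRAME_DTYPE, buffer=shm.buf)
    # Placeholder for face detection: locate the brightest pixel.
    row, col = np.unravel_index(np.argmax(frame), FRAME_SHAPE)
    results.put((int(row), int(col)))
    del frame  # drop the view on the buffer before closing the block
    shm.close()


def run_once():
    ctx = mp.get_context("fork")  # assumption: Linux, as on Raspbian
    nbytes = int(np.prod(FRAME_SHAPE)) * np.dtype(FRAME_DTYPE).itemsize
    shm = SharedMemory(create=True, size=nbytes)
    frame = np.ndarray(FRAME_SHAPE, dtype=FRAME_DTYPE, buffer=shm.buf)
    frame[:] = 0
    frame[100, 200] = 255  # synthetic "face" so the result is predictable

    results = ctx.Queue()
    worker = ctx.Process(target=detector, args=(shm.name, results))
    worker.start()
    position = results.get(timeout=10)
    worker.join()

    del frame
    shm.close()
    shm.unlink()  # the creating process frees the shared block
    return position


if __name__ == "__main__":
    print(run_once())  # (100, 200)
```

Only the block's name and the small result tuple travel through the queue; the frame itself stays in shared memory, which is what makes this pattern attractive for camera images.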

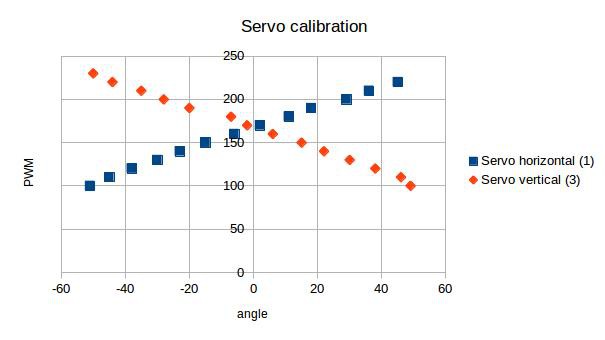

Servo calibration

To set the servo motors to a specific angle, the angle has to be remapped to a corresponding pulse width. By measuring the angle of the horizontal and vertical lamellae at different pulse widths with a triangle ruler, I found that the transfer function is almost linear.

Hence, the transfer function for remapping a servo motor angle α to a pulse width p can be estimated by fitting a simple linear regression to the dataset:

$$p = m\,\alpha + b$$

where m is the slope and b is the y-intercept. I used LibreOffice Calc for the fit (see calibration.ods in the GitHub repo).
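The same fit can be done in a few lines of Python with the closed-form ordinary least-squares formulas. This is a sketch: the calibration numbers below are made up for illustration and are not the measurements in calibration.ods, and fit_line and angle_to_pulse are hypothetical names.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = m*x + b; returns (m, b)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


# Hypothetical calibration data (NOT the author's measurements):
# lamella angle in degrees vs. pulse width in ServoBlaster steps.
angles = [-40.0, -20.0, 0.0, 20.0, 40.0]
pulses = [110, 130, 150, 170, 190]

m, b = fit_line(angles, pulses)


def angle_to_pulse(alpha):
    """Remap an angle to a pulse width via p = m*alpha + b, rounded to whole steps."""
    return round(m * alpha + b)


print(m, b)                # 1.0 150.0
print(angle_to_pulse(10))  # 160
```

Rounding happens only at the end, because the driver accepts whole steps while the fitted line is continuous.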

Low-pass filtering of input values

The speed of servo movement is controlled by incrementing or decrementing the pulse width step by step at a fixed rate (currently 100 Hz) until the desired angle has been reached. To smooth the overall servo movement and to avoid problems with jittery input signals, I implemented a temporal low-pass filter between the computation of the input angles and the output pulse width. I will see how this works out once face detection is implemented. So the next step will be to hook up a webcam and get face detection running using OpenCV...
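The post does not say which low-pass filter is used, so the sketch below assumes a first-order exponential moving average on the input angle, followed by rate limiting of the pulse width (at most max_step driver steps per tick). The class name SmoothedServo and its parameters are hypothetical; slope and intercept play the roles of m and b from the calibration.

```python
class SmoothedServo:
    """Hypothetical sketch: low-pass filtered angle input, rate-limited pulse output."""

    def __init__(self, slope, intercept, smoothing=0.2, max_step=1, start_pulse=150):
        self.slope = slope            # m from the calibration fit
        self.intercept = intercept    # b from the calibration fit
        self.smoothing = smoothing    # EMA factor, 0 < smoothing <= 1
        self.max_step = max_step      # largest pulse change per tick (driver steps)
        self.pulse = start_pulse      # current pulse width (driver steps)
        self.filtered_angle = None

    def update(self, raw_angle):
        """Call once per tick (every 10 ms at 100 Hz); returns the new pulse width."""
        # First-order low-pass filter (exponential moving average) on the angle.
        if self.filtered_angle is None:
            self.filtered_angle = float(raw_angle)
        else:
            self.filtered_angle += self.smoothing * (raw_angle - self.filtered_angle)
        # Remap the filtered angle to a target pulse width: p = m * angle + b.
        target = round(self.slope * self.filtered_angle + self.intercept)
        # Move towards the target by at most max_step per tick.
        delta = max(-self.max_step, min(self.max_step, target - self.pulse))
        self.pulse += delta
        return self.pulse


if __name__ == "__main__":
    servo = SmoothedServo(slope=1.0, intercept=150.0, smoothing=0.5)
    print(servo.update(0))   # 150: filter initialised, already at target
    print(servo.update(20))  # 151: filtered angle 10 -> target 160, one step taken
```

The filter absorbs short spikes in the input angle, while the step limit alone sets the maximum servo speed (here max_step steps every 10 ms); in a real loop, the returned pulse width would be written to the driver on each tick.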

hanno
