To build a mocap system, you first need some mocap cameras. A commercial camera will have a strobe light, a high-throughput IR sensor, and some DSP behind it to read the image and find the 'blobs': the strobe's light reflected back from the markers the subject is wearing.
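As a rough sketch of what that blob-finding stage does (not how any commercial camera actually implements it), the idea is to threshold the image and then group the bright pixels into connected regions, reporting the centre of each. The function name `find_blobs`, the default threshold of 200, and the choice of 4-connectivity are all my own assumptions for illustration.

```python
from collections import deque


def find_blobs(image, threshold=200):
    """Return (centre_x, centre_y, area) for each 4-connected bright region.

    `image` is a list of rows, each row a list of 0-255 grey values.
    The threshold value is an assumption; it would need tuning per camera.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or image[y][x] < threshold:
                continue
            # Flood fill outward from this unvisited bright pixel.
            queue = deque([(x, y)])
            seen[y][x] = True
            sum_x = sum_y = area = 0
            while queue:
                px, py = queue.popleft()
                sum_x += px
                sum_y += py
                area += 1
                for nx, ny in ((px + 1, py), (px - 1, py),
                               (px, py + 1), (px, py - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx]
                            and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # The centroid is the mean position of the region's pixels.
            blobs.append((sum_x / area, sum_y / area, area))
    return blobs
```

Pure Python like this would be far too slow for live video on a Pi, so treat it only as a description of the logic; a real version would need a much faster inner loop.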

I think I can make this out of a Raspberry Pi 3, plus the v2 NoIR camera, plus an 'off the shelf' IR strobe unit.

I will need to do quite a lot to make it work, though.

- Develop a 'Command & Control' protocol and state machine to manage the camera

- Develop a 'packet scheduling' technique to return mocap data (centroids) and images in a sensible way

- Develop a blob detection algorithm

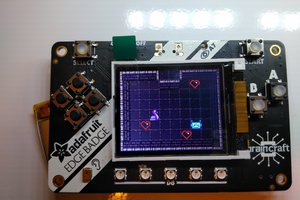

- Develop a test application to control the camera(s) and view images / blobs

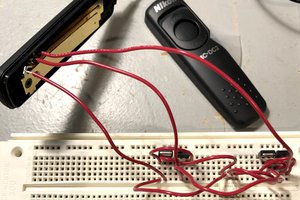

- Build control gear to 'flash' the strobe

- Build a hard-wired sync system so all cameras strobe at the same moment

- Develop an interface between the Pi camera and my blob detection code

- Develop a method to read from the camera (still or video frame) in step with the sync

- Build a box for the camera / Pi
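To give a feel for the "return mocap data" item, here is a minimal sketch of how one frame's centroids might be packed into a binary packet. Nothing here is a settled design: the layout (a little-endian 32-bit frame number, a 16-bit count, then an x/y pair of 32-bit floats per centroid) and the names `pack_centroids` / `unpack_centroids` are assumptions I made up for illustration, and it does not attempt the scheduling of images alongside centroids.

```python
import struct

# Hypothetical wire layout, little-endian with no padding:
#   header:   uint32 frame number, uint16 centroid count
#   centroid: float32 x, float32 y (pixel coordinates)
HEADER = struct.Struct("<IH")
CENTROID = struct.Struct("<ff")


def pack_centroids(frame_no, centroids):
    """Serialise one frame's centroids into a bytes payload."""
    payload = HEADER.pack(frame_no, len(centroids))
    for x, y in centroids:
        payload += CENTROID.pack(x, y)
    return payload


def unpack_centroids(payload):
    """Inverse of pack_centroids: return (frame_no, [(x, y), ...])."""
    frame_no, count = HEADER.unpack_from(payload, 0)
    offset = HEADER.size
    centroids = []
    for _ in range(count):
        centroids.append(CENTROID.unpack_from(payload, offset))
        offset += CENTROID.size
    return frame_no, centroids
```

With this layout a frame of two centroids is 22 bytes (6 for the header, 8 per centroid), so centroid packets stay tiny compared with images, which is why the two probably want different handling.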
