"...this is the closest they've ever been to creating a robot so similar to the real thing..."

Compared to man-made machines, animals show remarkable behavioral performance in many respects. Although there has been tremendous progress in building (autonomous) robotic systems over the last decades, these systems still often fail miserably:

In contrast, if you have ever tried to catch a fly in flight with your hand, or watched a dragonfly intercept prey in mid-flight in a video recorded at 1000 frames/sec, you will realize that even insects, despite their relatively small nervous systems, outperform current robotic systems in computational and energy efficiency, speed, and robustness across different environmental contexts. Hence, from an engineer's perspective, the mechanisms underlying behavioral control in insects are of potentially great interest; or, to put it in the wise words of Grandmaster Miyagi:

Collision avoidance based on insect-inspired processing of visual motion information

A prerequisite for behavioral control in animals, as well as in autonomous robotic systems, is the acquisition and processing of environmental information. Flying insects, such as honeybees or flies, rely mainly on information provided by their visual system when performing behavioral tasks such as avoiding collisions, approaching targets, or navigating in cluttered environments. In this context, visual motion is an important source of information, as it provides cues about a) self-motion, b) moving objects, and c) the three-dimensional layout of the environment.

In the visual system of flying insects, certain types of motion-sensitive interneurons have been identified that respond to visual motion in a direction-selective way. Although neuroscientists are still working to unravel the specific physiological and computational mechanisms of these cells, their response characteristics can be modeled computationally by so-called Hassenstein-Reichardt correlators, also known as Elementary Motion Detectors (EMDs). The EMD concept was originally proposed by Bernhard Hassenstein and Werner Reichardt in the 1950s to explain optomotor turning responses in weevils. Later studies, which compared EMD responses with electrophysiological recordings from motion-sensitive interneurons, showed that EMDs can explain to a large extent how responses in the fly's visual motion pathway depend on the parameters of a moving visual stimulus.
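The correlation principle behind an EMD can be illustrated with a minimal sketch in Python (NumPy). It assumes a first-order low-pass filter as the delay stage, a one-dimensional row of receptors, and no high-pass prefiltering or output nonlinearities; the function names `lowpass` and `emd_array` and the parameter values are hypothetical choices for illustration, not code from BIVAC or from the original model.

```python
import numpy as np


def lowpass(signal, tau, dt):
    """First-order (exponential) low-pass filter along axis 0 (time).

    Serves as the "delay" stage of the detector; tau is the time constant in s.
    """
    alpha = dt / (tau + dt)
    out = np.empty_like(signal, dtype=float)
    out[0] = signal[0]  # start in steady state to avoid an onset transient
    for t in range(1, signal.shape[0]):
        out[t] = out[t - 1] + alpha * (signal[t] - out[t - 1])
    return out


def emd_array(frames, tau=0.05, dt=0.01):
    """Hassenstein-Reichardt detectors between neighbouring receptors.

    frames: array of shape (T, N), luminance of N receptors over T time steps.
    Returns shape (T, N - 1); positive values signal motion towards
    increasing receptor index, negative values the opposite direction.
    """
    frames = np.asarray(frames, dtype=float)
    delayed = lowpass(frames, tau, dt)
    # Each half-detector multiplies the delayed signal of one receptor with
    # the undelayed signal of its neighbour; subtracting the mirror-symmetric
    # half-detector makes the output direction-selective.
    return delayed[:, :-1] * frames[:, 1:] - frames[:, :-1] * delayed[:, 1:]


if __name__ == "__main__":
    t = np.arange(500)[:, None] * 0.01          # 5 s sampled at 100 Hz
    x = np.arange(20)[None, :]                  # 20 receptors in a row
    k, w = 2 * np.pi / 8, 2 * np.pi * 2.0       # wavelength 8 receptors, 2 Hz
    rightward = np.sin(k * x - w * t)           # grating drifting to higher x
    leftward = np.sin(k * x + w * t)            # same grating, opposite direction
    print(emd_array(rightward).mean(), emd_array(leftward).mean())  # > 0, < 0
```

Each detector subtracts two mirror-symmetric half-detectors, so a static pattern yields zero output and reversing the direction of motion flips the sign of the mean response.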

One interesting property of motion-sensitive interneurons and EMDs is that, during (translational) self-motion, their response depends on the nearness of objects in the environment. When navigating in cluttered environments, an autonomous agent needs some representation of its relative distance to obstacles in order to avoid collisions. To solve this task, today's robotic systems usually rely on active sensors (e.g. laser range finders) or on computationally intensive methods (e.g. Lucas-Kanade optic flow computation). However, in my former lab we showed that it is possible to navigate autonomously through cluttered environments while avoiding collisions, based purely on nearness estimates derived from visual motion using EMDs. From a technical perspective, this biomimetic approach has several advantages over conventional systems:

- due to its highly parallel architecture and the low resolution of the camera frames, it is very fast

- it has low computational and energy requirements, making it suitable for integration into robotic systems where weight and energy consumption are critical (e.g. flying drones)

- it can be implemented using only low-cost hardware (i.e. a Raspberry Pi 3 and a webcam)
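The dependence on nearness follows from simple geometry: during pure translation at speed v, a static object at distance d, seen at an angle θ from the direction of travel, moves across the retina with angular velocity ω = v * sin(θ) / d. Dividing by sin(θ) therefore yields v / d, a nearness estimate up to the unknown speed factor. The sketch below assumes ideal angular velocities as input; real EMD responses are not proportional to image velocity and also depend on pattern contrast and spatial frequency, so nearness maps derived from them are only approximations. The function names and the `min_sin` cutoff are hypothetical.

```python
import numpy as np


def translational_flow(distances, azimuths, speed):
    """Angular image velocity (rad/s) of static points during pure translation.

    azimuths are measured from the direction of travel (rad), distances in m.
    """
    return speed * np.sin(azimuths) / np.asarray(distances, dtype=float)


def relative_nearness(flow, azimuths, min_sin=0.1):
    """Invert the flow equation up to the unknown speed factor.

    Returns |flow| / |sin(azimuth)|, i.e. speed / distance. Directions close
    to the focus of expansion or contraction (|sin| < min_sin) carry almost
    no depth information and are set to NaN.
    """
    flow = np.asarray(flow, dtype=float)
    s = np.abs(np.sin(np.asarray(azimuths, dtype=float)))
    nearness = np.full_like(flow, np.nan)
    valid = s >= min_sin
    nearness[valid] = np.abs(flow[valid]) / s[valid]
    return nearness


if __name__ == "__main__":
    az = np.deg2rad([30.0, 90.0, 150.0])
    d = np.array([1.0, 2.0, 0.5])
    flow = translational_flow(d, az, speed=0.5)
    print(relative_nearness(flow, az))  # equals 0.5 / d
```

Note that the estimate is relative: without knowing the robot's speed, it ranks obstacles by nearness but does not give metric distances.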

BIVAC - Bio-Inspired Visual Collision Avoidance System

My current work at the Biomechatronics Group at CITEC (Bielefeld, Germany) involves developing and implementing bio-inspired models for autonomous visual navigation on our robot HECTOR. In a recent paper, we successfully implemented the bio-inspired model for visual collision avoidance described above in simulations of the robot:

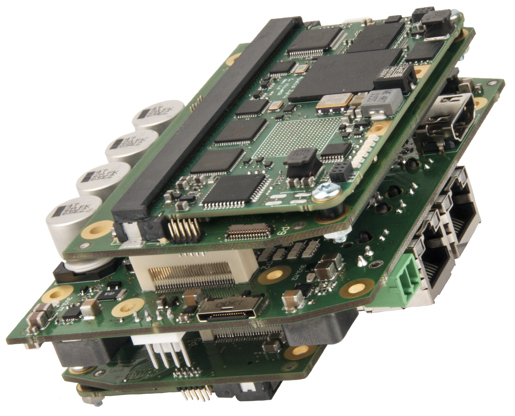

By estimating the relative nearness of objects in its environment, HECTOR is able to navigate to a goal position without colliding with obstacles, even in cluttered environments. Exploiting the highly parallel architecture of the insect visual system, we designed a dedicated FPGA-based hardware module that theoretically allows frame rates of up to approx. 10 kHz, which makes the system suitable even for fast-moving autonomous platforms such as flying drones.
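One simple way to combine a panoramic nearness map with a goal direction is a vector sum: the nearness-weighted mean of all viewing directions points towards nearby obstacles, so the robot steers towards the goal while being pushed away from that vector. The following is a hypothetical sketch of this idea (function name, sign convention, and `weight` parameter are assumptions), not the controller used on HECTOR or in the published model.

```python
import numpy as np


def steering_direction(nearness, azimuths, goal_azimuth, weight=1.0):
    """Heading (rad, relative to the current heading) that avoids obstacles.

    nearness: relative nearness per viewing direction.
    azimuths: viewing directions in rad; 0 = straight ahead, positive = left.
    goal_azimuth: direction of the goal in the same convention.
    """
    nearness = np.asarray(nearness, dtype=float)
    # Mean nearness vector: points towards the nearest obstacles.
    nx = np.mean(nearness * np.cos(azimuths))
    ny = np.mean(nearness * np.sin(azimuths))
    # Unit vector towards the goal, pushed away from the obstacle vector.
    hx = np.cos(goal_azimuth) - weight * nx
    hy = np.sin(goal_azimuth) - weight * ny
    return np.arctan2(hy, hx)


if __name__ == "__main__":
    az = np.linspace(-np.pi, np.pi, 36, endpoint=False)
    # Hypothetical scene: a close obstacle on the left, background far away.
    near_left = np.where(np.abs(az - np.pi / 2) < 0.5, 5.0, 0.1)
    print(steering_direction(near_left, az, goal_azimuth=0.0))  # negative: turn right
```

When nearness is evenly distributed around the robot, the obstacle term vanishes and the heading simply points at the goal; `weight` trades off goal attraction against obstacle avoidance.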

The system was developed in cooperation with the Cognitronics and Sensor Systems Group at CITEC Bielefeld and is based on a Xilinx Zynq SoC. Using a panoramic fisheye lens and camera images with a resolution of only 128x128 pixels, the system was successfully tested on our walking robot HECTOR (the corresponding paper is currently under review):

Since not every hobbyist tinkering with robotics has the technical and financial resources to build a system like the one described above, I decided to make the underlying algorithms for visual collision avoidance accessible to a wider audience. BIVAC (Bio-Inspired Visual Avoidance of Collisions) is therefore a low-cost module for bio-inspired visual collision avoidance on autonomous robots that requires only cheap off-the-shelf parts (i.e. a Raspberry Pi, a RasPi camera, and a cheap panoramic lens) plus access to a 3D printer. Its performance should be sufficient to allow slow-moving robots to avoid collisions with obstacles while navigating in visually cluttered environments, based on neuronal mechanisms found in the visual system of flying insects.
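With a cheap panoramic lens, the raw camera frame shows the surroundings as a disc or ring. A common preprocessing step, assumed here rather than taken from the BIVAC code, is to remap that ring into a rectangular strip in which each column corresponds to one azimuth, so that neighbouring EMD inputs correspond to neighbouring viewing directions. The function name, center, and radii below are hypothetical and would have to be calibrated for the actual lens.

```python
import numpy as np


def unwrap_panorama(image, center, r_min, r_max, n_azimuth=360, n_radius=32):
    """Nearest-neighbour remap of a ring-shaped panoramic image to a strip.

    center: (row, col) of the optical axis in the image.
    Output rows correspond to radius (from r_max down to r_min), columns to
    azimuth. No lens calibration is applied, so elevation is only assumed to
    vary monotonically with radius.
    """
    cy, cx = center
    azimuth = np.linspace(0, 2 * np.pi, n_azimuth, endpoint=False)
    radius = np.linspace(r_max, r_min, n_radius)
    rr, aa = np.meshgrid(radius, azimuth, indexing="ij")
    # Polar to pixel coordinates (image y axis points down, so azimuth
    # increases clockwise on screen), rounded to the nearest pixel.
    x = np.clip(np.rint(cx + rr * np.cos(aa)).astype(int), 0, image.shape[1] - 1)
    y = np.clip(np.rint(cy + rr * np.sin(aa)).astype(int), 0, image.shape[0] - 1)
    return image[y, x]


if __name__ == "__main__":
    frame = np.random.rand(128, 128)  # stand-in for a 128x128 camera frame
    strip = unwrap_panorama(frame, center=(64, 64), r_min=20, r_max=60)
    print(strip.shape)  # (32, 360)
```

Nearest-neighbour sampling keeps the remap cheap enough for a Raspberry Pi; a precomputed index table (the `x` and `y` arrays) could be reused for every frame.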

hanno