AIMos On A Pi

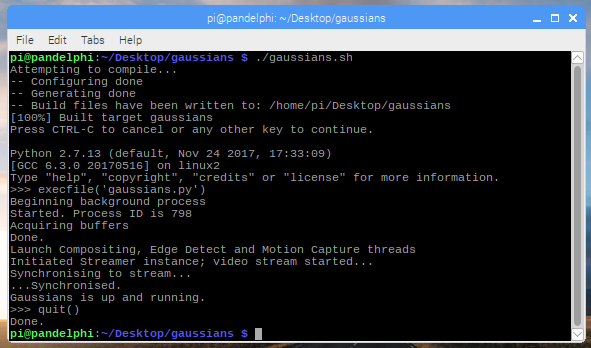

I've had to do quite a lot of chopping to fit AIMos' motion tracker into a Pi. It was designed to run on a dual 3.2GHz Intel laptop motherboard at 640x480 resolution and 15 FPS. Using this camera [an old PS2 cam, the one with the differential mics on top] and my Athlon X2, in greyscale at 160x120, it can comfortably hit 125 FPS. Great camera: it even has a zoom lens and works in near darkness, but it won't fit in the skull.

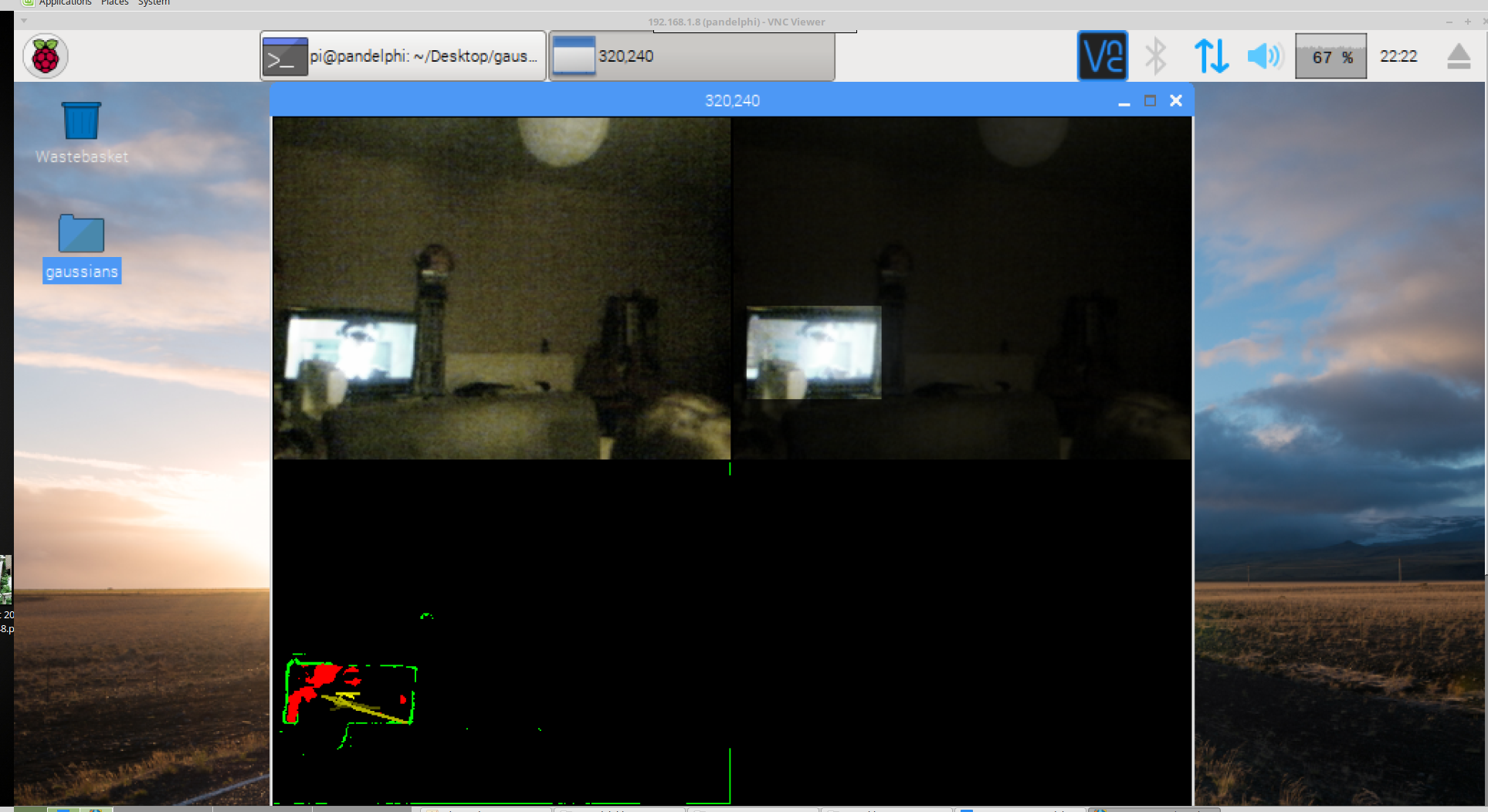

Pandelphi will have to put up with 320x240 RGB at 5 FPS. It will do 8 FPS, but I'd rather leave a bit of headroom for AI processing.
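
For a sense of how much the workload shrinks, here is the raw pixel arithmetic for the modes mentioned so far. The bytes-per-pixel figures are assumptions (1 for greyscale, 3 for 24-bit RGB, with the original laptop mode assumed to be RGB), and pixel rate is only a rough proxy for tracker load.

```python
# Rough pixel-throughput comparison of the capture modes mentioned above.
# Assumption: 1 byte/pixel for greyscale, 3 bytes/pixel for 24-bit RGB;
# the original laptop mode is assumed to be RGB.
MODES = {
    "original laptop (640x480 @ 15 FPS)": (640, 480, 15, 3),
    "Athlon X2, greyscale (160x120 @ 125 FPS)": (160, 120, 125, 1),
    "Pi, RGB (320x240 @ 5 FPS)": (320, 240, 5, 3),
    "Pi, RGB flat out (320x240 @ 8 FPS)": (320, 240, 8, 3),
}


def throughput(width, height, fps, bytes_per_pixel):
    """Return (pixels per second, bytes per second) for one capture mode."""
    pixels = width * height * fps
    return pixels, pixels * bytes_per_pixel


if __name__ == "__main__":
    for name, mode in MODES.items():
        px, nbytes = throughput(*mode)
        print(f"{name}: {px / 1e6:.2f} Mpx/s, {nbytes / 1e6:.2f} MB/s")
```

At 5 FPS the Pi handles 384,000 pixels a second, exactly a twelfth of the original 640x480 at 15 FPS, and running at 5 rather than 8 FPS uses 62.5% of its maximum frame rate.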

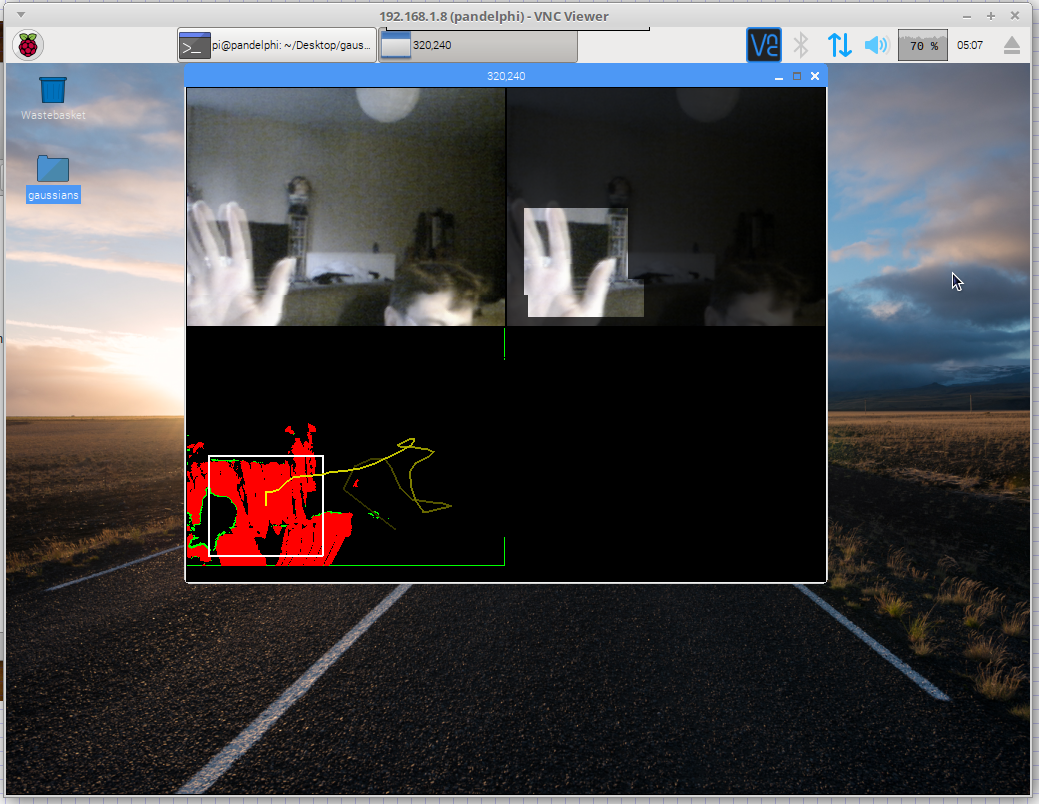

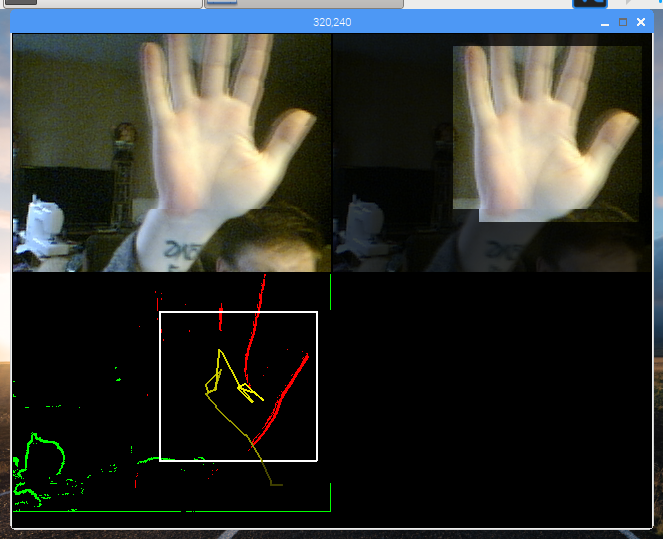

The motion tracker doesn't just sense motion. It also has a time-of-flight mechanism that records the vector of any motion as well as where it is, and can use this to build a predictive model of what's happening. A thrown object still takes around a second to cross the screen from side to side, so at 5 FPS AIMos gets 5 grabs of it and can make a vector, computing not only direction but acceleration too.
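
The vector step can be illustrated as a least-squares fit of a constant-acceleration path to the last few tracked positions. This is a minimal sketch using NumPy's `polyfit`, not AIMos' actual code; the function names and the 5 FPS timestamps in the example are assumptions.

```python
import numpy as np


def fit_motion(times, xs, ys):
    """Fit x(t) and y(t) with quadratics (constant acceleration) by least squares.

    Returns (position, velocity, acceleration) as 2-vectors at the latest grab.
    Needs at least 3 grabs; 5 gives some tolerance to noise.
    """
    t = np.asarray(times, dtype=float)
    t = t - t[-1]  # put t = 0 at the most recent grab
    cx = np.polyfit(t, xs, 2)  # x(t) ~= cx[0]*t**2 + cx[1]*t + cx[2]
    cy = np.polyfit(t, ys, 2)
    position = np.array([cx[2], cy[2]])
    velocity = np.array([cx[1], cy[1]])               # first derivative at t = 0
    acceleration = np.array([2 * cx[0], 2 * cy[0]])   # second derivative
    return position, velocity, acceleration


def predict(position, velocity, acceleration, dt):
    """Extrapolate the fitted path dt seconds past the latest grab."""
    return position + velocity * dt + 0.5 * acceleration * dt ** 2


if __name__ == "__main__":
    # Hypothetical throw: five grabs at 5 FPS, crossing a 320-pixel frame in about a second.
    times = [0.0, 0.2, 0.4, 0.6, 0.8]
    xs = [10 + 300 * t for t in times]
    ys = [120 + 100 * t * t for t in times]
    p, v, a = fit_motion(times, xs, ys)
    print("velocity", v, "acceleration", a, "next grab", predict(p, v, a, 0.2))
```

Fitting a quadratic rather than differencing consecutive frames means a single noisy grab doesn't dominate the velocity and acceleration estimates.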

It can use this to detect gestures without complete recognition. If the analysis doesn't see a complete hand, but something is moving like one, it could be someone waving. Biometric sensors help determine what the motion is likely to be by checking whether there is a changing capacitive field in that direction too.
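
A crude way to say "moving like a hand" without recognising one is to look for back-and-forth motion. The sketch below counts direction reversals in a track's horizontal position; the thresholds and function names are hypothetical, and it leaves out the capacitive cross-check described above.

```python
def count_reversals(xs, deadband=5.0):
    """Count left/right direction changes in a sequence of x positions.

    Steps smaller than `deadband` pixels are treated as jitter and skipped.
    """
    reversals = 0
    direction = 0  # +1 moving right, -1 moving left, 0 not yet known
    for a, b in zip(xs, xs[1:]):
        step = b - a
        if abs(step) < deadband:
            continue
        new_direction = 1 if step > 0 else -1
        if direction and new_direction != direction:
            reversals += 1
        direction = new_direction
    return reversals


def looks_like_wave(xs, min_reversals=2, min_span=20.0, deadband=5.0):
    """Guess whether a track is a wave: wide enough, and swinging back and forth."""
    if not xs or max(xs) - min(xs) < min_span:
        return False
    return count_reversals(xs, deadband) >= min_reversals
```

A thrown object moves one way across the frame and produces no reversals, so this heuristic separates it from a waving hand even though both are fast, hand-sized blobs.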

I've added Adafruit's PWM library to the Raspberry Pi, and now drive the servo board directly over I2C. This dispenses with the Arduino, which I am mightily happy about. It's been nothing but trouble, requiring lots of messing around with toolchains and an unreliable data transport.

Using I2C and a dedicated controller board made all that hassle go away. It compiles in one step, runs nicely and stably for over 24 hours [while VNC on the big machine I use to view it fell over numerous times] and shuts down cleanly too.
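
Assuming the servo board is Adafruit's PCA9685-based 16-channel driver (the chip isn't named here), the arithmetic the PWM library handles looks roughly like this. The register addresses, 25 MHz oscillator and 12-bit counter come from the PCA9685 datasheet; the 1000 to 2000 microsecond pulse range is a typical hobby-servo assumption, and the helper names are hypothetical.

```python
# PCA9685 constants from its datasheet (assumed chip on the servo board).
MODE1 = 0x00        # mode register, configured during setup (not shown)
PRESCALE = 0xFE     # PWM frequency prescaler register
LED0_ON_L = 0x06    # each channel has 4 registers: ON_L, ON_H, OFF_L, OFF_H
OSC_HZ = 25_000_000  # internal oscillator frequency
STEPS = 4096         # 12-bit PWM counter


def prescale_for(freq_hz):
    """PRESCALE register value for a PWM frequency (datasheet formula)."""
    return round(OSC_HZ / (STEPS * freq_hz)) - 1


def angle_to_pulse_us(angle, min_us=1000.0, max_us=2000.0, max_angle=180.0):
    """Map a servo angle to a pulse width; the range is a hypothetical typical servo."""
    angle = max(0.0, min(max_angle, angle))
    return min_us + (max_us - min_us) * angle / max_angle


def pulse_to_ticks(pulse_us, freq_hz):
    """Convert a pulse width in microseconds to 12-bit counter ticks."""
    period_us = 1_000_000 / freq_hz
    return round(pulse_us * STEPS / period_us)


def channel_write(channel, on_ticks, off_ticks):
    """First register and the 4 bytes that set one channel's on/off points."""
    if not 0 <= channel <= 15:
        raise ValueError("PCA9685 has channels 0-15")
    register = LED0_ON_L + 4 * channel
    data = [on_ticks & 0xFF, on_ticks >> 8, off_ticks & 0xFF, off_ticks >> 8]
    return register, data


if __name__ == "__main__":
    freq = 50  # typical analogue servo refresh rate
    ticks = pulse_to_ticks(angle_to_pulse_us(90), freq)
    print("prescale:", prescale_for(freq))
    print("centre:", channel_write(0, 0, ticks))
```

On the Pi, the register and bytes could be sent with the `smbus` module's `write_i2c_block_data()` to the board's default address 0x40, provided the chip's auto-increment bit (0x20 in MODE1) is set so consecutive registers are written in one transfer.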

Here's Gaussians running on the Pi, watching the TV. Not very interesting, I'm afraid, but it shows how the tracker pays attention to areas of interest based on movement.
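
How the Gaussians stage works internally isn't described here. As an illustration only, one common way to turn movement into areas of interest is a per-pixel running Gaussian background model: pixels that stray more than k standard deviations from their running mean are flagged as motion, and the centroid of the flagged pixels becomes the point of attention. The class name and all parameters are assumptions.

```python
import numpy as np


class GaussianBackground:
    """Running single-Gaussian background model, one mean/variance per pixel."""

    def __init__(self, first_frame, alpha=0.05, k=2.5, init_var=25.0, min_var=4.0):
        self.mean = np.asarray(first_frame, dtype=float).copy()
        self.var = np.full_like(self.mean, init_var)
        self.alpha = alpha      # learning rate: how fast the background adapts
        self.k = k              # motion threshold in standard deviations
        self.min_var = min_var  # floor so static pixels don't become hypersensitive

    def update(self, frame):
        """Return a boolean mask of pixels that look like motion, then adapt the model."""
        f = np.asarray(frame, dtype=float)
        diff = f - self.mean
        motion = diff ** 2 > (self.k ** 2) * self.var
        self.mean += self.alpha * diff
        self.var = np.maximum(self.min_var,
                              (1 - self.alpha) * self.var + self.alpha * diff ** 2)
        return motion


def attention_point(mask):
    """Centroid (x, y) of the motion mask, or None if nothing moved."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())
```

Because the model keeps adapting, something that stops moving gradually fades back into the background, which is what lets attention drift between areas of movement.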

And there's the first test after connecting the servos to the tracker code.

For some reason the tracker seems to like the lightshade, and when it catches it at the top edge of the screen it centres on that rather than the TV. It needs a bit of tweaking to limit the vertical motion and to slow down frame reacquisition after movement. What it is doing is picking out the outline of the lightshade against a blank background, and when the head moves, so does the light.
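
Both tweaks can be sketched as a small pan/tilt controller: tilt gets a lower gain and a narrow range, every move is capped in size, and a few frames are ignored after each move so the head's own motion isn't mistaken for something moving in the scene. The gains, limits, frame counts and class name are hypothetical, and the sign of each correction depends on how the servos are mounted.

```python
from dataclasses import dataclass, field


def clamp(value, lo, hi):
    """Limit value to the range [lo, hi]."""
    return max(lo, min(hi, value))


@dataclass
class HeadController:
    """Turn a target's offset from frame centre into pan/tilt servo angles (degrees)."""
    pan: float = 90.0
    tilt: float = 90.0
    pan_gain: float = 0.1     # degrees of pan per pixel of horizontal error
    tilt_gain: float = 0.05   # weaker than pan to damp vertical hunting
    tilt_min: float = 75.0    # narrow tilt range so it can't keep
    tilt_max: float = 105.0   # chasing things at the top edge
    max_step: float = 5.0     # largest move allowed per frame, degrees
    deadband: float = 0.5     # ignore corrections smaller than this
    settle_frames: int = 3    # frames ignored after each move
    _cooldown: int = field(default=0, init=False, repr=False)

    def step(self, target, frame_size=(320, 240)):
        """Process one frame's target (x, y) or None. Returns True if the head moved."""
        if self._cooldown > 0:
            # The image is still shifting because the head itself moved: ignore it.
            self._cooldown -= 1
            return False
        if target is None:
            return False
        err_x = target[0] - frame_size[0] / 2
        err_y = target[1] - frame_size[1] / 2
        # Hypothetical sign convention: flip these if the servos are mounted the other way.
        dpan = clamp(err_x * self.pan_gain, -self.max_step, self.max_step)
        dtilt = clamp(err_y * self.tilt_gain, -self.max_step, self.max_step)
        new_pan = clamp(self.pan + dpan, 0.0, 180.0)
        new_tilt = clamp(self.tilt + dtilt, self.tilt_min, self.tilt_max)
        moved = (abs(new_pan - self.pan) > self.deadband
                 or abs(new_tilt - self.tilt) > self.deadband)
        if moved:
            self.pan, self.tilt = new_pan, new_tilt
            self._cooldown = self.settle_frames
        return moved
```

The settle period is the "slow down frame reacquisition" part: after each move the tracker simply doesn't act on the next few frames, giving the motion model time to re-learn the shifted scene.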

Normally there will be objects in the scene; a blank wall causes trouble. When the head moves the entire scene should change, but against a blank wall it doesn't, so anything that stands out against it becomes the focus of attention as it moves about.

To get rid of this annoying behaviour, I'll have to build in boredom, so that it seeks out other things to look at after a while. AIMos has always found the TV fascinating.
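
Boredom could be modelled as habituation: interest in a region fades while it is being watched and slowly recovers while it isn't, and the tracker picks the target with the best salience discounted by boredom. This is a minimal sketch with hypothetical names and rates; regions are assumed to be keyed on coarse head angles (so they stay fixed to the room rather than the camera frame), and salience is assumed to come from the motion tracker.

```python
from collections import defaultdict


class Boredom:
    """Habituation: interest in a region fades while watched and recovers otherwise."""

    def __init__(self, tire_rate=0.2, recover_rate=0.05):
        self.level = defaultdict(float)  # region -> boredom, 0.0 (fresh) towards 1.0
        self.tire_rate = tire_rate        # how quickly a watched region gets boring
        self.recover_rate = recover_rate  # how quickly unwatched regions recover

    def watch(self, region):
        """Record one frame spent looking at `region`."""
        for key in list(self.level):
            if key != region:
                self.level[key] *= 1.0 - self.recover_rate
        self.level[region] += self.tire_rate * (1.0 - self.level[region])

    def pick(self, candidates):
        """candidates: dict of region -> salience. Return the most interesting region."""
        return max(candidates,
                   key=lambda r: candidates[r] * (1.0 - self.level[r]),
                   default=None)
```

With these rates, three consecutive looks at a target of salience 1.0 drop its score to 0.512, below an unwatched target of salience 0.6, so attention moves on; once the TV has been left alone for a while it becomes interesting again.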

Morning.Star