For some reason, streaming video from a Raspberry Pi camera across a network to a web browser is unnecessarily difficult. You'd think it'd be easy enough to pop a URL into a video tag and all would be great. But obviously it's more complex than that. Once you've factored in video formats, container formats, streaming formats, and the matrix of these which your favorite browser might support, it all just seems a bit broken.

Over the years I've tried many things to get this working, including wrapping MPEG-4 video in streams only Chrome supports ... until it doesn't; or repurposing ffmpeg to generate content which everything supports, but which kills the CPU on the robot in the process. And it all kind of works until you start to notice that the video latency can just make it all unusable anyway. It's difficult to control an ROV when the video latency is a couple of seconds.

So ultimately everyone falls back to the simplest thing - Motion JPEG. Motion JPEG is a sequence of JPEG images, formatted as a multipart HTTP stream, which any browser with an IMG tag will display. One popular application for generating these streams is GStreamer, but GStreamer can do a lot more than just push JPEGs across a network, and for my purposes it's big, ugly and unnecessarily complicated.
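To make the "multipart stream" idea concrete, here is a minimal Python sketch of how such a stream is typically framed on the wire. It is not code from the Camera app. The boundary string `frame` and the helper names `mjpeg_headers` and `mjpeg_part` are made up for illustration; the `multipart/x-mixed-replace` content type is the one browsers commonly accept for Motion JPEG.

```python
# Hypothetical boundary string; any token that cannot appear in the headers works.
BOUNDARY = b"frame"


def mjpeg_headers() -> bytes:
    """HTTP response headers sent once, before the first image."""
    return (
        b"HTTP/1.1 200 OK\r\n"
        b"Content-Type: multipart/x-mixed-replace; boundary=" + BOUNDARY + b"\r\n"
        b"Cache-Control: no-cache\r\n"
        b"\r\n"
    )


def mjpeg_part(jpeg: bytes) -> bytes:
    """Wrap one complete JPEG image as a single part of the stream."""
    return (
        b"--" + BOUNDARY + b"\r\n"
        b"Content-Type: image/jpeg\r\n"
        b"Content-Length: " + str(len(jpeg)).encode("ascii") + b"\r\n"
        b"\r\n"
        + jpeg
        + b"\r\n"
    )
```

A server would write `mjpeg_headers()` once to the socket and then `mjpeg_part(jpeg)` for every image. The browser replaces the picture in the IMG tag each time a new part arrives.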

So, time to write my own.

The new Camera app landed in 8BitModule GIT today and it's the simplest thing. On one end it reads JPEGs directly from the Raspberry Pi camera using the V4L2 interface, and on the other a super simple web server pushes these images across the network to whoever wants them. And that's all it does.

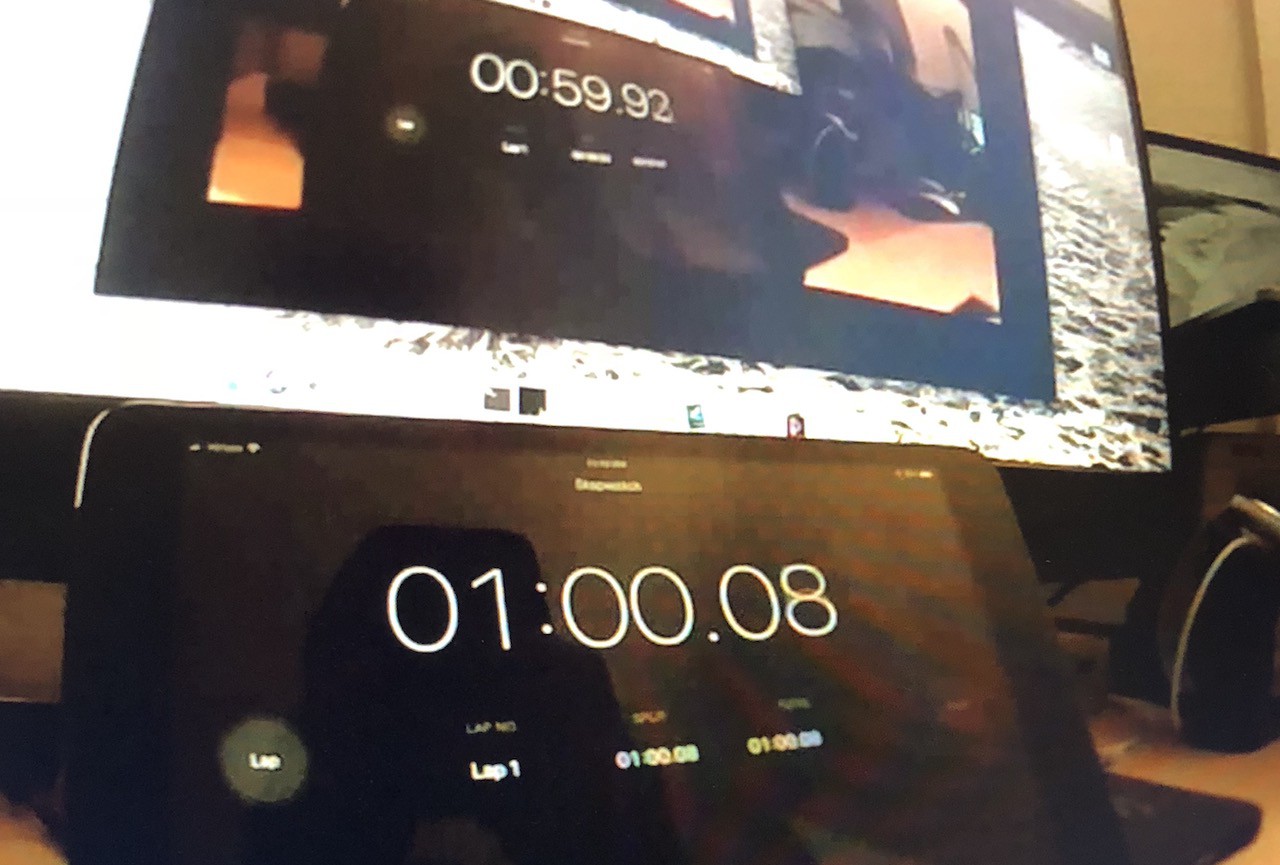

One other reason to write my own Camera app is to manage CPU and latency. The first cut of the app had a latency of 1.5 seconds ... which was a bit depressing. But after lots of experimentation (and experience from my various other attempts), the app comes pre-configured to deliver a latency of about 160ms over WiFi (see the photo above - the top browser is displaying the video of the timer at the bottom). It does this in a few ways. First, the JPEGs are always 1280x720, which appears to be the optimal size. Second, it reads frames from the camera as fast as the camera provides them (which happens to be 30 fps). Finally, it sends these images across the network at 60 fps regardless of the speed of the camera (the camera side and the web server side run in different threads). The result is a stream with minimal latency that consumes only 18% of the Raspberry Pi Zero CPU.
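The two-thread arrangement described above can be sketched roughly like this in Python. This is my reading of the description, not the app's actual code: `LatestFrame`, `capture_loop`, `send_loop`, `read_frame` and `send` are all hypothetical names. The assumption is that the camera thread only ever keeps the newest JPEG, and the sender pushes whatever is newest at a fixed rate, repeating a frame if the camera hasn't produced a new one yet.

```python
import threading
import time


class LatestFrame:
    """Holds only the newest frame; older frames are overwritten, never queued."""

    def __init__(self):
        self._lock = threading.Lock()
        self._frame = None

    def put(self, frame):
        with self._lock:
            self._frame = frame

    def get(self):
        with self._lock:
            return self._frame


def capture_loop(read_frame, latest, stop):
    """Camera side: store frames as fast as read_frame() delivers them.

    read_frame is a hypothetical blocking call that returns one JPEG as
    bytes, or None when the camera has no more frames.
    """
    while not stop.is_set():
        frame = read_frame()
        if frame is None:
            break
        latest.put(frame)


def send_loop(send, latest, stop, fps=60):
    """Network side: push the newest frame at a fixed rate.

    The rate is independent of the camera, so the same frame may be
    sent more than once.
    """
    period = 1.0 / fps
    while not stop.is_set():
        frame = latest.get()
        if frame is not None:
            send(frame)
        time.sleep(period)
```

Each loop would run in its own `threading.Thread`, sharing one `LatestFrame` and one `threading.Event` for shutdown. Because nothing is queued, a slow client can never build up a backlog of stale images, which is one plausible reason this kind of design keeps latency down.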

Why these values are the sweet spot I don't know. If anyone understands the latency of moving an image through a web browser and onto the screen, I'd love to understand that. Delivering too few images to the browser seems to increase the latency, but why is that? Is it possible to get more fps from the camera without a major hit on the CPU? Ultimately, where does that 160ms go? I'd love to know.

Tim Wilkinson
