When i first concieved the project, in the first hardware and firmware revision, i was planning to generate random numbers with this code in arduino's environment:

void setup(){

...

attachInterrupt(digitalPinToInterrupt(2), randomCore, FALLING);

}

void randomCore() {

Serial.println((unsigned int)(( micros() >> 2 ) & 0xFFFF));

return;

}

The right shift by two is done because micros()'s two lower bits are always to zero. The ANDing with 0xFFFF is done to prevent the sequentialiy of the numbers. The micros() value overflows every 70 minutes, we have about 30 new values per minute. If this AND wasn't done, we would have monotonically increasing values for the span of 70 minutes). Doing this, our remaining 16 bit value overflows about every 200 mS, which is acceptable.

Data is printed as a 16 bit number on the serial port and is collected by the computer. This data is then saved to a text file, the numbers are converted in two raw bytes and are analyzed by ent.

The first two-day sampling gave an alarming result:

$ ent randomdat.bin -c Value Char Occurrences Fraction 0 739 0.004077 1 402 0.002218 2 1033 0.005699 3 674 0.003718 ... ... ... 254 � 722 0.003983 255 � 691 0.003812 Total: 181256 1.000000 Entropy = 7.997995 bits per byte. Optimum compression would reduce the size of this 181256 byte file by 0 percent. Chi square distribution for 181256 samples is 498.15, and randomly would exceed this value less than 0.01 percent of the times. Arithmetic mean value of data bytes is 127.4942 (127.5 = random). Monte Carlo value for Pi is 3.138799695 (error 0.09 percent). Serial correlation coefficient is 0.005408 (totally uncorrelated = 0.0).

As you can see, i was getting about half times less "0x01" than all the other bytes, and about 1.5 times more "0x02" than the others. There was clearly something wrong. (byte values from 0x04 to 0xFD are omitted)

At some point i decided to split the high order and low order bytes that i was getting from the 16 bit number into two separate files and analyize them separately. These were the results:

$ ent MSBs.bin -c Value Char Occurrences Fraction 0 358 0.003950 1 393 0.004336 2 347 0.003829 3 333 0.003674 ... ... ... 254 � 374 0.004127 255 � 330 0.003641 Total: 90628 1.000000

The MSB showed no significant problems.

$ ent LSBs.txt -c Value Char Occurrences Fraction 0 381 0.004204 1 9 0.000099 2 686 0.007569 3 341 0.003763 ... ... ... 254 � 348 0.003840 255 � 361 0.003983 Total: 90628 1.000000

But i was obviously having a problem with the LSBs. All my 0x01 values were counted as 0x02.

At this point i was totally clueless about what was going on, but some considerations about the periodicity of the 0x##01 value (where # is a dontcare) saved my day.

This value is periodic by 2^10 microseconds, which is about 1024 milliseconds. This led me to think to how the millis() value is updated via TIMER0_OVF interrupt in Arduino. From Arduino's wiring.c for AVRs:

ISR(TIMER0_OVF_vect)

{

// copy these to local variables so they can be stored in registers

// (volatile variables must be read from memory on every access)

unsigned long m = timer0_millis;

unsigned char f = timer0_fract;

[...omitted...]

timer0_overflow_count++;

}

Basically the prescaler of the timer0 is set so that it overflows every 1024 microseconds: clock frequency is 16MHz, the prescaler is set to 64 and it overflows every 256 counts = overflow every 1024 uS. The function then takes into account for the extra 24 uS per overflow to calculate the milliseconds, and that's how the millis() work.

Just as another side note: 16MHz with 64 as a prescaler gives minimum resolution of 4 uS, and that's why the micros() function has only 4 uS resolution.

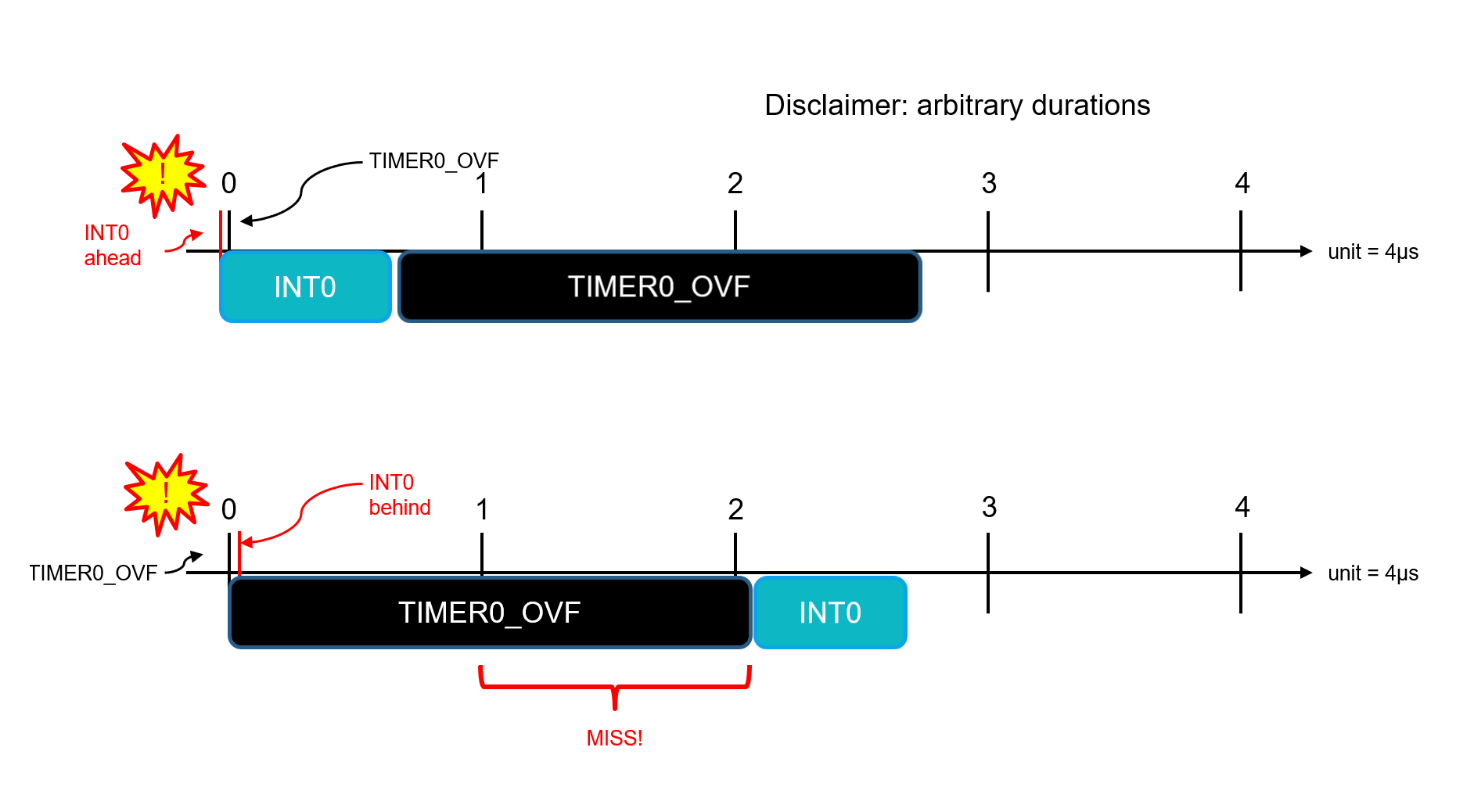

What i think was happening with my problem is this: interrupts have priorities. INT0 (the external interrupt) has the highest priority, while TIMER0_OVF has little priority.

When we have concurrent interrupts in AVR, the lower priority one is queued behind those with higher priority. So the INT0 interrupt should be executed first. In fact, the value 0x00 is registered correctly.

But if the interrupt INT0 arrives slighty after the TIMER0_OVF, the timer overflow interrupt will be already in execution and the external interrupt will be queued behind it. The TIMER0_OVF then takes some time to execute and delays the INT0, to the point that, when it reads the timer with micros(), some microseconds are already passed and the micros() has been already updated to value 0x02, and that's why interrupts generated on 0x01 converge to 0x02.

This is just an hypotesis, the only thing that i've verified is that my hardware is ok, because using the input capture unit the results are good. Suggestions on how to verify the hypotesis are welcome.

valerio\new

valerio\new

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.