In addition to giving this robot build autonomous behaviour (e.g. SLAM navigation), another objective is to explore innovative ways of controlling its walking behaviour and its interaction with the user. The walking and steering motion of the robot can already be controlled via the GUI, the keyboard or an Xbox One game controller.

I am currently working on improving the existing user-controlled functions, as well as implementing some interesting new ones:

Fine-tune input for controlling the walking and steering motion of the robot

I have added a new input mode that lets the user scroll through the predefined walking gaits using the keyboard or controller. In effect, this means the controller can be used to “remote-control” the robot's walking. The walking gaits still need a lot of tuning, but the basic function is now implemented.
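As a rough illustration of how scrolling through gaits might work, here is a minimal Python sketch (not the robot's actual code) that cycles through a list of predefined gaits on "next"/"previous" button presses. The gait names and the two-button scheme are hypothetical. The key idea is to react only when a button goes from released to pressed, so holding a button down moves by one gait instead of skipping through several on every poll.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GaitSelector:
    """Cycles through a fixed list of predefined gaits on button presses.

    Gait names and the next/previous button scheme are hypothetical.
    """
    gaits: list[str]
    index: int = 0
    _prev_next: bool = field(default=False, init=False, repr=False)
    _prev_back: bool = field(default=False, init=False, repr=False)

    def __post_init__(self) -> None:
        if not self.gaits:
            raise ValueError("at least one gait is required")

    @property
    def current(self) -> str:
        return self.gaits[self.index]

    def update(self, next_pressed: bool, back_pressed: bool) -> str:
        # Act only on the rising edge (released -> pressed), so a held
        # button advances by exactly one gait.
        if next_pressed and not self._prev_next:
            self.index = (self.index + 1) % len(self.gaits)
        if back_pressed and not self._prev_back:
            # Python's % wraps negative indices back to the end of the list.
            self.index = (self.index - 1) % len(self.gaits)
        self._prev_next = next_pressed
        self._prev_back = back_pressed
        return self.current
```

In a controller loop, `update()` would be called on every poll with the current button states, and the returned gait name handed on to whatever generates the leg trajectories.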

Exploring interesting ways that a robot 'tail' can interact with the user

I am currently designing a tail for the robot, as a way of giving the design a more animal-like quality and as a fun way for the robot to convey 'emotion' and other types of feedback. I'm not yet sure how it will be actuated; it might not be actuated at all! It will be composed of multiple segments of similar design but decreasing size, resulting in a tapered, snake-like appendage:

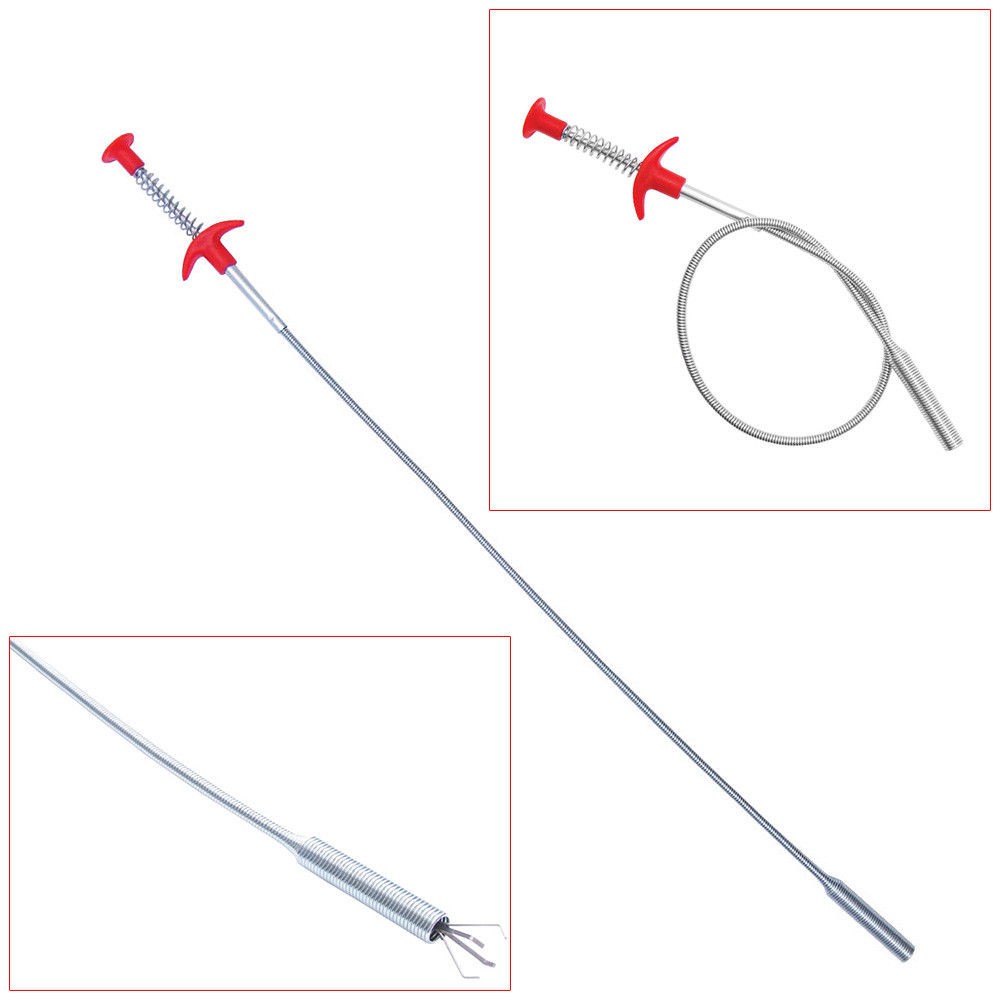
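One simple way to get a smooth taper is to make every segment a uniformly scaled copy of the previous one, so the sizes form a geometric progression. The Python sketch below assumes that design rule; the function names, dimensions and segment count are hypothetical. To go from a base diameter d_base to a tip diameter d_tip over N segments, the scale factor is r = (d_tip / d_base)^(1/(N-1)).

```python
def taper_ratio_for_tip(base_diameter_mm: float, tip_diameter_mm: float,
                        count: int) -> float:
    """Scale factor per segment so the last segment reaches the tip diameter."""
    if count < 2:
        raise ValueError("need at least two segments to taper")
    return (tip_diameter_mm / base_diameter_mm) ** (1.0 / (count - 1))


def tapered_segments(base_length_mm: float, base_diameter_mm: float,
                     ratio: float, count: int) -> list[tuple[float, float]]:
    """Return (length_mm, diameter_mm) for each segment, base first.

    Each segment is the previous one scaled uniformly by `ratio`
    (a hypothetical design rule), so all segments share the same shape.
    """
    if not 0 < ratio <= 1:
        raise ValueError("ratio must be in (0, 1]")
    return [(base_length_mm * ratio ** i, base_diameter_mm * ratio ** i)
            for i in range(count)]
```

For example, tapering from a 40 mm base to a 10 mm tip over 3 segments gives a ratio of 0.5, i.e. diameters of 40, 20 and 10 mm.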

The segments will have a hollow central section, so that a flexible material can be threaded through them for support. So far the best material I have found is the long spring section from a flexible long-reach pick-up tool, which can be found cheaply on eBay:

Adding a 3D sensor head to the robot

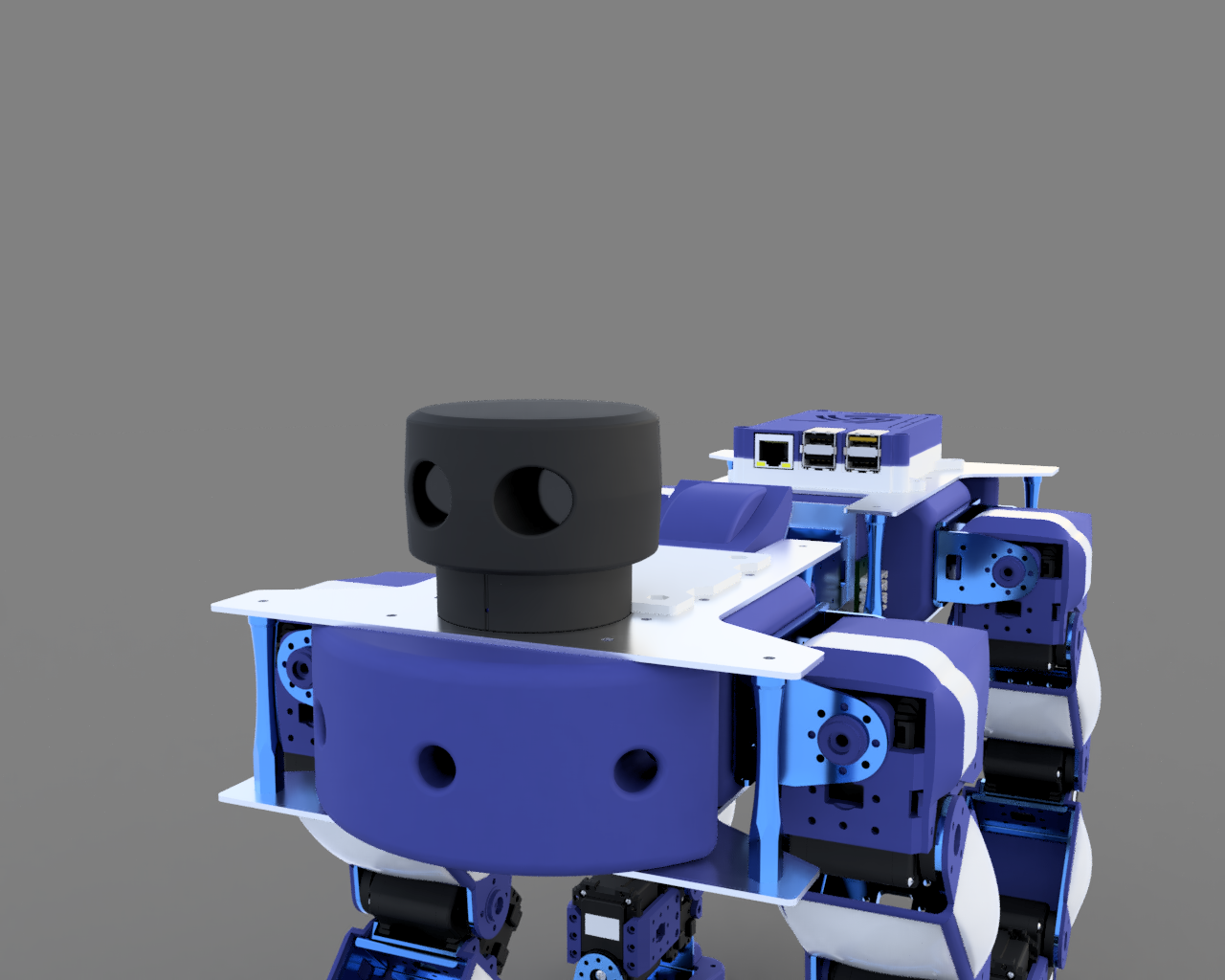

I originally had two candidates in mind for an area scanner to serve as the main “eyes” of the robot: the Kinect v2 and the Scanse Sweep.

The main advantages of the Sweep are that it is designed specifically for robotics, with a long range and the ability to operate in varying light levels. On its own it only scans in a plane by spinning through 360°, but it can be turned into a spherical scanner with little effort.
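A common way to turn a planar scanner into a 3D one is to tilt the whole unit with a servo and combine each range reading with the current tilt angle. The Python sketch below assumes the scan plane is tilted about the sensor's x-axis and that both rotation axes meet at the same point; the function name is hypothetical, and a real mount would also need to correct for the offset between the axes.

```python
import math


def sweep_sample_to_xyz(range_m: float, azimuth_deg: float,
                        tilt_deg: float) -> tuple[float, float, float]:
    """Convert one planar scan sample into a 3D point.

    Assumes the scanner spins about its own z-axis and the whole scan plane
    is then tilted about the x-axis by tilt_deg (no mounting offsets).
    """
    theta = math.radians(azimuth_deg)
    phi = math.radians(tilt_deg)
    # Point in the scanner's own plane (z = 0 before tilting).
    x = range_m * math.cos(theta)
    y = range_m * math.sin(theta)
    # Rotate the in-plane point about the x-axis by the tilt angle.
    return (x, y * math.cos(phi), y * math.sin(phi))
```

Sweeping the tilt servo through a range of angles while collecting full rotations then yields a point cloud covering a sphere around the sensor (minus whatever the mount obstructs).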

The Kinect has good resolution and is geared towards tracking people: it can track up to 6 complete skeletons with 25 joints each. However, it works best indoors, at short range and directly in front of the sensor. It is significantly cheaper than the Sweep, but much bulkier.

More recently, I have been looking at a third option: Intel's RealSense range of depth cameras. The RealSense ZR300 seemed like an ideal choice, but it has since been discontinued, so its successors in the D400 series now look like the best option.

I rendered the various options mounted on the robot for a size comparison:

Updating the user's graphical interface

In previous work on a robot that uses the same servos, I created a Qt-based GUI written in C++ that integrated the USB motor controller as well as the ROS ecosystem.

My idea is to reuse many of this interface's components to improve interaction with the quadruped. This should be fairly straightforward, as the GUI was largely agnostic to the physical configuration of the motors.
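One way an interface can stay agnostic to the motor layout is to keep a small mapping layer between logical joints and physical servos, so only that table changes when moving to a new robot. The Python sketch below illustrates the idea; it is not taken from the original C++/Qt GUI, and the joint names, servo IDs, inversion flags and offsets are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointMapping:
    servo_id: int      # ID on the servo bus (hypothetical values below)
    inverted: bool     # True if the servo is mounted mirror-image
    offset_deg: float  # calibration offset added to the commanded angle


# Hypothetical entries for one leg; a full quadruped would list all joints.
QUADRUPED_LAYOUT = {
    ("front_left", "hip"): JointMapping(1, False, 0.0),
    ("front_left", "knee"): JointMapping(2, True, 5.0),
}


def to_servo_command(layout: dict, leg: str, joint: str,
                     angle_deg: float) -> tuple[int, float]:
    """Translate a logical joint angle into (servo_id, servo_angle_deg)."""
    mapping = layout[(leg, joint)]
    angle = -angle_deg if mapping.inverted else angle_deg
    return mapping.servo_id, angle + mapping.offset_deg
```

The GUI code then only ever deals with logical names such as `("front_left", "knee")`, and swapping robots means swapping the layout table.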

Here are some screenshots showing some of the GUI's features:

Dimitris Xydas
