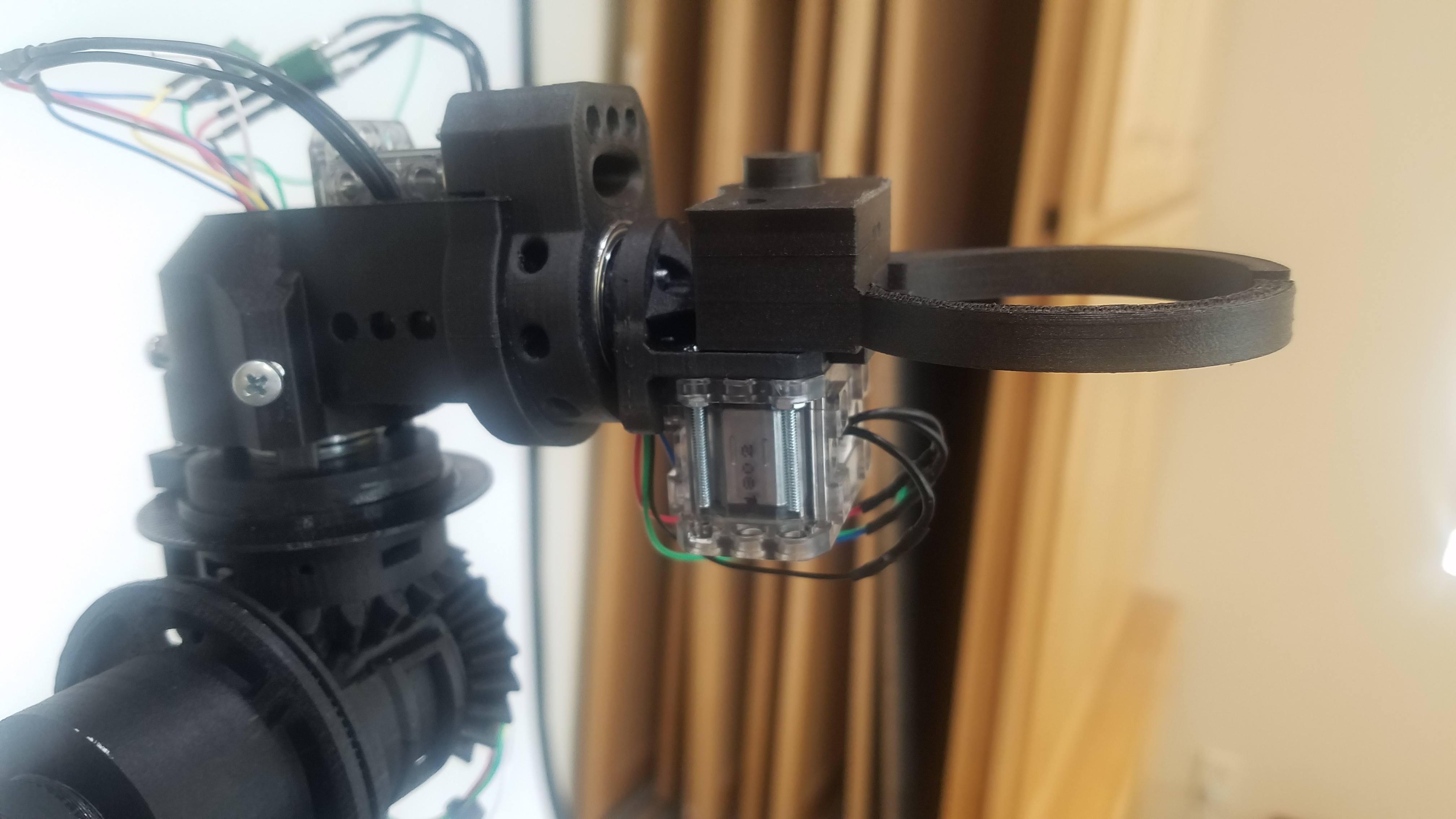

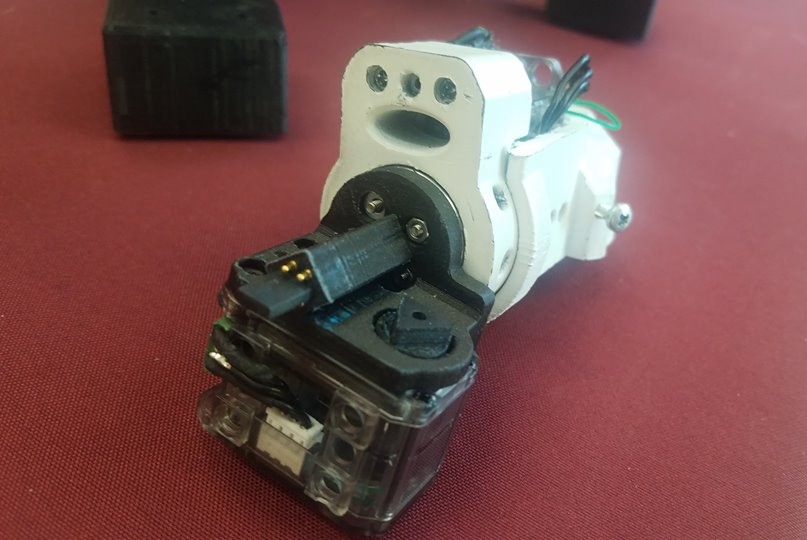

Dexter is an open source, 5+ axis robotic arm. Its parts are 3D printed in PLA and held together with carbon fiber strakes for reinforcement. It is driven by 5 NEMA-17 stepper motors, with harmonic drives on 3 of the axes. The steppers have no encoders and can slip (lose steps), so we built our own optical encoders and mounted them on the axes themselves. Each encoder uses 2 sensors placed 90 degrees out of phase, which turns the system into a quadrature encoder. Our software treats the two signals as the sine and cosine of the encoder phase, so it can interpolate between them and measure the position of each axis.
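To make the sine/cosine interpolation concrete, here is a minimal sketch of one common way to do it: take `atan2` of the two sensor signals to get the phase within one encoder cycle, then count wrap-arounds to track the absolute axis angle. This is not Dexter's actual firmware or gateware. The names `interpolate_phase`, `QuadratureTracker`, `cycles_per_rev` and the offset parameters are hypothetical, and the sketch assumes the readings are roughly equal in amplitude and sampled fast enough that the phase moves less than half a cycle between samples.

```python
import math


def interpolate_phase(sin_raw: float, cos_raw: float,
                      sin_offset: float = 0.0, cos_offset: float = 0.0) -> float:
    """Phase within one encoder cycle, in radians, wrapped into 0..2*pi.

    The offsets (hypothetical calibration values) remove each sensor's DC level
    so both signals swing around zero before taking atan2.
    """
    phase = math.atan2(sin_raw - sin_offset, cos_raw - cos_offset)
    return phase % (2 * math.pi)


class QuadratureTracker:
    """Accumulates phase across cycles so the reported axis angle is continuous."""

    def __init__(self, cycles_per_rev: int):
        # Hypothetical: number of full sine/cosine cycles per axis revolution.
        self.cycles_per_rev = cycles_per_rev
        self.turns = 0            # completed encoder cycles (signed)
        self.last_phase = None    # phase from the previous sample

    def update(self, sin_raw: float, cos_raw: float) -> float:
        """Feed one pair of readings; return the axis angle in degrees."""
        phase = interpolate_phase(sin_raw, cos_raw)
        if self.last_phase is not None:
            delta = phase - self.last_phase
            # A jump of more than half a cycle means the phase wrapped around.
            if delta > math.pi:        # wrapped backwards past 0
                self.turns -= 1
            elif delta < -math.pi:     # wrapped forwards past 2*pi
                self.turns += 1
        self.last_phase = phase
        cycles = self.turns + phase / (2 * math.pi)
        return 360.0 * cycles / self.cycles_per_rev
```

Feeding the tracker one full cycle of readings, `(0, 1)`, `(1, 0)`, `(0, -1)`, `(-1, 0)`, `(0, 1)` with `cycles_per_rev=1`, reports roughly 0, 90, 180, 270 and 360 degrees. Which rotation direction counts as positive depends on how the two sensors are physically placed.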

We use an FPGA to process the encoder data in real time. With our gateware, we can take 5 million measurements per second, giving a 200 nanosecond control loop for the motors. This lets the motors react as soon as the optical encoders detect a change. One of our control modes, known as "follow me," lets someone physically guide Dexter by touch without backdriving the harmonic drives (backdriving wears them out and eventually weakens the integrity of the transmission).
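The 200 nanosecond figure follows directly from the sample rate: 1 s / 5,000,000 samples = 200 ns per sample. The sketch below shows that arithmetic and one simplified way a follow-me tick could work: when someone pushes the arm, the axis encoder reads slightly away from where the motor was told to be, and instead of letting the user backdrive the harmonic drive, the controller steps the motor toward the measured position. This is a hypothetical model for illustration, not Dexter's gateware; `follow_me_step`, `deadband_deg` and `step_deg` are assumed names and values.

```python
SAMPLE_RATE_HZ = 5_000_000                 # measurements per second (from the text)
LOOP_PERIOD_NS = 1e9 / SAMPLE_RATE_HZ      # 200 ns per control-loop iteration


def follow_me_step(commanded_deg: float, measured_deg: float,
                   deadband_deg: float = 0.05, step_deg: float = 0.01) -> float:
    """One tick of a simplified follow-me loop (hypothetical model).

    commanded_deg: where the stepper was last told to put the axis.
    measured_deg:  where the axis encoder says the axis actually is.
    Returns the new commanded angle.
    """
    error = measured_deg - commanded_deg
    if abs(error) <= deadband_deg:
        return commanded_deg               # within sensor noise: hold position
    # Yield to the push by moving one step toward the measured position,
    # never overshooting it.
    step = min(step_deg, abs(error))
    return commanded_deg + step if error > 0 else commanded_deg - step
```

For example, if the encoder keeps reading 0.2 degrees while the motor is commanded to 0, repeated calls walk the command toward 0.2 until the remaining error falls inside the deadband. The deadband keeps encoder noise from making the arm creep when nobody is touching it.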

We wanted Dexter to be easy to program for someone who is new to robotics and has only a basic understanding of code, so one of our team members created the Dexter Development Environment (DDE). DDE uses a modified version of JavaScript, chosen for its accessibility, and comes with documentation on how to program Dexter.

DDE communicates with Dexter over a socket connection, but DDE isn't the only software that has used it. A member of the Dexter community has developed a Unity library for Dexter. Using follow me mode, you can connect to Dexter and move it around in virtual reality to interact with objects. Touching a virtual object provides haptic feedback through the physical Dexter. We think this is really cool because it opens the door for Dexter to potentially be used as a game controller.
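The write-up does not say how the Unity library turns a virtual touch into feedback. As a hedged illustration only, the sketch below uses a standard "virtual wall" model on a single joint: while follow me is active, the target angle is clamped at the surface of the virtual object, so the arm stops yielding and the user feels resistance, and a spring-like resistance value grows with how far the measured angle presses into the object. `VirtualWall`, `stiffness` and the one-joint simplification are all assumptions, not the community library's API.

```python
from dataclasses import dataclass


@dataclass
class VirtualWall:
    """A virtual object boundary on one joint, in degrees (hypothetical model)."""
    position_deg: float
    blocks_positive: bool = True   # True: the object occupies angles above position_deg
    stiffness: float = 2.0         # hypothetical resistance per degree of penetration

    def penetration(self, angle_deg: float) -> float:
        """How far an angle lies inside the virtual object (0 if outside)."""
        if self.blocks_positive:
            depth = angle_deg - self.position_deg
        else:
            depth = self.position_deg - angle_deg
        return max(0.0, depth)

    def clamp(self, target_deg: float) -> float:
        """Keep a follow-me target outside the object so the arm stops yielding."""
        if self.penetration(target_deg) == 0.0:
            return target_deg
        return self.position_deg

    def resistance(self, measured_deg: float) -> float:
        """Spring-like resistance proportional to penetration depth."""
        return self.stiffness * self.penetration(measured_deg)
```

With a wall at 30 degrees, a follow-me target of 20 degrees passes through unchanged, a target of 35 degrees is held at 30, and a measured angle of 32 degrees yields a resistance of 4.0 with the default stiffness.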

Haddington Dynamics

Anthrobotics

Kevin Harrington

AngelLM

Patrick Joyce

I am interested in building one. It can be the first version or the HD.

I saw a kit mentioned, but it seems it is not available anymore, at least not on https://www.hdrobotic.com/shop, where prices start at 13,000 (ouch) and no kits are listed.

I am wondering: has anybody built or assembled one using just the info on GitHub/Thingiverse? Does it work, are all the parts listed, and is the BOM missing anything?

I would like to avoid ordering or making parts before getting some feedback on whether it is feasible to build. Thanks!