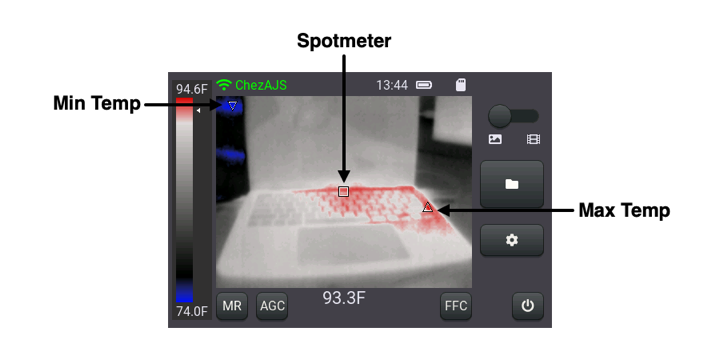

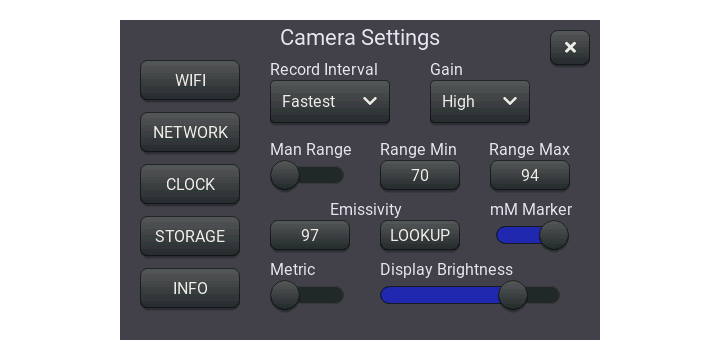

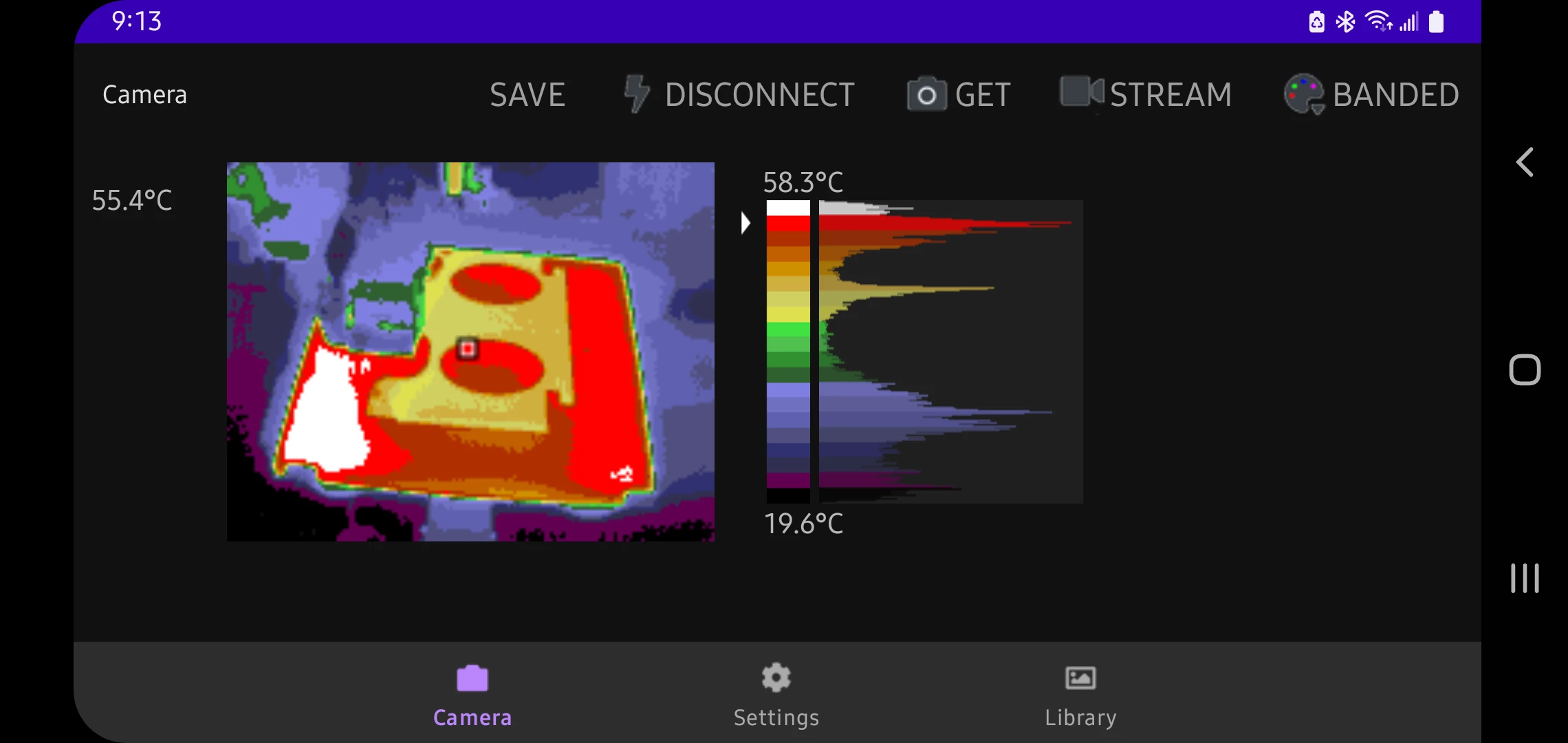

My original long-term goal was to build a capable thermal imaging camera on the Beaglebone Black with a 7" LCD cape, one that would match some of the features of high-end commercial products. However, once I started reading the documentation and playing with the various demo codebases, it soon became obvious that I'd need a simpler platform to learn how to use the Lepton module. This is the story of my thermal imaging camera journey.

I read about a lot of other great projects online to get going. Pure Engineering and GroupGets are to be commended for making these devices available to makers. Max Ritter's DIY Thermocam and Damien Walsh's Leptonic are really well done and instructive. Both Max and Damien were very gracious in answering my questions when I was starting out.

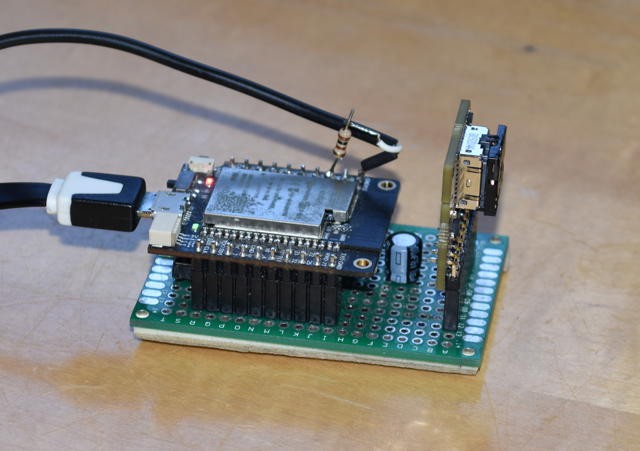

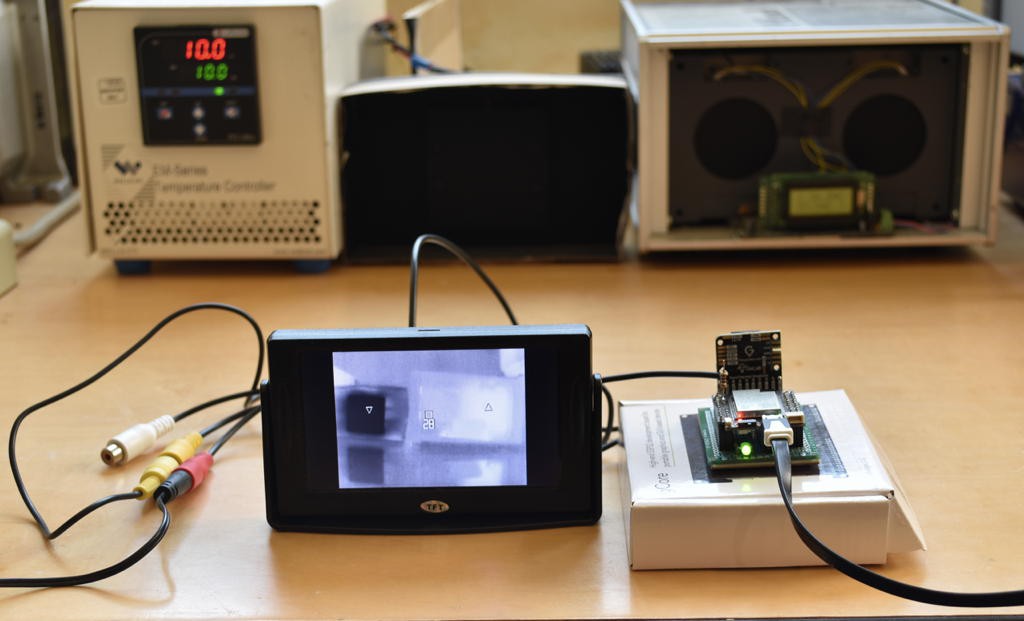

I started with a Teensy 3.2-based prototype using a Lepton breakout board, then moved on to the Raspberry Pi and the exciting world of PRUs in the Beaglebone SBCs, before finally settling on the ESP32 for my final design (actually, designs).

Dan Julio

Hello Dan. Having limited knowledge about sockets, I have a general question: what is the reason for using ZeroMQ? From the little research I've done so far, the message size for even one frame would be way too high... am I missing something?