The lion kingdom started getting ideas to make a tracking camera in July 2014. Quad copter startups were booming & tracking subjects by GPS suddenly caught on, even though it was just a rehash of the worthless results hobbyists were getting in 2008. The lion kingdom figured it could improve on it with machine vision tracking fiducial markers.

It was terrible. You can't make a video wearing all those markers & the picture quality wasn't good enough to reliably detect the markers. To this day, hobbyist tracking cams are all still using chroma keying & LED's. The lion kingdom would do better.

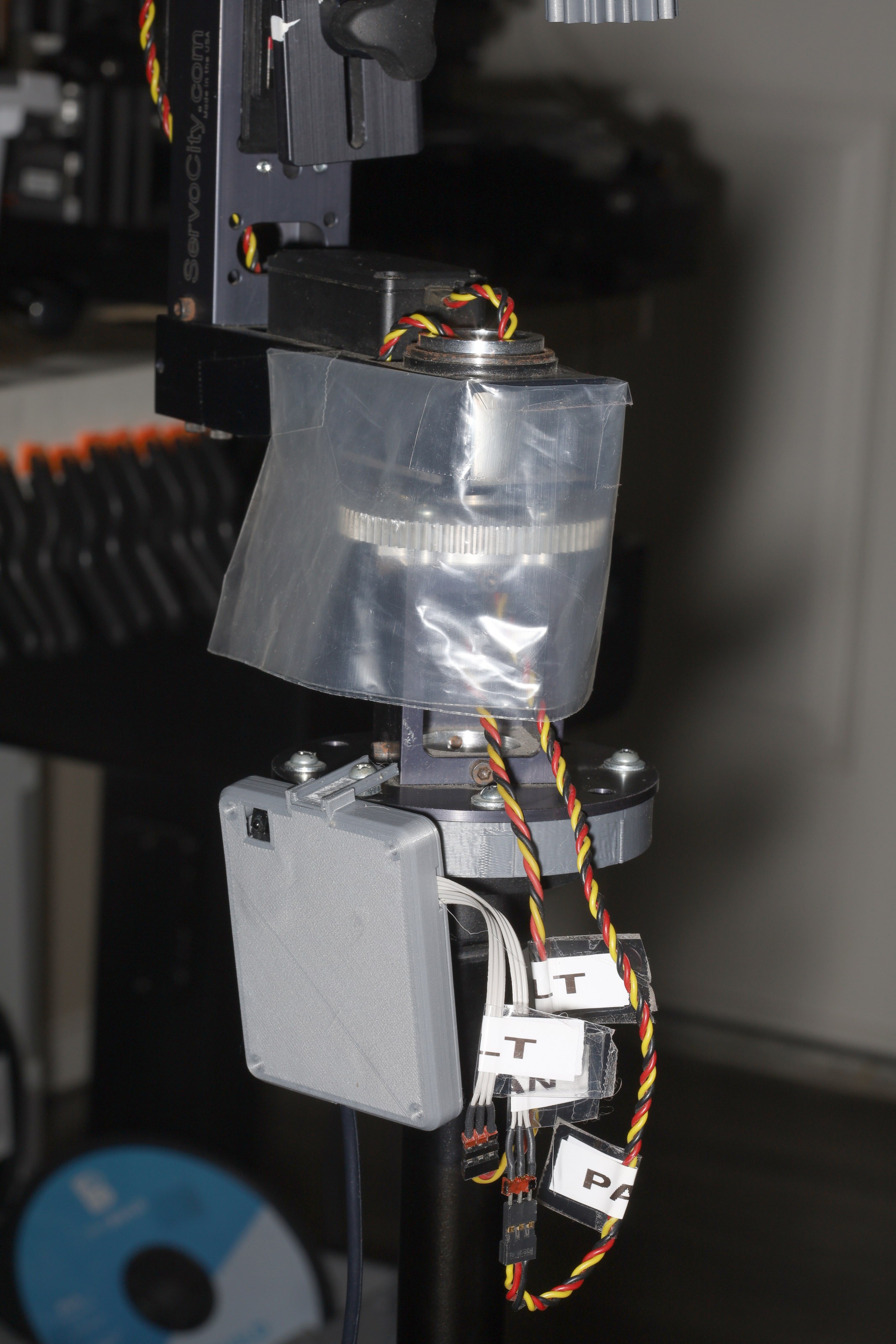

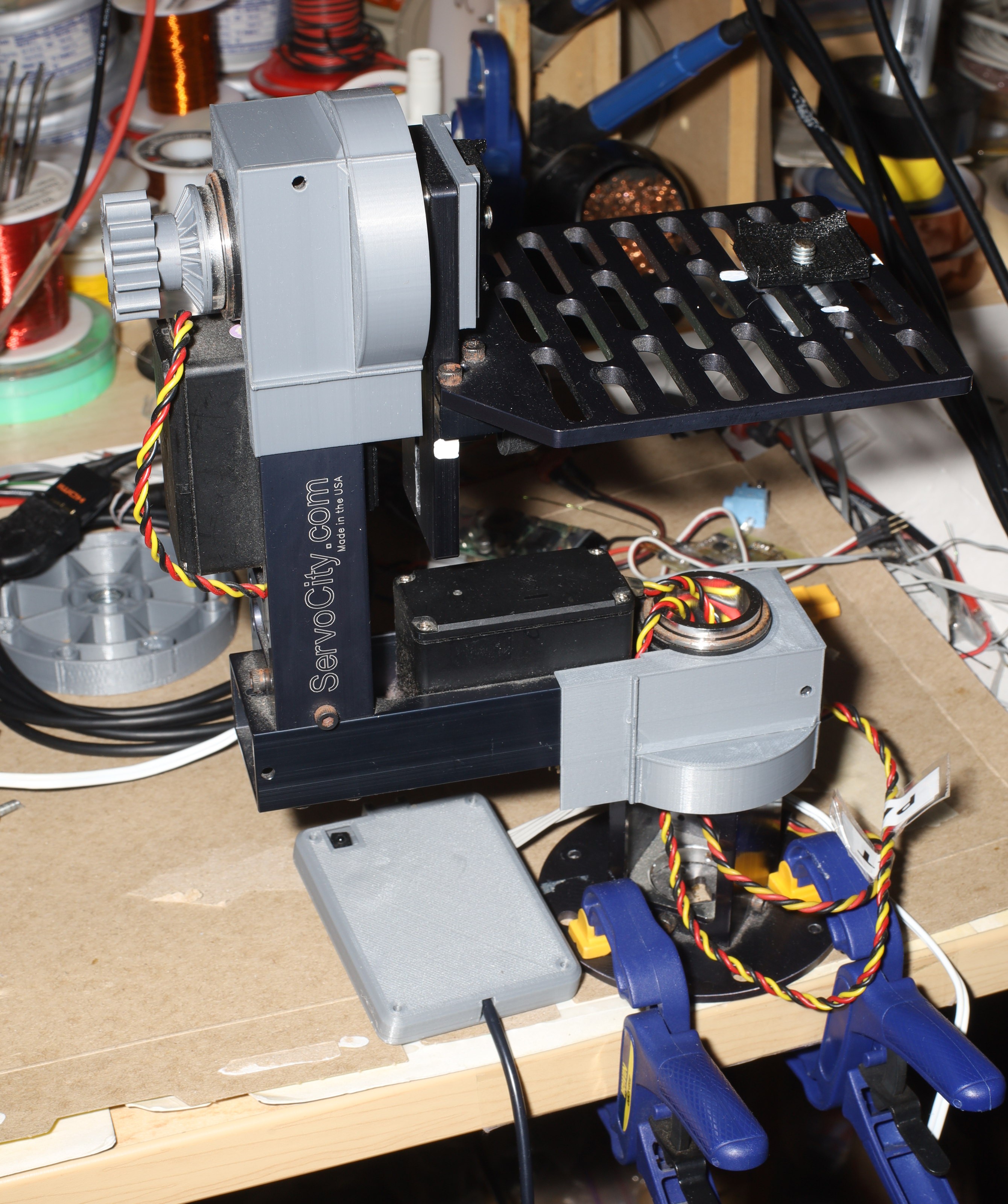

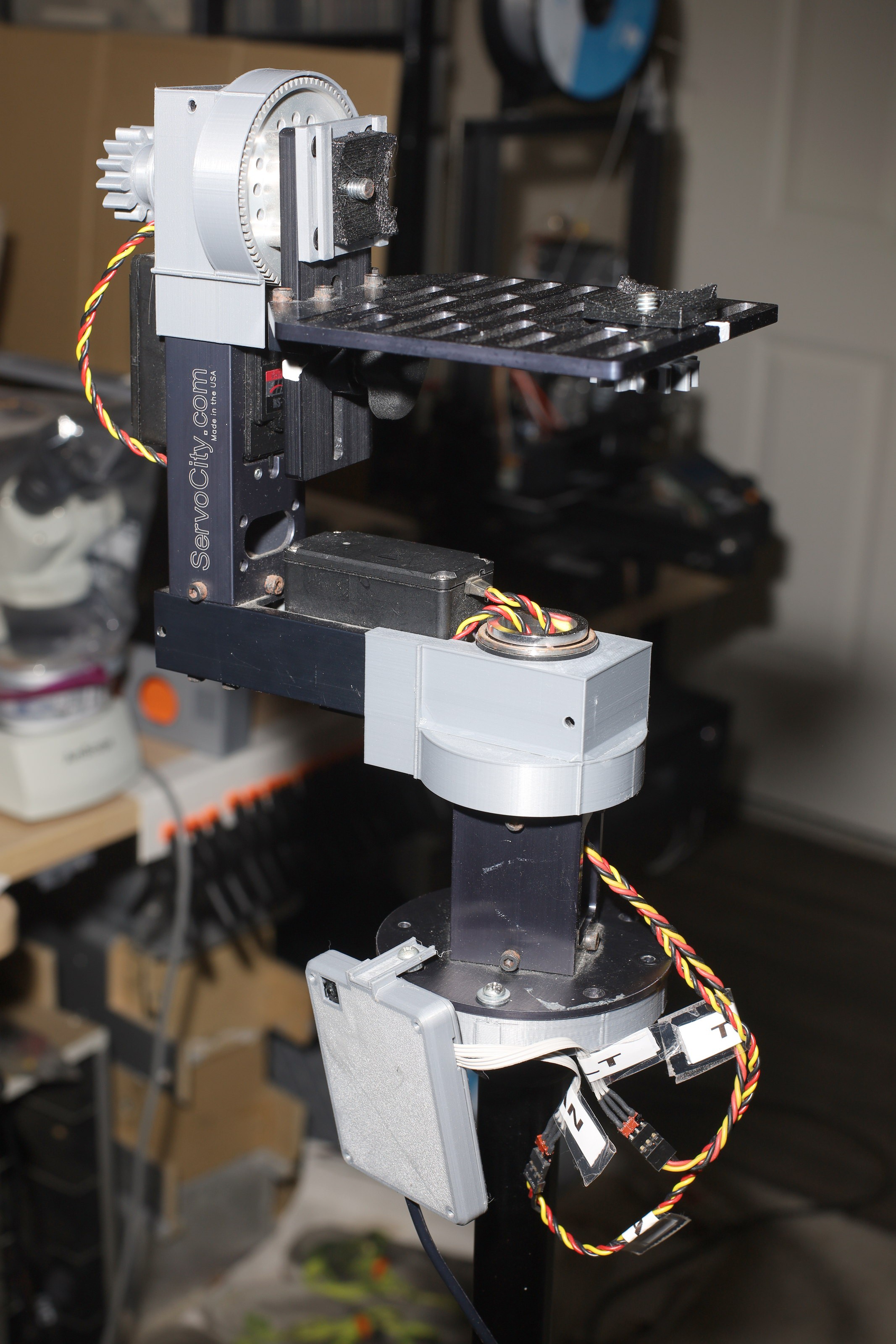

The next step occurred in Aug 2016 with LIDAR.

That had problems with reflections in windows & couldn't detect tilt. It could only estimate tilt by the distance of the subject from the camera.

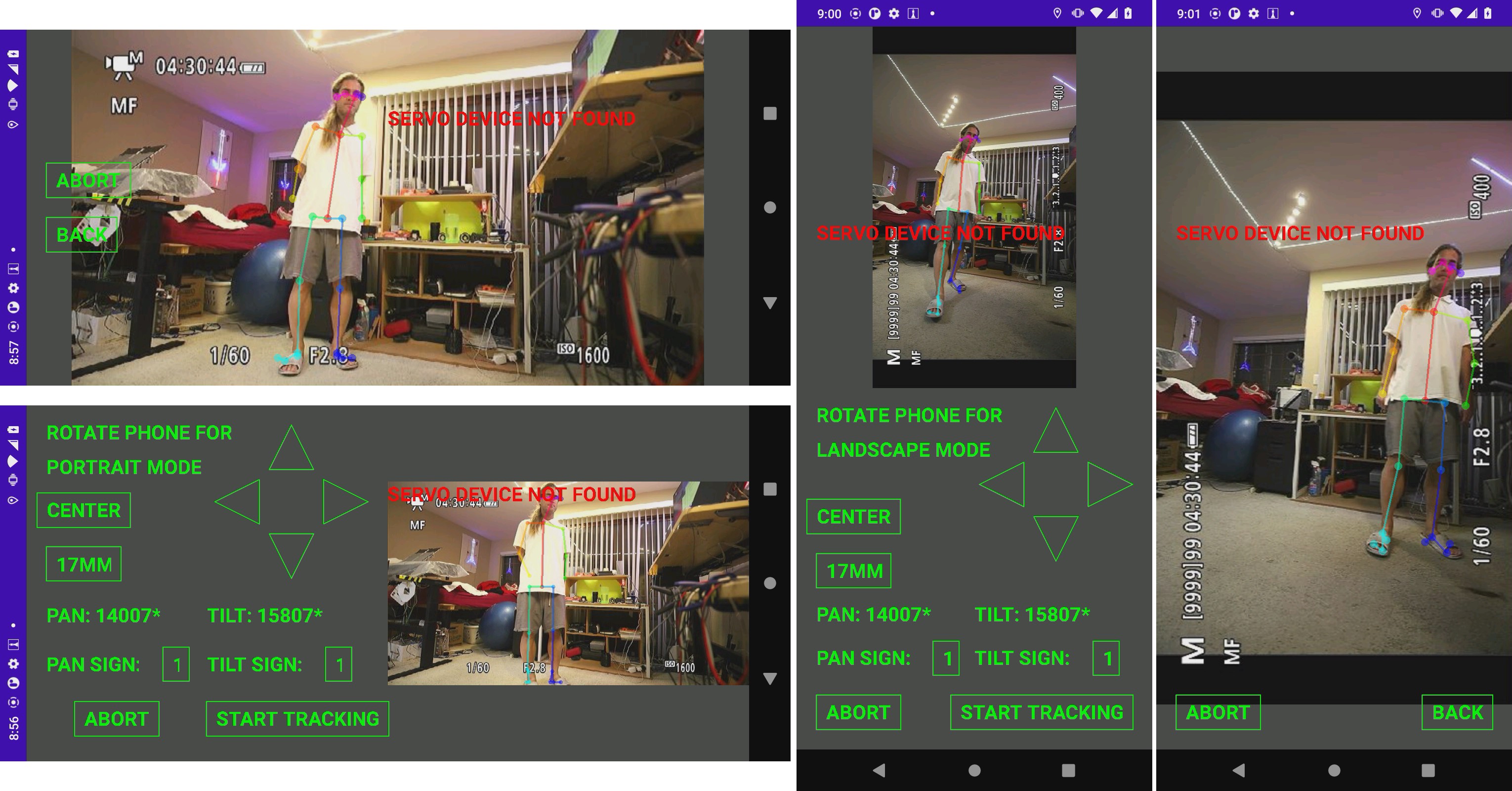

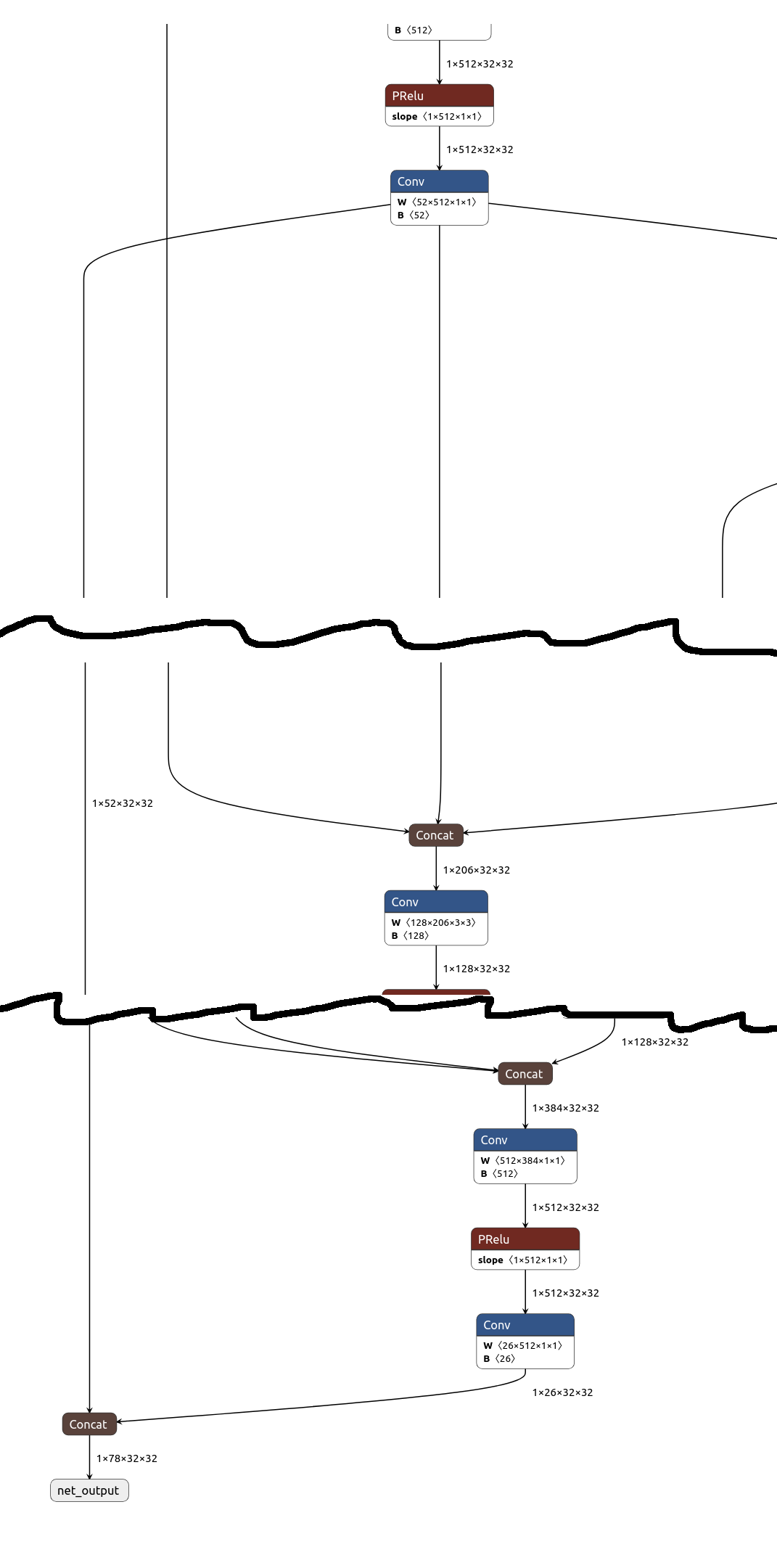

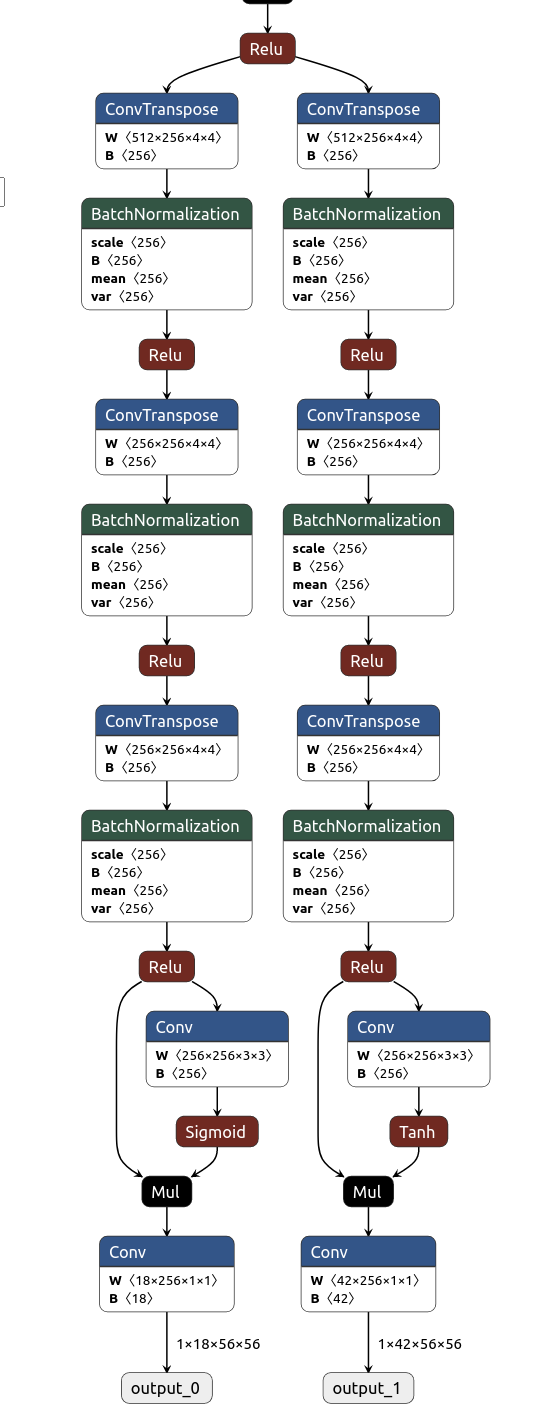

2018 saw an explosion in CNN's for subject tracking. The key revelation was openpose. That theoretically allowed a camera to track a whole body or focus in on a head, but it didn't allow differentiating bodies. The combination of openpose & a 360 camera finally allowed a subject to be tracked in 2 dimensions, in 2019.

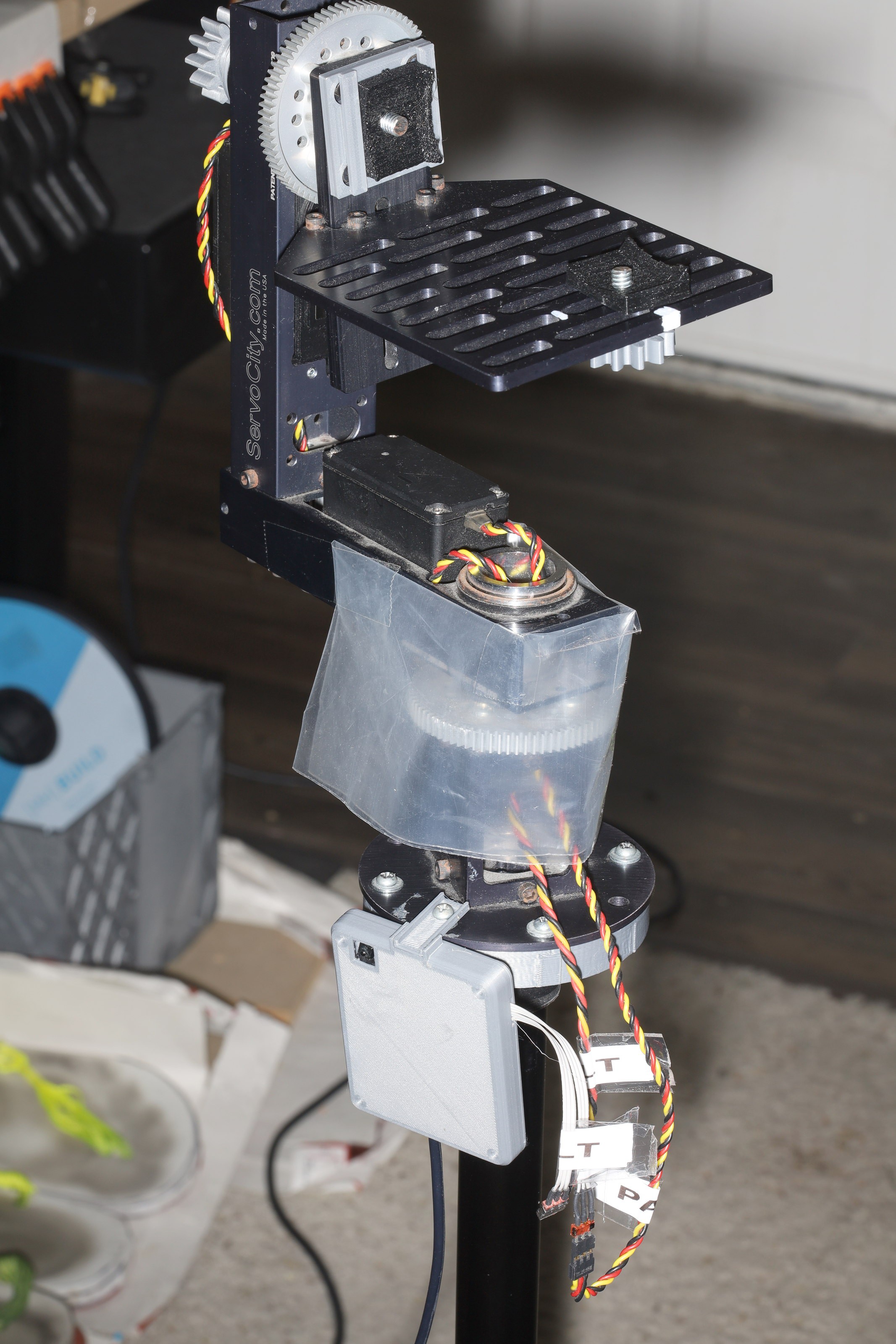

The problem was a 360 camera with live output was expensive & cumbersome to get working. The live video from the 360 camera drove the recording camera & had a long lag. Cameras which recorded high quality video didn't have a wide enough field of view or fast enough autofocus to track anything. The tracking camera was offset from the recording camera, creating parallax errors.

Tracking would have to come from the same camera that recorded the video. That would require a wide angle lens, very fast autofocus, & very high sensitivity. It took another year for cameras to do the job for a reasonable price.

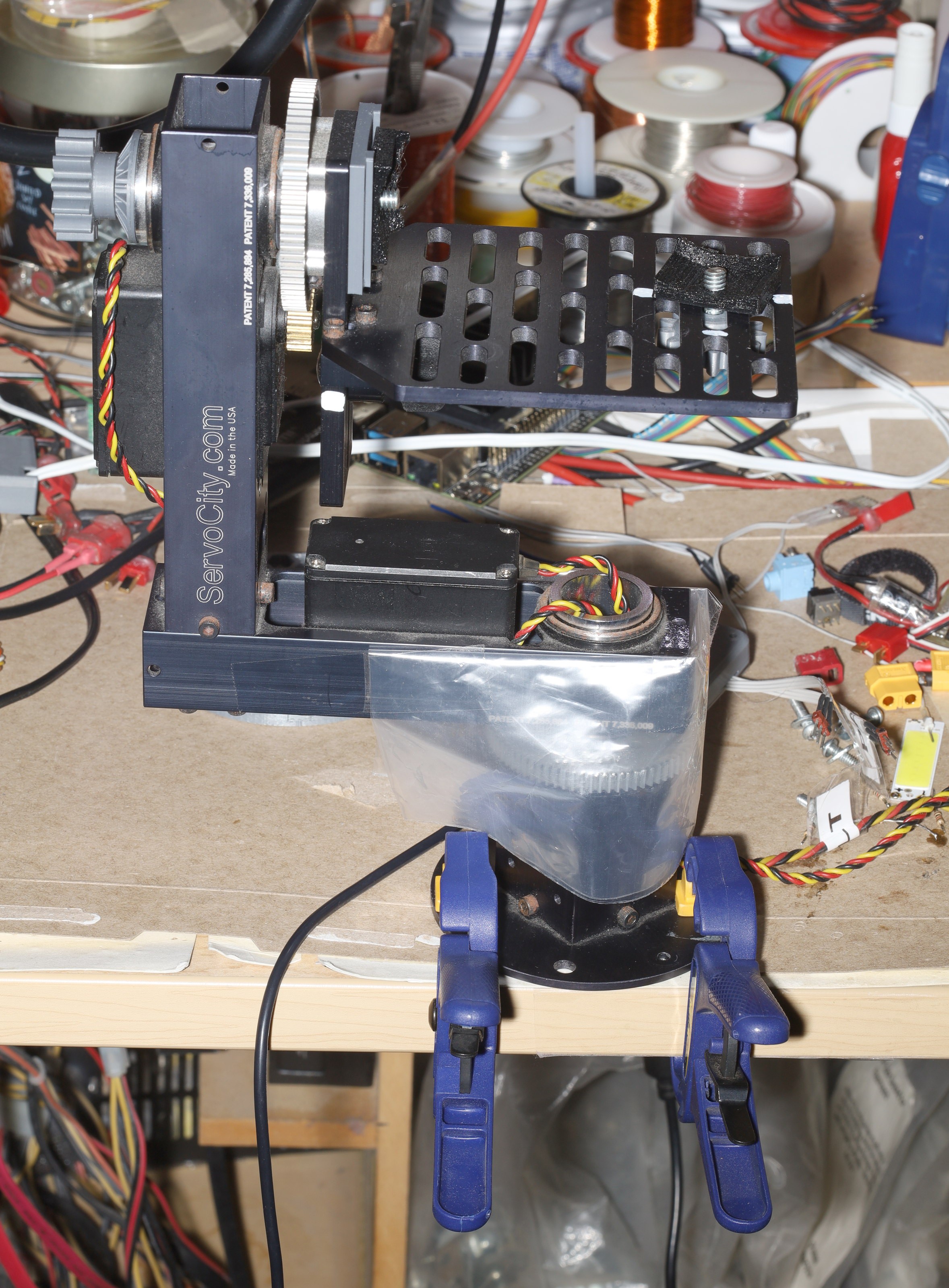

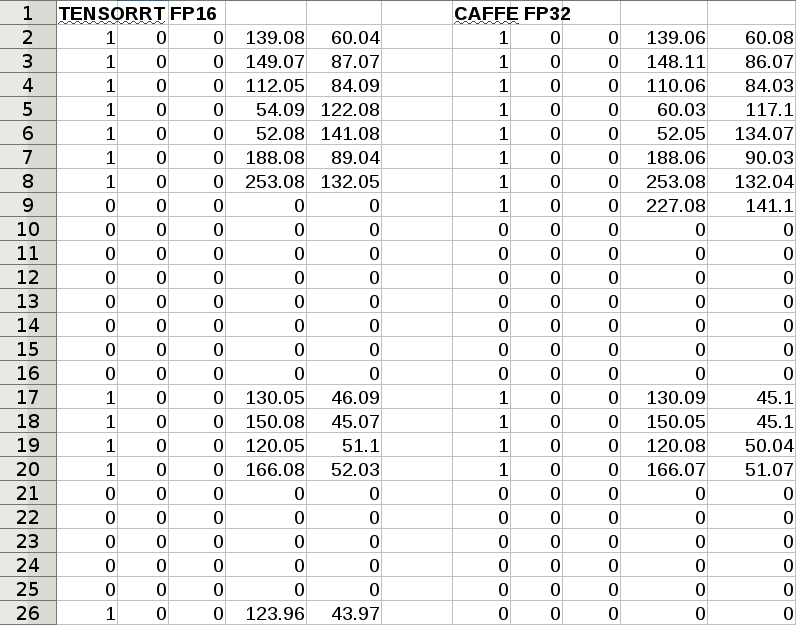

The EOS RP allowed wide angle lenses & had much faster autofocus than previous DSLRs. Together with a faster laptop, the tracking system was manely doing the job. Openpose couldn't detect the boundaries of the head, only the eye positions. That made it point low. A face tracker running in parallel would refine the tracking, but enough confusing power would cost too much.

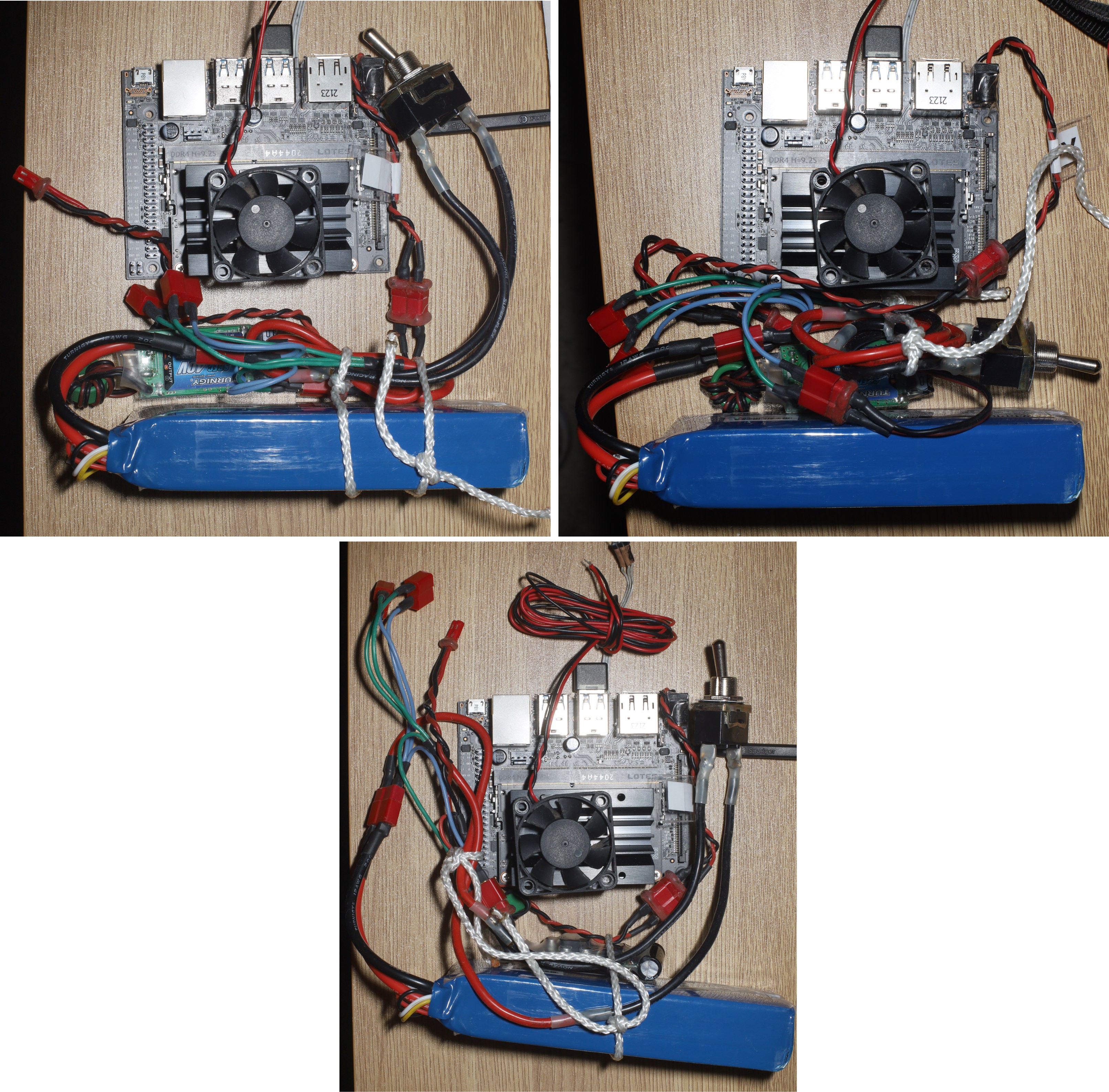

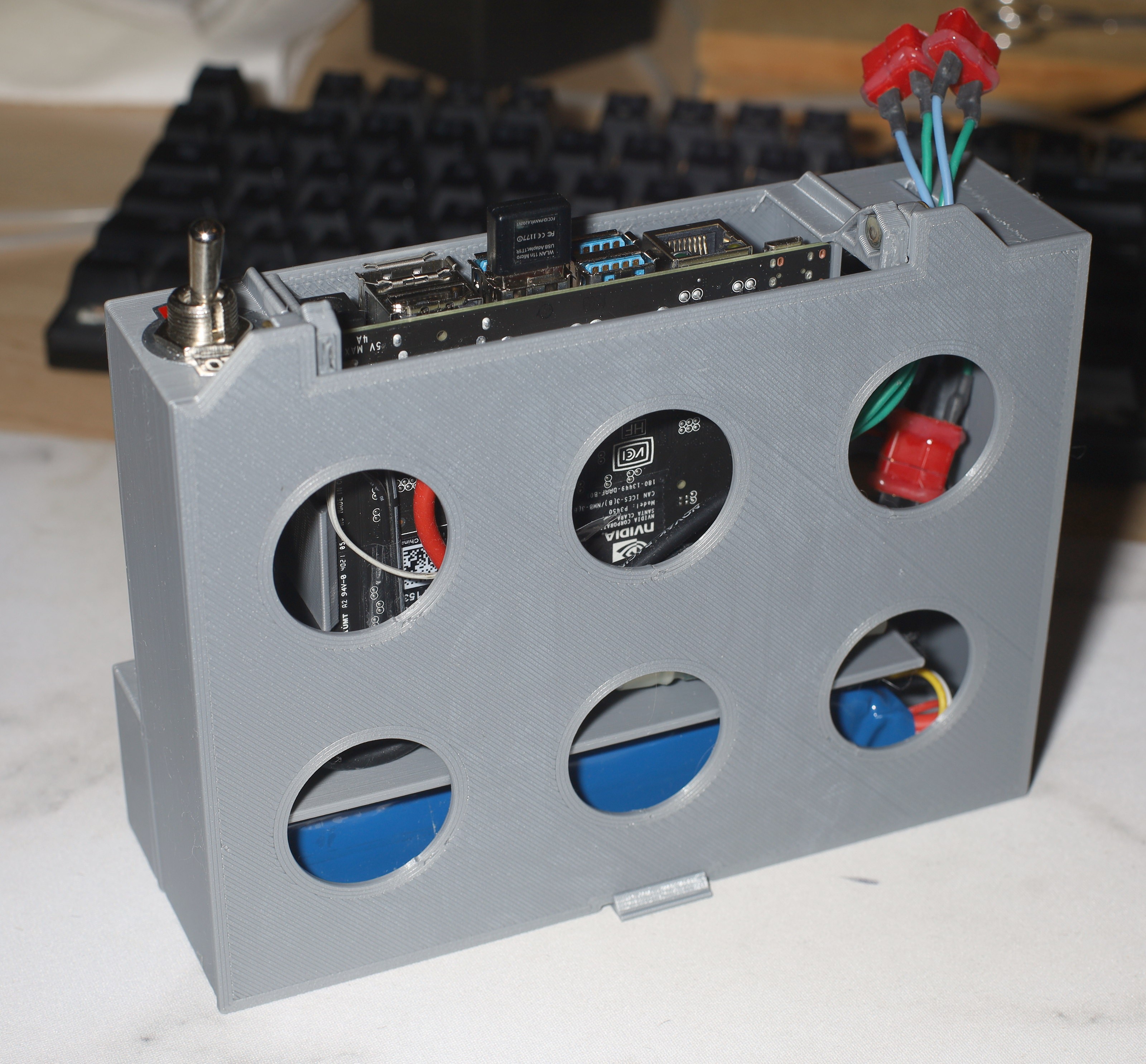

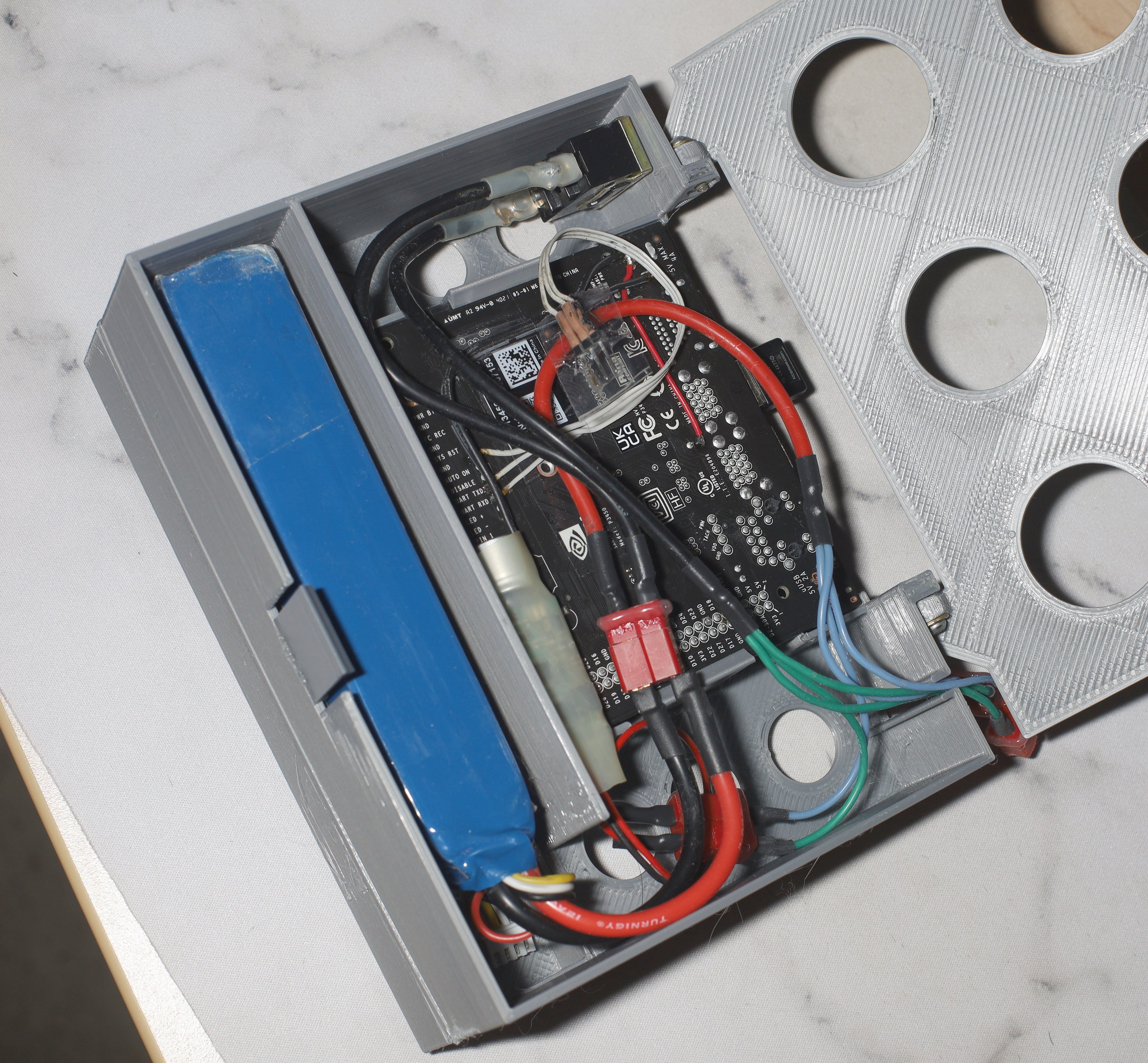

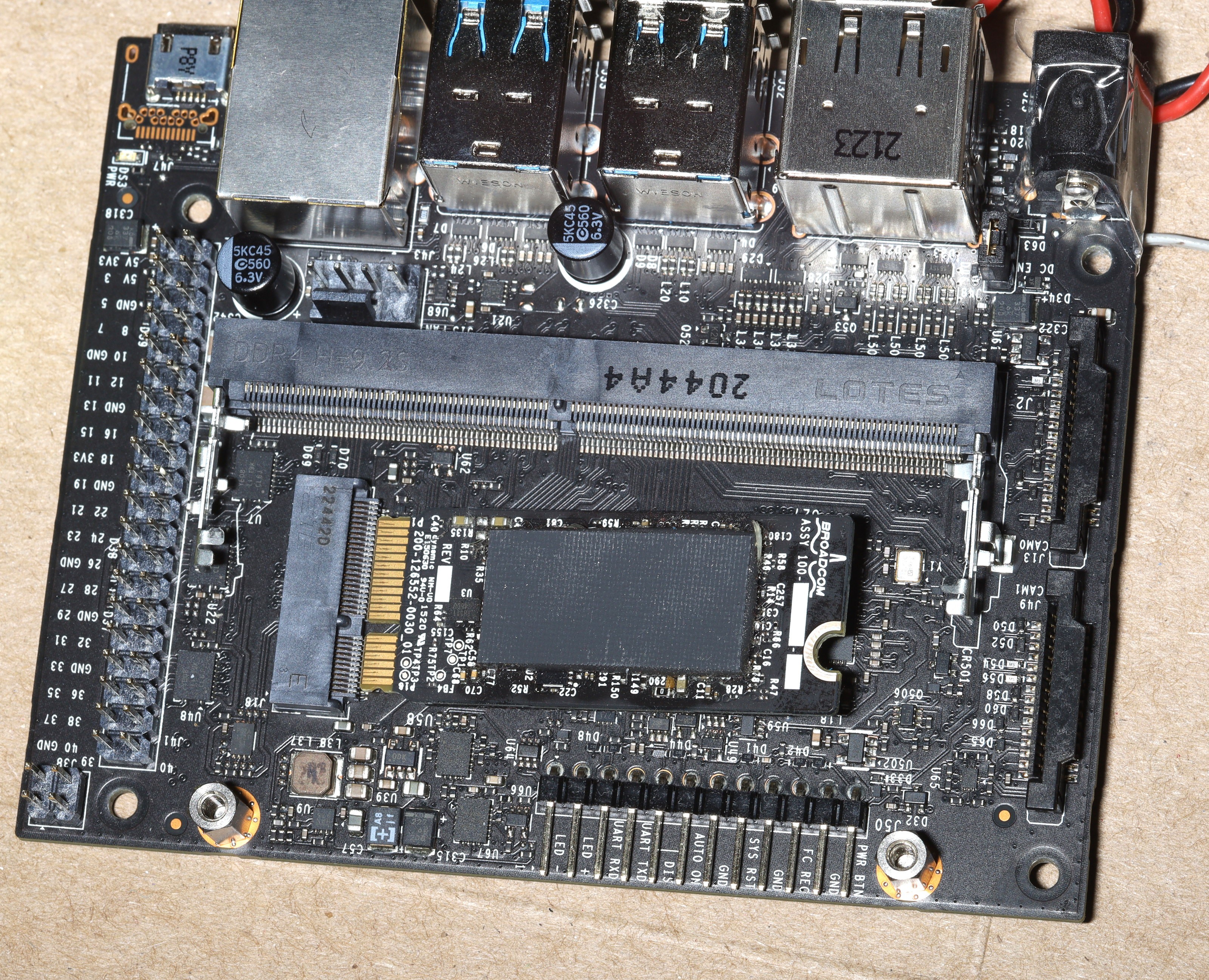

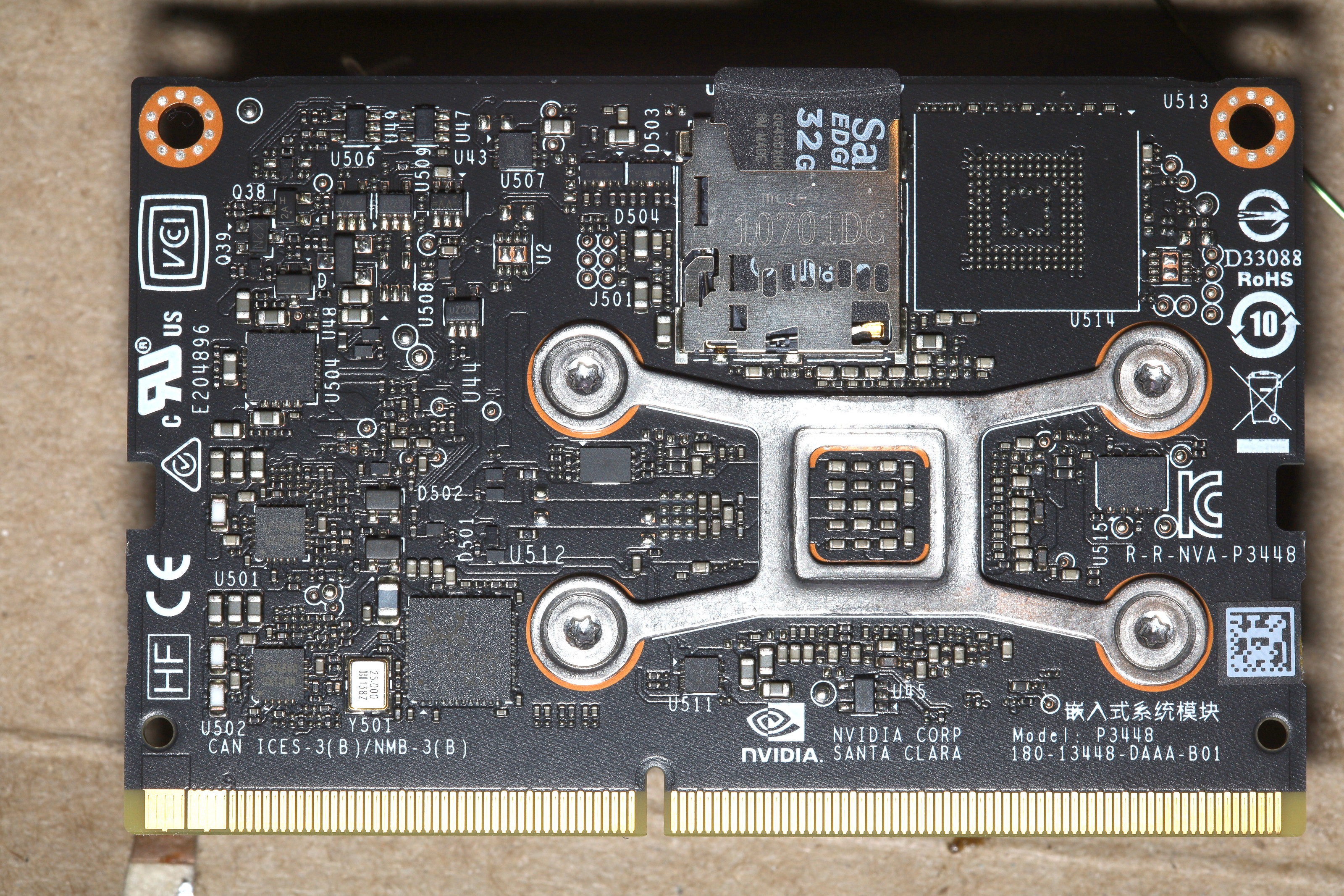

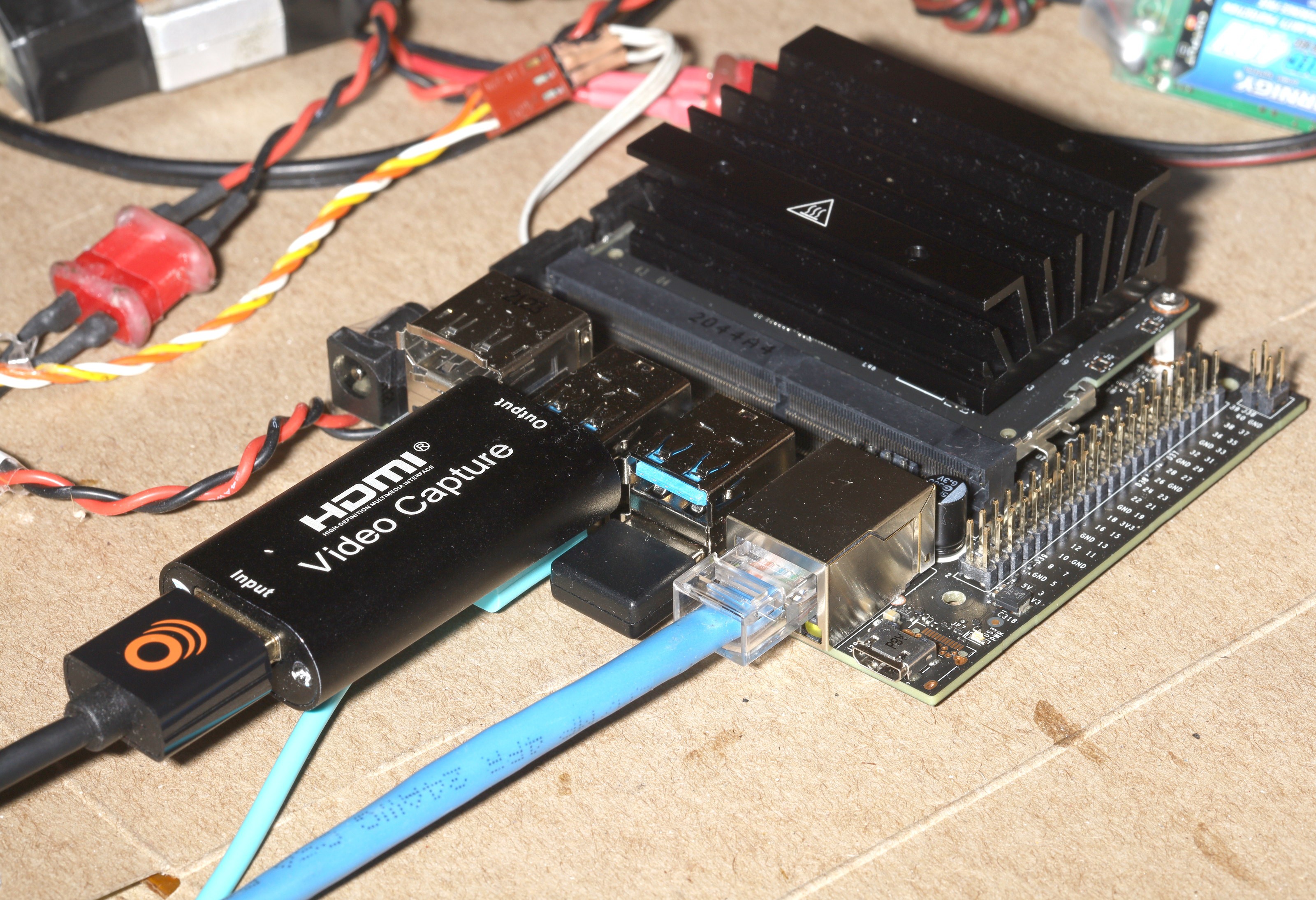

The key requirement of openpose seemed to be 8GB of video RAM. The laptop had 4GB of video RAM, required manes voltage & ice packs to run at full speed, so it was far from portable.

The next step would be tracking a single face in a crowd of other faces.

lion mclionhead

lion mclionhead

Robert Rudolph did the same type of thing for his sentry gun projects, back in 2010/2011. https://www.youtube.com/watch?v=8ekeP3Y-DcY

I think his open-source software is still available via links on his YouTube channel. https://www.youtube.com/@SentryGun53/videos