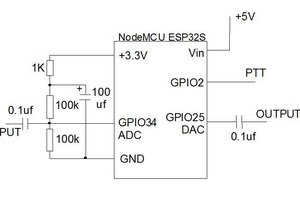

Generalized cross-correlation with phase transform (GCC-PHAT) is used here to determine the time delay between a signal reaching one microphone in an array and the same signal reaching another microphone in that array. In this case, the first and fourth microphones in the microphone array of a PlayStation Eye are used; these microphones are 6 cm apart. Two such arrays are used: a line representing the incident sound wave at each array is plotted in the terminal, and distances in the x and y directions are reported. The x-axis is defined as the line on which the cameras sit, with the positive direction toward the right array and zero halfway between the arrays. The y-axis is defined such that the sound waves travel in the negative y direction, with its zero point coincident with the zero point of the x-axis. During testing, the arrays were spaced three to five feet apart.
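To make the delay estimate concrete, here is a minimal sketch of GCC-PHAT in C++. It is not the project's code: it swaps FFTW3 for a naive O(N²) DFT so that it stays self-contained, and the names `dft` and `gcc_phat_lag` are made up for illustration. It assumes both inputs have the same length and that `max_lag` is smaller than that length. Assuming a speed of sound of about 343 m/s, a 6 cm spacing sampled at 96,000 frames/second limits the physically possible lag to roughly 17 samples, so the peak search can be restricted to that window.

```cpp
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cd = std::complex<double>;

// Naive O(N^2) DFT, used only to keep the sketch dependency-free.
// sign = -1 gives the forward transform, +1 the (unscaled) inverse.
static std::vector<cd> dft(const std::vector<cd>& in, int sign) {
    const std::size_t n = in.size();
    const double pi = std::acos(-1.0);
    std::vector<cd> out(n);
    for (std::size_t k = 0; k < n; ++k) {
        cd sum(0.0, 0.0);
        for (std::size_t t = 0; t < n; ++t) {
            const double ang = sign * 2.0 * pi * double(k) * double(t) / double(n);
            sum += in[t] * std::polar(1.0, ang);
        }
        out[k] = sum;
    }
    return out;
}

// Returns the lag in samples by which `b` trails `a` (negative if `b` leads).
// Assumes a.size() == b.size() and 0 <= max_lag < a.size().
int gcc_phat_lag(const std::vector<double>& a, const std::vector<double>& b, int max_lag) {
    const int n = static_cast<int>(a.size());
    const std::vector<cd> A = dft(std::vector<cd>(a.begin(), a.end()), -1);
    const std::vector<cd> B = dft(std::vector<cd>(b.begin(), b.end()), -1);

    // Cross-power spectrum with PHAT weighting: keep the phase, drop the magnitude.
    std::vector<cd> G(a.size());
    for (std::size_t k = 0; k < G.size(); ++k) {
        const cd cross = B[k] * std::conj(A[k]);
        const double mag = std::abs(cross);
        G[k] = (mag > 1e-12) ? cross / mag : cd(0.0, 0.0);
    }

    // Back to the lag domain; negative lags wrap around to the end of the array.
    const std::vector<cd> r = dft(G, +1);
    int best_lag = 0;
    double best_val = -1e300;
    for (int lag = -max_lag; lag <= max_lag; ++lag) {
        const std::size_t idx = static_cast<std::size_t>((lag + n) % n);
        if (r[idx].real() > best_val) {
            best_val = r[idx].real();
            best_lag = lag;
        }
    }
    return best_lag;
}
```

Dividing the returned lag by the sample rate gives the delay in seconds. A real implementation would use an FFT for speed and might interpolate around the peak for sub-sample resolution.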

At the start of the program, the user chooses which audio capture device to use for the left array and which to use for the right array. Next, the user is asked to enter the distance between the arrays in feet. At this point, control of the terminal is handed to ncurses for plotting. Audio is sampled at 96,000 frames/second, with a sample size of 16 bits for each of the four channels. The plot is updated only when both arrays report a reasonable angle of arrival, because GCC-PHAT can give nonsensical angles. These erroneous angles are detected when taking the sine of the angle returned by GCC-PHAT: if the sine value fails a "!isnan()" check, the angle is ignored.
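The validity check and the geometry can be sketched as follows. This is an illustration under stated assumptions rather than the project's actual code: the names `arrival_angle`, `locate`, and `Point` are hypothetical, the angle is assumed to be measured from the y-axis (broadside) with positive angles pointing toward positive x, the speed of sound is taken as 343 m/s, and the NaN is assumed to arise from `asin` receiving an argument outside [-1, 1]. The arrays sit at x = -D/2 and x = +D/2, and the reported position is the intersection of the two arrival lines.

```cpp
#include <cmath>

struct Point {
    double x;
    double y;
};

// Angle of arrival in radians, measured from the y-axis (broadside).
// Returns NaN when the delay is larger than the microphone spacing allows,
// because asin() is undefined outside [-1, 1].
double arrival_angle(double delay_s, double mic_spacing_m, double speed_of_sound = 343.0) {
    return std::asin(speed_of_sound * delay_s / mic_spacing_m);
}

// Intersects the two arrival lines. The left array is at x = -D/2 and the
// right array at x = +D/2, where D is array_spacing. The result is in the
// same unit as array_spacing (feet in the write-up above).
// Returns false when either angle is NaN or the lines do not meet in front
// of the arrays, in which case `out` is left untouched.
bool locate(double theta_left, double theta_right, double array_spacing, Point& out) {
    if (std::isnan(theta_left) || std::isnan(theta_right)) {
        return false;  // nonsensical GCC-PHAT result: skip this update
    }
    const double tl = std::tan(theta_left);
    const double tr = std::tan(theta_right);
    const double denom = tl - tr;
    if (std::fabs(denom) < 1e-9) {
        return false;  // (nearly) parallel lines: no usable intersection
    }
    const double y = array_spacing / denom;
    if (y <= 0.0) {
        return false;  // intersection falls behind the arrays
    }
    out.x = -array_spacing / 2.0 + y * tl;
    out.y = y;
    return true;
}
```

A caller would redraw the plot only when `locate` returns true, which mirrors the rule that both arrays must report a usable angle.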

The code is available as a zipped CodeLite project and as separate files in the files section.

There are print statements commented out throughout the code, and these may be uncommented for debugging. Note that the ncurses functionality should be disabled if printing to stdout is desired for debugging.

Thanks Chuck Wooters for the code on your GitHub showing the usage of FFTW3 for GCC-PHAT.

Thanks David E. Narváez for the code on your blog showing the usage of FFTW3 for cross correlation, and how to rotate the output array so that the zero-lag element appears in the center.

Thanks Xavier Anguera for the page showing the math of the GCC-PHAT.

Thanks Jeff Tranter for the article in Linux Journal showing the procedure for capturing audio using alsa.

Please let me know if you find errors/bugs or if you feel that I have not attributed usage of your prior art properly. I intend no infringement, and will cite you or remove this project.

Orlando Hoilett

Electronoob

I want to build a large microphone array, say 64 microphones. I am thinking of connecting 16 PlayStation Eyes to a Linux machine. Do you think it is possible to capture all the audio streams?