Hi everyone,

We took some time to measure our power use now that we have neural inference (real-time object detection, in this case) running directly from the image sensors on the Myriad X. This was inspired by a great article by Alasdair Allan, here, and we use his charts below for comparison.

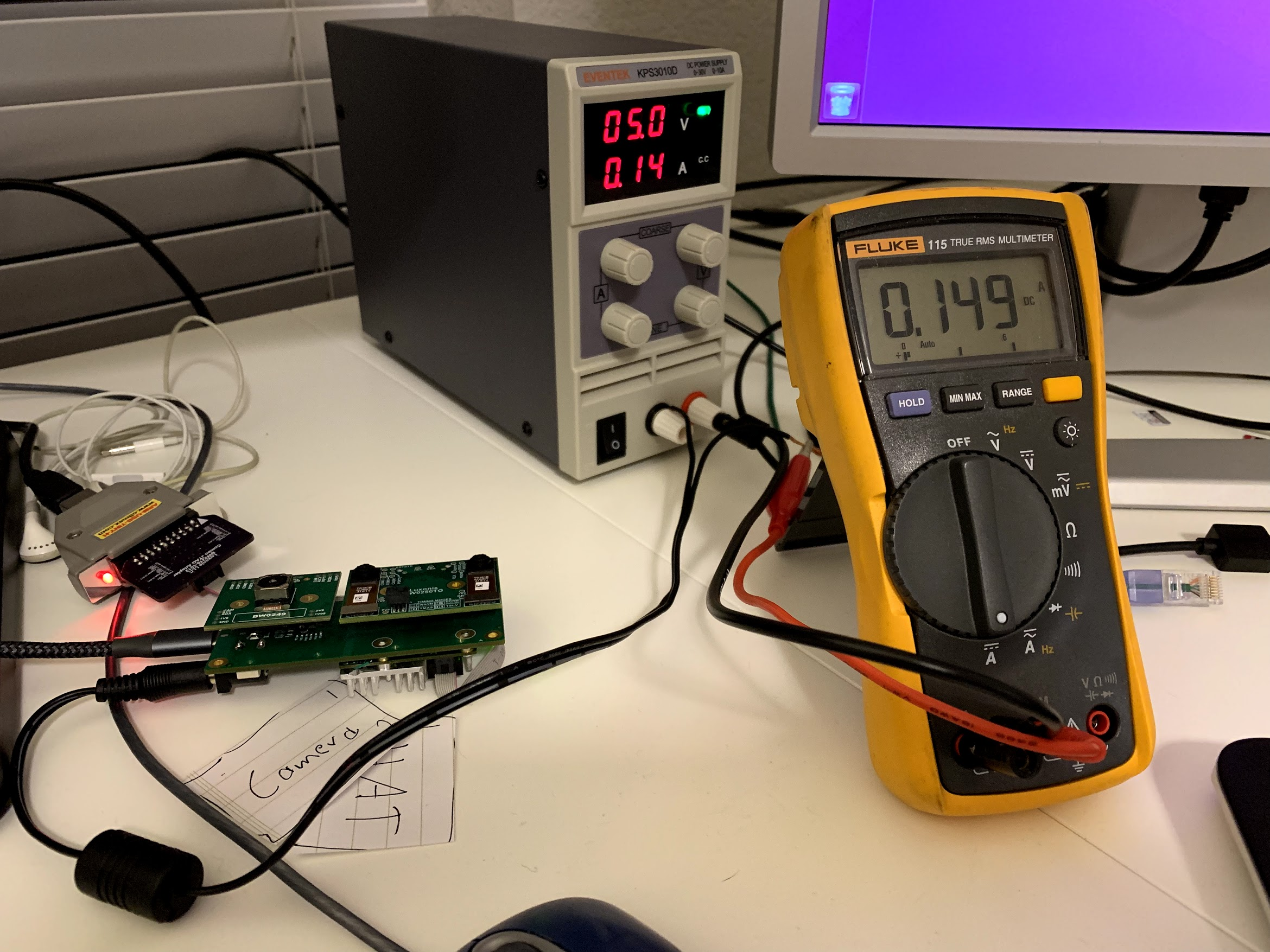

Idle Power:

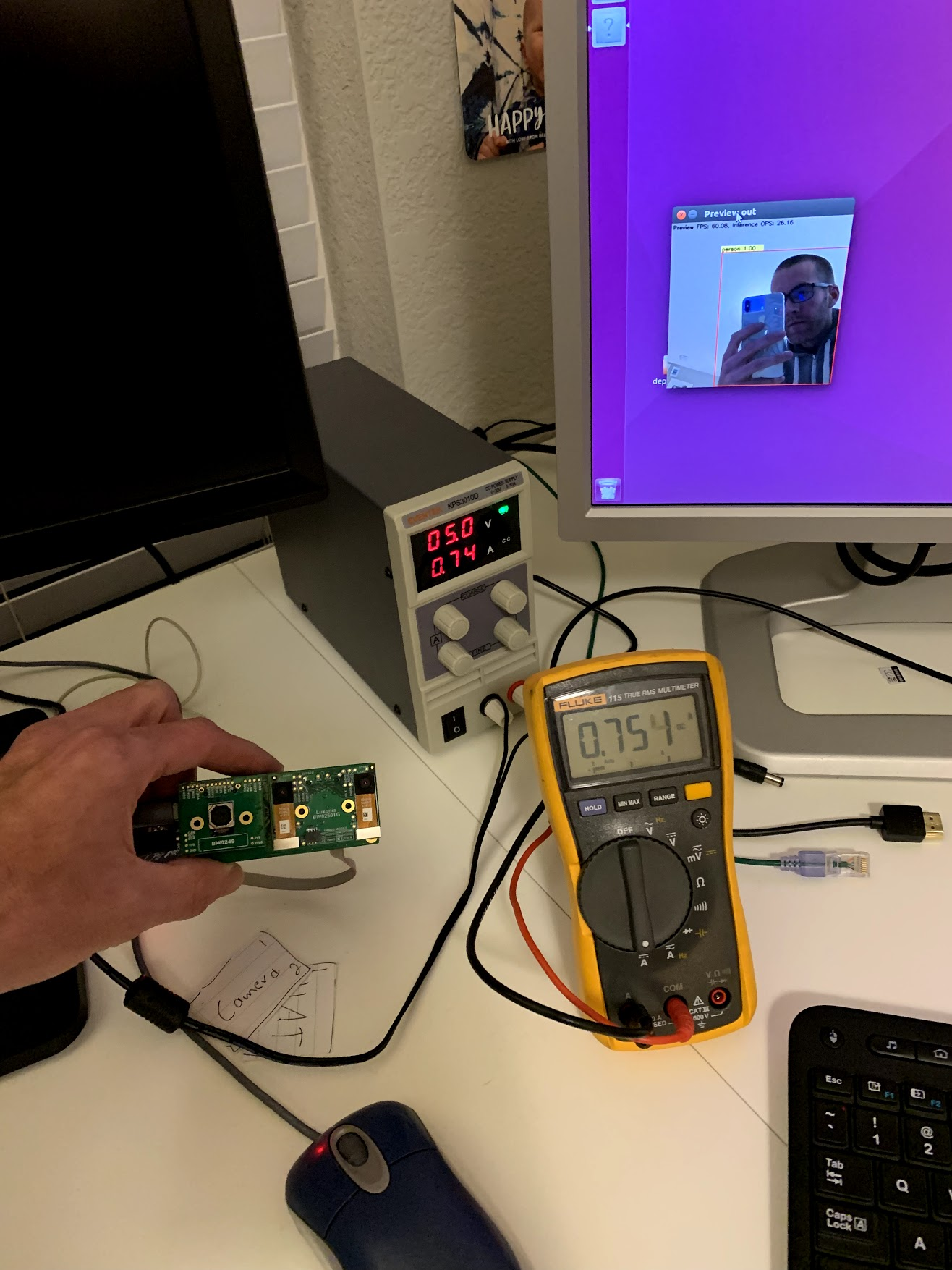

Active Power (MobileNet-SSD at ~25 FPS):

Here are the results for the DepthAI platform running MobileNet-SSD at 300x300:

Neural Inference time (ms): 40

Peak Current (mA): 760

Idle Current (mA): 150
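The post reports current but not supply voltage, so here is a small worked-arithmetic sketch that turns the figures above into throughput, power, and energy. It assumes a 5 V USB supply (a hypothetical value, not something measured here) and treats the peak current as lasting for the whole 40 ms inference, which gives an upper bound on energy per inference rather than an exact figure.

```python
# Worked arithmetic on the DepthAI MobileNet-SSD figures from this post.
# ASSUMPTION: the board runs from a 5 V supply. The post only reports
# current, so this voltage is a hypothetical value, not a measurement.
SUPPLY_VOLTAGE_V = 5.0

inference_time_ms = 40   # neural inference time (from the post)
peak_current_ma = 760    # peak current (from the post)
idle_current_ma = 150    # idle current (from the post)


def fps_from_latency(latency_ms: float) -> float:
    """Frames per second if inferences run back to back."""
    return 1000.0 / latency_ms


def power_w(current_ma: float, voltage_v: float = SUPPLY_VOLTAGE_V) -> float:
    """Electrical power P = V * I, converting mA to A."""
    return voltage_v * current_ma / 1000.0


def energy_per_inference_j(current_ma: float, latency_ms: float,
                           voltage_v: float = SUPPLY_VOLTAGE_V) -> float:
    """Upper-bound energy for one inference: assumes the given (peak)
    current is drawn for the entire inference time."""
    return power_w(current_ma, voltage_v) * latency_ms / 1000.0


if __name__ == "__main__":
    print(f"Throughput: {fps_from_latency(inference_time_ms):.1f} FPS")
    print(f"Peak power: {power_w(peak_current_ma):.2f} W")
    print(f"Idle power: {power_w(idle_current_ma):.2f} W")
    print("Energy per inference (upper bound): "
          f"{energy_per_inference_j(peak_current_ma, inference_time_ms):.3f} J")
```

Under the 5 V assumption this prints 25.0 FPS, 3.80 W peak, 0.75 W idle, and at most 0.152 J per inference. The 25 FPS figure follows from the 40 ms latency alone and matches the ~25 FPS quoted above; the watt and joule figures scale linearly with whatever the real supply voltage is.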

Judging from Alasdair's handy comparison tables (here, and reproduced below), this isn't too shabby: it makes us the second fastest of all the embedded solutions tested, and the lowest power.

What these tables don't show is the CPU utilization of the host (the Raspberry Pi). Here are our results (tested on a Raspberry Pi 3B):

| | RPi + NCS2 | RPi + DepthAI |
|---|---|---|
| Video FPS | 30 | 60 |
| Neural Inference FPS | ~8 | 25 |
| RPi CPU Utilization | 220% | 35% |

This leaves far more CPU headroom for your own applications on the Raspberry Pi, because the AI and computer vision tasks are offloaded to the Myriad X with DepthAI.
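To put the headroom claim in concrete terms, here is a short sketch that converts the CPU figures from the table into a share of the whole processor. It assumes the utilization numbers are per-core percentages in the style of `top` (so a quad-core Pi 3B maxes out at 400%); the post doesn't say which tool produced them, so treat that as an assumption. The ~8 FPS NCS2 figure is entered as 8.

```python
# Host CPU headroom from the Raspberry Pi 3B comparison table.
# ASSUMPTION: utilization is reported as a percentage of ONE core
# (the default in `top`), so 4 cores means a 400% maximum.
PI3B_CORES = 4  # the Raspberry Pi 3B has a quad-core Cortex-A53

results = {
    "RPi + NCS2": {"inference_fps": 8, "cpu_percent": 220},  # ~8 FPS in the table
    "RPi + DepthAI": {"inference_fps": 25, "cpu_percent": 35},
}


def share_of_total_cpu(cpu_percent: float, cores: int = PI3B_CORES) -> float:
    """Fraction (0..1) of the whole CPU consumed, given a per-core percentage."""
    return cpu_percent / (100.0 * cores)


def free_cores(cpu_percent: float, cores: int = PI3B_CORES) -> float:
    """Roughly how many cores' worth of CPU remain for other work."""
    return cores - cpu_percent / 100.0


for name, r in results.items():
    print(f"{name}: {share_of_total_cpu(r['cpu_percent']):.1%} of total CPU, "
          f"~{free_cores(r['cpu_percent']):.2f} cores free, "
          f"{r['inference_fps']} inference FPS")
```

Under that assumption the NCS2 setup uses about 55% of the Pi's total CPU (roughly 1.8 cores left over), while DepthAI uses under 9% (roughly 3.65 cores left over), at about three times the inference rate.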

Thoughts?

Thanks,

Brandon
