For more details please also visit the GitHub Repo

Theoretical Background

The device employs the concept of Sensory Substitution, which in simple terms states that if one sensory modality is missing, the brain is able to receive and process the missing information by means of another modality. There has been a great deal of research on this topic over the last 50 years, but as yet there is no widely used device on the market that implements these ideas.

Previous Work

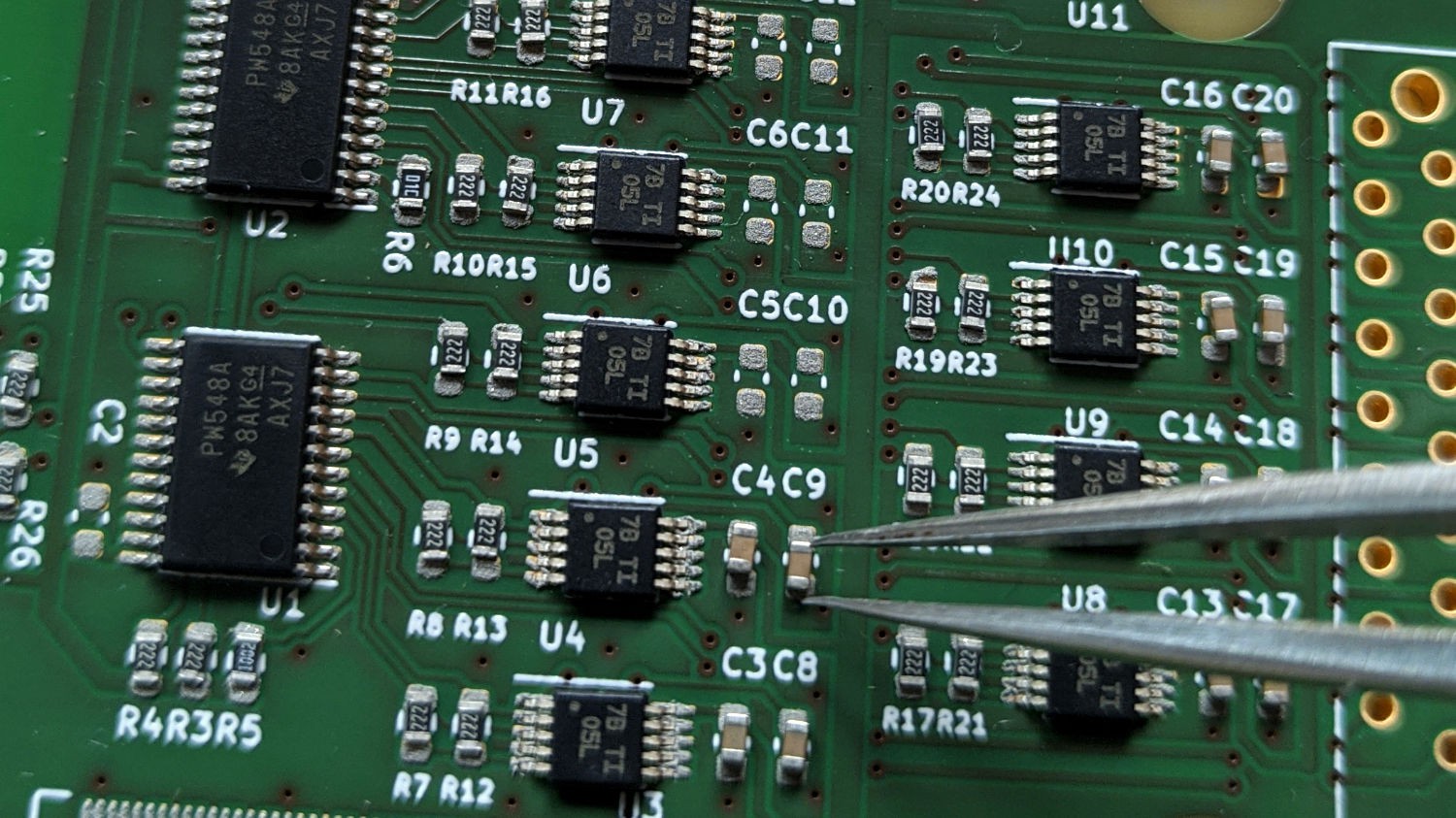

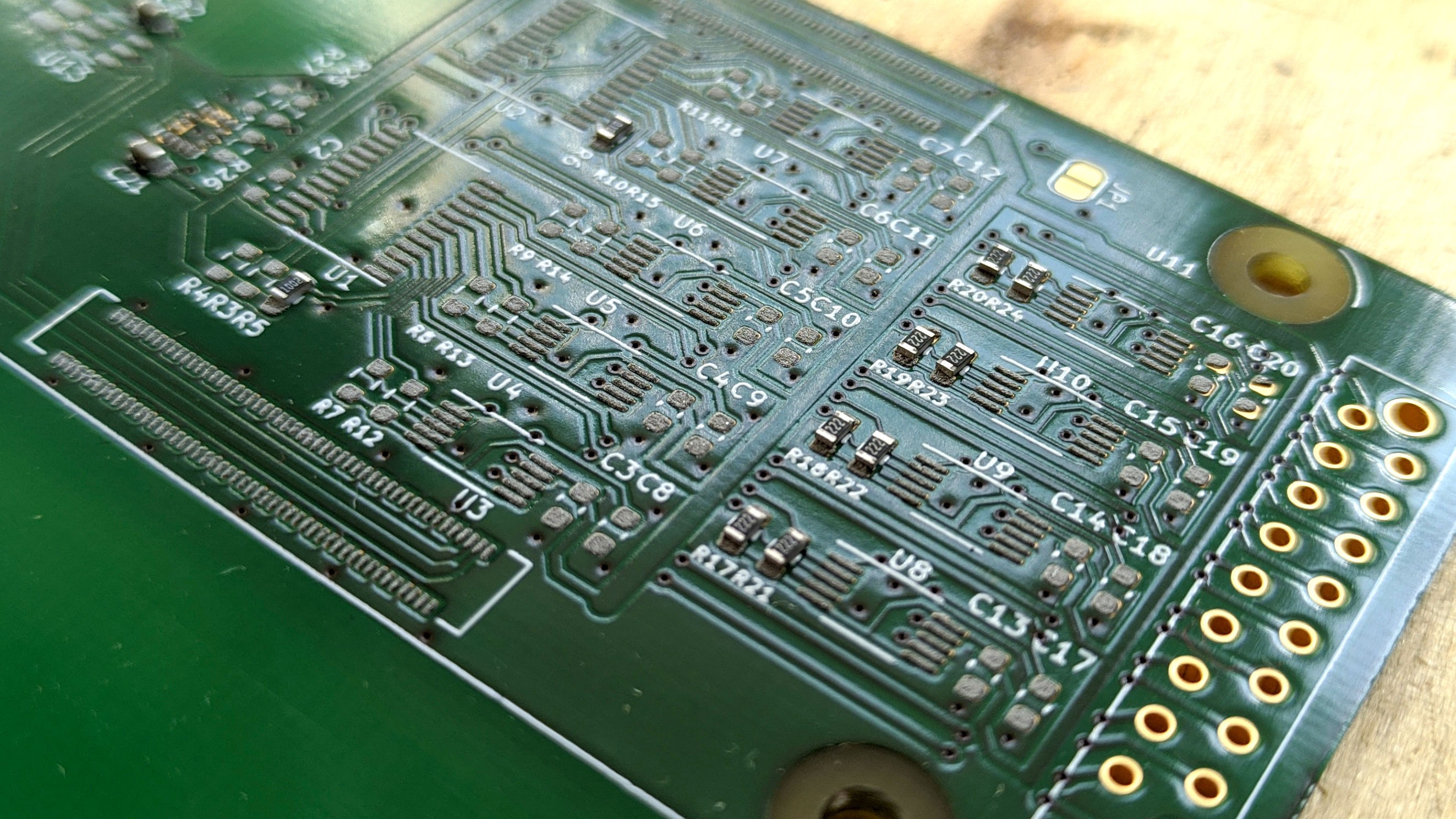

The project began in 2018 as an interaction design project for my undergraduate thesis. Over the following years I developed several prototypes, aiming to learn from the mistakes of other projects and to use design methods to arrive at a more user-friendly device.

Study in 2021

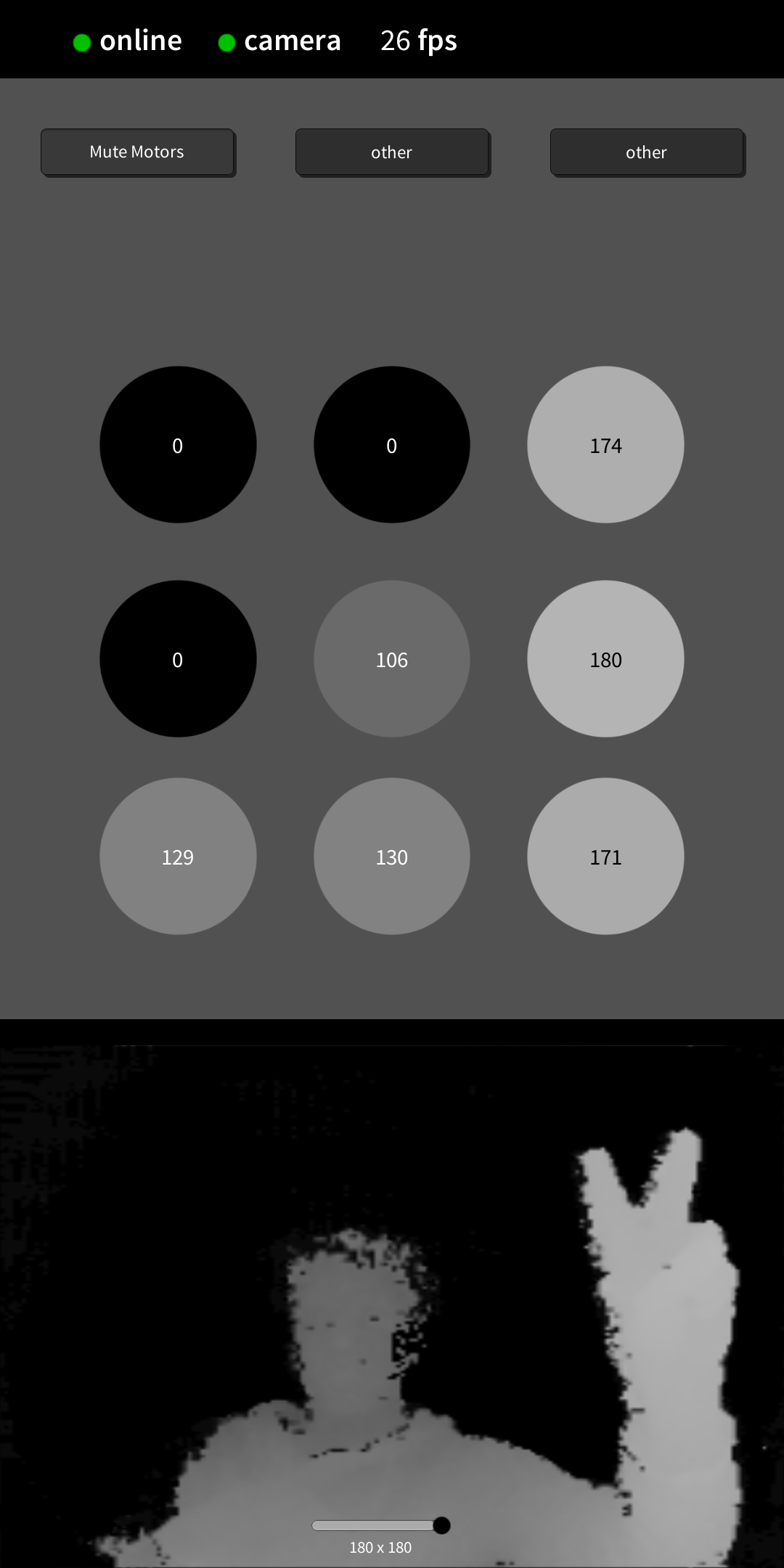

The latest prototype presented here was built in 2021 and tested in a study with 14 blind and sighted subjects (publication expected in February 2022). See also the section "Abstract of Paper" below.

Demo Video

This is a demo from a user's perspective, recorded in the obstacle course of the 2021 study:

Video Documentation of Previous Prototype (2019)

Originally created for submission to Hackaday Prize 2019.

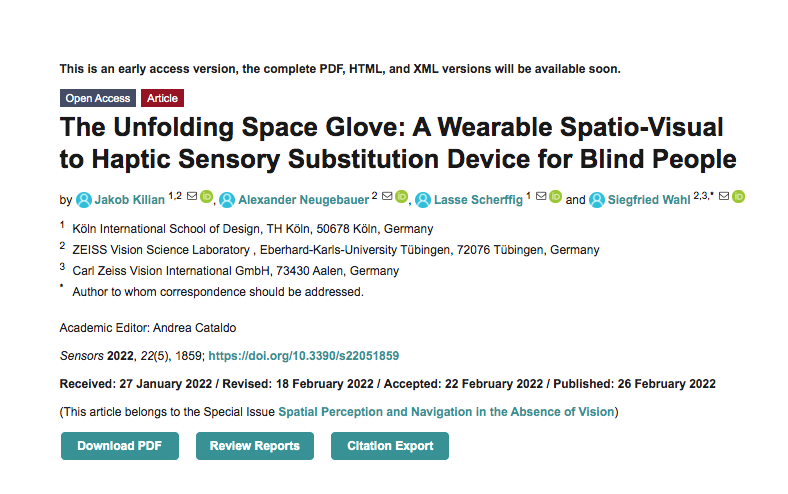

Abstract of Paper (Pending, Expected for Feb 2022)

A scientific paper about the project and the study is expected to be published in MDPI Sensors in February 2022. Here is the preprint abstract:

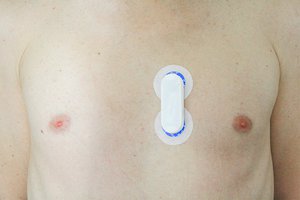

This paper documents the design, implementation and evaluation of the Unfolding Space Glove: an open source sensory substitution device that allows blind users to haptically sense the depth of their surrounding space. The prototype requires no external hardware, is highly portable, operates in all lighting conditions, and provides continuous and immediate feedback – all while being visually unobtrusive. Both blind (n = 8) and sighted but blindfolded subjects (n = 6) completed structured training and obstacle courses with the prototype and the white long cane to allow performance comparisons to be drawn between them. Although the subjects quickly learned how to use the glove and successfully completed all of the trials, they could not outperform their results with the white cane within the duration of the study. Nevertheless, the results indicate general processability of spatial information through sensory substitution by means of haptic, vibrotactile interfaces. Moreover, qualitative interviews revealed high levels of usability and user experience with the glove. Further research is necessary to investigate whether performance could be improved through further training, and how a fully functional navigational aid could be derived from this prototype.

Jakob Kilian

Xasin

Andrew & Ben

Ultimate Robotics

Eric Wiiliam

So does new code have to be developed, or can modifications be made to your code?

please help