Approach adopted to solve the problem :

● The track : We chose to replicate the Monza circuit, one of the most famous Grand Prix circuits in the world, notorious for its long sweeping bends and its few tight corners followed by high-speed straights. This seemed like the ultimate challenge for our self-driving car. As it turned out, the lack of guiding lane lines along the bends, where the track was wider, made it hard for us to determine exactly when to take a turn. This resulted in a poorly labelled dataset, which hurt both the model's accuracy and its on-track driving.

● The Car and the subsequent problem with the hardware : We initially decided to implement the algorithm from Nvidia's landmark paper, which predicts steering angles from camera images. However, it was extremely hard to find a remote-control car here in Delhi with proportional steering (a servo that controls the front steering, instead of the motor at the front of the car). As a result, we had to settle for hardware that could only execute a full left turn, a full right turn or go straight ahead. This meant that, while driving the car manually to collect training data, we had to make turns in steps to control the car's angle. This again led to a lot of mislabelled images, which in turn caused a substantial drop in accuracy.
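To see why stepwise turning produces mislabelled frames, here is a toy simulation. It assumes that a mild right turn is driven by repeating a hypothetical pattern of two ticks of FF followed by one tick of FR. It also assumes that a frame is saved at a fixed interval and labelled with whichever command is active at that instant. Neither the pattern nor the interval comes from the actual logging code.

```python
# Toy simulation (assumption): a mild right turn is driven by repeating the
# pattern FF, FF, FR, and the logger saves a frame every `every` ticks,
# labelled with whichever command happens to be active at that instant.

def command_stream(pattern, repeats):
    """Expand a short steering pattern into a longer stream of per-tick commands."""
    return list(pattern) * repeats


def sample_labels(stream, every):
    """Keep the active command on every `every`-th tick, as a frame logger would."""
    return stream[::every]


mild_right = command_stream(["FF", "FF", "FR"], repeats=4)  # hypothetical pattern
labels = sample_labels(mild_right, every=2)

print(labels)  # ['FF', 'FR', 'FF', 'FF', 'FR', 'FF']
print(f"{labels.count('FF')} of {len(labels)} frames labelled FF during a right turn")
```

Every frame shows the same right-hand bend, yet most of them carry the FF label. This is the kind of label noise described above.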

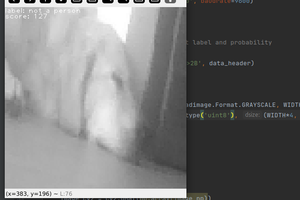

● Dataset : A custom-created dataset containing approximately 600 images of the track, captured during training rounds, each with the associated steering direction as its label (FF, FR, FL, NN). A sample image and the associated image processing have been provided in the proof-of-concept results. The dataset was split 70:30 into training and test sets using several scripts. (Please note that the file names are the labels, with a timestamp.) Issues that we've observed in the dataset:

1.) Bad labelling : Because we cannot control the steering angle of the car, turns of varying sharpness have to be made in steps. For example, a mild right turn is a combination of FF and FR. However, images and their associated direction are captured at discrete timestamps, so a frame sometimes ends up labelled FF in places where there is clearly a right turn.

2.) Presence of other objects in the field of vision : Pillars and other features around the track appear in the images, as do, on certain occasions, drains running alongside the track. After image processing and edge detection, these parallel features look very similar to lane lines. Because they move around the frame as the car moves, a region-of-interest mask isn't effective.
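Because the label lives in the file name, the split can be done on names alone. Here is a minimal sketch of reading labels and making a 70:30 split. It assumes a hypothetical `<LABEL>_<timestamp>.jpg` naming scheme and a fixed random seed. The actual scripts and naming format may differ.

```python
import random
from collections import Counter
from pathlib import Path

VALID_LABELS = {"FF", "FR", "FL", "NN"}


def label_from_filename(name):
    """Return the steering label at the start of a file name.

    Assumes a hypothetical '<LABEL>_<timestamp>.jpg' naming scheme.
    """
    label = Path(name).stem.split("_", 1)[0]
    if label not in VALID_LABELS:
        raise ValueError(f"unexpected label in {name!r}")
    return label


def train_test_split(names, train_fraction=0.7, seed=42):
    """Shuffle file names reproducibly, then cut them into train/test lists."""
    shuffled = list(names)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# 600 synthetic file names standing in for the real images.
files = [f"{lab}_{1546300800 + i}.jpg"
         for i, lab in enumerate(["FF", "FR", "FL", "NN"] * 150)]
train, test = train_test_split(files)

print(len(train), len(test))  # 420 180
print(Counter(label_from_filename(f) for f in train))
```

This is a plain random split. With only about 600 images, a stratified split (one cut per label) would keep the label proportions equal between the two sets.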

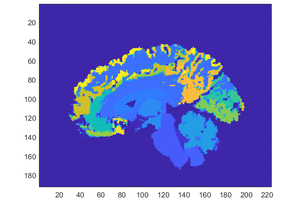
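The region-of-interest problem from the dataset issues can be illustrated on tiny binary edge maps. Here is a minimal sketch, assuming a fixed rectangular region covering the lower rows of the frame, with edges stored as nested lists of 0/1. Real pipelines often use a trapezoidal mask instead, for example with OpenCV's `cv2.fillPoly` and `cv2.bitwise_and`. The frames below are made up for illustration.

```python
def apply_roi(edges, top_row):
    """Zero every edge pixel above `top_row`, i.e. a fixed rectangular ROI."""
    return [[px if r >= top_row else 0 for px in row]
            for r, row in enumerate(edges)]


def count_edges(grid):
    """Number of edge pixels (1s) left in a binary edge map."""
    return sum(map(sum, grid))


# Frame A: lane edges in columns 0 and 5; a pillar edge only in the top rows.
frame_a = [
    [0, 1, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 1],
]
# Frame B: the car has moved, and the pillar (or a drain) now sits inside the ROI.
frame_b = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [1, 0, 1, 0, 0, 1],
    [1, 0, 1, 0, 0, 1],
    [1, 0, 1, 0, 0, 1],
]

print(count_edges(apply_roi(frame_a, top_row=3)))  # 6: only the lane edges survive
print(count_edges(apply_roi(frame_b, top_row=3)))  # 9: 3 spurious edge pixels survive
```

The mask works when distractors stay outside it. Once they drift into the masked area, they look just like lane edges.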

● Neural Network : We've used a convolutional neural network (CNN) as our deep learning model. CNNs are a class of deep neural networks most commonly used to analyze visual imagery. They use a variation of multilayer perceptrons designed to require minimal preprocessing.

The design of ConvNets is inspired by biology: the connectivity pattern between their neurons resembles the organization of an animal's visual cortex.

Sequential model ( Keras ) : The Sequential model makes it easy to stack the layers of the network (convolutional, pooling, dense or other kinds) one after another, in order from input to output.
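The idea behind a sequential model is plain function composition: each layer's output becomes the next layer's input. Here is a toy stand-in written in plain Python to show that idea. It is not Keras's implementation. In Keras itself, the same pattern is written as `keras.Sequential([...])` using layers such as `Conv2D`, `MaxPooling2D`, `Flatten` and `Dense`.

```python
class Sequential:
    """Toy stand-in for the idea behind keras.Sequential: layers run in order."""

    def __init__(self, layers=None):
        self.layers = list(layers or [])

    def add(self, layer):
        """Append a layer (any callable) to the end of the stack."""
        self.layers.append(layer)
        return self

    def __call__(self, x):
        # Feed each layer's output into the next, from input to output.
        for layer in self.layers:
            x = layer(x)
        return x


model = Sequential()
model.add(lambda xs: [2 * x for x in xs])  # stand-in for a feature-extracting layer
model.add(sum)                             # stand-in for a final reducing layer
print(model([1, 2, 3]))  # 12
```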

Convolutional Layer : Convolutional layers apply a convolution operation to the input and pass the result to the next layer. The convolution emulates the response of an individual neuron to visual stimuli. (A convolution is a mathematical combination of two functions that produces a third; in CNNs it is computed by sliding a filter, or kernel, over the input to create a feature map.)
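Here is a minimal pure-Python sketch of the sliding-kernel operation described above. It uses "valid" padding and stride 1. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation. The image and the vertical-edge kernel are illustrative values, not weights learned by the model.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1, as used in CNN layers (no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch currently under the kernel.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A 4x4 image that is dark on the left and bright on the right.
image = [[0, 0, 1, 1] for _ in range(4)]
# A 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is large only where the kernel straddles the edge. A CNN learns kernels like this, for instance ones that respond to lane lines, instead of having them hand-written.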

Pooling : Pooling layers reduce the spatial size of the representation, which reduces the number of parameters and the amount of computation in the network.
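Here is a minimal sketch of 2x2 max pooling, the most common pooling choice. It assumes non-overlapping windows (window size equals stride) and drops any ragged border. The feature-map values are arbitrary.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling (window = stride = size); ragged borders dropped."""
    out = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            # Keep only the strongest response in each size x size window.
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 4, 6],
]
print(max_pool2d(fm))  # [[4, 5], [3, 7]]
```

The pooling step itself has no trainable weights. Its savings come downstream: a 4x4 map shrinks to 2x2, so any fully connected layer after it needs a quarter as many input weights.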

Flattening : I’ve had to use flattening so...
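Flattening is typically needed because fully connected (dense) layers take a one-dimensional vector, while convolution and pooling produce a stack of 2D feature maps. Here is a minimal sketch assuming channels-first ordering. Keras's default channels-last layout orders the values differently. It also assumes a hypothetical output layer with one unit per label (FF, FR, FL, NN), using made-up weights.

```python
def flatten(feature_maps):
    """Flatten a stack of 2D feature maps (channels x rows x cols) into one vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(vector, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(w_row, vector)) + b
            for w_row, b in zip(weights, biases)]


maps = [[[1, 2], [3, 4]],
        [[5, 6], [7, 8]]]           # two 2x2 feature maps
vector = flatten(maps)              # [1, 2, 3, 4, 5, 6, 7, 8]

# Hypothetical weights for 4 output units (one per steering label).
weights = [[1] * 8, [0] * 8, [0] * 8, [-1] * 8]
biases = [0, 0, 0, 0]
print(dense(vector, weights, biases))  # [36, 0, 0, -36]
```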

Debargha Ganguly