Real-time feedback is an important ingredient in tool embodiment. That is, when a tool reacts fluidly to human intention and the result of its action is available immediately and continuously, it can feel like an extension of the user's body, as with riding a bike, writing with a pen, or making music on a piano. After learning, little attention needs to be paid to moving the right way, so the conscious mind can occupy the abstract space of the task the tool provides leverage over.

Industrial robots are not usually built with real-time feedback in mind, because most often they repeat the same basic motions over and over again in a static environment. And real-time control can be extremely dangerous, because a bug in the software regulating the motion can lead to unexpected motion of the robot. The only control usually exposed to a human user is the ability to start or stop the activity of the machine, or change its speed. The first significant technical hurdle of this project lies in figuring out how to overcome the base limits of the controller to enable real-time control over every joint in the robot.

Remote Control with KUKAVARPROXY

The KRL programming language environment on the KUKA Robot Controller v2 (KRC2) used in this project provides programming primitives for controlling and triggering action based on basic industrial I/O, but it's a pain to set up such I/O and the control frequency is very poor (roughly a few Hz). It's meant mainly for switching on equipment like welders or reading inputs like proximity switches, not low-latency communication.

Ideally the robot could be controlled over Ethernet from a regular PC. A common tool for doing that with older KUKA industrial robots is a third-party tool called KUKAVARPROXY. This tool runs under Windows 95 on the KRC and sets up a server that allows global variables to be read and written over a network connection via a simple protocol. A script written in KUKA Robot Language (KRL) running on the robot can read and set global variables to communicate with external client PCs.
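To make the "simple protocol" a little more concrete, here is a minimal Python sketch of how a client might frame read and write requests. The byte layout (a 2-byte message ID, a 2-byte body length, a mode byte, then length-prefixed ASCII strings, all big-endian) is an assumption based on how open-source KUKAVARPROXY clients are commonly described, not something verified against the tool itself, and the function names are hypothetical. `$OV_PRO` (the program speed override) is used only as an example variable.

```python
import struct

READ, WRITE = 0, 1  # assumed mode byte values


def build_read_request(msg_id: int, var_name: str) -> bytes:
    """Frame a request to read one global variable (assumed layout)."""
    name = var_name.encode("ascii")
    # Body: mode byte, 2-byte name length, then the name itself.
    body = struct.pack(">BH", READ, len(name)) + name
    # Header: 2-byte message ID followed by 2-byte body length.
    return struct.pack(">HH", msg_id, len(body)) + body


def build_write_request(msg_id: int, var_name: str, value: str) -> bytes:
    """Frame a request to write one global variable (assumed layout)."""
    name = var_name.encode("ascii")
    val = value.encode("ascii")
    body = (
        struct.pack(">BH", WRITE, len(name)) + name
        + struct.pack(">H", len(val)) + val
    )
    return struct.pack(">HH", msg_id, len(body)) + body
```

A client would send these bytes over a TCP connection to the KRC and read back a similarly framed response. Values travel as text, so the KRL script on the other end sees exactly what was written into the variable.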

There is some support for this mode of control in commercial software, such as the excellent RoboDK. By running a specified KRL script on the robot RoboDK is able to connect and read the state of the robot's joints and execute basic motion commands. RoboDK has a Python API that allows mediated control of the robot. In the video above a Processing sketch is used to generate simple motion commands that are sent to a Python server (code here) which calls the RoboDK API to communicate with KUKAVARPROXY on the robot and consequently the running KRL script. For setting up jobs in RoboDK this sort of functionality is sufficient, but it does not provide an avenue to the real-time control that I'm after. There is a critical flaw with this mode of communication which has to do with how the robot does motion planning during KRL execution.

To be able to plan motion properly KRL scripts are executed from two places at once. There is the normal "program counter" which is tied to the currently running command. But there is lookahead functionality as well, which executes commands into the future to fill the motion planner's buffer. Executing motion that depends on variables which can be changed at any time throws a wrench into this lookahead mechanism. Disabling lookahead means that the robot will come to a stop after every single motion command, making it impossible to build up fluid motion from the available motion primitives in KRL like lines and arcs.

I spent a while investigating KUKAVARPROXY and KRL before ruling them out. One of the products of that work was a rudimentary transpiler from Clojure to KRL. You can find an example of that tool used to implement a KUKAVARPROXY remote control script on GitHub here.

Real-time Control with RSI

If KRL is fundamentally limited when it comes to real-time control because of the lookahead feature, what are other options? What's needed is an interface at a level lower than KRL, ideally directly into the motion control system that runs in VxWorks on the KRC (Windows 95 is actually a subtask managed by VxWorks). The KUKA Robot Sensor Interface (RSI) is exactly that interface.

RSI is a package which was primarily developed to enable robots to be used in applications where their motion needs to be adjusted in real-time for fabrication tasks. For example, when using a robot for polishing it's necessary to modulate the pressure the robot is applying to the material being worked to get an even finish. Or when doing CNC milling it can be beneficial to reduce the feed rate if the tool is experiencing too much strain. For these types of applications it's essential that corrections can be applied to the motion with minimal latency and at a high control frequency.

To achieve the needed level of control RSI taps directly into the motion control system running in the real-time operating system of the robot controller. A special Ethernet card has to be installed on the control PC in the KRC which is mapped directly into a memory space accessible from the control subsystem running in VxWorks. The motion controller executes one interpolation cycle every 6 milliseconds, so that is the smallest unit of time relevant in RSI. An external system must receive and respond to RSI communications either every 6 ms or every 12 ms, depending on the mode. If at any point this time contract is violated then an emergency stop is executed, the robot comes to a halt, and execution must be manually resumed.

Enabling RSI on the KRC2 used in this project was an entire adventure on its own. Maybe I'll get into what that took in a future update. But what's relevant here is that it works, and it provides the sought-after mechanism for real-time control of the robot.

I started by implementing the RSI communications protocol (XML-based) in a simple server written in C++. One thread handled receiving and delivering the RSI packets on time, another ran a server to accept motion targets from clients, and another interpolated the motion to derive the correction for RSI to consume every 12 ms. Then my brother wrote an ARCore-based app to control the robot based on the movement of an Android phone:

The robot is shaky because I didn't bother to tune my motion controller. I used the excellent Reflexxes online motion trajectory library to handle making velocity and acceleration nice and smooth for the robot, but ended up with some oscillation in the target by not filtering the feedback RSI was providing from the robot. Still, I was happy with it as a proof of concept.
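For a rough idea of what the RSI exchange involves, here is a minimal Python sketch of building one reply (it is not the C++ server described above). Each packet from the robot carries a timestamp in an `IPOC` element, and the reply has to echo that value back along with the correction. The other element and attribute names (`Rob`, `Sen`, `AKorr`, `A1` to `A6`) depend on how RSI is configured on the controller, so treat them as assumptions for illustration; `build_reply` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

JOINTS = ["A1", "A2", "A3", "A4", "A5", "A6"]


def build_reply(packet: bytes, corrections: dict) -> bytes:
    """Build an RSI-style reply that echoes the robot's IPOC timestamp.

    `corrections` maps joint names (e.g. "A1") to correction values;
    joints that are missing get a correction of zero.
    """
    root = ET.fromstring(packet)
    ipoc = root.findtext("IPOC")
    if ipoc is None:
        raise ValueError("packet has no IPOC timestamp")

    # Element names below are assumptions; the real ones come from
    # the RSI configuration on the controller.
    reply = ET.Element("Sen", {"Type": "ImFree"})
    ET.SubElement(
        reply,
        "AKorr",
        {j: f"{corrections.get(j, 0.0):.4f}" for j in JOINTS},
    )
    ET.SubElement(reply, "IPOC").text = ipoc  # must match the request
    return ET.tostring(reply)
```

In a real server this function would sit inside the time-critical loop: receive a packet, build the reply from the latest interpolated correction, and send it back before the cycle deadline. Keeping it free of blocking calls is what makes the separate interpolation and client-facing threads worthwhile.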

Introducing ROS

With the basic pieces of the system up and running, and after a lot of intervening events (moving the robot to a studio in the Brooklyn Navy Yard, installing industrial power, bolting it to the floor, etc.), I went looking for a platform to build the higher level functionality of the system on top of. Very quickly I came to the conclusion that the Robot Operating System (ROS) was the right choice.

ROS essentially defines rules for how the pieces of a robot control system are configured, started, communicate, packaged, etc. It's not exactly an operating system in the usual sense of that term - it is made up of software installed on top of a conventional operating system like Linux.

ROS specifies what "things" are on a robot's internal network and how those things find and talk to one another. "Things" on this network are referred to as nodes. Right now some of the nodes in the ROS configuration for this project include a motion planner node, a motion controller node, and an application node. These nodes find each other thanks to a master node, called the ROS Master. They all know how to find the master and when they start up they tell the master how they can be talked to. Then later when other nodes want to connect they can just ask the master who there is to talk to, and then set up a direct connection to the nodes they want to chat with.

Communication happens via "topics". For example, there is a joint_states topic which is "published to" by a special controller node that can read the current joint angles out of the low level robot driver. Any node that wants to know the current joint angles of the robot, like the motion planner node, can "subscribe" to the joint_states topic. Every time there is a new update about the joint states every joint_states subscriber gets it.
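As a sketch of what subscribing looks like in practice, here is a minimal ROS 1 node in Python that listens on `joint_states` and logs each update. The node name and the helper function are hypothetical, and this is not one of the nodes from this project. The ROS imports live inside `main()` so the formatting helper can be tried on a machine without ROS installed.

```python
def summarize_joint_state(names, positions):
    """Format joint names and angles (radians) as one log line."""
    return ", ".join(f"{n}={p:.3f}" for n, p in zip(names, positions))


def main():
    # Imported here so the helper above works without a ROS install.
    import rospy
    from sensor_msgs.msg import JointState

    def on_joint_state(msg):
        # Called once for every message published on the topic.
        rospy.loginfo(summarize_joint_state(msg.name, msg.position))

    rospy.init_node("joint_state_listener")  # hypothetical node name
    rospy.Subscriber("joint_states", JointState, on_joint_state)
    rospy.spin()  # hand control to ROS until the node is shut down
```

Calling `main()` with the ROS Master running registers the subscription with the master, after which messages arrive directly from whichever node publishes `joint_states`.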

So to get everything going you launch a bunch of nodes (each a process managed by the machine's OS) which are configured to connect to one another in a specific way that accomplishes your goal. They all talk to the Master in order to find each other. Then they start publishing, consuming, and processing messages to accomplish the robot control task.

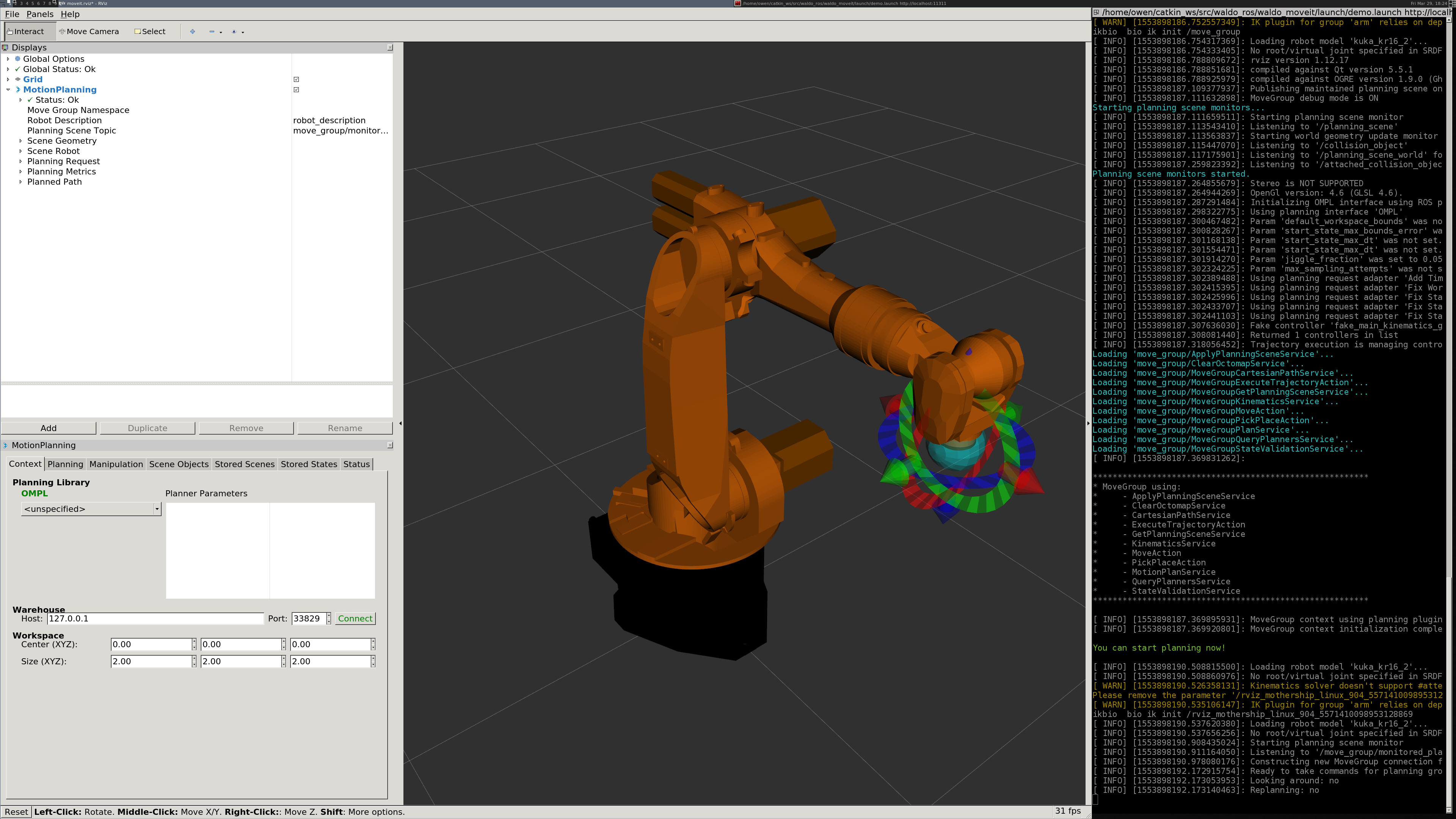

I created a set of ROS packages to set up control of the KR 16 via RSI. At the bottom of the stack is existing work done as part of the ROS Industrial project, which implements a driver for talking to the robot via RSI. Above that are some nodes from ROS Control, which handle management of the motion targets for the individual joints. Just below the top is the MoveIt motion planning framework, which figures out how the joints need to move over time to hit specified motion targets in a given coordinate space. I have it configured to use Bio IK for solving the inverse kinematics problem of the arm (what joint angles put the tool on the robot at a target position and orientation). Bio IK has nice properties in the context of real-time control, like fast execution time, graceful failure, and robust performance scaling.
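To give a feel for what "solving the inverse kinematics problem" means, here is a toy example for a two-link arm moving in a plane. This is the textbook closed-form solution, not how Bio IK works, and a six-axis arm like the KR 16 is considerably harder. The link lengths and function names are made up for illustration.

```python
import math


def forward(l1, l2, q1, q2):
    """Tool position (x, y) for joint angles q1, q2 in radians."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def inverse(l1, l2, x, y):
    """One set of joint angles that reaches (x, y), or None.

    Returns None when the target is out of reach, which is a
    simple form of failing gracefully.
    """
    # Law of cosines gives the second joint angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return None  # target is too far away or too close
    q2 = math.acos(c2)
    # Angle to the target minus the offset introduced by link 2.
    q1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(q2), l1 + l2 * math.cos(q2)
    )
    return q1, q2
```

Feeding the result of `inverse` back into `forward` should reproduce the target, which is a handy sanity check. Note that `math.acos` picks only one of the two mirror-image solutions, and choosing between such alternatives is part of what a real IK solver has to handle.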

Right now RViz with the MoveIt MotionPlanning plugin provides the GUI (see image above). That allows for rudimentary control of motion targets, motion planning, and execution. The system can be launched in a simulation mode to test execution without the real robot connected.

The last piece of the current progress is a Python script node which invokes the MoveIt API to paint SVG images (see video at top of post).

An obvious limitation of the current demo is that it doesn't actually involve any real-time feedback and decision-making. The motion might as well be fully preplanned, because all the constraints are known in advance. However, the underlying system is, in fact, real-time. The next update will explore what it takes to punch through MoveIt to influence the motion as it is executed moment-to-moment.

Owen Trueblood

Discussions

Yo! I would love to see a graph of the nodes that you are using (or intending to use) for the various real-time computations relating to motion planning.

I'll include one in the upcoming post on the new real(er)-time control system. Thanks for the suggestion.

Damn you really had to go through a lot of challenges just to be able to control the robot in real time.

Interesting stuff, thanks for sharing.
