Wall-E's software took me quite some time to develop. In fact, as anticipated, it took much longer than the 3D design, the mechanical assembly or the electronics.

Development was not linear. A couple of times, frustrated by bugs or unreliability, I threw it all away and rebuilt it piece by piece, so that I could debug the interactions between all parts of the software and assess the reliability of each part. While it is not 100% reliable yet, I am pretty satisfied with how it works.

You can browse (copy, modify, reuse, etc.) the software, which is available at:

* https://github.com/DIYEmbeddedSystems/wall-e : ESP8266 firmware, HTML & JS GUI

* https://github.com/DIYEmbeddedSystems/wall-e-cam : ESP32-Cam firmware, video stream.

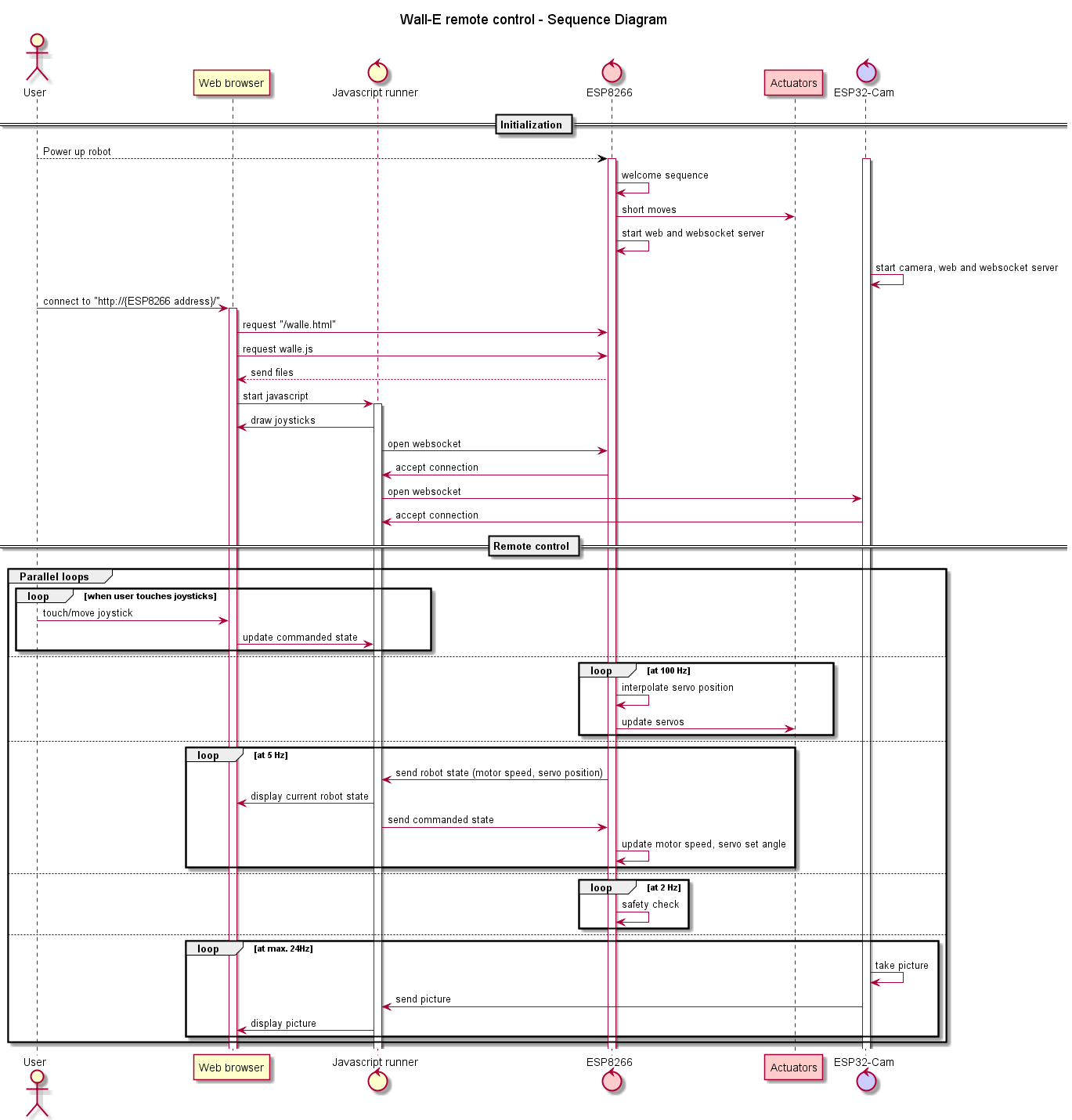

The sequence diagram below presents an overview of the control and communication loop between the three software entities:

* the ESP8266 microcontroller,

* the ESP32-Cam microcontroller,

* JavaScript in the smartphone or PC web browser.

The JavaScript code is downloaded from the ESP8266 web server together with the HTML page. The HTML/JavaScript front end handles user interaction through a set of joysticks that react to click or touch events. The joysticks visually show both the commanded state and the last known robot state.

The ESP8266 runs several "cooperative tasks" in parallel (programmed in non-blocking style).

* During start-up, the ESP8266 shows a typical Wall-E boot screen on the OLED display and briefly moves the actuators. This serves both as a self-test and as an audible notification when an unintended reset occurs.

* Every ~10 ms, the ESP8266 interpolates servo positions to produce smooth arm and head movements (and to reduce the risk of breaking servo gears). See https://github.com/DIYEmbeddedSystems/wall-e/blob/main/lib/pca9685_servo/pca9685_servo.cpp for details.

* Every ~200 ms, the ESP8266 sends its current state (motor speed and interpolated servo positions) to all connected websocket clients. The JavaScript code then replies with the commanded state, and the ESP8266 updates its set positions accordingly.

* Twice per second, the ESP8266 performs a basic safety check: if no command has been received during the last second, it stops all movement.
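The cooperative, non-blocking scheduling described above can be sketched in plain C++. This is a minimal illustration, not code from the wall-e repository: `PeriodicTask`, `SmoothServo`, `Robot`, `onCommand` and `loopOnce` are hypothetical names, the servo step is a simple rate limiter that may differ from what pca9685_servo.cpp actually does, and the websocket broadcast is replaced by a counter. On the ESP8266, `loopOnce(robot, millis())` would be called from the Arduino `loop()` function.

```cpp
#include <cstdint>

// Hypothetical timer helper: due() returns true at most once per periodMs.
// Unsigned subtraction keeps it correct across millisecond-counter wraparound.
struct PeriodicTask {
  uint32_t periodMs;
  uint32_t lastRunMs;

  bool due(uint32_t nowMs) {
    if (static_cast<uint32_t>(nowMs - lastRunMs) < periodMs) return false;
    lastRunMs = nowMs;
    return true;
  }
};

// Hypothetical servo model: each step() moves `current` toward `target`
// by at most `maxStep` degrees (a simple rate limiter).
struct SmoothServo {
  int current;
  int target;
  int maxStep;

  void step() {
    int delta = target - current;
    if (delta > maxStep) delta = maxStep;
    if (delta < -maxStep) delta = -maxStep;
    current += delta;
  }
};

struct Robot {
  SmoothServo head{90, 90, 2};  // degrees; at most 2 degrees per 10 ms tick
  int motorSpeed = 0;
  uint32_t lastCommandMs = 0;
  int telemetryFrames = 0;      // stand-in for websocket state broadcasts
  PeriodicTask interpolate{10, 0};
  PeriodicTask telemetry{200, 0};
  PeriodicTask safety{500, 0};
};

// Would be called when a websocket client sends a commanded state.
void onCommand(Robot& r, uint32_t nowMs, int headTarget, int speed) {
  r.head.target = headTarget;
  r.motorSpeed = speed;
  r.lastCommandMs = nowMs;
}

// One pass of the cooperative loop: each task checks its own timer and
// returns immediately when it is not due, so no task ever blocks.
void loopOnce(Robot& r, uint32_t nowMs) {
  if (r.interpolate.due(nowMs)) r.head.step();
  if (r.telemetry.due(nowMs)) r.telemetryFrames++;
  if (r.safety.due(nowMs) &&
      static_cast<uint32_t>(nowMs - r.lastCommandMs) > 1000) {
    r.motorSpeed = 0;                // stop driving
    r.head.target = r.head.current;  // hold the head where it is
  }
}
```

Because each task returns immediately when its timer has not elapsed, one task can delay the others slightly but never blocks them; the unsigned subtraction in `due()` also keeps the timing correct when `millis()` wraps around after about 49.7 days.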

The ESP32-Cam works independently of the ESP8266. While a websocket client is connected, it captures pictures at up to 24 Hz and sends them to the client.
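A minimal sketch of the 24 Hz cap, assuming frames are paced with a millisecond timer. `FrameLimiter` is a hypothetical class, not taken from the wall-e-cam repository; in practice the frame rate may also be limited by capture and Wi-Fi transmission time.

```cpp
#include <cstdint>

// Hypothetical frame limiter: grants a capture at most once per interval,
// and only while a websocket client is connected.
class FrameLimiter {
 public:
  // Round the interval up so the rate never exceeds maxFps (24 fps -> 42 ms).
  explicit FrameLimiter(uint32_t maxFps)
      : intervalMs_((1000 + maxFps - 1) / maxFps) {}

  bool shouldCapture(uint32_t nowMs, bool clientConnected) {
    if (!clientConnected) return false;  // nobody watching: skip the camera
    if (hasCaptured_ &&
        static_cast<uint32_t>(nowMs - lastFrameMs_) < intervalMs_) {
      return false;                      // too soon since the last frame
    }
    lastFrameMs_ = nowMs;
    hasCaptured_ = true;
    return true;
  }

  uint32_t intervalMs() const { return intervalMs_; }

 private:
  uint32_t intervalMs_;
  uint32_t lastFrameMs_ = 0;
  bool hasCaptured_ = false;
};
```

Rounding the interval up (42 ms rather than 41 ms) keeps the effective rate at or just below 24 frames per second, and skipping capture when no client is connected avoids running the camera for nothing.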

The code was developed using PlatformIO. It should also compile in the Arduino IDE, probably with a few modifications (library paths, sketch filename, etc.).

There are still a few features in the dream backlog, and I'm not sure when, or if, I will get them working: filtering IMU data to compute heading, and interacting with the user based on the video stream (e.g. following a face, mimicking a pose, ...). So I won't say the project is complete. However, it's fully functional, and the kids like to play with it, so I would say "mission already accomplished!"

Etienne
