Most CPU projects I’ve seen are either recreating some old processor from the early days of the PC revolution, an old arcade video game processor or some “minimalist” kind of CPU. While I’m sure those are all fun, interesting and educational projects, they are all focused on the past. It’s kind of like building a brand new Model T automobile. That’s cool if you want a brand new Model T, but how about designing something new and unique?

Before I outline the basic design I’m looking for, let me give you a little bit of the rationale (or lack thereof) for it. First and foremost I believe, and plenty of others have also made the argument, that we are at a point where designing what is best for the machine is less important than designing what is easiest for humans to learn, understand and work with. As much as possible, let’s keep it simple. In the early days of computing, resources (memory, interconnects, die area, etc.) were scarce, so limiting the size of the data components (instruction/word/byte) also limited the size of the electronic components needed to process that data. So computers were designed to minimize the size of everything.

Today that is not as critical and it will continue to become less important in the future. Likewise the size of a byte has varied over the years with 8 bits being the current de facto standard. But 8 bits limits us to 256 possible combinations and is an “unnatural” base for humans. A 10 bit byte allows 1024 possible combinations and is a “natural” unit size for people to work with. Consequently my architecture is based on a 10 bit byte and 100 bit instruction size. Now that’s something new and unique!

As many people will immediately note and object, that makes this CPU incompatible! Yes, you can see that as a downside. On the other hand, if you stop and think about it, it’s also one of the biggest upsides. Since it cannot run code based on 8 bit bytes, it is quite secure against existing code. Compatibility can be achieved by data conversion and virtualization.

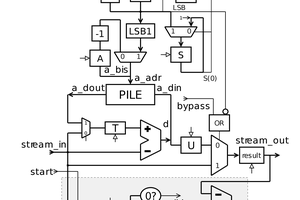

Along with a 100 bit processor and 10 bit bytes, here are some other differences. Addresses (pointers) are 50 bits. There are 3 basic data types: Binary, Integer (signed), and Decimal. Notice there isn’t a Float; we’ll use Decimals instead. Instead of the common integer sizes (8 bit, 16 bit, 32 bit, 64 bit, etc.), integers (and decimals) are defined by their number of bytes (1 to 10 for integers, 1 to 100 for decimals).

Instead of 2’s complement integers I use 1’s complement. Consequently there is a +0 and a -0. The first integer bit is the sign bit, and its meaning is the opposite of the standard integer, i.e. 1 = positive, 0 = negative. The reason for making it different is that with this method integers are naturally in the correct sort order when their bit patterns are compared as plain unsigned values. For example, for a 1 byte integer “00000 00000” = -511 and “11111 11111” = +511 (I divide the binary number into two 5 bit portions just for readability).
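To make the layout concrete, here is a minimal Python sketch of the encoding as described above. The function names (`encode_int`, `decode_int`, `show`) and the `negative_zero` flag are made up for illustration and are not part of the design. Python integers have no -0, so a stored -0 decodes to plain `0`.

```python
BITS_PER_BYTE = 10  # the 10 bit byte used by this architecture


def encode_int(value, n_bytes=1, negative_zero=False):
    """Return the stored bit pattern (as an unsigned int) for a signed value.

    Sign bit 1 = positive, 0 = negative; negative magnitudes are stored
    bit-inverted (1's complement). Names here are illustrative only.
    """
    bits = BITS_PER_BYTE * n_bytes
    sign_bit = 1 << (bits - 1)
    mag_mask = sign_bit - 1
    if abs(value) > mag_mask:
        raise OverflowError(f"{value} does not fit in {n_bytes} byte(s)")
    if value > 0 or (value == 0 and not negative_zero):
        return sign_bit | value
    # Negative (or -0): sign bit stays 0, magnitude bits are inverted.
    return ~(-value) & mag_mask


def decode_int(pattern, n_bytes=1):
    """Inverse of encode_int; both +0 and -0 come back as Python 0."""
    bits = BITS_PER_BYTE * n_bytes
    sign_bit = 1 << (bits - 1)
    mag_mask = sign_bit - 1
    if pattern & sign_bit:
        return pattern & mag_mask
    return -(~pattern & mag_mask)


def show(pattern, n_bytes=1):
    """Format a pattern in 5 bit groups, as in the text."""
    bits = BITS_PER_BYTE * n_bytes
    text = format(pattern, f"0{bits}b")
    return " ".join(text[i:i + 5] for i in range(0, bits, 5))


print(show(encode_int(-511)))  # 00000 00000
print(show(encode_int(511)))   # 11111 11111

# Sorting the raw patterns as unsigned numbers also sorts the values.
values = list(range(-511, 512))
patterns = [encode_int(v) for v in values]
print(patterns == sorted(patterns))  # True
```

With this layout -0 is “01111 11111” and +0 is “10000 00000”, so the two zeros sit next to each other in the middle of the sort order.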

Because I’m using 1’s complement instead of 2’s complement integers, the integer math unit (ALU) uses a "Complementing Subtractor" because it virtually eliminates a Negative Zero result. The only way to get a Negative Zero result using a complementing subtractor is if both operands are Negative Zero.
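The following is a rough software model of that idea for 1 byte integers, not a description of the actual ALU. It assumes the textbook form of a complementing subtractor: addition is performed as A - (NOT B) with an end-around borrow, on conventional 1’s complement patterns. Flipping the sign bit to convert between the stored format and the conventional one is my assumption, and all names are hypothetical. Overflow is not detected in this sketch.

```python
BITS = 10                  # one 10 bit byte
MASK = (1 << BITS) - 1     # 11111 11111
SIGN = 1 << (BITS - 1)     # 10000 00000
NEG_ZERO = MASK ^ SIGN     # stored -0: 01111 11111
POS_ZERO = SIGN            # stored +0: 10000 00000


def sub_end_around_borrow(a, c):
    """a - c on conventional 1's complement patterns (sign bit 1 = negative)."""
    diff = a - c
    if diff < 0:       # a borrow out of the top bit...
        diff -= 1      # ...is fed back into the bottom bit
    return diff & MASK


def add(x, y):
    """Add two stored patterns using a complementing subtractor."""
    a, b = x ^ SIGN, y ^ SIGN          # stored format -> conventional format
    result = sub_end_around_borrow(a, ~b & MASK)
    return result ^ SIGN               # back to the stored format


def decode(stored):
    """Stored pattern -> Python int (both zeros decode to 0)."""
    if stored & SIGN:
        return stored & (SIGN - 1)
    return -(~stored & (SIGN - 1))


five = SIGN | 5                  # stored +5
minus_five = ~5 & (SIGN - 1)     # stored -5
print(add(five, minus_five) == POS_ZERO)   # True: 5 + (-5) gives +0

# Brute-force search for operand pairs whose sum is stored as -0.
hits = [(x, y) for x in range(MASK + 1) for y in range(MASK + 1)
        if add(x, y) == NEG_ZERO]
print(hits == [(NEG_ZERO, NEG_ZERO)])      # True
```

The exhaustive search at the end is the point of the sketch: over all 1024 × 1024 operand pairs, the only sum that comes out as Negative Zero is (-0) + (-0).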

Decimals consist of 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, X (10), ∞ (infinity) and their negative counterparts, including negative zero. Decimals will include the actual Decimal Point if there are any decimal positions (like a text representation). The binary values for decimals start at 00000 00100 for -Infinity and go through 00000 11011 for +Infinity. The last 5 bits of the binary values for decimals create a 1’s complement arrangement for negative and positive numbers. Just like integers,...
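As a rough illustration of the decimal symbol codes, here is a Python sketch. Only the two endpoints (00000 00100 for -Infinity, 00000 11011 for +Infinity) and the 1’s complement relationship come from the text above. The assumption that the codes in between run consecutively in numeric order (-∞, -X, -9 … -0, +0 … +9, +X, +∞) is mine, and `digit_code` is a hypothetical name. The code for the decimal point is not given in the text, so it is left out.

```python
# Magnitudes 0..11: the ten digits, then X (10) and infinity.
SYMBOLS = ["0", "1", "2", "3", "4", "5", "6", "7", "8", "9", "X", "∞"]


def digit_code(symbol, negative=False):
    """Return the 10 bit byte value for one decimal symbol.

    ASSUMPTION: positive symbols are 10000 + magnitude in the low 5 bits,
    and a negative symbol is the 1's complement of those 5 bits. This fits
    the stated endpoints but the in-between order is inferred.
    """
    magnitude = SYMBOLS.index(symbol)
    low5 = 0b10000 | magnitude
    if negative:
        low5 = ~low5 & 0b11111   # invert only the low five bits
    return low5                  # the upper five bits of the byte stay zero


def show(code):
    """Format a byte in two 5 bit groups, as in the text."""
    text = format(code, "010b")
    return text[:5] + " " + text[5:]


print(show(digit_code("∞", negative=True)))  # 00000 00100
print(show(digit_code("∞")))                 # 00000 11011

# Listing the symbols from -∞ up to +∞ gives strictly increasing codes.
ordered = [digit_code(s, negative=True) for s in reversed(SYMBOLS)]
ordered += [digit_code(s) for s in SYMBOLS]
print(ordered == sorted(ordered))            # True
```

Under that assumption the 24 symbols occupy the values 4 through 27 with no gaps, and, as with integers, comparing single symbols as unsigned bytes gives the numeric order.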

kaimac

Yann Guidon / YGDES

Kim Chemnitz Chemnitz

Maciej Witkowiak
