For the most detailed description, please check out the project logs.

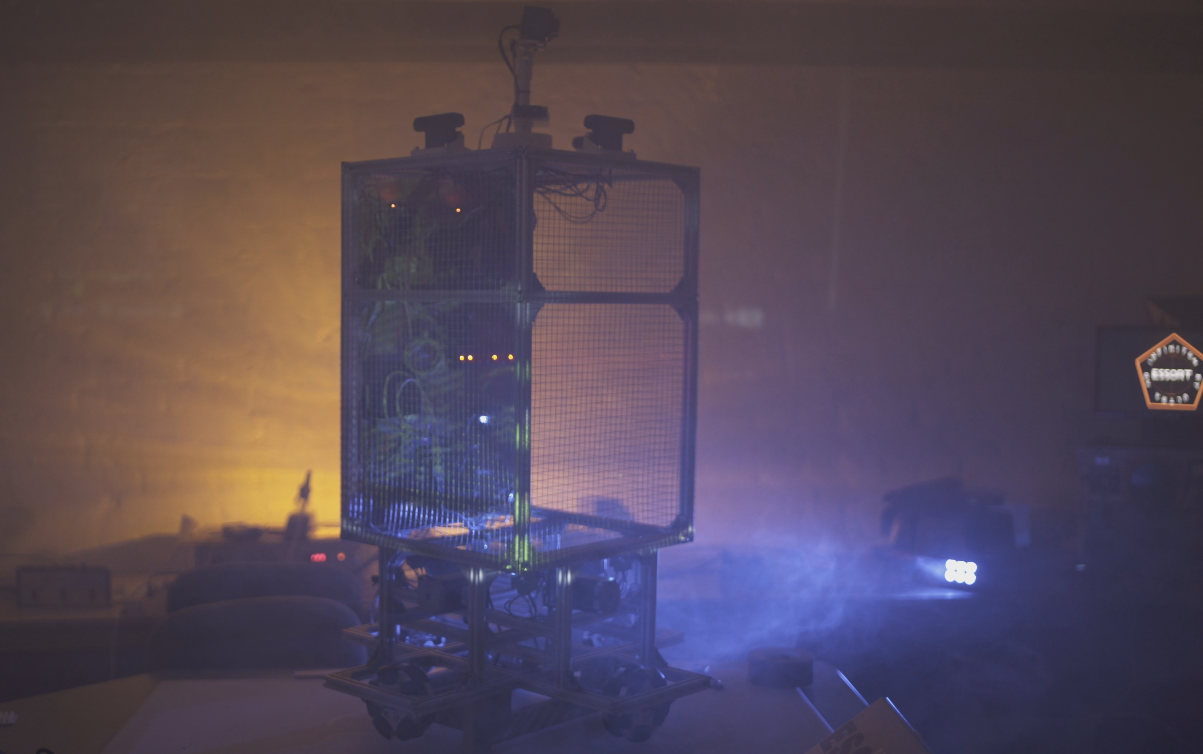

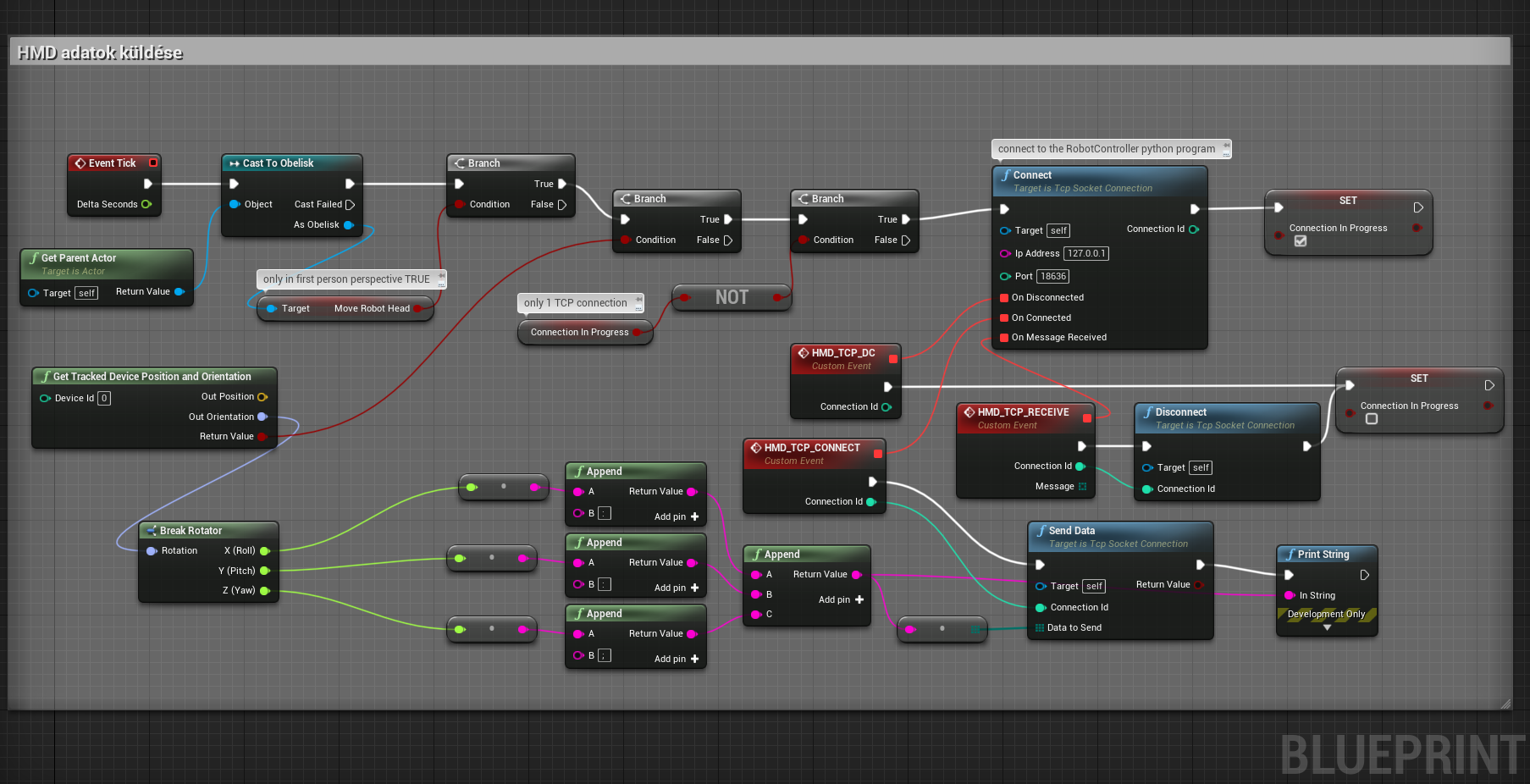

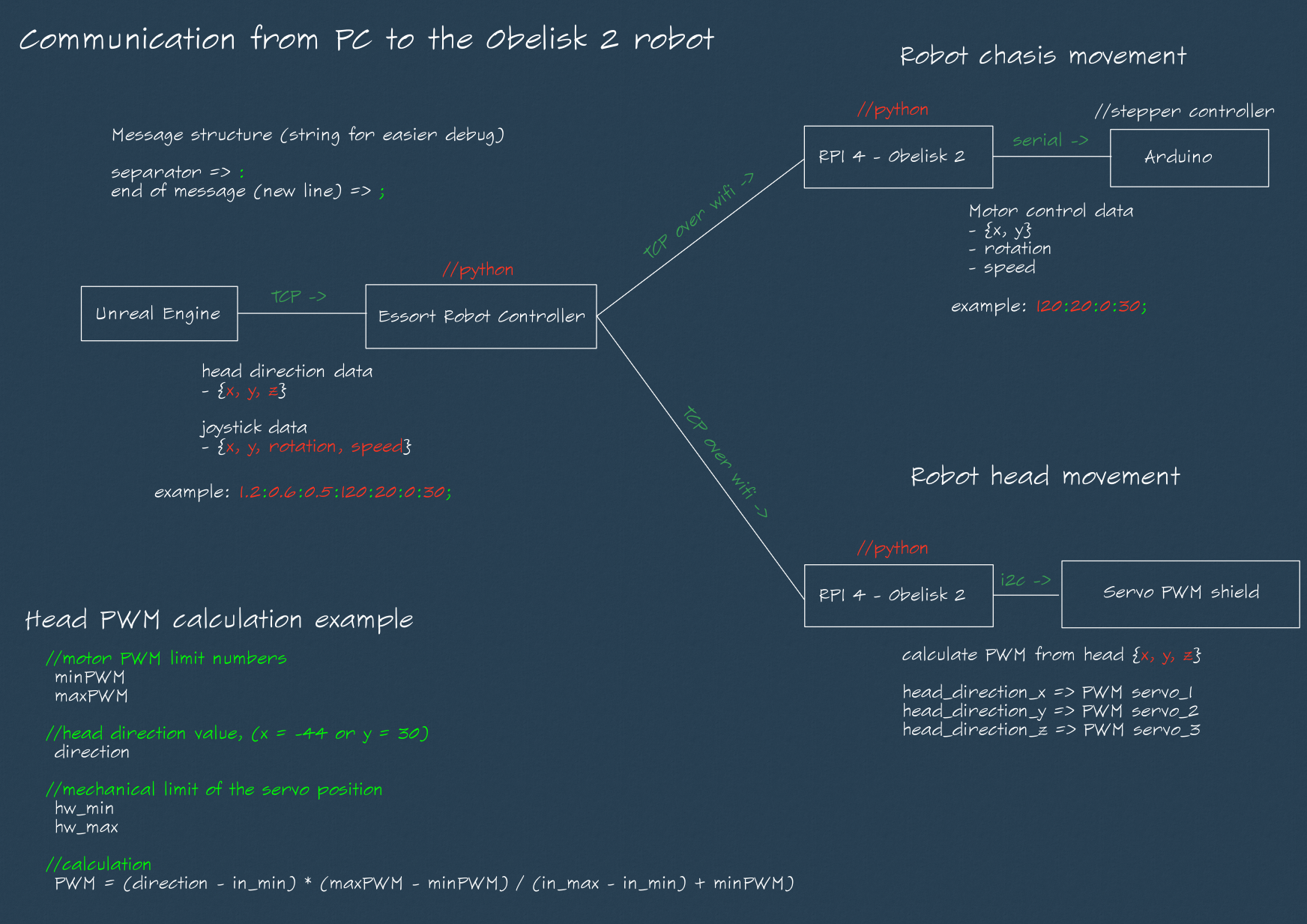

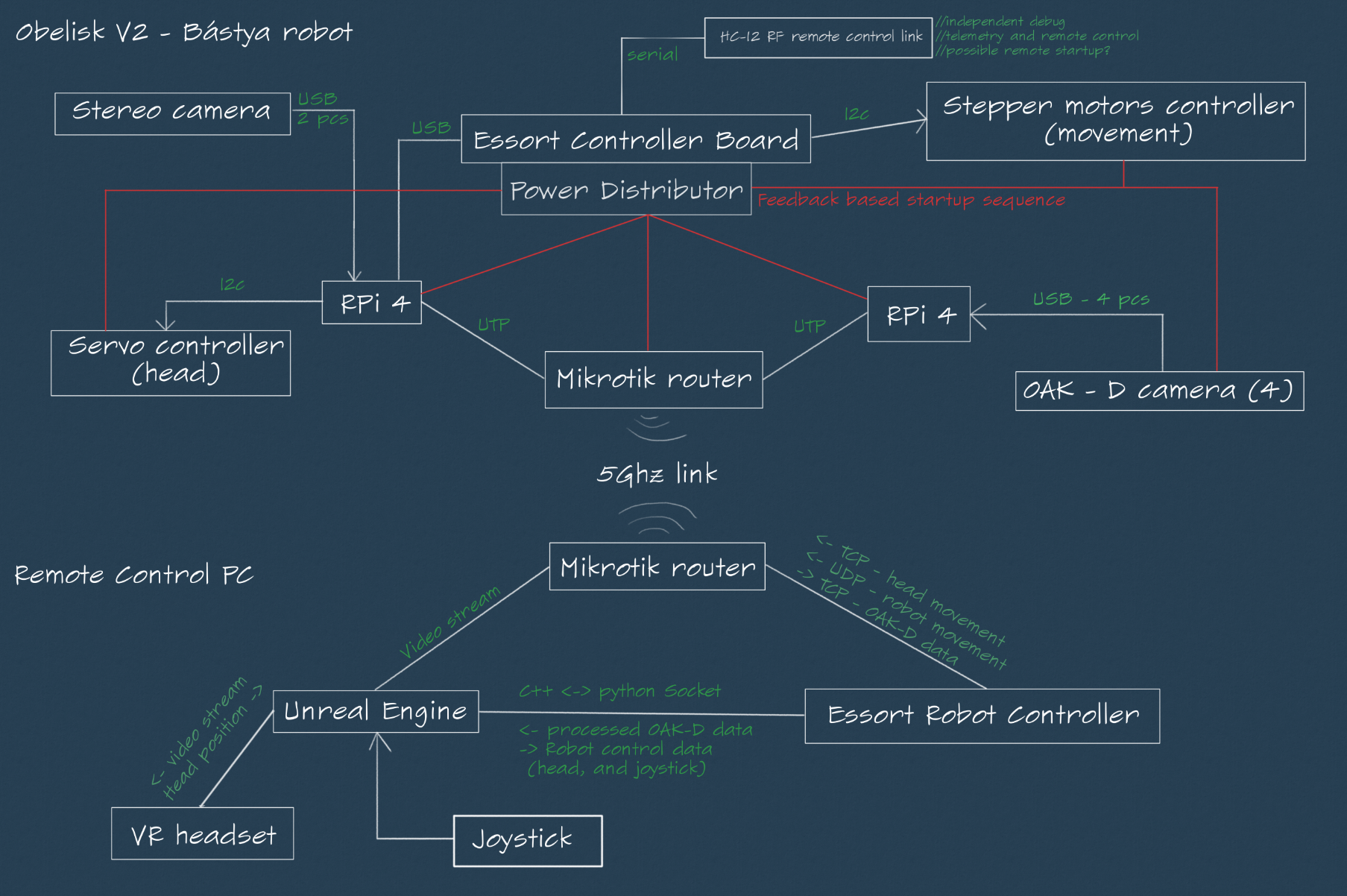

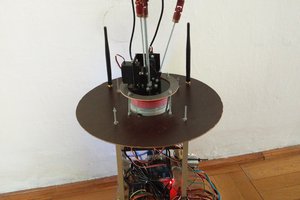

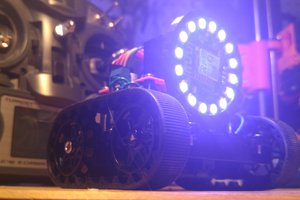

It streams video over Wi-Fi and listens on multiple sockets: one for head-tracking control and one for movement control.
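A minimal sketch of what a robot-side listener for several control sockets could look like. It assumes the control messages arrive as small JSON datagrams over UDP on two separate ports. The port numbers, message fields, and handler names are hypothetical and not taken from the project, and the video stream itself is not shown.

```python
import asyncio
import json

# Hypothetical port numbers; the project logs may use different ones.
HEAD_PORT = 5005
MOVE_PORT = 5006


class ControlProtocol(asyncio.DatagramProtocol):
    """Receives JSON datagrams on one socket and passes them to a handler."""

    def __init__(self, handler):
        self.handler = handler

    def datagram_received(self, data, addr):
        try:
            message = json.loads(data.decode("utf-8"))
        except (UnicodeDecodeError, json.JSONDecodeError):
            return  # ignore malformed packets instead of stopping the loop
        self.handler(message)


def handle_head(message):
    # Assumed message shape: {"yaw": degrees, "pitch": degrees}
    print("head", message.get("yaw"), message.get("pitch"))


def handle_move(message):
    # Assumed message shape: {"x": -1..1, "y": -1..1}
    print("move", message.get("x"), message.get("y"))


async def serve(host="0.0.0.0"):
    loop = asyncio.get_running_loop()
    # One UDP socket per control channel, all served by the same event loop.
    head_transport, _ = await loop.create_datagram_endpoint(
        lambda: ControlProtocol(handle_head), local_addr=(host, HEAD_PORT))
    move_transport, _ = await loop.create_datagram_endpoint(
        lambda: ControlProtocol(handle_move), local_addr=(host, MOVE_PORT))
    try:
        await asyncio.Event().wait()  # run until the task is cancelled
    finally:
        head_transport.close()
        move_transport.close()
```

Start it with `asyncio.run(serve())`. Serving every socket from one event loop means a burst of head-tracking packets does not block the movement channel, and no threads are needed.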

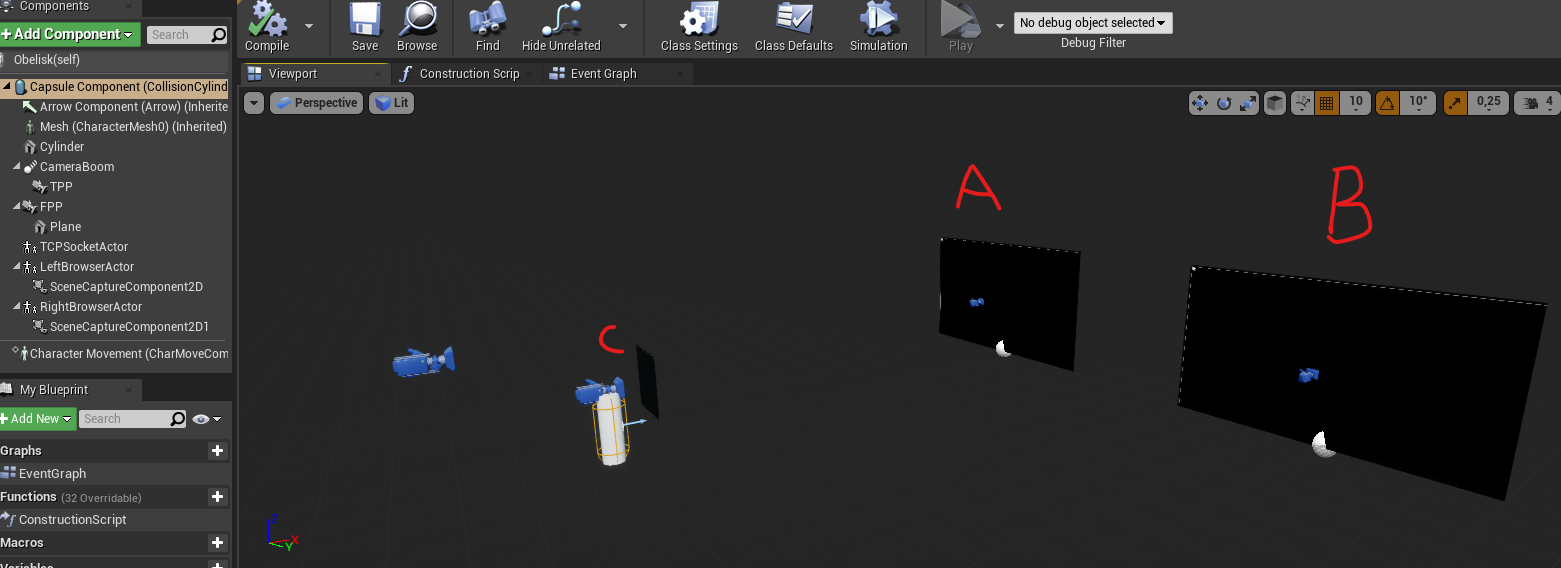

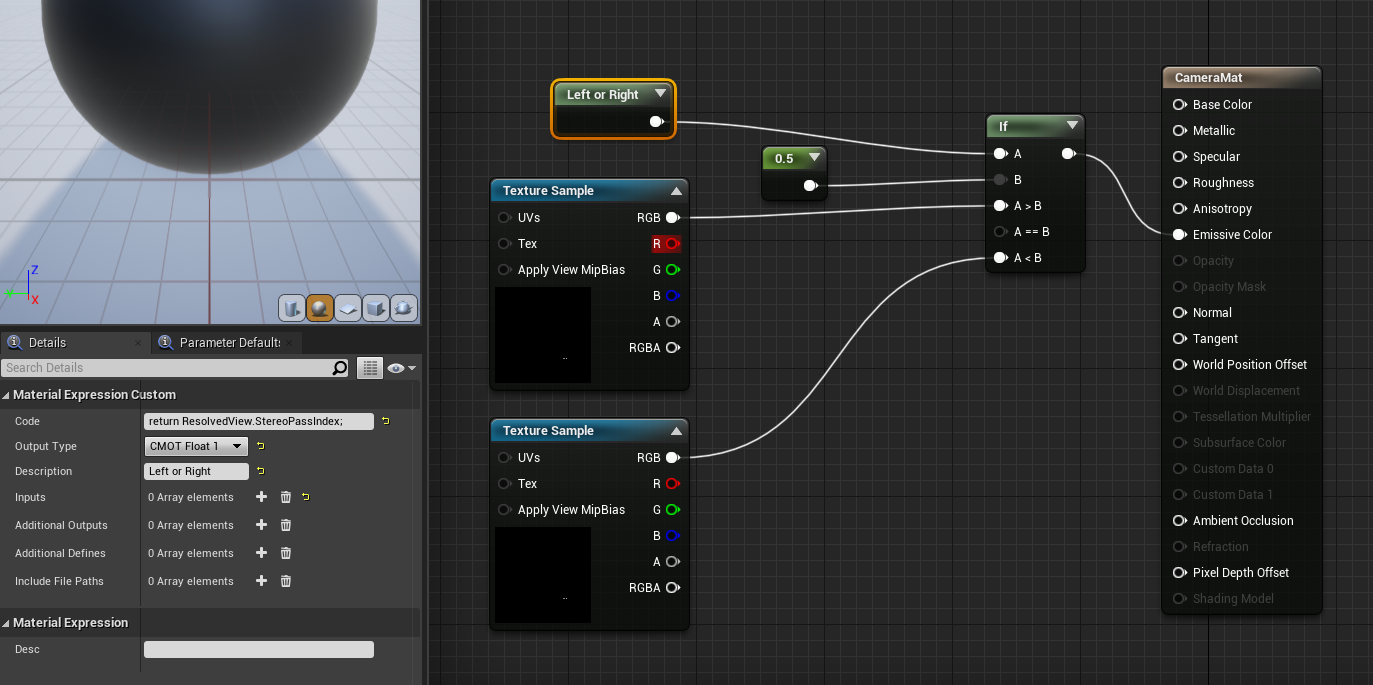

We currently use an HTC Vive for development, but the robot can be operated with similar PC-connected HMDs.

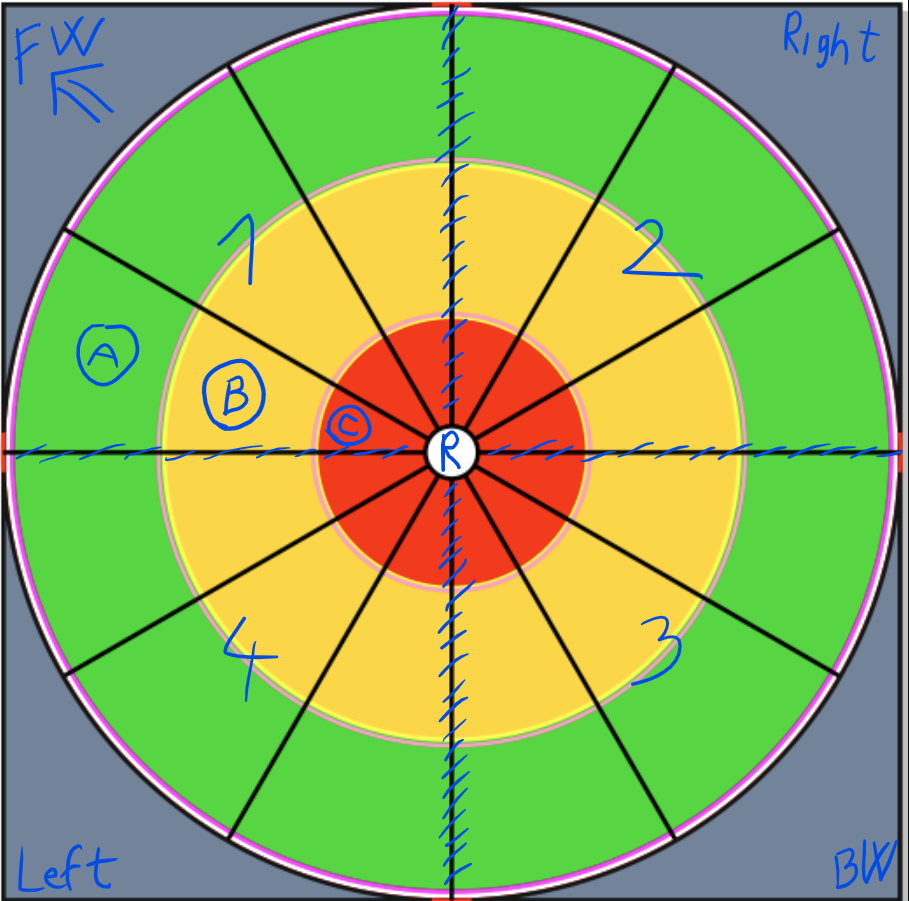

Besides that, you can connect to the robot with an HTML5-compatible browser and watch the video stream. Because HTML5 lets the browser read the device's orientation, we can send the mobile device's orientation back to the robot so it can adjust the camera position in real time. You can control the robot's movement with a joystick connected to a PC or tablet.
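As an illustration of how the robot might turn these inputs into motor commands, here is a small sketch. It assumes the camera sits on pan/tilt servos centred at 90 degrees, that the browser sends the DeviceOrientation `alpha` and `beta` angles in degrees, and that the robot uses differential (left/right) drive. All function names, limits, and the speed scale are hypothetical, not the project's actual code.

```python
def wrap_degrees(angle):
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def clamp(value, low, high):
    return max(low, min(high, value))


def orientation_to_servo(alpha, beta, alpha_ref, pan_limit=90.0, tilt_limit=45.0):
    """Map browser orientation angles to pan/tilt servo angles centred at 90."""
    # Heading change relative to a reference heading captured at start-up.
    yaw = wrap_degrees(alpha - alpha_ref)
    # Assumption: beta is 90 when the phone is held upright and looking level.
    pitch = beta - 90.0
    # Whether positive yaw pans left or right depends on the servo mounting;
    # flip the sign here if the camera turns the wrong way.
    pan = 90.0 + clamp(yaw, -pan_limit, pan_limit)
    tilt = 90.0 + clamp(pitch, -tilt_limit, tilt_limit)
    return pan, tilt


def arcade_drive(x, y, max_speed=255):
    """Mix joystick axes in [-1, 1] into left/right wheel speed commands."""
    left = clamp(y + x, -1.0, 1.0)
    right = clamp(y - x, -1.0, 1.0)
    return round(left * max_speed), round(right * max_speed)
```

For example, `orientation_to_servo(350, 90, 10)` returns `(70.0, 90.0)`: the 340-degree difference wraps to -20 degrees instead of being clamped to the pan limit. `arcade_drive(1, 0)` returns `(255, -255)`, which spins the robot in place.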

If anything about what we did, or why we did it that way, is unclear, feel free to ask and I will try to answer.

We would be glad to hear your suggestions or ideas for improving our robot.

BTom

Mykolas Juraitis

theotherlonestar

Dan Royer
