Tipper

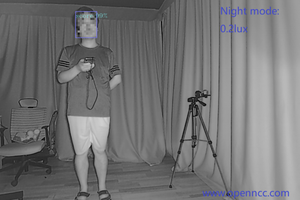

Tipper predicts, in real time, whether a pitch will be in or out of the strike zone. A green light in the batter's peripheral vision signals a pitch in the strike zone; a red light signals one outside it.

A higher-resolution video is available in the Media section below.

How It Works

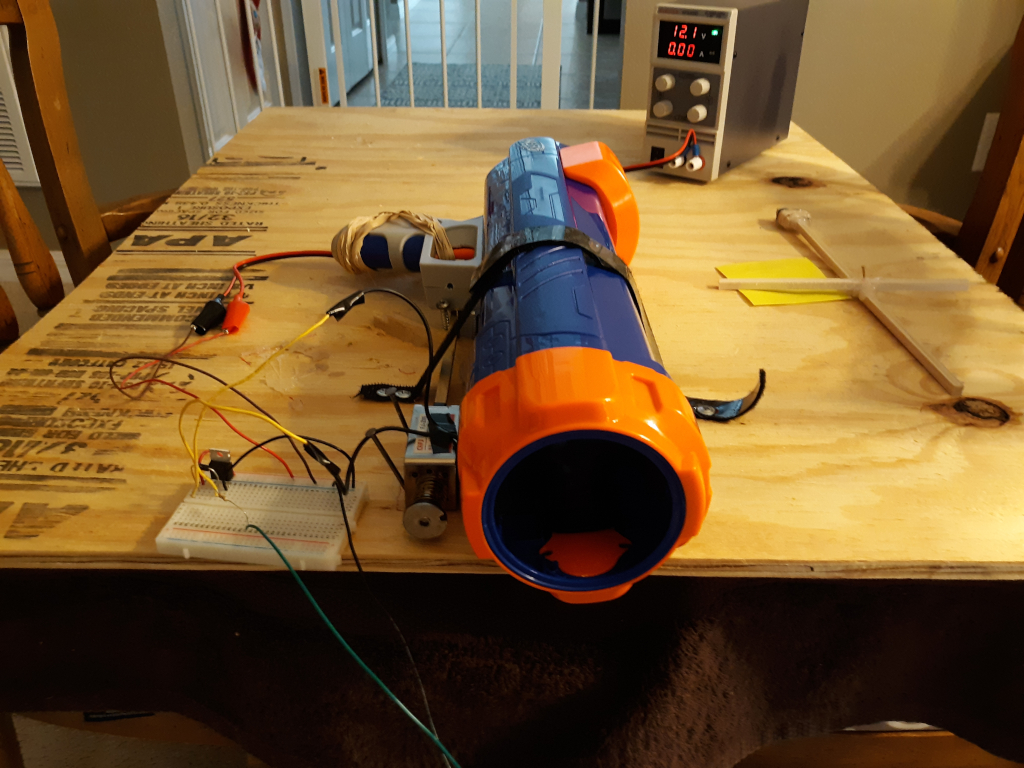

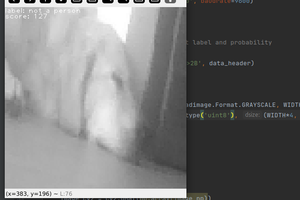

A modified Nerf tennis ball launcher is fired programmatically by a solenoid. A 100 FPS camera pointed at the launcher captures two successive images of the ball early in its flight.
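
For a sense of the timing involved, the sketch below works out the interval between two successive frames and how far the ball travels in that interval. The 100 FPS figure comes from the description above; the ball speed is a hypothetical placeholder, not a measurement from this project.

```python
def frame_interval_s(fps: float) -> float:
    """Time between two successive frames, in seconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


def travel_between_frames_m(speed_m_per_s: float, fps: float) -> float:
    """Distance the ball covers between two successive frames, in meters."""
    return speed_m_per_s * frame_interval_s(fps)


if __name__ == "__main__":
    fps = 100.0           # camera frame rate from the project description
    assumed_speed = 10.0  # m/s, hypothetical launcher speed (assumption)
    print(f"frame interval: {frame_interval_s(fps) * 1000:.1f} ms")
    print(f"travel between frames: {travel_between_frames_m(assumed_speed, fps):.2f} m")
```

The displacement between the two frames is what lets a model infer direction as well as position, which is presumably why two images are captured rather than one.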

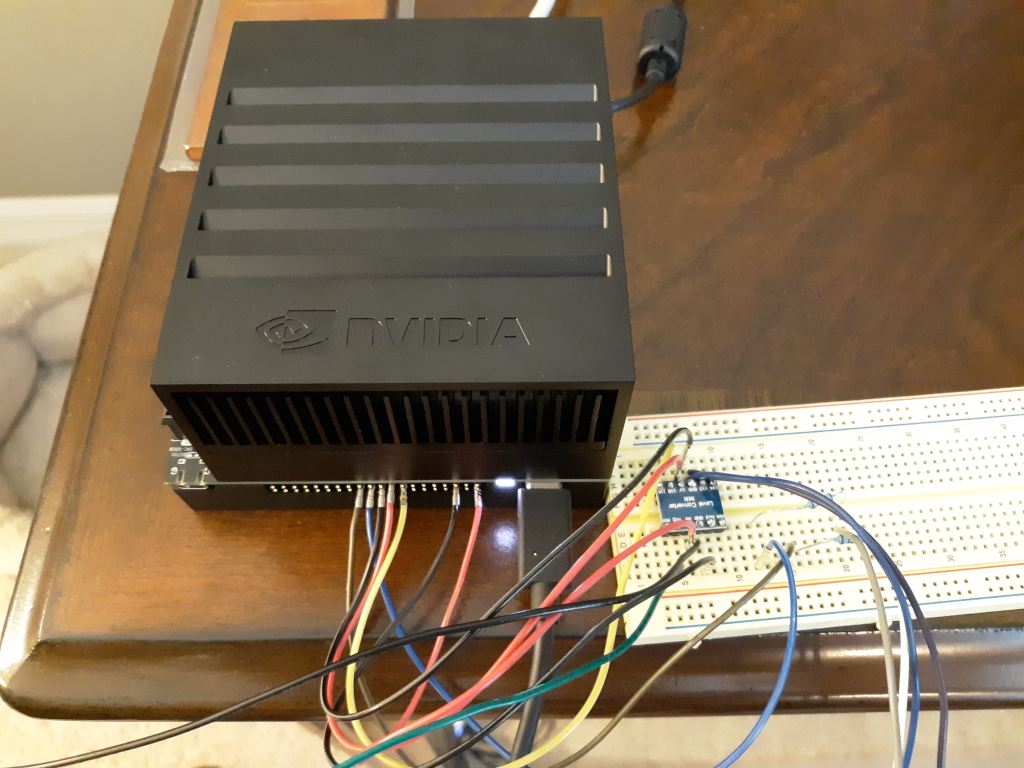

A convolutional neural network running on an NVIDIA Jetson AGX Xavier classifies these images using a model built during the training phase of the project. If the pitch is classified as in the strike zone, a green LED on a pair of glasses, positioned in the wearer's peripheral vision, is lit. If the ball is predicted to be out of the strike zone, a red LED is lit instead.
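
The mapping from prediction to LED could be sketched roughly as follows. The pin numbers and the `set_pin` callable are hypothetical stand-ins for the real GPIO wiring, which is not documented here; the class names `strike` and `ball` follow the training data folders.

```python
from typing import Callable, Dict

# Hypothetical pin numbers; the real wiring is not given in this write-up.
GREEN_PIN = 11
RED_PIN = 13


def led_states(predicted_class: str) -> Dict[int, bool]:
    """Return the desired on/off state of each LED pin for a prediction."""
    if predicted_class not in ("strike", "ball"):
        raise ValueError(f"unknown class: {predicted_class!r}")
    is_strike = predicted_class == "strike"
    return {GREEN_PIN: is_strike, RED_PIN: not is_strike}


def signal_batter(predicted_class: str, set_pin: Callable[[int, bool], None]) -> None:
    """Drive both LEDs through a caller-supplied pin writer."""
    for pin, on in led_states(predicted_class).items():
        set_pin(pin, on)


if __name__ == "__main__":
    # Stand-in for a GPIO write so the sketch runs without hardware.
    signal_batter("strike", lambda pin, on: print(f"pin {pin} -> {'HIGH' if on else 'LOW'}"))
```

Passing the pin writer in as a function keeps the decision logic testable on a machine without GPIO hardware.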

Media

See it in action: YouTube

The ball launcher. The rubber bands remove some of the force required to pull the trigger, giving the solenoid an assist. The trigger return spring was also removed for the same reason.

Software

Training

A CNN was built using PyTorch. The model was kept as small as possible because inference times on the order of tens of milliseconds are required.
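
One way to reason about model size before training is to tally convolution parameters and feature map sizes by hand. The sketch below does this with the standard convolution output-size formula. The layer stack and the 3-channel input are assumptions for illustration only, not the project's actual architecture; the 640x480 input size matches the camera resolution used here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Conv:
    out_channels: int
    kernel: int
    stride: int = 1
    padding: int = 0


def conv_out(size: int, layer: Conv) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * layer.padding - layer.kernel) // layer.stride + 1


def summarize(
    in_channels: int, height: int, width: int, layers: List[Conv]
) -> Tuple[int, Tuple[int, int, int]]:
    """Return (total conv parameters, final (channels, height, width))."""
    params = 0
    channels = in_channels
    for layer in layers:
        # Weights plus one bias per output channel.
        params += layer.out_channels * channels * layer.kernel ** 2 + layer.out_channels
        height, width = conv_out(height, layer), conv_out(width, layer)
        channels = layer.out_channels
    return params, (channels, height, width)


if __name__ == "__main__":
    # Hypothetical layer stack, not the project's actual architecture.
    stack = [Conv(8, kernel=5, stride=2), Conv(16, kernel=3, stride=2)]
    print(summarize(3, 480, 640, stack))
```

Strided convolutions shrink the feature maps quickly, which is one common way to keep both parameter count and inference time down.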

Collection of training data was automated with capture_images.py.

Data should be structured as:

data/
    test/
        ball/
        strike/
    train/
        ball/
        strike/
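
A small helper along these lines can create the folder layout above or check that it is complete before training starts. This is a sketch using only the standard library; the function names are illustrative and are not part of capture_images.py or train.py.

```python
from pathlib import Path
from typing import List

SPLITS = ("train", "test")
CLASSES = ("ball", "strike")


def expected_dirs(root: Path) -> List[Path]:
    """All split/class folders the layout calls for."""
    return [root / split / cls for split in SPLITS for cls in CLASSES]


def create_layout(root: Path) -> None:
    """Create any missing folders; existing ones are left untouched."""
    for directory in expected_dirs(root):
        directory.mkdir(parents=True, exist_ok=True)


def missing_dirs(root: Path) -> List[Path]:
    """Folders from the expected layout that do not exist yet."""
    return [d for d in expected_dirs(root) if not d.is_dir()]


if __name__ == "__main__":
    missing = missing_dirs(Path("data"))
    if missing:
        print("Missing folders:", ", ".join(str(m) for m in missing))
    else:
        print("Dataset layout looks complete.")
```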

Training can then be started with the command:

python3 train.py

Inference

The inference script pitches a ball and captures images of it in flight. The images are classified by the CNN model and, based on the result, a green or red LED is lit on a pair of glasses worn by the batter.

To run it, cock and load the ball launcher. Then run:

python3 infer.py
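
The overall flow of a single pitch might be sketched as below. The four callables (`fire`, `capture_pair`, `classify`, `signal`) are hypothetical placeholders for the solenoid, camera, model, and LED code; this is not the contents of infer.py. The sketch also times the classify-and-signal step, since that is the part that has to finish within tens of milliseconds.

```python
import time
from typing import Callable, Sequence, Tuple


def run_pitch(
    fire: Callable[[], None],
    capture_pair: Callable[[], Sequence[object]],
    classify: Callable[[Sequence[object]], str],
    signal: Callable[[str], None],
) -> Tuple[str, float]:
    """Fire one pitch, classify it, and signal the batter.

    Returns the predicted label and the classify-plus-signal latency in seconds.
    """
    fire()                       # trigger the launcher
    frames = capture_pair()      # two successive frames of the ball in flight
    start = time.perf_counter()
    label = classify(frames)     # e.g. "strike" or "ball"
    signal(label)                # light the matching LED
    latency = time.perf_counter() - start
    return label, latency


if __name__ == "__main__":
    # Stubs so the sketch runs without any hardware attached.
    label, latency = run_pitch(
        fire=lambda: print("fire"),
        capture_pair=lambda: ["frame0", "frame1"],
        classify=lambda frames: "strike",
        signal=lambda lbl: print(f"LED for {lbl}"),
    )
    print(f"{label} in {latency * 1000:.3f} ms")
```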

Bill of Materials

- NVIDIA Jetson AGX Xavier

- USB camera capable of at least 100 FPS at 640x480

- 3V-5V logic level shifter

- Red and green LEDs

- Power MOSFET

- 45 Newton or greater solenoid

- Nerf dog tennis ball launcher

- 2 x Breadboard

- Glasses / sunglasses

- Miscellaneous copper wire

- Plywood, miscellaneous wood screws, wooden dowels, rubber bands, velcro, hot glue

Future Direction

To move this beyond the prototype stage, I'd like to run it on faster hardware. This would allow me to run a deeper model that can pick up on more subtle features and better classify a wider range of pictures. It would also allow me to feed higher-resolution images into the model, which could then factor in features like the spin of the ball. Those features cannot be resolved at the 640x480 resolution I need to use to keep processing fast enough on my current setup.

I would also like to experiment with other methods of reality augmentation. For example, instead of lighting up an LED, a ball in the strike zone could trigger a vibration in the right shoe, and out of the strike zone could trigger a vibration in the left shoe.

I'd also like to experiment with predicting more specific outcomes, e.g. low and away, high and tight. I imagine several LEDs in various locations around the glasses to give a quickly interpretable signal to the wearer.

About the Author

Nick Bild
