Whilst thinking about the results obtained and licking my LDR-inflicted wounds, I thought of a way to implement this object recognition system that would not involve fiddly, hard-to-measure intensity levels.

It occurred to me that I could change the intensity levels from 256 shades of grey to a binary 0/1: if the intensity is higher than 127, then 1, else 0. This way the pictures become pure black and white instead of shades of grey, and we have new implementation possibilities.
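The thresholding rule can be sketched in a few lines of Python. This is only an illustration: the function name `binarize` and the sample values are made up here, and only the threshold of 127 comes from the text.

```python
# Threshold taken from the text: intensities above 127 become 1, everything else 0.
THRESHOLD = 127

def binarize(pixels, threshold=THRESHOLD):
    """Map 8-bit grey levels (0-255) to binary 0/1 values."""
    return [1 if p > threshold else 0 for p in pixels]

# A made-up row of grey levels, not taken from MNIST.
sample_row = [0, 64, 127, 128, 200, 255]
binary_row = binarize(sample_row)
print(binary_row)
```

Note that 127 itself maps to 0, since the rule is strictly "higher than 127".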

Up until now we needed to measure an intensity, translate it into an analog signal (a voltage), compare it to a reference voltage and get a digital signal (in each split node: Yes/No, Left/Right, etc.).

Now we have a binary signal: we do not need to fiddle with intensity levels or analog signals, and we do not need voltage comparators.

Heaven.
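To make the point concrete, here is a minimal sketch of how a tree is walked once every split node only looks at one bit. The tree, the pixel indices and the labels are invented for illustration; this is not the tree fitted in this project.

```python
# An internal node is (pixel_index, subtree_if_0, subtree_if_1); a leaf is a digit label.
# Hypothetical toy tree: pixel indices and labels are NOT from the real model.
TOY_TREE = (2, (0, 7, 1), (3, 0, 8))

def classify(bits, node):
    """Walk the tree: each split is a plain 0/1 test, no threshold comparison needed."""
    while isinstance(node, tuple):
        pixel, if_zero, if_one = node
        node = if_one if bits[pixel] == 1 else if_zero
    return node

print(classify([0, 0, 1, 1], TOY_TREE))
```

Each split reduces to "is this pixel lit or not", which is the kind of decision a single transistor stage can make.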

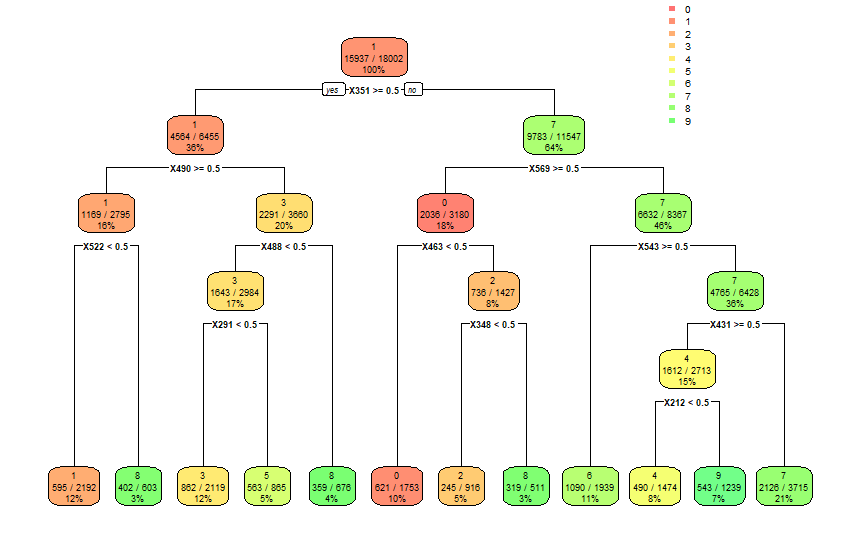

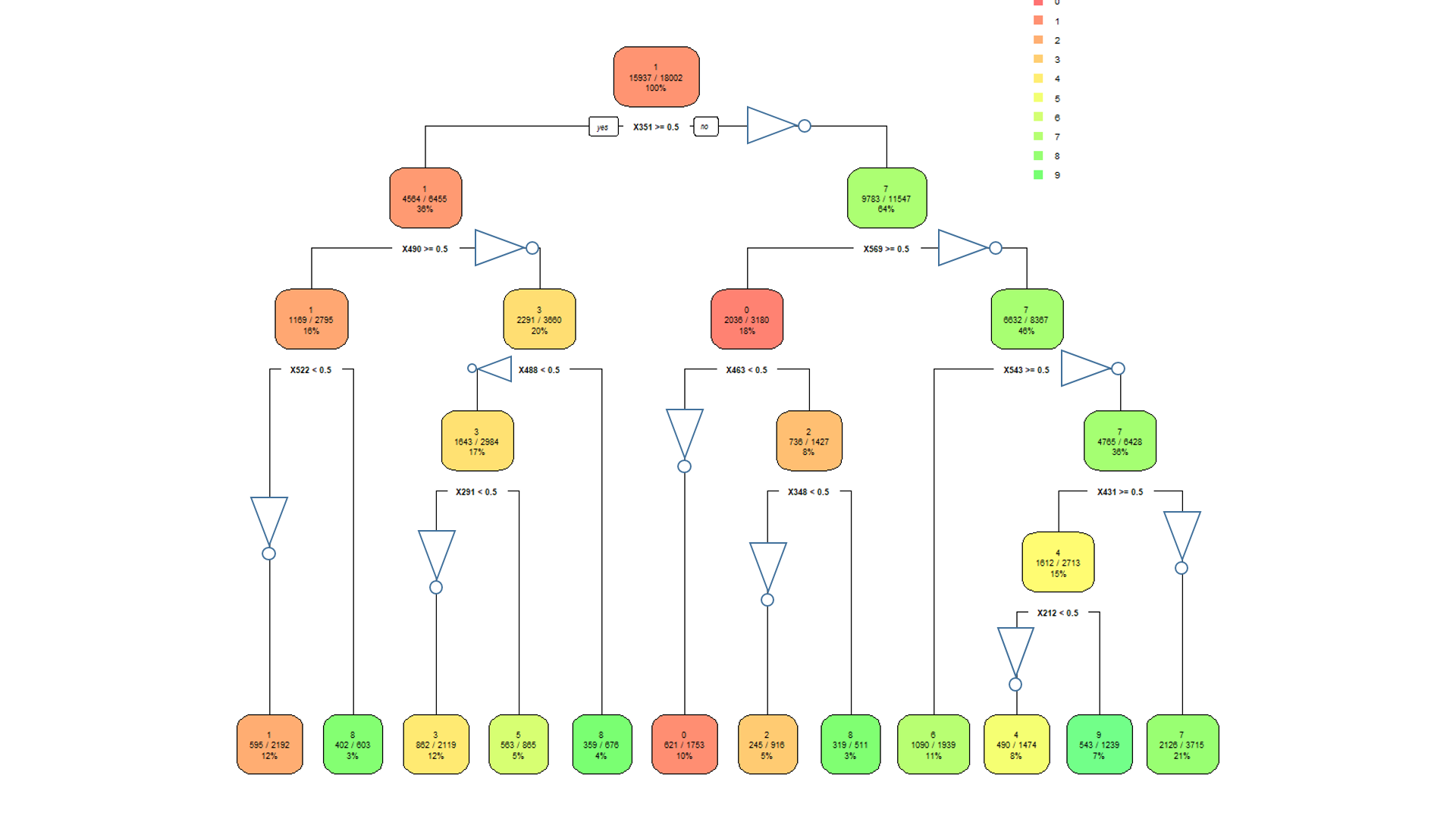

Below is a decision tree modeled on the same MNIST dataset, but using the new binary values instead of the intensity levels. Its accuracy is in line with the models obtained in the Minimum Model task, using all the default settings in RStudio and the rpart package.

The confusion matrix below shows that a lot of digits get misclassified. Bear in mind, though, that this model has not been optimized or iterated in any way. The columns represent the digit shown and the rows represent how they were classified.

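| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 757 | 4 | 1 | 28 | 6 | 51 | 149 | 131 | 32 | 23 |
| 1 | 6 | 1036 | 17 | 151 | 0 | 7 | 18 | 67 | 55 | 1 |
| 2 | 120 | 78 | 458 | 26 | 39 | 2 | 168 | 110 | 169 | 22 |
| 3 | 43 | 23 | 14 | 864 | 24 | 65 | 5 | 129 | 47 | 31 |
| 4 | 3 | 27 | 5 | 31 | 665 | 28 | 64 | 220 | 23 | 88 |
| 5 | 118 | 30 | 20 | 166 | 72 | 211 | 35 | 215 | 88 | 128 |
| 6 | 67 | 35 | 50 | 24 | 102 | 19 | 585 | 61 | 268 | 24 |
| 7 | 4 | 28 | 10 | 21 | 31 | 57 | 42 | 961 | 13 | 31 |
| 8 | 36 | 143 | 51 | 68 | 19 | 34 | 205 | 146 | 391 | 40 |
| 9 | 5 | 40 | 2 | 64 | 91 | 154 | 51 | 330 | 19 | 462 |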

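The overall accuracy was just over 53%, and the per-digit accuracy is shown in the table below.

| Digit | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.4779040 | 0.5866365 | 0.3362702 | 0.4736842 | 0.4323797 | 0.1406667 | 0.2966531 | 0.3686229 | 0.2116946 | 0.2876712 |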
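Both figures can be recomputed from the confusion matrix above. The sketch below is plain Python rather than the R used for the model. It assumes the per-digit value is correct / (row total + column total - correct), a Jaccard-style score rather than a plain per-digit hit rate. That formula is inferred from the numbers, not stated in the text, but it does reproduce the quoted values (for example 0.4779040 for digit 0 and 0.1406667 for digit 5).

```python
# Confusion matrix copied from the table above.
# Rows: how the digit was classified; columns: the digit shown.
confusion = [
    [757, 4, 1, 28, 6, 51, 149, 131, 32, 23],
    [6, 1036, 17, 151, 0, 7, 18, 67, 55, 1],
    [120, 78, 458, 26, 39, 2, 168, 110, 169, 22],
    [43, 23, 14, 864, 24, 65, 5, 129, 47, 31],
    [3, 27, 5, 31, 665, 28, 64, 220, 23, 88],
    [118, 30, 20, 166, 72, 211, 35, 215, 88, 128],
    [67, 35, 50, 24, 102, 19, 585, 61, 268, 24],
    [4, 28, 10, 21, 31, 57, 42, 961, 13, 31],
    [36, 143, 51, 68, 19, 34, 205, 146, 391, 40],
    [5, 40, 2, 64, 91, 154, 51, 330, 19, 462],
]

# Overall accuracy: correctly classified digits (the diagonal) over all digits.
total = sum(sum(row) for row in confusion)
correct = sum(confusion[d][d] for d in range(10))
overall_accuracy = correct / total

def per_digit_score(matrix, d):
    """Assumed formula: correct / (row total + column total - correct)."""
    hits = matrix[d][d]
    row_total = sum(matrix[d])
    col_total = sum(row[d] for row in matrix)
    return hits / (row_total + col_total - hits)

scores = [per_digit_score(confusion, d) for d in range(10)]

print(f"overall accuracy: {overall_accuracy:.4f}")
for digit, score in enumerate(scores):
    print(digit, f"{score:.7f}")
```

Because this score penalizes both the digits that were missed and the digits wrongly assigned to a class, it comes out lower than a simple "fraction of 5s recognized as 5" would.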

This change brings a huge potential improvement to the implementation:

- There is no need to calibrate the LDRs; the change in resistance between a 0 and a 1 is enough to give a binary output.

- There is no need for voltage comparators; transistors wired as NOT gates are all that is needed.

The parts list for this model becomes:

- 11 LDRs

- 11 NOT gates (each can be built using 1 transistor and 2 resistors)

Adding complexity

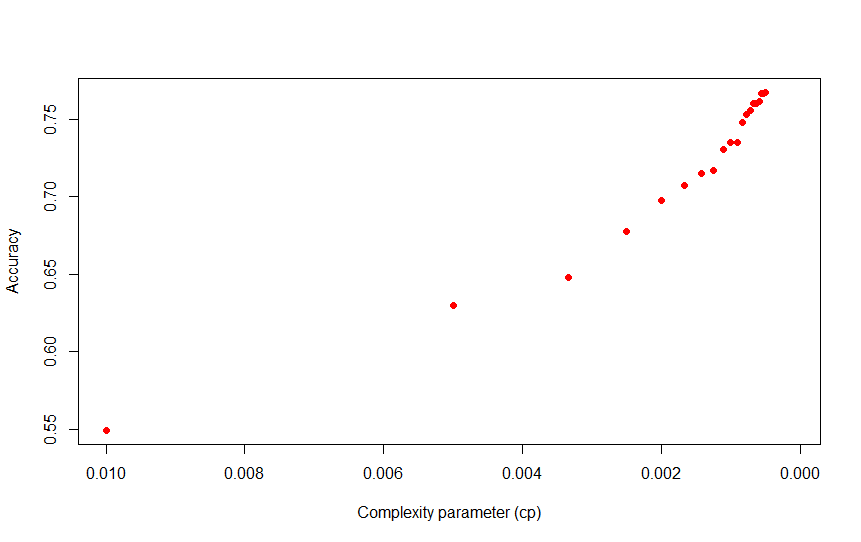

By changing the complexity parameter (cp), we can increase the accuracy significantly, at the cost of model complexity. The plot shows the increase in accuracy as we reduce the cp value.

As cp decreases, the number of splits increases. A model with a cp of 0.005, for example, makes a sizeable jump in accuracy to 62 %, with a still manageable 24-node tree.

A step down to a cp of 0.001 increases accuracy to a respectable 74 %, with a 91-node decision tree.

There's a clear trade-off between complexity and accuracy, though more specific optimizations could potentially be carried out in order to obtain a better accuracy without a high complexity penalty.
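To put a rough number on that trade-off, the two models quoted above can be compared directly. This is simple arithmetic on the figures from the text, written in Python. The variable names are mine.

```python
# (cp, accuracy in %, number of nodes) as quoted in the text.
models = [(0.005, 62, 24), (0.001, 74, 91)]

(cp_a, acc_a, nodes_a), (cp_b, acc_b, nodes_b) = models

extra_nodes = nodes_b - nodes_a      # additional nodes in the larger tree
extra_accuracy = acc_b - acc_a       # accuracy gained, in percentage points
gain_per_node = extra_accuracy / extra_nodes

print(f"cp {cp_a} -> {cp_b}: +{extra_accuracy} points for +{extra_nodes} nodes "
      f"({gain_per_node:.2f} points per extra node)")
```

Going from 24 to 91 nodes buys 12 percentage points, so each extra node contributes well under a quarter of a point at this end of the curve.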

Conclusion

We have reached a solution that could allow us to implement a really simple object recognition system using readily available components.

The accuracy, at a respectable 75 % plus, could be considered within the goals of the project if we're willing to live with the complexity level.

As a proof of concept, this project leaves room for improvement through more complex decision trees, and could replace more elaborate, slower and less power-efficient systems in object recognition applications.
