The goal of this task is to look into the future and determine other potential ML algorithms that could be implemented using discrete components.

Cats vs Dogs

MNIST digit recognition was nice to start with, but it's kind of the "hello world" of object recognition. Hence in the last log I focused a little bit on what could be an interesting challenge as a goal to bring Discrete component object recognition to the masses.

An appealing idea was to get the discrete components to tell apart cats versus dogs. That would have been perfect, so I set sail to try and have a decision tree tell apart cats and dogs.

The dataset was the cats vs dogs dataset from Kaggle, imported into R using the imageR package to crop the pictures square, resize them all to 100x100 pixels and reduce the RGB channels to a single grayscale intensity scale.

The images were nowhere near as pretty as the original, but I could tell apart a cat from a dog without much effort, so I expected the algorithms to do the same.

The decision tree was trained using the rpart R package and the accuracy was calculated using the same metrics used in the previous logs. In short, the number of identified cats was divided by the total of (identified cats + false cats + false dogs).

The accuracy results were short of appalling, no matter how complex the decision tree was made or how large the training set used.

Confusion matrix

cat dog

cat 1034 566

dog 827 773

Accuracy

Cats Dogs

0.4260404 0.3568790

So after looking at the pictures for a while I realised that DT were never really going to cut this, no matter how complex they were since the cats were always in different places in the image, with many other artifacts appearing around them. So I thought, this called for random forests (RF).

RF should address all of my issues, they'd build trees in different places around the image and get a better weighted response. Nevertheless, if this was indeed to be a Discrete Component affair, then I had to try and limit the size of the model. The R caret package has a standard random forest size of 500 individual trees. There is no way that is going to be built no matter how many years I had to stay at home. So I tried first with 10 trees, then 20, then 50, then 100 and then I realised this was also not going to fly.

The accuracy was indeed better, but not really the improvement I was expecting.

Confusion matrix

cat dog

cat 1043 557

dog 652 948

Accuracy

Cats Dogs

0.4631439 0.4394993

At this point I started to read about tree depth, impact of number of trees and realised that I needed a lot more trees and nodes to make this work. Cats and dogs was not going to be easy.

Nevertheless it gave me an idea. What if I could implement more trees in the MNIST model, whilst keeping the number of nodes reasonably low?

MNIST was not so boring after all

So I came back to the MNIST dataset and tried to test the null hypothesis:

"For the same overall number of nodes, decision trees have the same accuracy as random forest models"

I started with a random forest with a single decision tree, maximum 20 nodes. The accuracy results were:

0 1 2 3 4 5 6 7 8 9

0.4165733 0.5352261 0.3046776 0.2667543 0.3531469 0.0000000 0.2709812 0.3712766 0.1717026 0.2116372

Not pretty, but in line with I had already obtained in the minimum model using 10000 records.

So, with the benchmark set, I trained a random forest with two decision trees, maximum 10 nodes each. And the results were:

0 1 2 3 4 5 6 7 8 9

0.4800394 0.2957070 0.2172073 0.2161445 0.2116564 0.1522659 0.1727875 0.1840206 0.1464000 0.1265281

Surprise! For most digits, the random forest fared much worst than the single tree. There goes my null hypothesis.

I wouldn't give up so easily so I increased the number of nodes and trees with more or less the same results.

Only once I really increased the number of nodes to 1000 did I notice a difference between the two algorithms. For a single 1000-node tree the accuracy was:

0 1 2 3 4 5 6 7 8 9

0.6784969 0.7598280 0.5159106 0.4412485 0.4515939 0.4144869 0.6269678 0.5813234 0.3870130 0.4326037

For a random forest of 20 trees with maximum 50 nodes each, total 1000 nodes as in the above model, the accuracy was:

0 1 2 3 4 5 6 7 8 9

0.8503351 0.7897623 0.6440799 0.5855583 0.6387727 0.4900605 0.7507225 0.6986129 0.5977742 0.5315034

This is a significant improvement for all the digits, but at this complexity level, it's kind of difficult to build a discrete component system.

An interesting idea was, maybe the fact that we have a lot of trees helps. What would happen if I use 50 trees with 20 nodes max each, I'd still use 1000 nodes total? The answer was kind of disappointing. The accuracy was actually worse than having 20 trees and 50 nodes, but still better than the single tree.

In the end, the null hypothesis was destroyed. Twice.

For a small number of nodes, decision trees are better than random forests. For a lot of nodes, random forests trump decision trees.

Schroedinger's cats and dogs

I was also very disappointed when I came to the realisation that at least half of the cats and dogs in these pictures were dead. The kaggle dataset was 6 years old. I'd safely assume the pictures to be at least as old as the dataset and considering the life expectancy of cats and dogs, we're well past the half life of the dataset.

The future

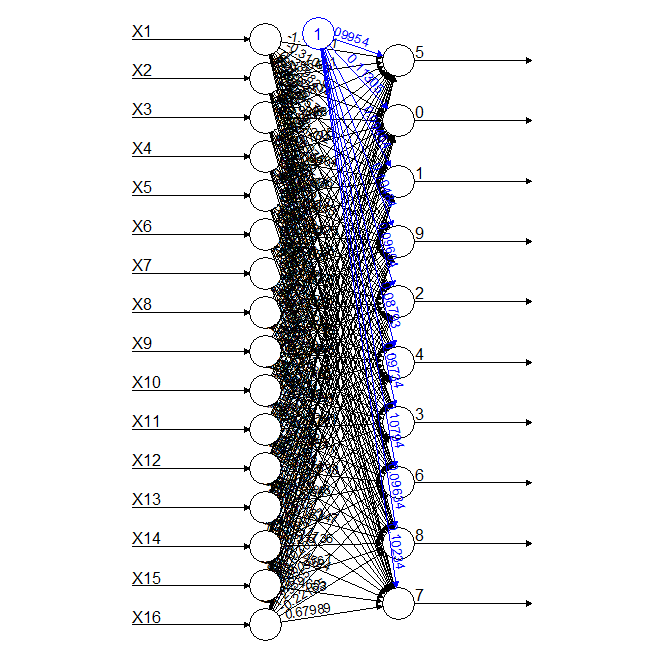

Well, I was wondering, random forests are a no go. Decision trees don't really cut it. Why not neural networks (NN)?

As far as I could remember NNs tend to have multiplication and additions, that's all. Could this be implemented using discrete components? Below is an example of a NN accepting all 16-pixels from the 4x4 MNIST digital matrix.

So, let's assume we have the signals from the pixel sensors (LDRs). The signal would have been a 1 or a 0, or a higher voltage versus a lower voltage. Could I multiply this voltage by a constant (voltage) and add it to another voltage multiplied by a different constant (voltage)? This was a humbling moment for me since I realised how little I knew about the treatment of analog signals in general.

So, for those of you who also don't have an EE background, you can add voltages, and you can multiply them as well, all using discrete components.

We'd need a summing amplifier and an analog multiplier. So these are quite common components (still quite discrete if you ask me) and even some of the analog multipliers accept an offset, i.e. I could multiply and add in the same component.

I've just found myself the building blocks for a discrete component neural network.

This project is just getting started,

Discussions

Become a Hackaday.io Member

Create an account to leave a comment. Already have an account? Log In.