A few weeks ago I created a game that learns how to play itself using reinforcement learning (more detail here :game that learn how to play itself).

Although this was a fun and great learning experience, the AI making the decision to control the gamepad was directly collecting data in game and never felt like a real player .

This is why I decided to come back with this project . This time I wanted to make a standalone robot that can play without any connection to the game or the computer running the game .

I also wanted to lean more a computer vision a more specifically about convolution neural networks .

A few months prior I put my hand on a Nvidia jetson nano so I decided to build my robot around that . It was a great fit as it can use a picamera and has GPIOs which makes control of servos easy .

I started with the design as I wanted to have a bit of fun building something a bit funky looking . I decided to base the design of this robot on one of my favourite video game character, GLaDOS from the portal franchise (What could go wrong here) . I tried imagining how GLaDOS could have been born if she was real .

I ended up with something like this :

isn't she cute ?

The next phase was to create a game . I needed something simple . The goal of the game is to place the portal over the cake . Of course as soon as she gets it the game reset . The cake is a lie you can never get it !!!

The following step was to create and train the neural network . neural network training requires data, a lot of it if possible . Here the data would basically be an image of the game and its associated joystick position . There are only 9 joystick positions in this case, left, left up, up, right up....... and centre .

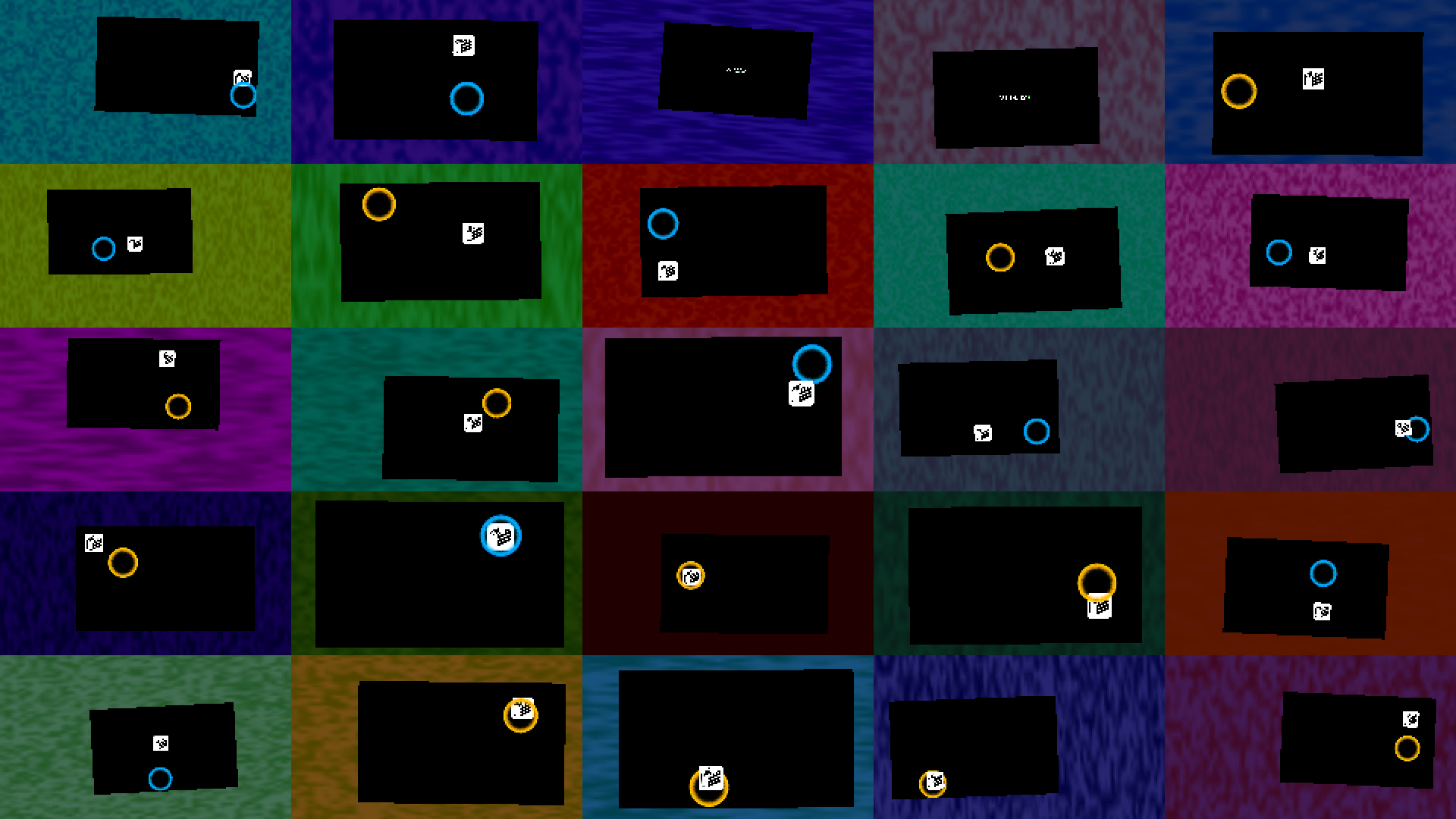

Data can be very time consuming to collect this is why I decided to generate synthetic data . instead of taking photos of my screen with the game running I decided to render digital images of the game .

I used random noise in the background hoping that it would force the neural network to learn to ignore it and only focus on what is inside the screen . I also randomly moved the screen so the robot would learn how to play without being perfectly align with the TV .

Using this technique I was able to generate half a million of labelled images in only a few hours .

After a bit of messing around with the training parameters and architecture of the network it was able to predict what action to take with about 90% accuracy on the synthetic data .

Unfortunately the performance on real images are pretty poor .

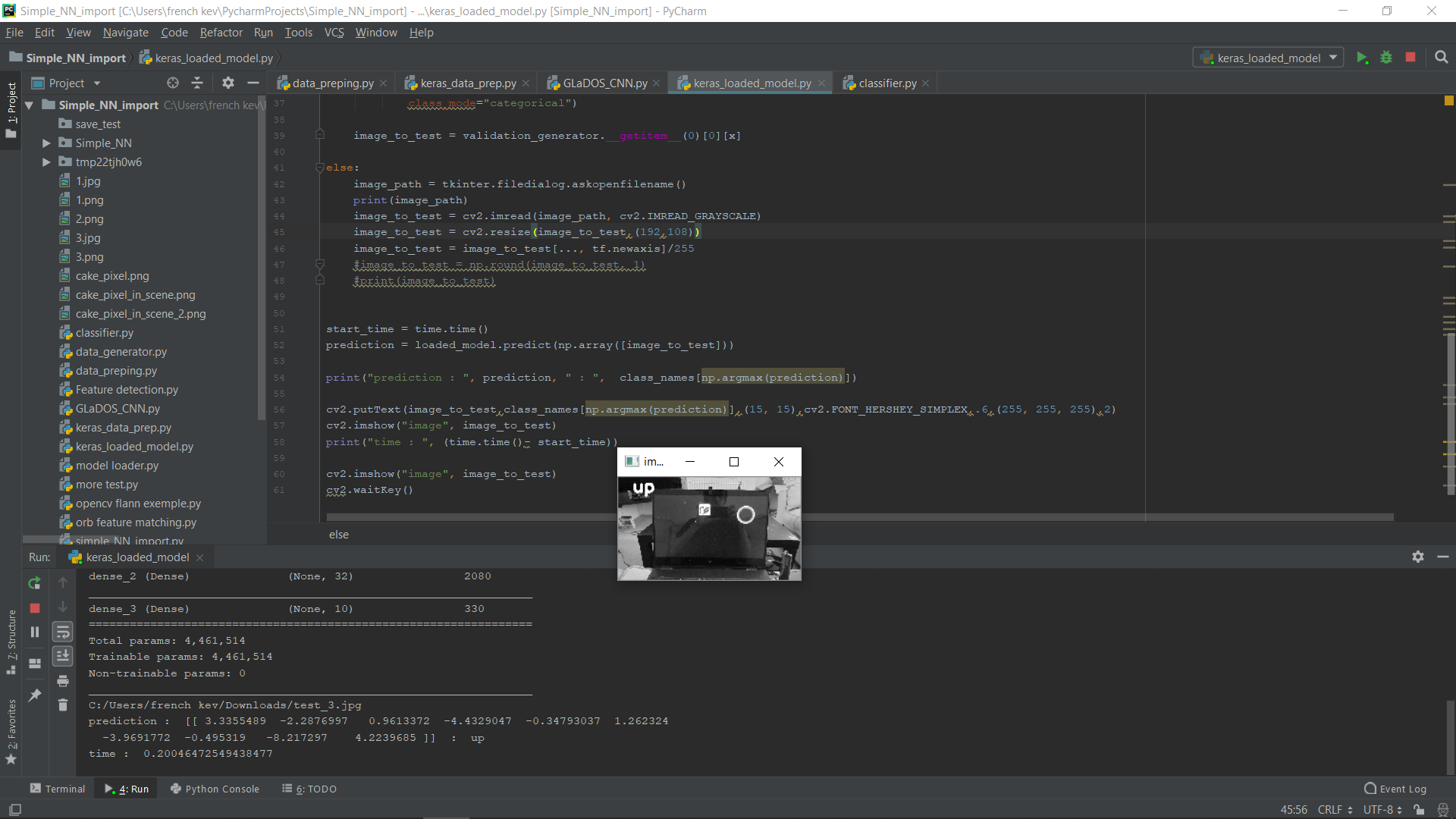

As you can see in the picture above the neural network predicts UP when it should pretty clearly be predicting LEFT or LEFT UP . I think this is due to my synthetic data being too simplistic with no reflection on the screen an a background way too uniform .

I then decided to collect data directly from the Pi camera mounted on the jetson Nano . For this I setup the Jetson Nano as a server and the PC running the game as a client . Once the game is started it connect to the server, the server then start taking picture and ask the game to change frame after each picture taken .

After inspecting the new data I realized that it could do with a bit of cleaning . Some images were taken between to frame and can have multiple target displayed which make them impossible to classify (even for a human) . Being a bit lazy I decided to retrain the neural network without doing any data cleaning . It still managed to reach 75% accuracy . Not great but I believe that the 'uncleaned' data is probably the cause .

I was curious to see how it would perform on the nano so I loaded the model and starting feeding it the video feed from the camera . It didn't disappoint as it ran at around 25FPS!

The other task I had to tackle was to control the arm actuating the gamepad joystick . I assumed I could just control the servos directly from the GPIO's of the jetson Nano . Unfortunately it was not the case . I decided that reusing the arduino from the previous project would be the easiest way to deal with this issue . The jetson Nano send some data to the arduino that move the servos .

After giving it a try with the badly performing network I ending up with something nearly working...

After collecting more good quality data and retrained the network the result is better but it still get stuck in loops from time to time .

I kept testing different network architectures and parameters and ended up with something that worked OK . I did try to torture test it a little bit . I am now pretty sure the day it become sentient I should expect retribution !

little french kev

little french kev