The Akai Fire is a very affordable MIDI controller designed specifically for FL Studio. I want to use it without FL Studio.

Why?

Because I like the layout and physical interface of the Fire, but I'm not a huge fan of FL Studio. In fact, I kinda dislike it. So now I have a MIDI controller designed only to work in FL Studio gathering dust. See this YouTube video for an intro to the Fire.

Introduction

The Fire interfaces with the host using class compliant USB MIDI and sends data to the host application as standard MIDI events. The host application controls the LEDs and OLED screen on the Fire with a combination of standard MIDI events and some custom system exclusive data. This was very well documented by Paul Curtis on Segger.com in a series of blog posts. Using his findings, I started experimenting with the Fire using a Teensy 3.6 but discovered that the USB host implementation on the Teensy was too slow for this project. Enter the RasPi 4.
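To make the "standard MIDI plus custom SysEx" split concrete, here is a minimal JavaScript sketch of building the pad-color SysEx message. The byte layout is my reading of Paul Curtis's Segger.com posts and should be treated as an assumption:

- manufacturer ID 0x47
- all-call 0x7F
- product ID 0x43
- a "write pad colors" command 0x65
- a 14-bit payload length
- then index/R/G/B quadruples, with pad 0 at the top-left

`padColorSysex` and `to7Bit` are hypothetical helper names.

```javascript
// Sketch: build a SysEx message that sets the color of one or more Fire pads.
// ASSUMED byte layout (from Paul Curtis's Segger.com write-up, verify on hardware):
//   F0 47 7F 43 65 <len MSB> <len LSB> (<pad> <r> <g> <b>)... F7
// 0x47 = Akai manufacturer ID, 0x7F = all-call, 0x43 = Fire product ID,
// 0x65 = "write pad colors" command. Every data byte must be 7-bit (0-127).
const SYSEX_START = 0xf0;
const SYSEX_END = 0xf7;
const FIRE_HEADER = [0x47, 0x7f, 0x43];
const CMD_SET_PAD_COLORS = 0x65;

// Clamp a color channel into the 7-bit range that SysEx data bytes allow.
const to7Bit = (v) => Math.min(127, Math.max(0, Math.round(v)));

function padColorSysex(pads) {
  const payload = [];
  for (const { index, r, g, b } of pads) {
    // The Fire grid has 64 pads (4 rows x 16 columns), indexed 0-63.
    if (!Number.isInteger(index) || index < 0 || index > 63) {
      throw new RangeError(`pad index out of range: ${index}`);
    }
    payload.push(index, to7Bit(r), to7Bit(g), to7Bit(b));
  }
  const len = payload.length; // 4 bytes per pad
  return [
    SYSEX_START,
    ...FIRE_HEADER,
    CMD_SET_PAD_COLORS,
    (len >> 7) & 0x7f, // payload length, upper 7 bits
    len & 0x7f,        // payload length, lower 7 bits
    ...payload,
    SYSEX_END,
  ];
}
```

For example, `padColorSysex([{ index: 0, r: 127, g: 0, b: 0 }])` yields the bytes `F0 47 7F 43 65 00 04 00 7F 00 00 F7`. The resulting array could then be handed to whatever node.js MIDI output library is in use (the `midi` npm package, for instance, exposes `sendMessage` on its output object).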

The RasPi 4 runs a fairly vanilla version of Raspberry Pi OS in headless mode. This provides robust, "driverless" USB host support for class compliant devices, which makes software development easier since I don't have to futz with USB implementations. Code is written in JavaScript and runs on node.js.

Goals

1. Emulate as much of the functionality that exists in FL Studio as possible

2. Have the ability to interface with a wide range of hardware

3. Perform well enough that it actually functions as a sequencer

Goal 1. Emulate as much of the functionality that exists in FL Studio as possible

The Fire has four modes of operation: Step, Note, Drum and Perform.

Step mode is used to toggle steps in the step view within FL Studio.

Each row corresponds to a given instrument and each column is a step in the sequence. This is the essence of a step sequencer and the most basic functionality I want to implement. However, I don't plan on implementing it exactly as it is in FL Studio. More on that later.
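A minimal sketch of the step-mode data model: one boolean per instrument row and step column. It assumes the Fire's 4x16 grid sends note numbers 0x36 through 0x75, numbered row by row from the top-left pad. That is my reading of Paul Curtis's notes, so verify it against the hardware. `createPattern`, `padNoteToCell`, `togglePad` and `rowsActiveAt` are hypothetical names.

```javascript
// Sketch of a step-mode pattern: one row per instrument, one column per step.
// ASSUMPTION: the Fire's 4x16 pad grid sends notes 54 (0x36) to 117 (0x75),
// numbered row by row starting at the top-left pad.
const ROWS = 4;
const STEPS = 16;
const FIRST_PAD_NOTE = 0x36;

function createPattern(rows = ROWS, steps = STEPS) {
  return Array.from({ length: rows }, () => new Array(steps).fill(false));
}

// Convert a pad's note number into the grid cell it represents.
function padNoteToCell(note) {
  const index = note - FIRST_PAD_NOTE;
  if (index < 0 || index >= ROWS * STEPS) return null; // not a grid pad
  return { row: Math.floor(index / STEPS), step: index % STEPS };
}

// Flip the step under the pressed pad. Returns the new on/off state,
// or null if the note did not come from a grid pad.
function togglePad(pattern, note) {
  const cell = padNoteToCell(note);
  if (!cell) return null;
  pattern[cell.row][cell.step] = !pattern[cell.row][cell.step];
  return pattern[cell.row][cell.step];
}

// Instrument rows that should trigger when the playhead is at `step`.
function rowsActiveAt(pattern, step) {
  return pattern.flatMap((row, i) => (row[step % row.length] ? [i] : []));
}
```

On each clock step, the sequencer would call `rowsActiveAt` and send a note for every returned instrument row.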

Note mode plays notes on the selected instrument in FL Studio. The color of each button shows whether its note is a white key, a black key, or the root of the scale. The layout of the notes can be selected from a list of scales, including a piano-style layout.

I want to recreate this functionality almost exactly as it exists in FL Studio.
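A rough sketch of the two pieces Note mode needs: mapping a pad to a note in a scale layout, and picking that pad's color role.

Several details here are hypothetical and not taken from FL Studio:

- the row offset of three scale degrees
- treating row 0 as the bottom row
- the color values

`keyRole`, `scaleNoteAt` and `ROLE_COLORS` are illustrative names.

```javascript
// Sketch: scale layout and key coloring for Note mode.
const MAJOR = [0, 2, 4, 5, 7, 9, 11];
const BLACK_KEY_PITCH_CLASSES = new Set([1, 3, 6, 8, 10]); // C#, D#, F#, G#, A#

// Classify a note for coloring: the scale root, a black key, or a white key.
function keyRole(midiNote, rootPitchClass) {
  const pc = ((midiNote % 12) + 12) % 12;
  if (pc === rootPitchClass) return 'root';
  return BLACK_KEY_PITCH_CLASSES.has(pc) ? 'black' : 'white';
}

// Placeholder colors (7-bit RGB), not FL Studio's actual palette.
const ROLE_COLORS = {
  root: { r: 0, g: 0, b: 127 },
  white: { r: 64, g: 64, b: 64 },
  black: { r: 0, g: 0, b: 0 },
};

// Note for a pad in a scale layout. rowFromBottom 0 is the lowest row (an
// assumption); each row up starts `rowOffset` scale degrees higher.
function scaleNoteAt(rootNote, intervals, rowFromBottom, col, rowOffset = 3) {
  const degree = rowFromBottom * rowOffset + col;
  const octave = Math.floor(degree / intervals.length);
  return rootNote + 12 * octave + intervals[degree % intervals.length];
}
```

A piano-style layout would swap `scaleNoteAt` for a fixed chromatic map, while `keyRole` stays the same.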

Drum mode is similar to note mode in that it just sends notes to an instrument, but it can use customized note layouts and it can send notes to multiple instruments within a single layout.

This is another mode I want to recreate almost exactly.
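A custom drum layout can be little more than a table from pad index to a target instrument and note. In this sketch the instrument is identified by a MIDI channel, which is how a single layout could address several instruments at once. The layout contents and `drumPadMessage` are hypothetical.

```javascript
// Sketch of a custom drum layout: pad index -> { channel (0-15), note }.
// The assignments below are placeholders, not a real FL Studio layout.
const drumLayout = new Map([
  [0, { channel: 9, note: 36 }],  // kick on MIDI channel 10 (0-based 9)
  [1, { channel: 9, note: 38 }],  // snare
  [2, { channel: 9, note: 42 }],  // closed hi-hat
  [16, { channel: 2, note: 48 }], // a different instrument on channel 3
]);

// Build the MIDI message for a pad press (velocity > 0) or release (velocity 0).
function drumPadMessage(layout, padIndex, velocity) {
  const target = layout.get(padIndex);
  if (!target) return null; // unassigned pad
  // Note-on with velocity 0 is treated as note-off by MIDI receivers.
  const status = 0x90 | (target.channel & 0x0f);
  return [status, target.note & 0x7f, velocity & 0x7f];
}
```

Swapping layouts then means replacing the `Map`, with no change to the code that handles pad presses.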

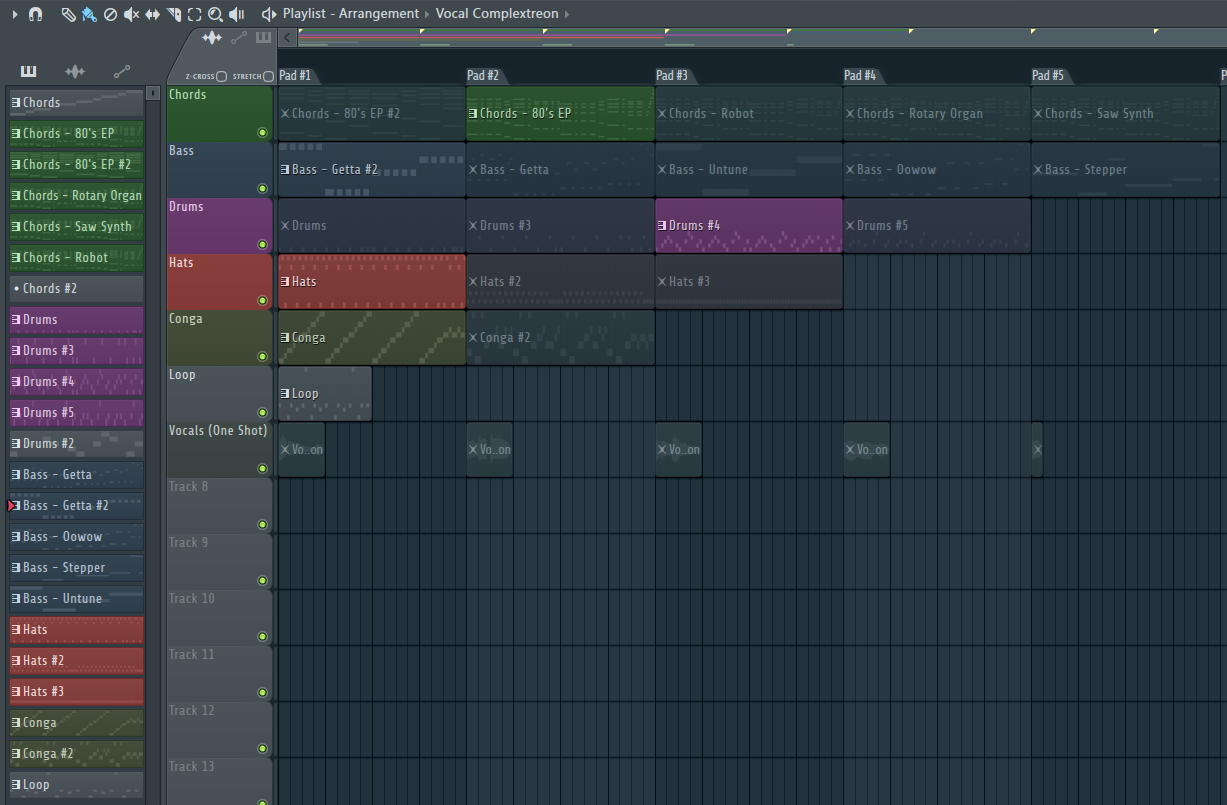

In Perform mode, the buttons in the grid correspond to patterns, loops, or samples in FL Studio's playlist view. Pressing a button on the grid will trigger the corresponding pattern, loop or sample. Depending on the exact configuration within the FL Studio project, pressing a button on a track that already has a pattern, loop or sample playing can do one of the following: stop the currently playing item and start the new one, queue the new item to play after the current one has finished, or play the new item at the same time as the current item. Samples can be configured to play immediately or to start on a particular beat.

I want to implement this mode a bit differently. The only thing that a button will trigger is a pattern for that track.
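A minimal sketch of that simplified behavior, assuming each grid row is a track and each column a pattern slot. A press therefore only ever selects a pattern for its own track. Pressing the already-playing slot to stop the track is a hypothetical choice, as are the names `createPerformState` and `pressPerformPad`.

```javascript
// Sketch of the simplified Perform mode: row = track, column = pattern slot.
const TRACKS = 4;
const SLOTS = 16;

function createPerformState(tracks = TRACKS) {
  // Pattern slot currently playing on each track, or null if stopped.
  return { playing: new Array(tracks).fill(null) };
}

// Handle a grid press: switch that track to the pressed pattern, or stop it
// if the pressed pattern is already playing (hypothetical behavior).
function pressPerformPad(state, padIndex) {
  const track = Math.floor(padIndex / SLOTS);
  const slot = padIndex % SLOTS;
  if (track < 0 || track >= state.playing.length) return state; // off-grid
  state.playing[track] = state.playing[track] === slot ? null : slot;
  return state;
}
```

Because each track holds at most one slot, two patterns can never play on the same track, which is the restriction described above.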

Goal 2. Have the ability to interface with a wide range of hardware

In order to interface with class compliant USB MIDI devices, we need to implement a way to send MIDI data to them. Using a menu system that the user interacts with entirely on the Fire, we can design a UI that allows the user to configure which device a track sends data to.
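One way such a menu could work: keep a per-track routing table and let the Fire's encoder move a highlight through the available outputs. This sketch assumes turning the encoder cycles the highlight and pressing it assigns the highlighted output. The class name, method names and device names are all hypothetical. In practice the output list would come from the MIDI ports the OS exposes.

```javascript
// Sketch: per-track output routing edited from an encoder-driven menu.
class RoutingMenu {
  constructor(outputs, trackCount) {
    this.outputs = outputs;                         // available output names
    this.routes = new Array(trackCount).fill(null); // track -> output name
    this.track = 0;                                 // track being edited
    this.cursor = 0;                                // highlighted output
  }

  // Encoder turned by `delta` detents: move the highlight, wrapping around.
  turn(delta) {
    const n = this.outputs.length;
    if (n === 0) return null;
    this.cursor = (((this.cursor + delta) % n) + n) % n;
    return this.outputs[this.cursor];
  }

  // Encoder pressed: assign the highlighted output to the current track.
  select() {
    if (this.outputs.length === 0) return null;
    this.routes[this.track] = this.outputs[this.cursor];
    return this.routes[this.track];
  }

  // Move to the next track and start the highlight at its current output.
  nextTrack() {
    this.track = (this.track + 1) % this.routes.length;
    const idx = this.outputs.indexOf(this.routes[this.track]);
    this.cursor = idx >= 0 ? idx : 0;
    return this.track;
  }
}
```

The sequencer would consult `routes[track]` when sending a track's notes, so the menu and playback code stay decoupled.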

Interfacing with modular hardware will be a bit different. The user should still be able to configure the output via the menu system, but there will need to be a hardware implementation that outputs CV, Gate, and Clock. Gate and Clock can probably be driven from the RasPi's GPIOs via level translation; pitch CV is an analog voltage, so it will likely need a DAC as well. The software that controls this output will need to run in parallel with the main sequencer to avoid timing conflicts.
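For pitch CV, the core arithmetic is converting a MIDI note to a 1 V/octave voltage and then to a DAC code. The hardware hasn't been chosen, so every constant below is an assumption:

- a 12-bit DAC
- a 0-10 V output range after amplification
- MIDI note 24 mapped to 0 V

The function names are hypothetical. Running this output code in parallel could plausibly use node.js's `worker_threads` module, but that is left open here.

```javascript
// Sketch: MIDI note -> 1 V/octave pitch CV -> DAC code.
// ASSUMED hardware: 12-bit DAC, 0-10 V output range, note 24 = 0 V.
const VOLTS_PER_OCTAVE = 1;
const BASE_NOTE = 24;
const DAC_BITS = 12;
const FULL_SCALE_VOLTS = 10;

function noteToVolts(note, baseNote = BASE_NOTE) {
  // 12 semitones per octave, so each semitone is 1/12 V.
  return ((note - baseNote) / 12) * VOLTS_PER_OCTAVE;
}

function voltsToDacCode(volts, bits = DAC_BITS, fullScale = FULL_SCALE_VOLTS) {
  const maxCode = (1 << bits) - 1;
  const code = Math.round((volts / fullScale) * maxCode);
  return Math.min(maxCode, Math.max(0, code)); // clamp to the DAC's range
}
```

With these numbers, one DAC step is about 2.44 mV and a semitone is about 83.3 mV, so roughly 34 codes per semitone. That leaves some headroom for calibration trimming.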

Goal 3. Perform well enough that it actually functions as a sequencer

Writing all this code in JS may end up being a poor choice since in the end it may not perform well enough, but whatever. I'm in charge and I'm using node.js.
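One thing that helps JS keep steady time is scheduling each tick against its ideal time since start, rather than chaining fixed `setTimeout` delays. Chained delays let jitter accumulate into drift. Here is a sketch using the global `performance.now()` (available in node.js 16 and later). It assumes 16th-note steps and the 24 PPQN MIDI clock. `stepIntervalMs`, `midiClockIntervalMs` and `startTicker` are hypothetical names. Individual ticks can still land a millisecond or so late; this only keeps the error from compounding.

```javascript
// Sketch: drift-resistant tick scheduling in node.js.
function stepIntervalMs(bpm, stepsPerBeat = 4) {
  return 60000 / bpm / stepsPerBeat; // 4 steps per beat = 16th notes
}

function midiClockIntervalMs(bpm) {
  return 60000 / (bpm * 24); // MIDI clock sends 24 pulses per quarter note
}

// Call onTick(tickIndex) at a steady rate. Each wait is measured against the
// ideal time of the next tick, so a late callback doesn't delay later ticks.
// Returns a function that stops the ticker.
function startTicker(intervalMs, onTick) {
  const start = performance.now();
  let tick = 0;
  let timer = null;
  let stopped = false;
  const loop = () => {
    if (stopped) return;
    onTick(tick);
    tick += 1;
    const due = start + tick * intervalMs;
    timer = setTimeout(loop, Math.max(0, due - performance.now()));
  };
  timer = setTimeout(loop, 0);
  return () => {
    stopped = true;
    clearTimeout(timer);
  };
}
```

At 120 BPM this gives a 125 ms step and a MIDI clock pulse roughly every 20.8 ms.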

Anyone feel like porting this project to native C?

AndrewMcDan
