In this post we continue exploring prior work on controlling a smart home for locked-in patients using the most universal controller of all: the brain. Although this technology is still relatively new, and we might not use it in this particular project, a lot of exploration has already been done, and it is worth mentioning and thinking about as the long-term future of this kind of control.

In this Part 2, I will walk you through the materials we used and the open source setup. We also cover speech recognition and locating the person within their apartment (i.e., which room they are in).

Design of the Smart Home

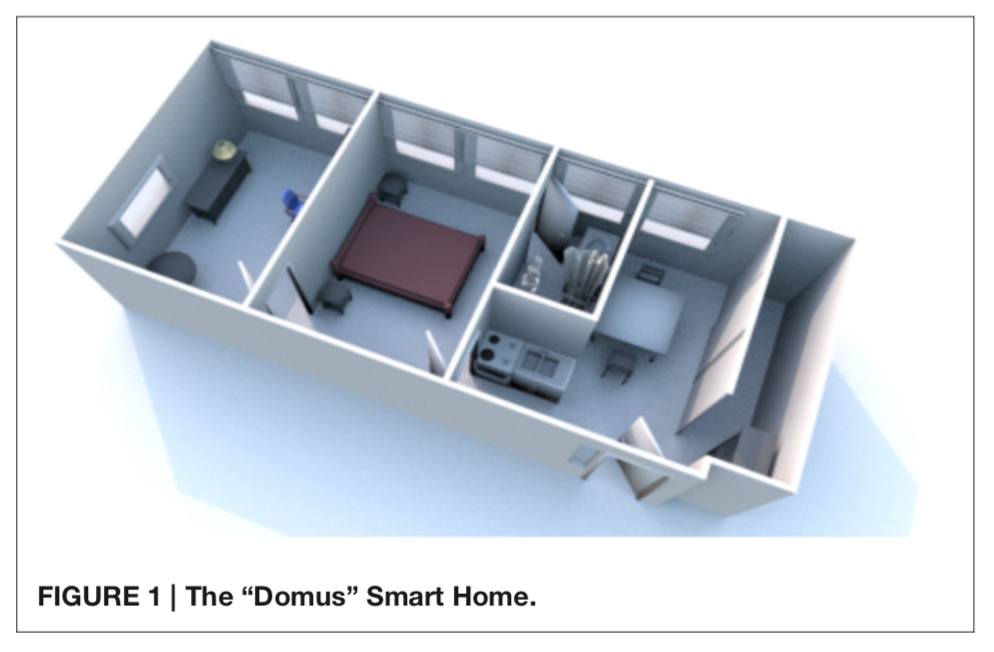

“Domus” Platform

We performed our study in the “Domus” smart home (http://domus.liglab.fr), part of the experimentation platform of the Laboratory of Informatics of Grenoble (Figure 1). “Domus” is a fully functional 40 m² flat with four rooms: a kitchen, a bedroom, a bathroom and a living room. The flat is equipped with 6 cameras and 7 microphones to record audio and video and to monitor experiments from a control room connected to “Domus.”

Appliances

The flat is equipped with a set of sensors and actuators using the KNX (Konnex) home automation protocol (https://knx.org/knx-en/index.php).

The sensors measure hot and cold water consumption, temperature, CO2 levels, humidity, motion, electrical consumption and ambient light levels. Each room is also equipped with dimmable lights, roller shutters (plus curtains in the bedroom) and connected power plugs, all of which can be actuated remotely.

For example, in this experiment we turn the kettle on or off by switching its power plug rather than by interacting with the kettle itself.

Twenty-six LED strips are integrated into the ceilings of the kitchen, the bedroom and the living room. Their color and brightness can be set for an entire room at once or for each strip individually, using the DMX protocol (http://www.dmx-512.com/dmx-protocol/).
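Setting color and brightness per strip or per room comes down to writing channel values into a DMX-512 universe, which carries up to 512 channels of 8-bit levels (0 to 255). Here is a minimal Python sketch of that buffer logic, not the code used in “Domus.” The patching is an assumption (each RGB strip occupies three consecutive channels starting at a 1-based address), and the strip names are hypothetical; actually transmitting the frame requires a DMX interface and is not shown.

```python
from dataclasses import dataclass
from typing import List, Tuple

DMX_UNIVERSE_SIZE = 512  # a DMX-512 universe carries up to 512 8-bit channels


@dataclass(frozen=True)
class LedStrip:
    # Assumed patching: R, G, B on three consecutive channels from start_address.
    name: str            # hypothetical strip name
    room: str
    start_address: int   # 1-based DMX address of the red channel


def set_strip(universe: bytearray, strip: LedStrip,
              rgb: Tuple[int, int, int], brightness: float = 1.0) -> None:
    """Write one strip's color, scaled by brightness (0.0-1.0), into the buffer."""
    if not 0.0 <= brightness <= 1.0:
        raise ValueError("brightness must be between 0.0 and 1.0")
    if not 1 <= strip.start_address <= DMX_UNIVERSE_SIZE - 2:
        raise ValueError("strip does not fit in the universe")
    for offset, level in enumerate(rgb):
        if not 0 <= level <= 255:
            raise ValueError("each color component must be 0-255")
        # DMX addresses are 1-based; the buffer index is 0-based.
        universe[strip.start_address - 1 + offset] = round(level * brightness)


def set_room(universe: bytearray, strips: List[LedStrip], room: str,
             rgb: Tuple[int, int, int], brightness: float = 1.0) -> None:
    """Apply the same color and brightness to every strip in one room."""
    for strip in strips:
        if strip.room == room:
            set_strip(universe, strip, rgb, brightness)
```

With two kitchen strips patched at addresses 1 and 4, `set_room(universe, strips, "kitchen", (255, 128, 0), 0.5)` writes the same half-brightness orange to channels 1 to 6, while `set_strip` on a single strip leaves the others untouched.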

The bedroom is equipped with a UPnP-enabled TV, located in front of the bed, that can play or stream video files and pictures.

Architecture

The software architecture of “Domus” is based on the open source home automation software openHAB (http://www.openhab.org). openHAB lets us monitor and control all the appliances in the flat from a single system that integrates the various protocols they use. It is built on the OSGi framework (https://www.osgi.org), as a set of bundles that can be started, stopped or updated while the system is running, without stopping the other components.

The system contains a repository of items of different types (switch, number, color, string), which stores each item's description and its current state (e.g., ON or OFF for a switch). Some items are virtual: they exist only in the system, or serve as an abstract representation of an existing appliance function. An item is bound to a binding, a specific OSGi bundle that implements the appliance's protocol, which allows the system to keep the item's state synchronized with the physical appliance's state. All appliance functions in “Domus,” such as power plug control, setting the color of the LED strips or audio/video multimedia control, are represented as items in the system.

The event bus is another important feature of openHAB. All events generated by the OSGi bundles, such as a change in an item's state or the update of a bundle, are reported on the event bus. Sending a command to change the state of an item generates a new event on the bus. If the item is bound to a physical object, the binding bundle sends the command to the appliance using the appropriate protocol, changing its physical state (e.g., switching the light on). Commands can be sent to an item through HTTP requests to the provided REST (Gallese et al., 1996) interface. openHAB also deploys a web server that lets the user build custom UIs from a simple description file listing the items to control or monitor (Figure 2).

The user can also create rules and scripts that react to bus events and generate new commands. Persistence services store the history of item states in log files, databases, etc.
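To make the relationship between items, bindings, the event bus and rules more concrete, here is a minimal in-memory model in Python. It is a simplified sketch, not openHAB's actual implementation: the class names, the event tuples and the item names are hypothetical, the bus log stands in for a persistence service, and the model assumes a command immediately becomes the item's new state (a simplification of how openHAB handles commands and state updates).

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical event shape: (event kind, item name, value)
Event = Tuple[str, str, str]
ITEM_TYPES = {"Switch", "Number", "Color", "String"}


class EventBus:
    """Delivers every published event to all subscribers (e.g. rules)."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[Event], None]] = []
        self.log: List[Event] = []  # stands in for a persistence service

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: Event) -> None:
        self.log.append(event)
        for handler in list(self._subscribers):
            handler(event)


class ItemRegistry:
    """Stores item types and states; bound items forward commands to a device."""

    def __init__(self, bus: EventBus) -> None:
        self.bus = bus
        self.types: Dict[str, str] = {}
        self.states: Dict[str, Optional[str]] = {}
        self.bindings: Dict[str, Callable[[str], None]] = {}

    def add(self, name: str, item_type: str,
            binding: Optional[Callable[[str], None]] = None) -> None:
        if item_type not in ITEM_TYPES:
            raise ValueError(f"unknown item type: {item_type}")
        self.types[name] = item_type
        self.states[name] = None  # no state known yet
        if binding is not None:  # virtual items have no binding
            self.bindings[name] = binding

    def send_command(self, name: str, command: str) -> None:
        if name not in self.types:
            raise KeyError(f"unknown item: {name}")
        self.bus.publish(("command", name, command))
        if name in self.bindings:
            self.bindings[name](command)  # drive the physical appliance
        # Simplification: the command becomes the new state right away.
        if self.states[name] != command:
            self.states[name] = command
            self.bus.publish(("state_changed", name, command))
```

In this model a rule is just a subscriber: a handler that calls `items.send_command("Kitchen_Light", "OFF")` whenever a virtual `Night_Mode` item changes to `ON` turns the light off, and only the binding callable would need to speak KNX or DMX.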

Control capabilities

A virtual item was created in the repository to represent the classification produced by the brain-computer interface (BCI) and speech recognition systems. A set of rules was also configured to trigger whenever this virtual item's state changed. Depending on the new state and the room where the user was currently located, the rule sent a command to the specific items controlling the physical appliances associated with each classification outcome (TV, kettle power plug, etc.). To update the virtual item, the BCI and speech programs communicated with openHAB through the provided REST API. After each classification, a single HTTP POST request containing the classification outcome as a string was sent to the REST interface. This changed the state of the virtual item and triggered the rule, allowing the user to control the desired appliance using their brain activity alone.
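The client side of this exchange and the rule's decision logic can be sketched in Python as follows. This is an illustration under assumptions, not the code used in the study: the host and port, the `BCI_Classification` item, the outcome strings (`cup`, `lamp`, `blinds`, `tv`) and the per-room item names are all hypothetical, and in “Domus” the rule itself ran inside openHAB rather than in the BCI program. The request follows openHAB's REST convention of sending a command by POSTing plain text to `/rest/items/<item name>`.

```python
import urllib.request
from typing import Dict, Optional, Tuple

OPENHAB_URL = "http://localhost:8080"  # assumption: openHAB on its default port
VIRTUAL_ITEM = "BCI_Classification"    # hypothetical name of the virtual item


def build_classification_request(outcome: str, base_url: str = OPENHAB_URL,
                                 item: str = VIRTUAL_ITEM) -> urllib.request.Request:
    """Build the POST that sends the outcome to the item as a plain-text command."""
    return urllib.request.Request(
        f"{base_url}/rest/items/{item}",
        data=outcome.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def send_classification(outcome: str) -> int:
    """Send the request to a running openHAB instance; returns the HTTP status."""
    with urllib.request.urlopen(build_classification_request(outcome), timeout=5) as resp:
        return resp.status


def target_item(outcome: str, room: str) -> str:
    """Hypothetical mapping: lights and blinds follow the user's current room."""
    if outcome == "lamp":
        return f"{room}_Lights"
    if outcome == "blinds":
        return f"{room}_Blinds"
    if outcome == "cup":
        return "Kitchen_Kettle_Plug"  # the kettle is switched via its power plug
    if outcome == "tv":
        return "Bedroom_TV"
    raise ValueError(f"unknown classification outcome: {outcome}")


def on_classification(outcome: str, room: str,
                      last_commands: Dict[str, str]) -> Tuple[str, str]:
    """Model of the rule: pick the target item and the command that toggles it."""
    item = target_item(outcome, room)
    previous: Optional[str] = last_commands.get(item)
    if outcome == "blinds":
        command = "DOWN" if previous == "UP" else "UP"
    else:
        command = "OFF" if previous == "ON" else "ON"
    return item, command
```

Keeping the room-dependent mapping in one function means the same outcome string can come from either the BCI or the speech recognizer; the sketch tracks the last command per item because each concept toggles its appliance.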

Figure 2. Visual stimuli representing each concept for BCI control. The cup toggles the kettle on or off, the lamp toggles the lights on or off, the blinds toggle the blinds up or down, and the TV toggles the TV on or off.
