I run an interactive live stream. I wear an old TV (with a working LED display) like a helmet, along with a backpack that has its own display. Twitch chat controls what is shown on the television screen and the backpack screen through chat commands. Together, Twitch chat and I go through the city of Atlanta, GA, spreading cheer.

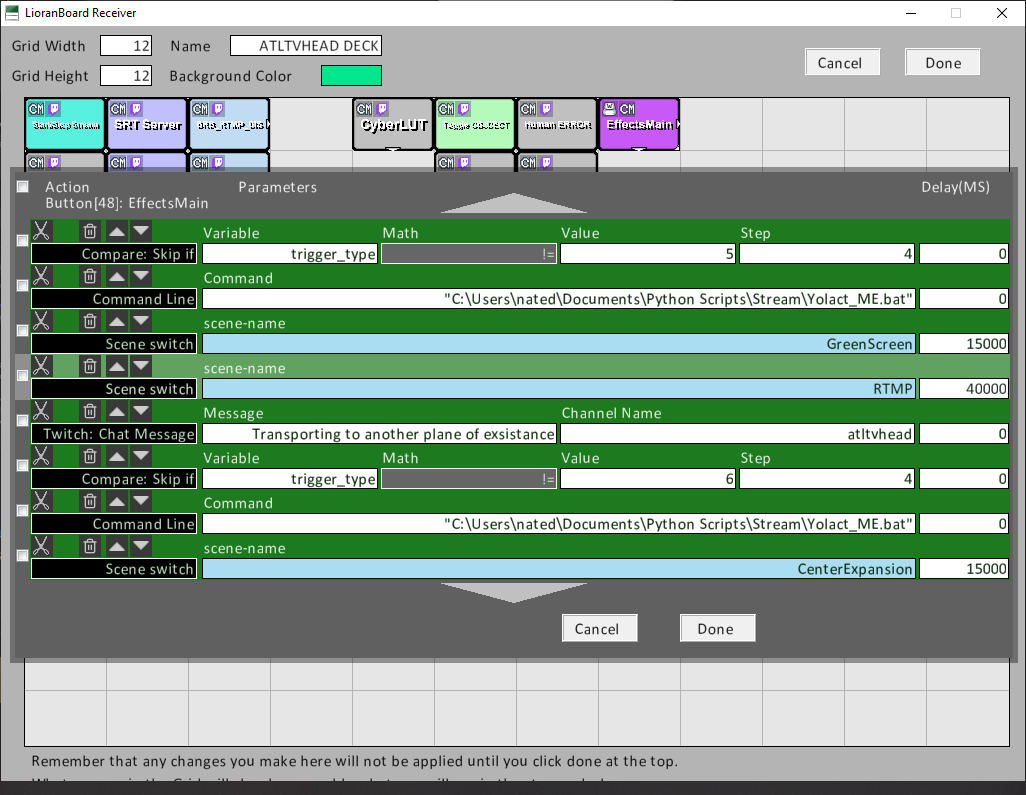

Over time, I have accumulated more than 20 channel commands for the TV display. Remembering them all, or even copy-pasting them, has become complicated and tedious. So it's time to simplify my interface to the tvhead.

What are my resources?

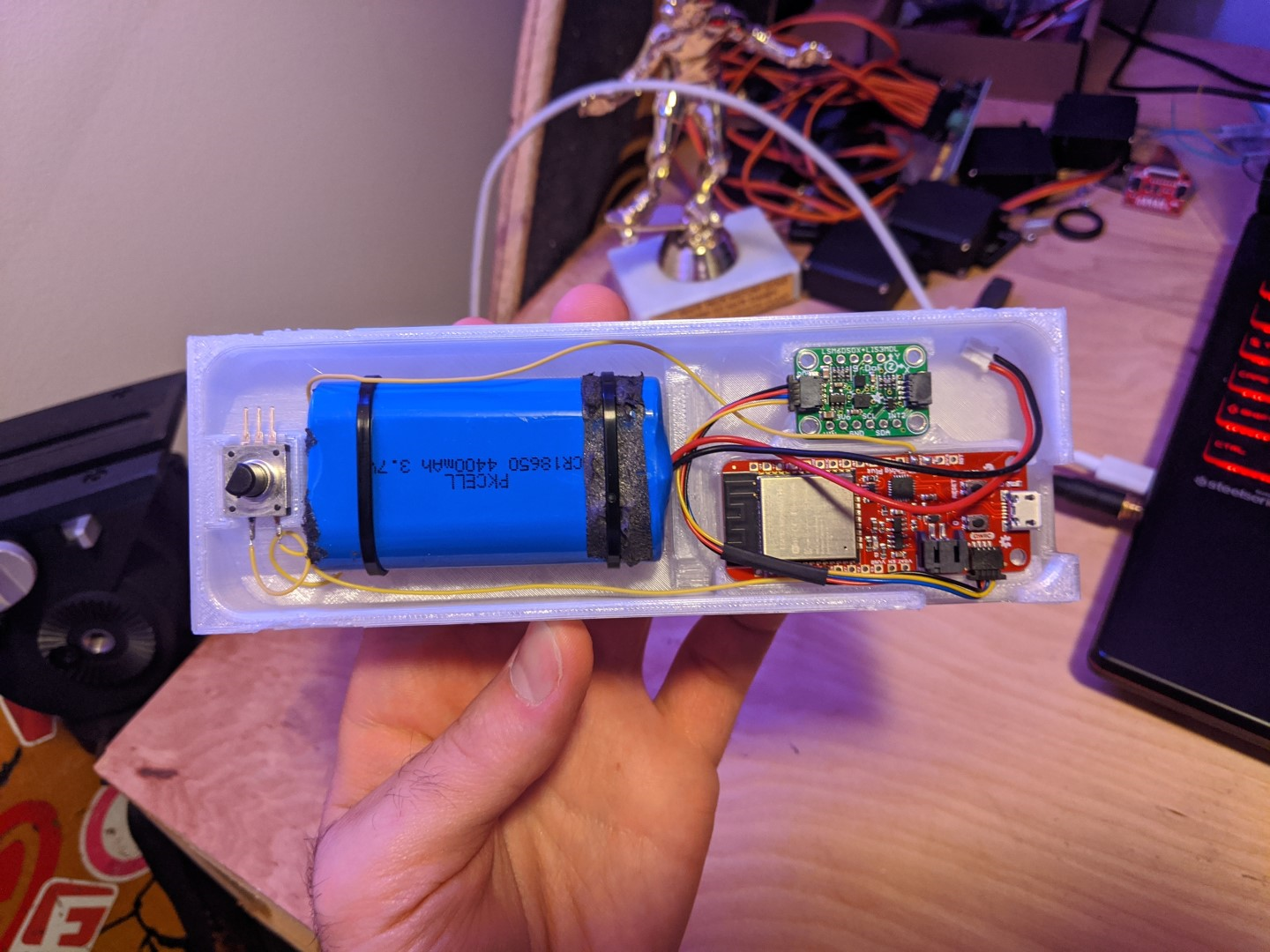

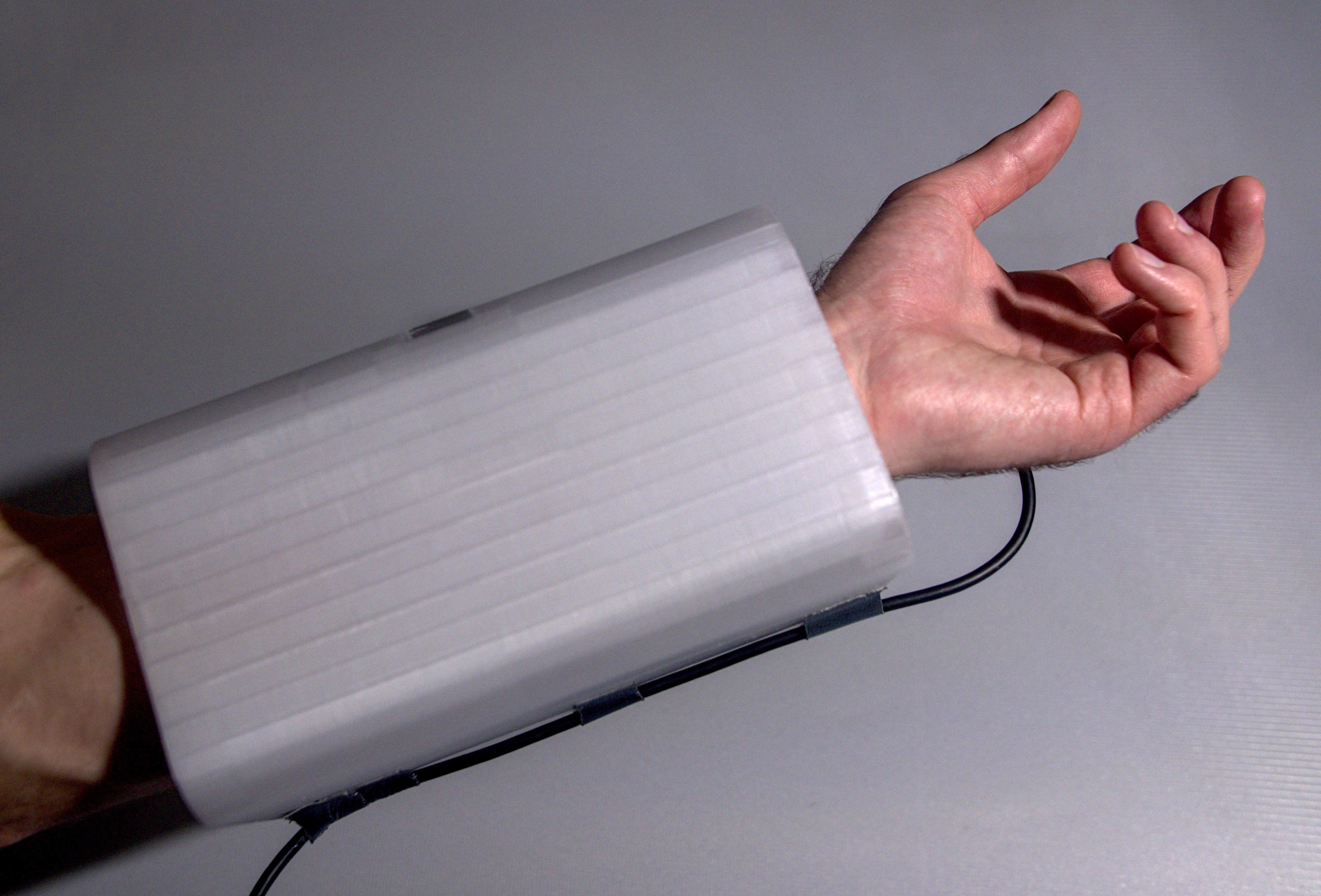

During the live stream, I am on rollerblades. My right hand holds the camera, and my left hand wears a high-five-detecting glove I built from a time-of-flight sensor and an ESP32. My backpack carries a Raspberry Pi 4, and I have a website with buttons that post commands in Twitch chat.

What to simplify?

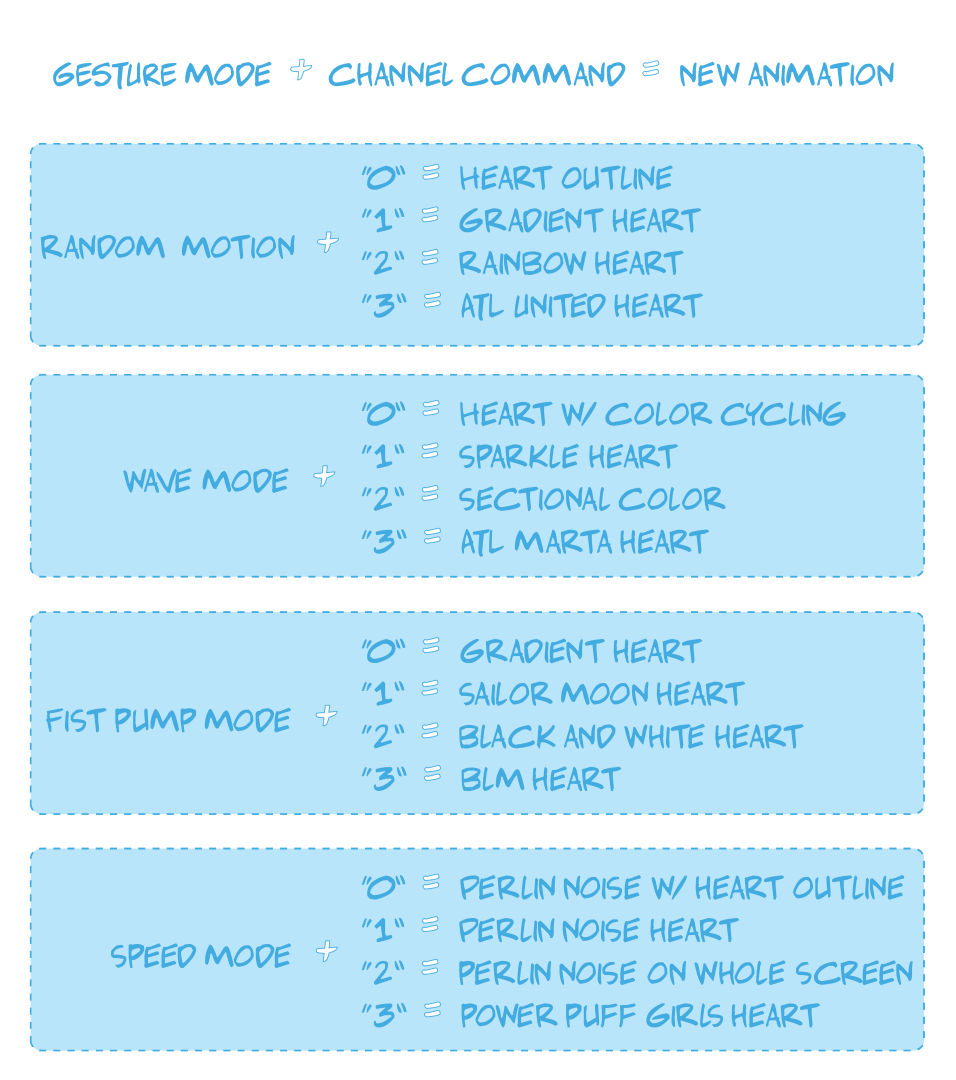

I'd like to simplify the channel commands and gamify it a bit more.

What resources to use?

I am going to change my high-five glove: remove the time-of-flight lidar sensor and instead feed the Raspberry Pi acceleration and gyroscope data, so that the Pi can infer which gesture my arm performed.
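To make the idea concrete, here is a rough sketch of how the Pi side could look. It is not my final design, just the general shape: buffer the incoming samples into a fixed-size window, reduce the window to a few features, and map a recognized gesture to a chat command. Everything specific here is an assumption for illustration: the window size, the `(ax, ay, az, gx, gy, gz)` sample layout, the thresholds, the gesture names, and the `!rainbow` / `!heart` commands are all hypothetical, and the `classify` function is a hand-written placeholder standing in for a trained model.

```python
from collections import deque
from statistics import mean

WINDOW_SIZE = 50  # samples per gesture window (assumed, roughly 1 s at 50 Hz)

# Hypothetical mapping from a recognized gesture to a chat command.
GESTURE_TO_COMMAND = {
    "fist_pump": "!rainbow",
    "wave": "!heart",
}


def extract_features(window):
    """Reduce a window of (ax, ay, az, gx, gy, gz) samples to
    the mean and peak-to-peak range of each axis (12 values)."""
    features = []
    for axis in zip(*window):  # iterate over the six axes
        features.append(mean(axis))
        features.append(max(axis) - min(axis))
    return features


def classify(features):
    """Placeholder rule standing in for a trained model.
    Thresholds are made up, not measured."""
    az_range = features[5]   # peak-to-peak of the z acceleration
    gz_range = features[11]  # peak-to-peak of the z rotation rate
    if az_range > 15.0:
        return "fist_pump"
    if gz_range > 200.0:
        return "wave"
    return None


class GestureDetector:
    """Collects samples and returns a chat command once a full
    window matches a gesture, otherwise None."""

    def __init__(self, window_size=WINDOW_SIZE):
        self.buffer = deque(maxlen=window_size)

    def add_sample(self, sample):
        self.buffer.append(sample)
        if len(self.buffer) < self.buffer.maxlen:
            return None  # not enough data yet
        gesture = classify(extract_features(self.buffer))
        if gesture is not None:
            self.buffer.clear()  # avoid firing twice on the same motion
        return GESTURE_TO_COMMAND.get(gesture)
```

In use, each sample arriving from the glove would be passed to `add_sample`, and any non-`None` result would be posted to Twitch chat the same way the website buttons do today.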
