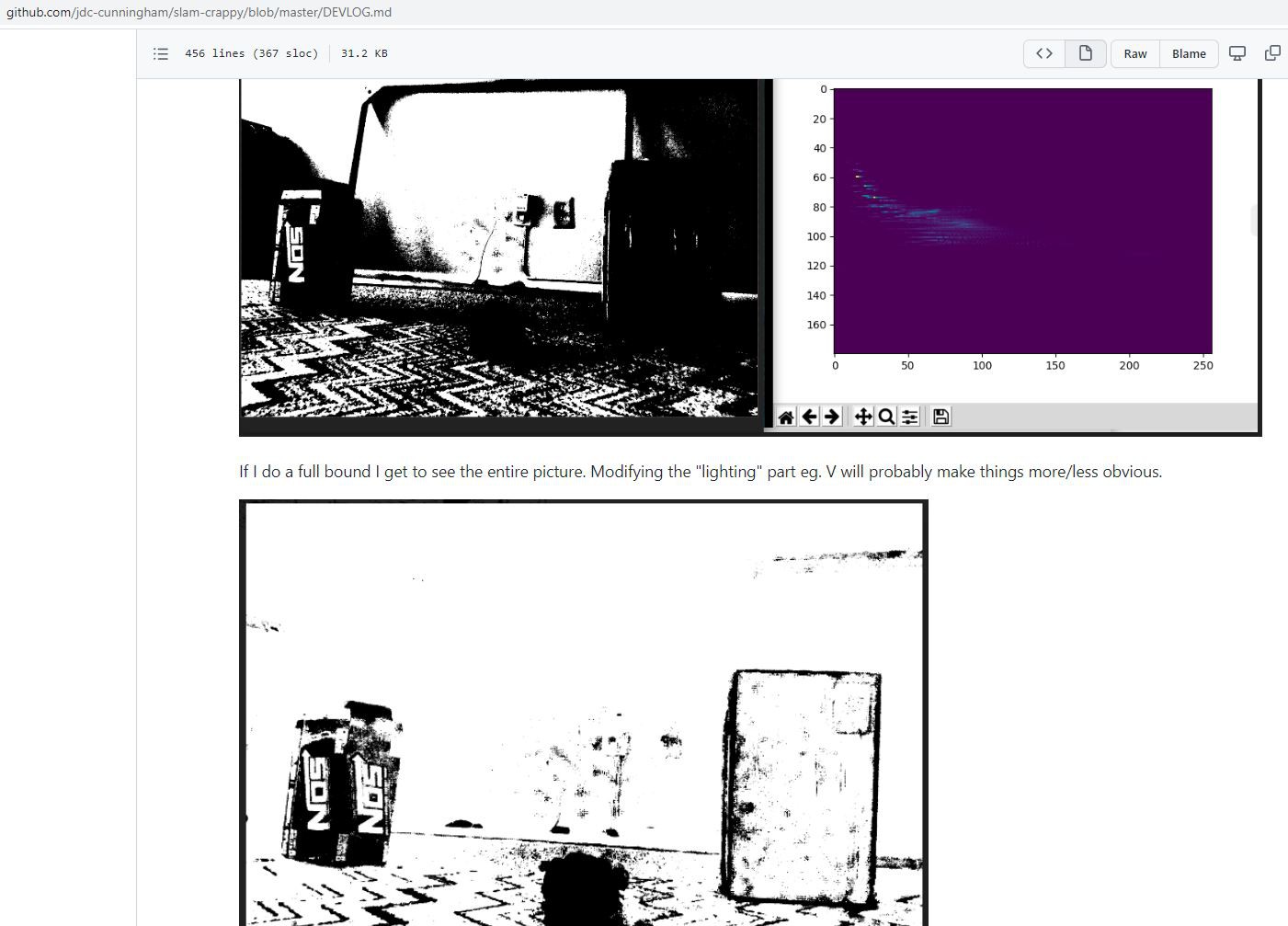

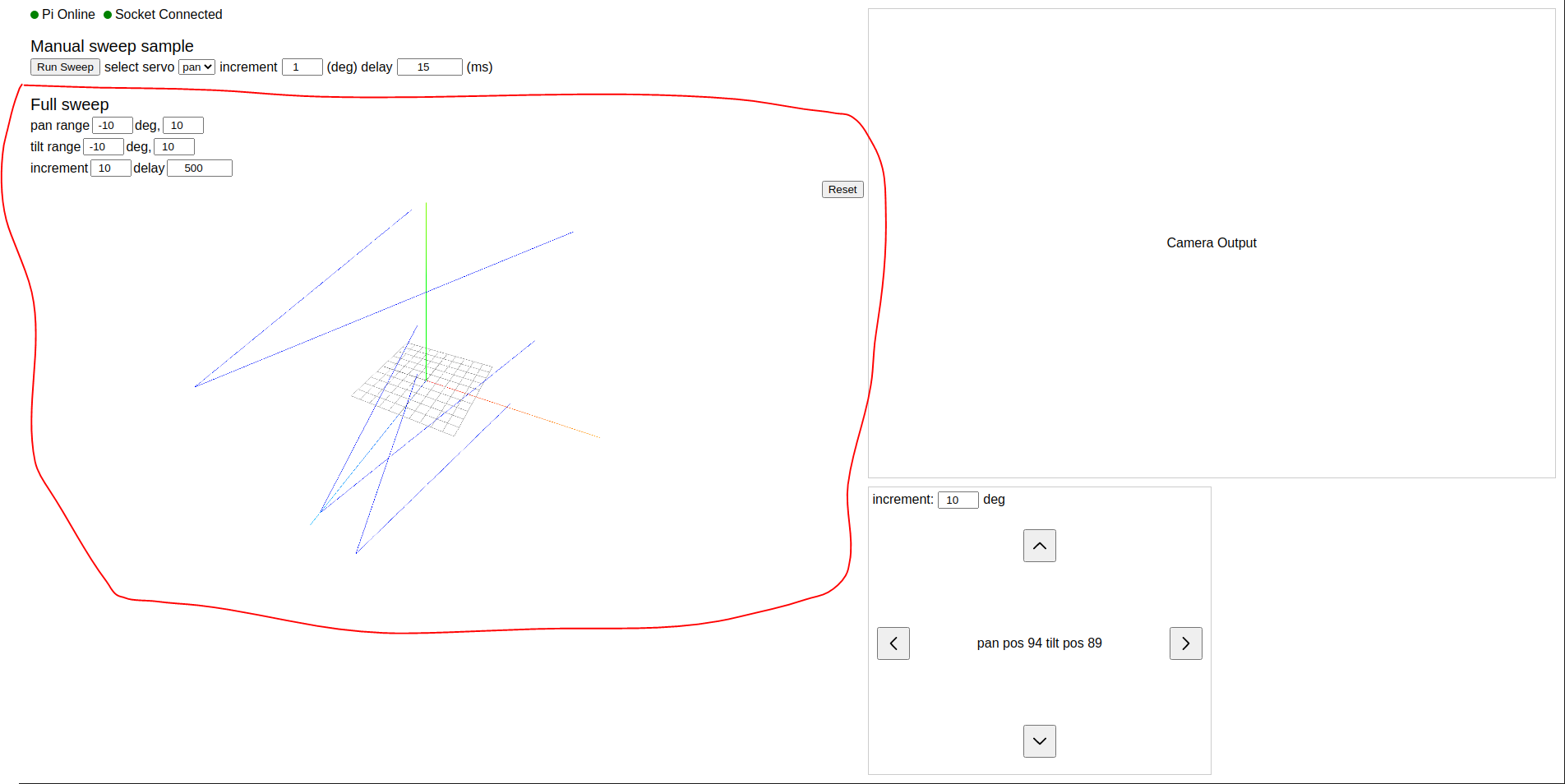

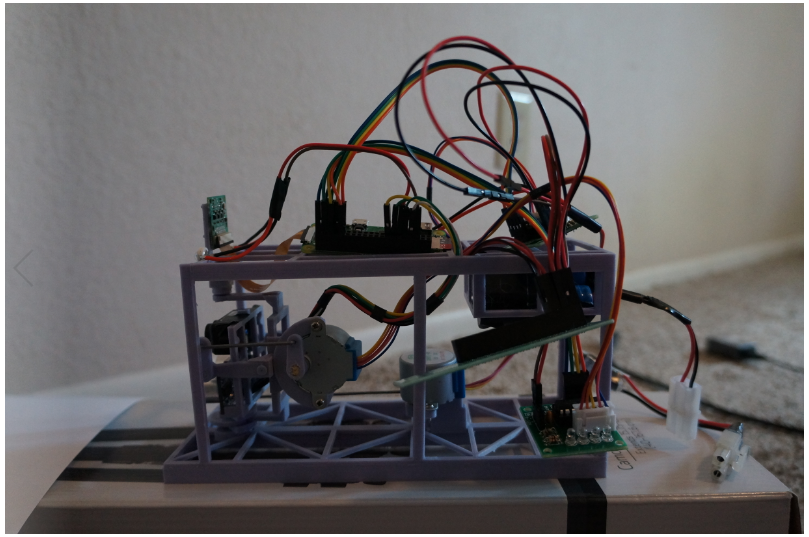

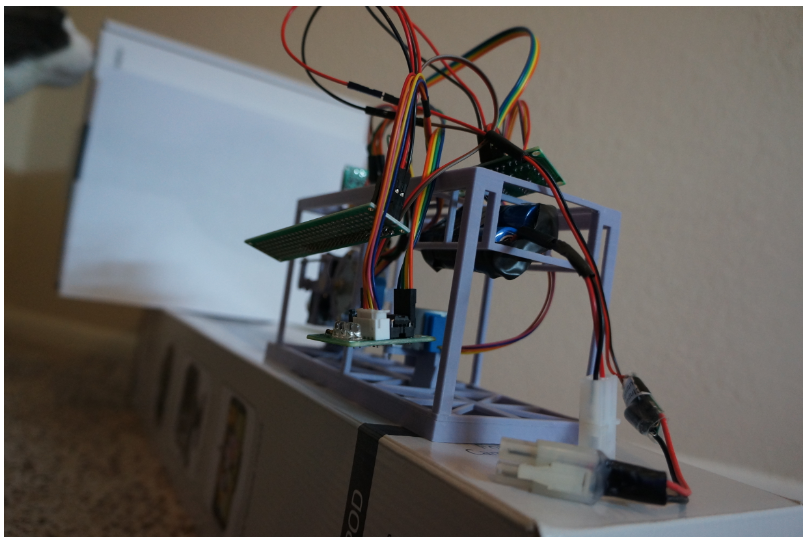

This project stalled. OpenCV is hard, who knew.

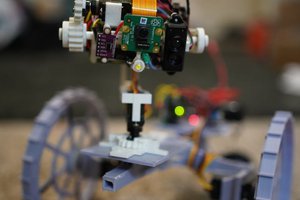

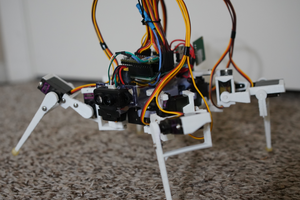

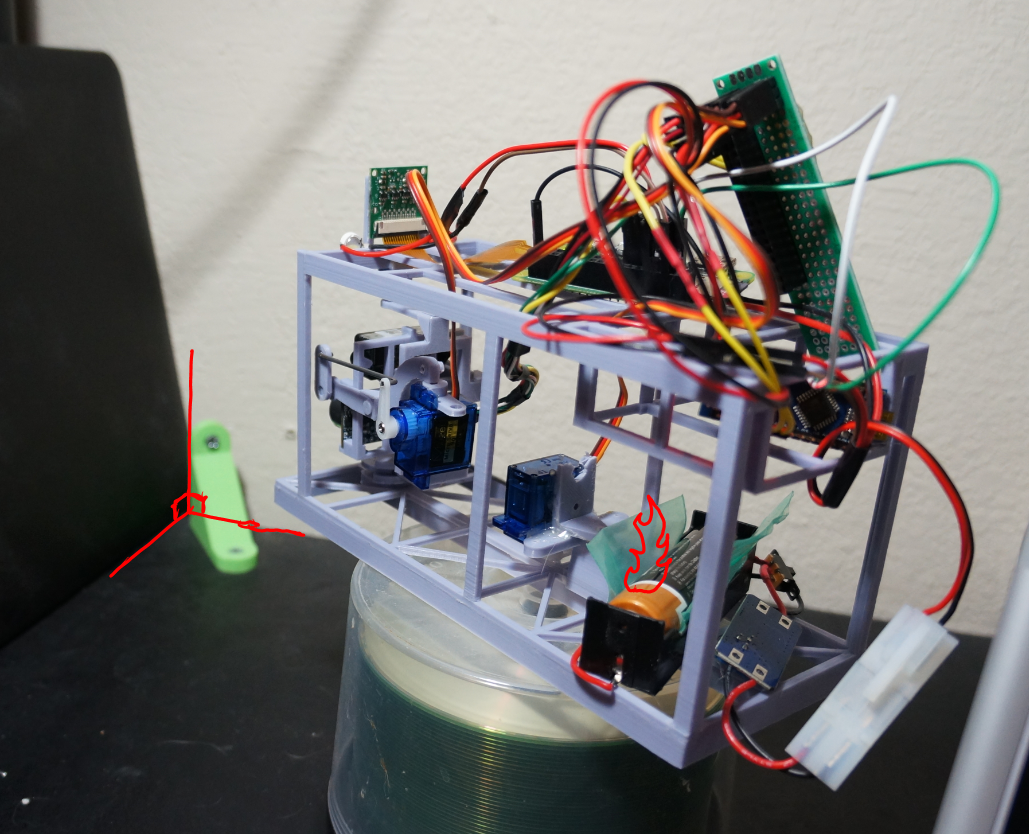

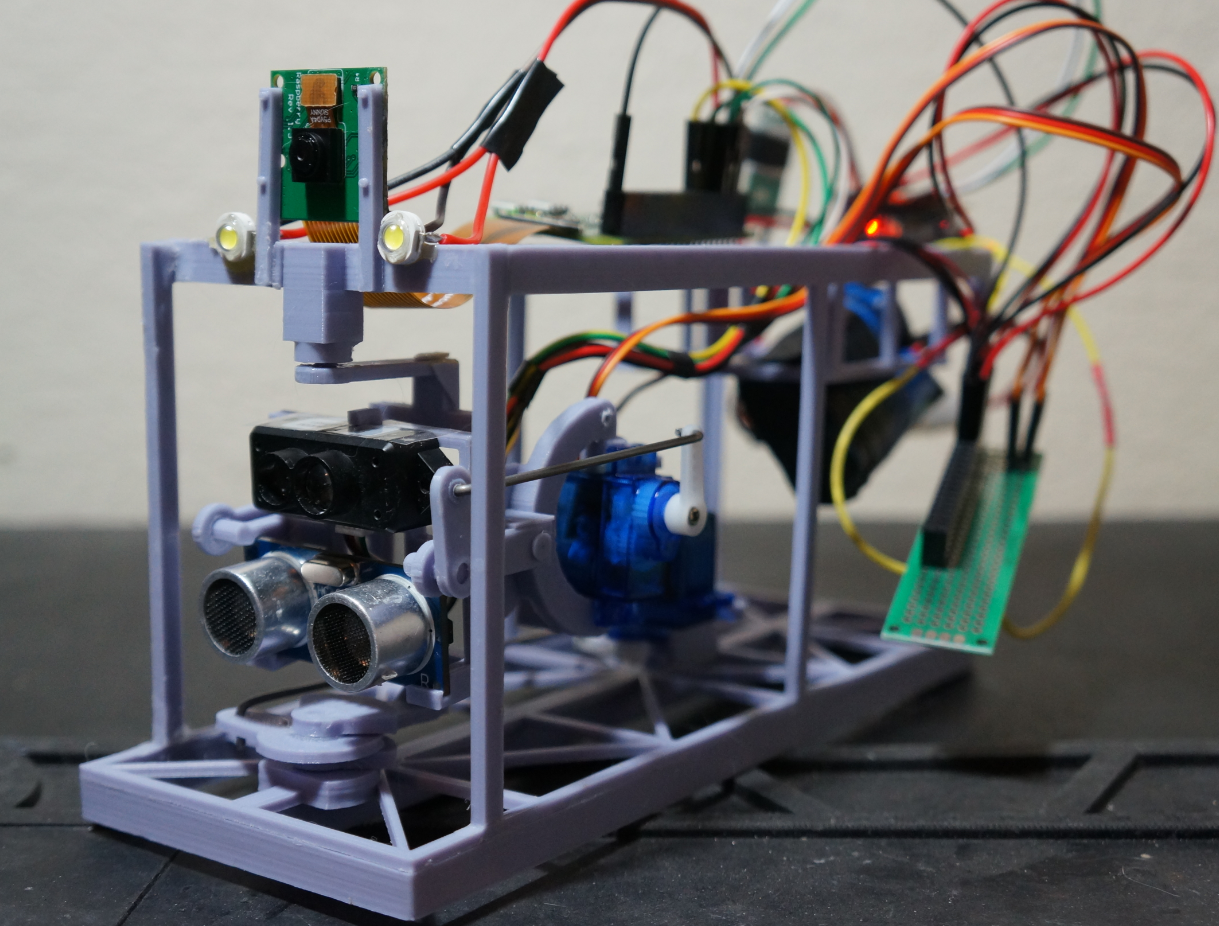

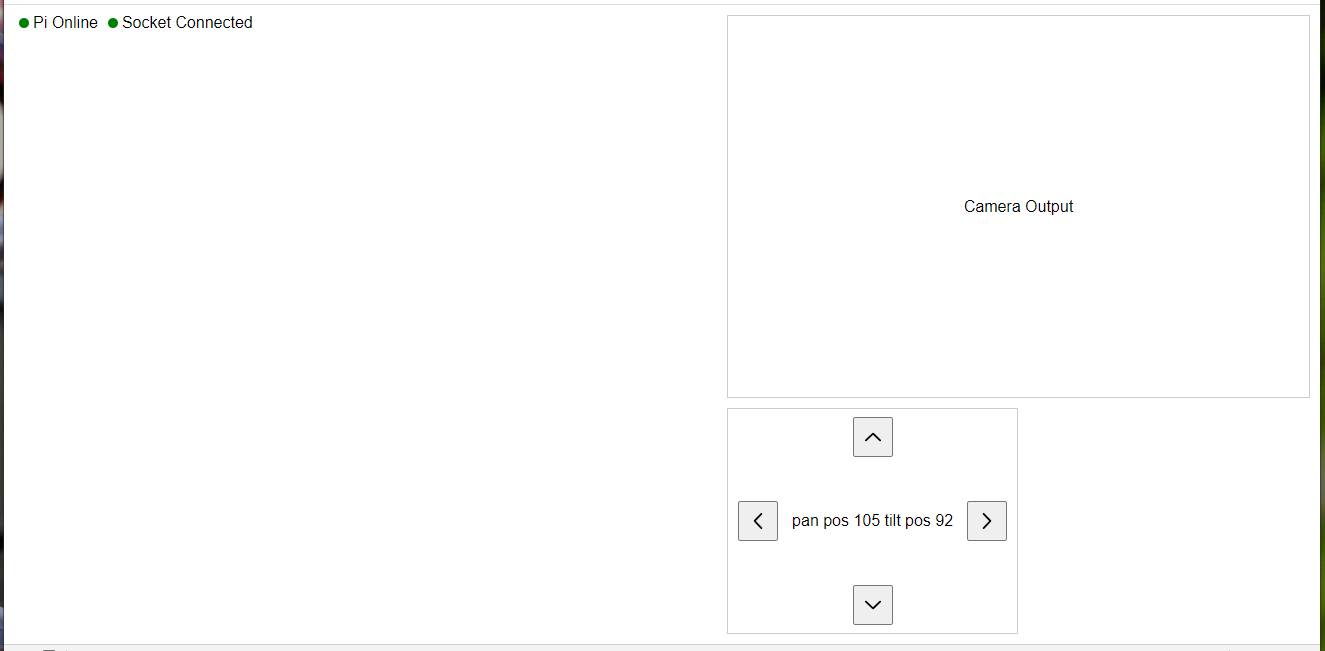

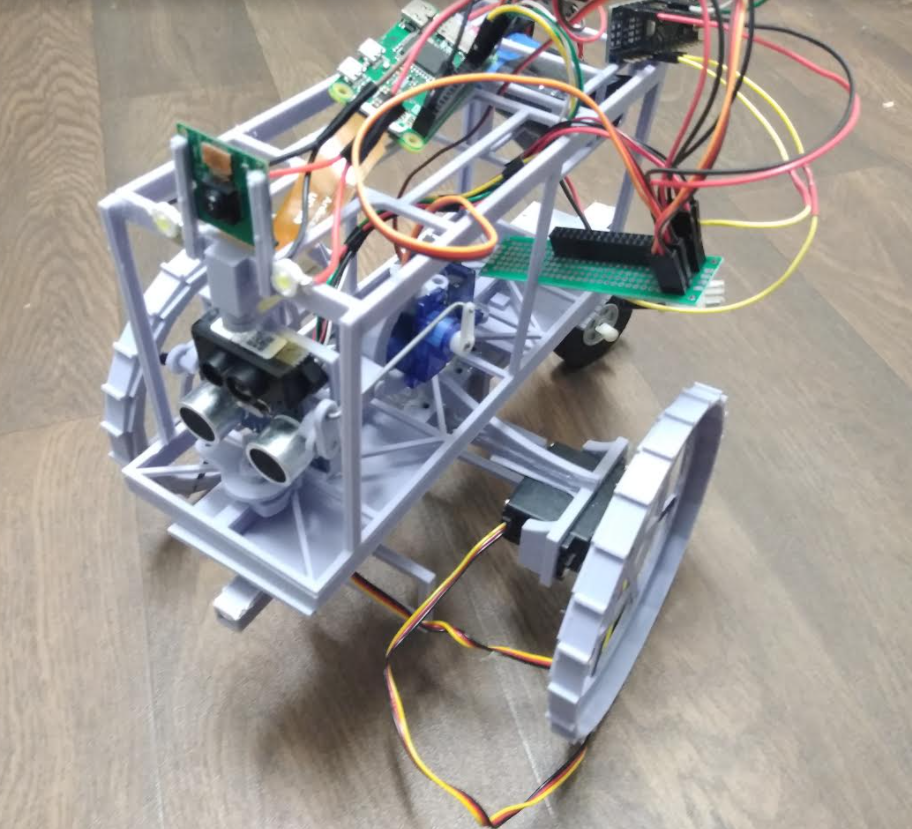

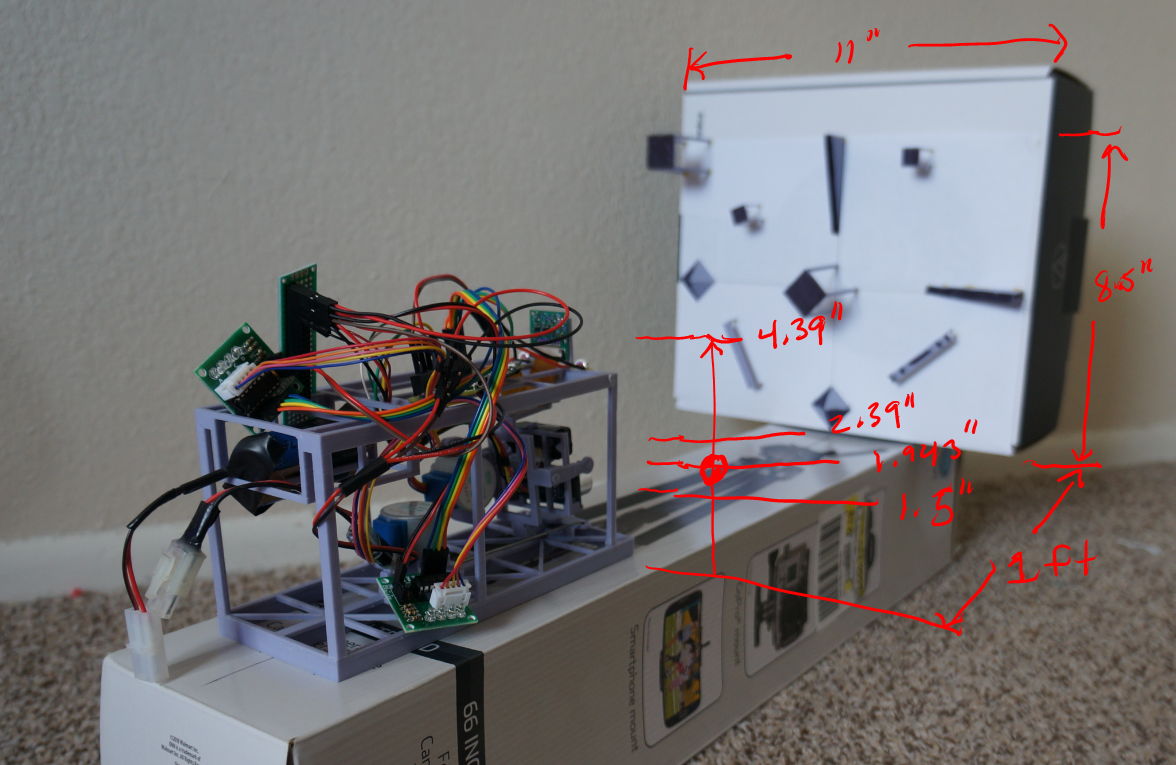

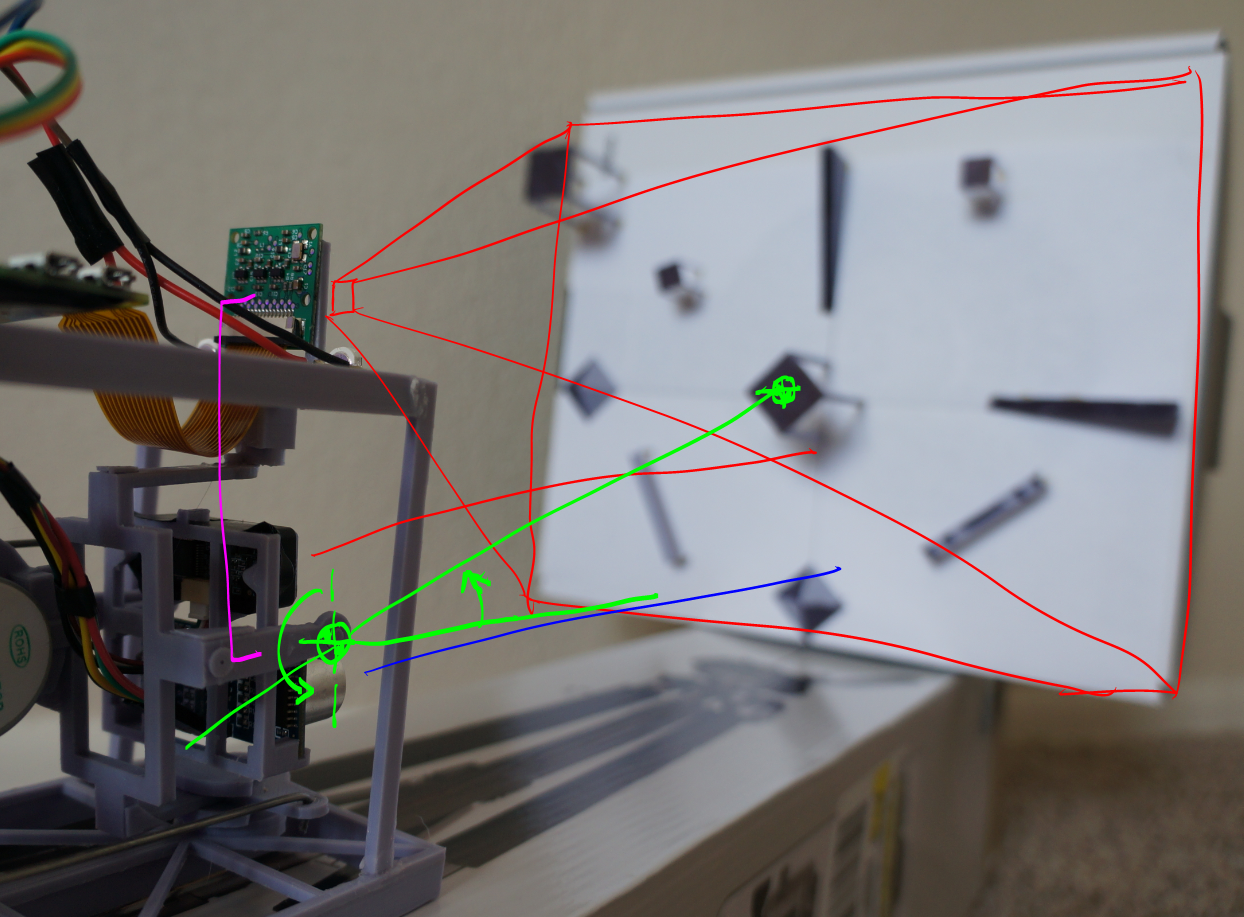

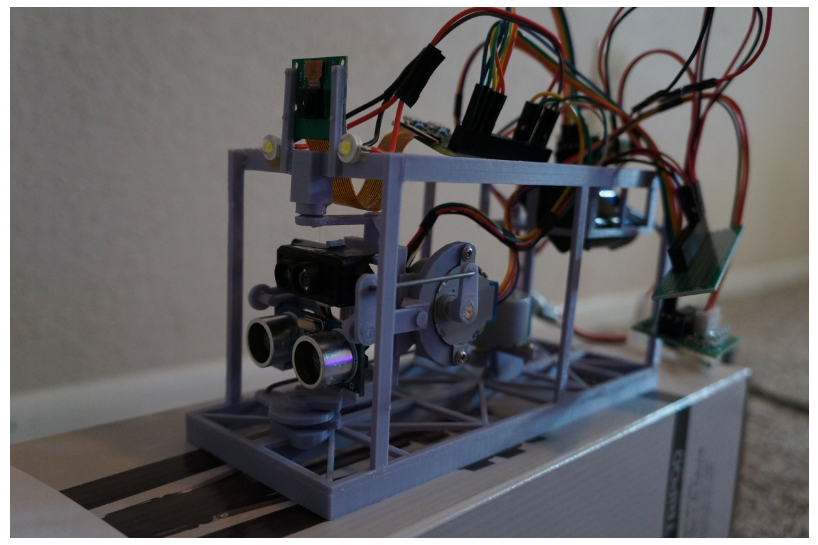

Also, the pan/tilt mechanism was kind of a fail: the wires were stiff enough to fight the servos, keeping them from reaching or returning to the right position. There was also no positional feedback.

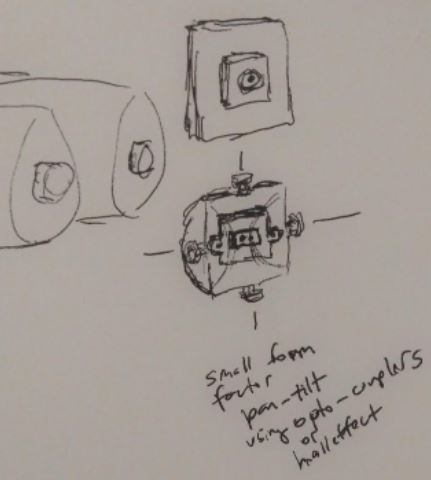

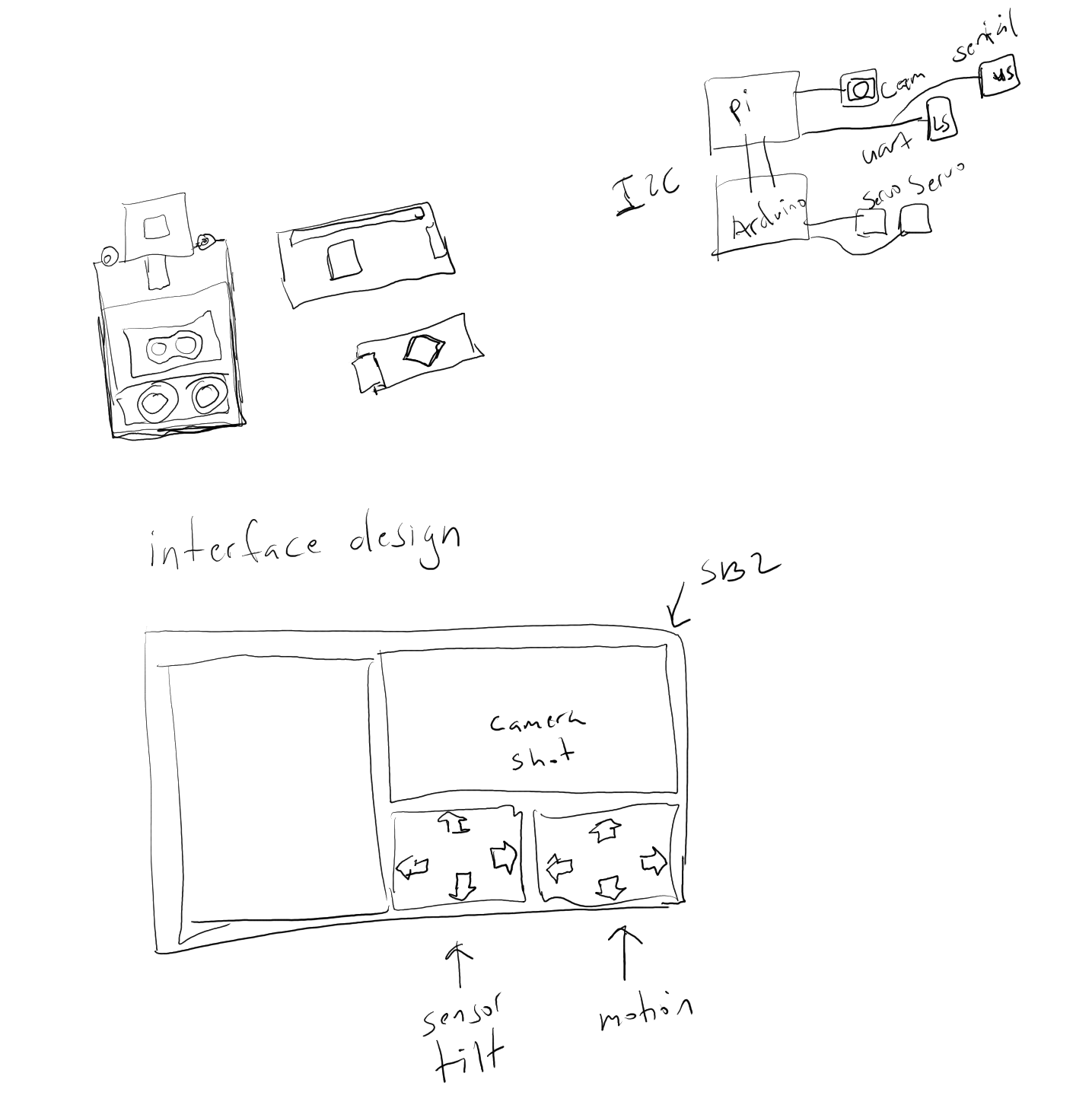

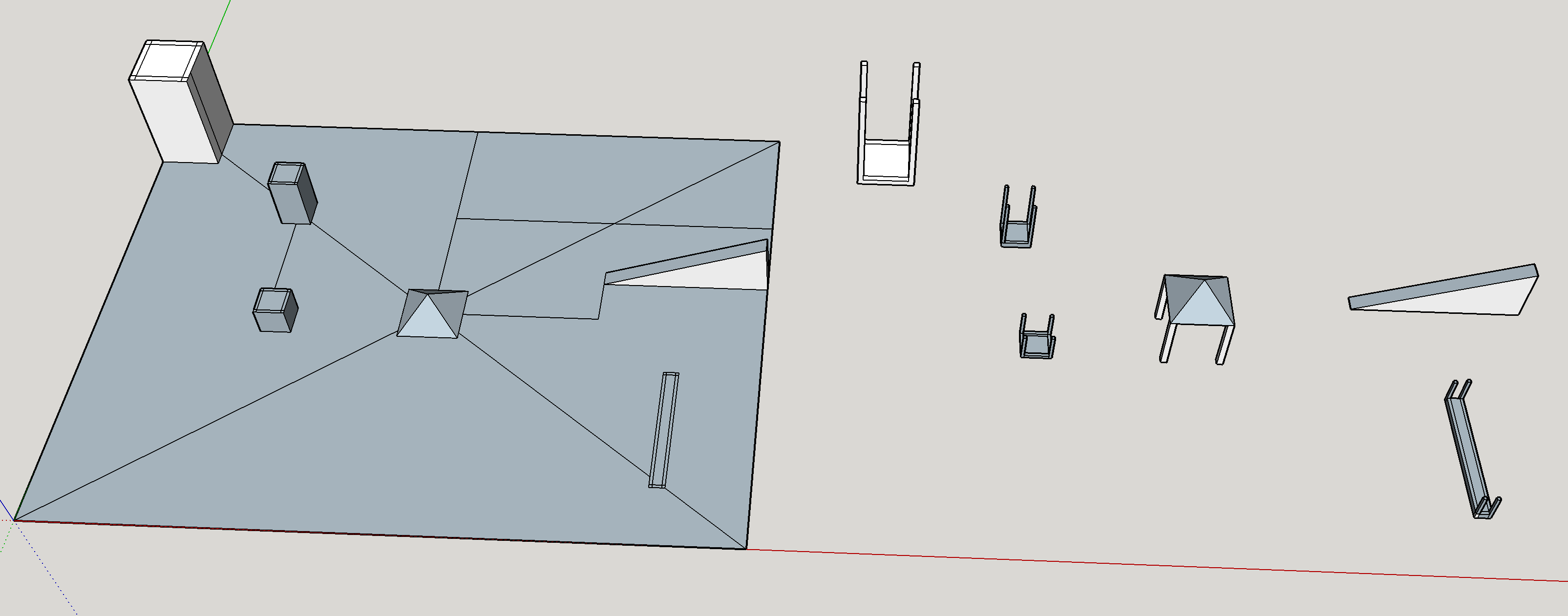

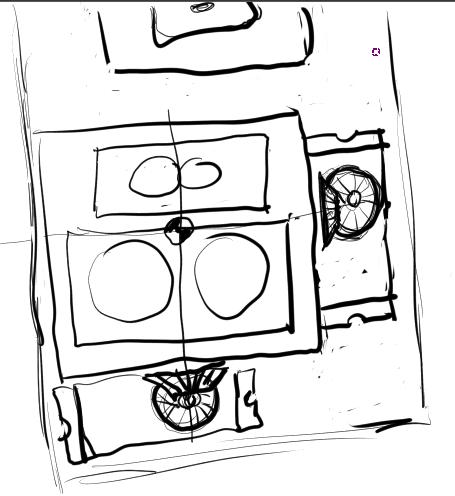

I have another version in mind, a smaller one like this:

Anyway, time will tell. My life might get rough for a bit (I'm transitioning to a new job in the near future).

Jacob David C Cunningham

Mister Malware

Crypto [Neo]
