Summary

We do something a little more interesting by using a curated image with a useful application running inside. In this case, we run nginx as our web server.

Deets

The Dockerhub is a great place to look for images that have already been created for common things. In this episode I will use an existing docker image for nginx to host a website. This will consist of setting up configuration, mounting volumes, publishing ports, and setting up systemd to run the image on startup.

Getting the Image and Getting Ready

The image we will use is nginx built on Alpine Linux.

docker image pull nginx:alpine

Docker has the sense to figure out CPU architecture, but not all docker images out there have been built for all architectures, so do take note of that when shopping at Dockerhub. This will be running on a Raspberry Pi 3, so it needs to be ARM64.
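As a rough sketch of how you might check this yourself: the helper below maps the machine name printed by `uname -m` to the architecture name stored in image metadata, and compares it with what a pulled image reports. The function names `docker_arch` and `check_image_arch` are made up for illustration, and the mapping only covers a few common cases.

```shell
# Map the kernel's machine name (as printed by `uname -m`) to the
# architecture names used in Docker image metadata.
docker_arch() {
    case "$1" in
        x86_64)        echo amd64 ;;
        aarch64|arm64) echo arm64 ;;
        armv7l|armv6l) echo arm ;;
        *)             echo unknown ;;
    esac
}

# Compare the host architecture with the one recorded in a local image.
# Needs a running Docker daemon and an image that has already been pulled.
check_image_arch() {
    image="$1"
    host_arch="$(docker_arch "$(uname -m)")"
    image_arch="$(docker image inspect --format '{{.Architecture}}' "$image")"
    echo "host=$host_arch image=$image_arch"
    [ "$host_arch" = "$image_arch" ]
}
```

After pulling, `check_image_arch nginx:alpine` should print both architectures and return success when they match. Note that a Pi running a 32-bit OS would typically report `armv7l` rather than `aarch64`.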

Docker creates private networks that the various containers run on. Typically these are bridges, so you may need to install the bridge-utils package on the host so that docker can manage them:

sudo apt install bridge-utils

Getting Busy

We can do a quick test:

docker run -it --rm -d -p 80:80 --name devweb nginx:alpine

The new things here are '-d' (shorthand for '--detach'), which lets the container run in the background, and '-p' (shorthand for '--publish'), which exposes ports in the container on the host system. You can translate the port numbers, hence the '80:80' notation -- the first number is the host's port and the second is the container's. Here they are the same. Also, we explicitly named the container 'devweb', which lets us refer to it by name later.
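To make the port translation concrete, here is a small sketch that starts the same image with a host port of your choosing mapped to the container's port 80. The `run_devweb` function and the `DOCKER` override variable are my own inventions for illustration (setting `DOCKER=echo` just prints the command instead of running it).

```shell
# Assumption: DOCKER can be overridden (e.g. DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"

# Start the stock nginx image in the background, publishing the given
# host port to port 80 inside the container.
run_devweb() {
    host_port="$1"
    $DOCKER run --rm -d -p "${host_port}:80" --name devweb nginx:alpine
}
```

For example, `run_devweb 8080` would make the site reachable on the host's port 8080, and `docker port devweb` should then list the mapping.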

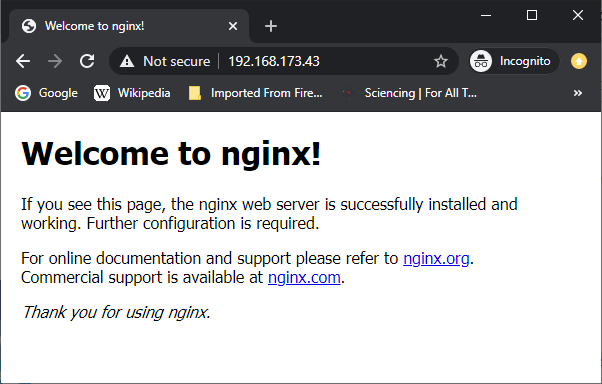

You can drive a web browser to the host system and see the default site:

OK, that's a start, but we need to serve our own web pages. Let's move on...

docker stop devweb

As mentioned before, my server has a 'datadrive' (which used to be a physical drive, but now is just a partition), and that drive contains all the data files for the various services. In this case, the web stuff is in /mnt/datadrive/srv/www. Subdirectories of that are for the various virtual servers. That was how I set it up way back when for an Apache2 system, but this go-round we are going to do it with Nginx. Cuz 2020.

Docker has a facility for projecting host filesystem objects into containers. This can be specific files, or directory trees. We will use this to project the tree of virtual hosts into the container, and then also to project the nginx config file into the container as well. So, the config file and web content reside on the host as per usual, and then the stock nginx container from Dockerhub can be used without modification.

There are two ways of projecting host filesystem objects into the container. One is a docker 'volume', which is a storage object managed by Docker, much like the docker image itself. The other is a 'bind', which maps a path in the host filesystem directly into the container, a bit like a symbolic link. Both methods provide persistence across multiple runs of the image, and each has its relative merits. Since I have this legacy data mass and I'm less interested right now in shuffling it around, I am currently using the 'bind' method. What I have added is some service-specific directories on the 'datadrive' (e.g. 'nginx') that contain config files for that service, which I mount into the container filesystem to override what is there in the stock container. In the case of nginx, I replace the 'default.conf' with one of my own concoction from the host system. I should point out that the more sophisticated way of configuring nginx is with 'sites-available' and symlinks in 'sites-enabled', but for this simple case I'm not going to do all that. I will just override the default config with my own.
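For comparison, the two styles look nearly identical on the command line; only the 'type' and the meaning of 'src' change. The sketch below builds the value for docker's `--mount` option either way. The `mount_arg` helper and the volume name `wwwdata` are hypothetical, used only to show the shape of the argument.

```shell
# Build the value passed to `docker run --mount` for either style.
# For a bind, src is a host path; for a volume, src is a volume name.
# This sketch deliberately handles only these two mount types.
mount_arg() {
    kind="$1"; src="$2"; dst="$3"
    case "$kind" in
        bind|volume) printf 'type=%s,src=%s,dst=%s\n' "$kind" "$src" "$dst" ;;
        *) echo "mount type not handled in this sketch: $kind" >&2; return 1 ;;
    esac
}
```

So `mount_arg bind /mnt/datadrive/srv/www /srv/www` yields the bind form I use, while `mount_arg volume wwwdata /srv/www` would yield the volume form (after something like `docker volume create wwwdata`).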

default.conf:

server {
    listen 80;
    listen [::]:80;
    server_name example.com www.example.com;

    #charset koi8-r;
    #access_log /var/log/nginx/host.access.log main;

    root /srv/www/vhosts/example.com;
    index index.html index.htm;

    location / {
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }
}

I stowed this config on the data drive at /mnt/datadrive/srv/nginx/default.conf.
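One optional sanity check, sketched here under the assumption that the image lets you run `nginx -t` (nginx's built-in config test) in place of the normal server command: mount the config into a throwaway container and have nginx parse it. The `check_nginx_conf` function and the `DOCKER` override variable are hypothetical names of mine.

```shell
# Assumption: DOCKER can be overridden (e.g. DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"

# Mount a host config file over the stock default.conf in a throwaway
# container and ask nginx to syntax-check it instead of serving.
check_nginx_conf() {
    conf="$1"
    $DOCKER run --rm \
        --mount "type=bind,src=${conf},dst=/etc/nginx/conf.d/default.conf" \
        nginx:alpine nginx -t
}
```

Running `check_nginx_conf /mnt/datadrive/srv/nginx/default.conf` should report whether the syntax is OK without publishing any ports.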

Then I can test it out:

docker run -it --rm -d -p 80:80 --name devweb \
  --mount 'type=bind,src=/mnt/datadrive/srv/nginx/default.conf,dst=/etc/nginx/conf.d/default.conf' \
  --mount 'type=bind,src=/mnt/datadrive/srv/www,dst=/srv/www' \
  nginx:alpine

And now if I drive to 'example.com' (not my actual domain, of course), I will get the web site. Note: if you try to reach the web site by its domain name from your local network, you might have some troubles if your server is behind NAT (which is likely). This is because the domain name will resolve to your external IP, and the router will probably not forward that request back inside if it is coming from the inside already. You can work around this by adding some entries to your hosts file that resolve the names to your internal IP address. Then it should work as expected even from inside the network.
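A hosts entry is just an address followed by the names it should answer to. Here is a minimal sketch that prints such a line; the `hosts_entry` helper is hypothetical, and 192.168.1.50 is a stand-in for whatever your server's internal address actually is.

```shell
# Print an /etc/hosts style line mapping one or more names to an address.
hosts_entry() {
    ip="$1"; shift
    printf '%s\t%s\n' "$ip" "$*"
}
```

On a Linux client you could append it with something like `hosts_entry 192.168.1.50 example.com www.example.com | sudo tee -a /etc/hosts`.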

Systemd

The Ubuntu on the host uses 'systemd' to start daemons (services). We want nginx to start serving web pages on boot, so we need to do a little more config. First we need to create a 'service descriptor file' (a 'unit file' in systemd terms) on the host:

/etc/systemd/system/docker.www.service

[Unit]
Description=WWW Service
After=docker.service
Requires=docker.service

[Service]
TimeoutStartSec=0
Restart=always
# Clean up any container left over from a crash; the leading '-' ignores failures.
ExecStartPre=-/usr/bin/docker stop %n
ExecStartPre=-/usr/bin/docker rm %n
# Fetch the latest image before each start.
ExecStartPre=/usr/bin/docker pull nginx:alpine
ExecStart=/usr/bin/docker run --rm --name %n \
  --mount 'type=bind,src=/mnt/datadrive/srv/nginx/default.conf,dst=/etc/nginx/conf.d/default.conf' \
  --mount 'type=bind,src=/mnt/datadrive/srv/www,dst=/srv/www' \
  -p 80:80 \
  nginx:alpine

[Install]
WantedBy=default.target

This simply runs the nginx container much as we did before. It does a little cleanup beforehand to handle things like unexpected system crashes that might leave a container around.

After that file is in place, we tell systemd that we want it to be started automatically:

sudo systemctl enable docker.www

This won't do anything for the current session, so this time we manually start it:

sudo service docker.www start
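If you prefer to do the whole dance in one go, the steps can be sketched as a small function. The `install_www_service` name and the `SYSTEMCTL` override variable are my own assumptions; the `daemon-reload` step is there so systemd notices a new or edited unit file.

```shell
# Assumption: SYSTEMCTL can be overridden, e.g. SYSTEMCTL="sudo systemctl"
# for real use, or SYSTEMCTL=echo to just print the steps.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

# Reload unit files, enable the service for boot, start it now,
# and report whether it is running.
install_www_service() {
    $SYSTEMCTL daemon-reload &&
    $SYSTEMCTL enable docker.www.service &&
    $SYSTEMCTL start docker.www.service &&
    $SYSTEMCTL is-active docker.www.service
}
```

Called as `SYSTEMCTL="sudo systemctl" install_www_service`, the last step should print 'active' if the container came up. If it did not, `journalctl -u docker.www.service` is a reasonable place to look.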

Ultimately, this is not how I'm going to be operating this particular service, but it's useful as a simple example, and it is perfectly fine in many cases. However, I also need PHP for my web site. PHP with nginx is done via 'PHP-FPM', which is a separate process. I am going to run that as a separate docker image. Since my www service will effectively consist of two docker images (at this point), I'm going to use a tool called 'docker-compose' for that next. It is a convenient way of composing services from multiple docker images, and is perfectly serviceable when you don't need full-blown container orchestration like with Kubernetes.

Next

Add another service that provides PHP processor capability.

ziggurat29


Discussions


I was always curious how you'd get to update docker images. If I read correctly, your systemd script will try to update the docker image every time it starts. Is that correct?


yes, in that example. but in the next post (that I haven't published yet -- writing takes so much time away from doing! lol) I will break that functionality. This is a side effect of how I'm fixin' to do the systemd stuff to make it more 'generic', and as a consequence it will no longer have carnal knowledge of what images are involved. Not that there's anything wrong with doing it the way I showed here (and which is perfectly fine for simple single-docker-image services).

Meta all that, I wonder if auto-updating is the best idea, anyway? Usually you want your services to be stable. If the underlying image was auto-updated to a newer version of XXX which had breaking changes, then you would be unexpectedly broken, and have to fix it. If instead you tie to a specific version, and then conscientiously test every so often what would happen if you were to upgrade before deploying to 'production', then you can better maintain continuity-of-service.

Related to this, I know it is considered 'best practice' when creating a Dockerfile that you specify by version in the 'FROM' clause for that reason (of ensuring a functional repeatable build).

You've given me some food for thought; thanks!
