See the project's new home!

Project status: working. Audio clips are merged and synced to their corresponding video; see this log.

Project Stage: alpha

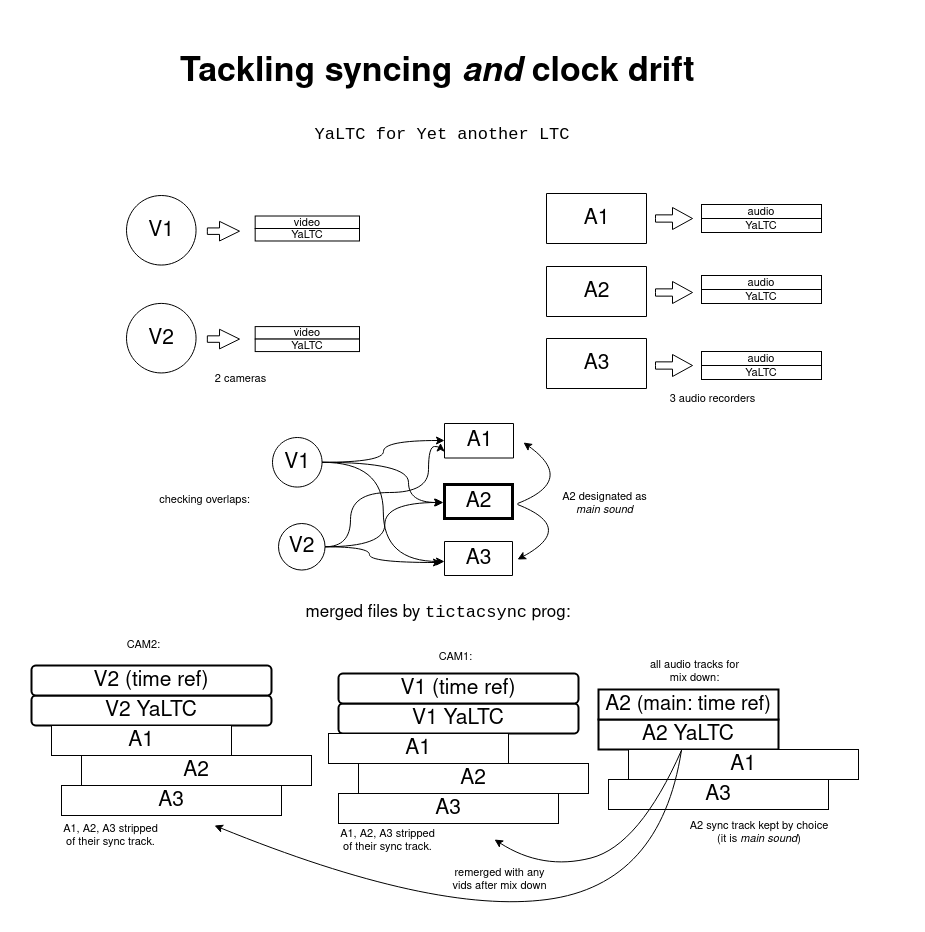

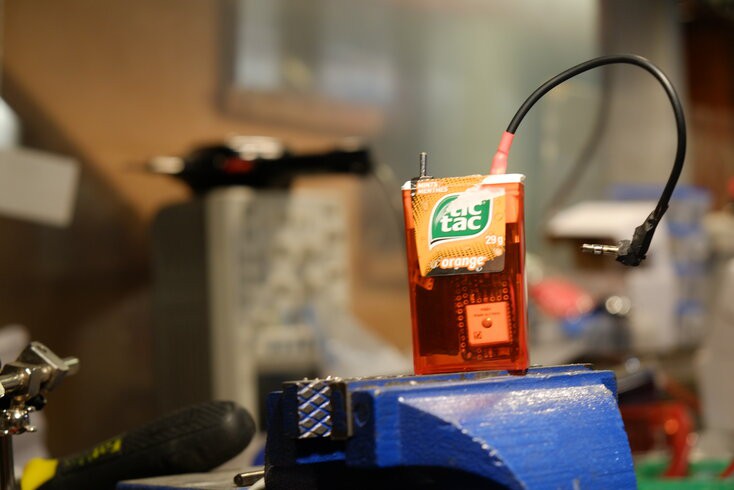

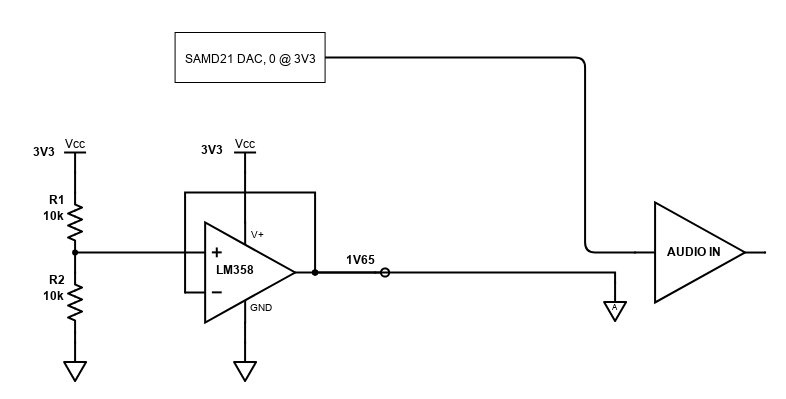

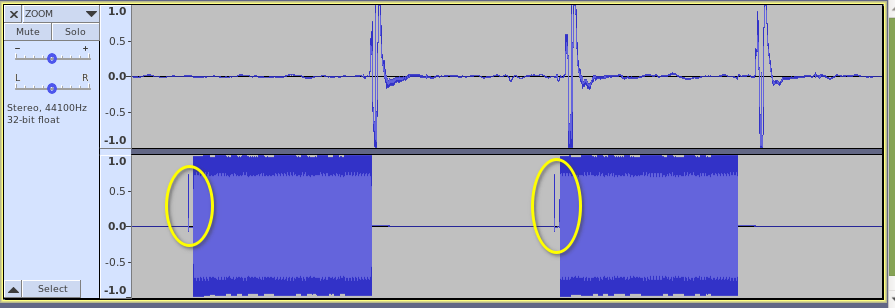

I've implemented an audio sync track generator using the 1 PPS (pulse-per-second) signal from a GPS receiver. Between consecutive pulses, the time of day is BFSK-modulated into an audio signal. In post-production, tictacsync.py parses that signal and merges sound clips with their corresponding video tracks (thanks to FFmpeg), all before you import anything into your NLE of choice.
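To make the BFSK idea concrete, here is a minimal sketch of encoding a time of day as two-tone audio and decoding it again. It is not the actual firmware or the tictacsync.py decoder: the sample rate, the two tone frequencies, the bit duration, and the 17-bit "seconds since midnight" word are all assumptions chosen for illustration, and every function name is hypothetical.

```python
import math

# All constants below are assumptions for illustration only.
SAMPLE_RATE = 48_000        # audio samples per second
F0, F1 = 1_000.0, 2_000.0   # tone for bit 0 and tone for bit 1, in Hz
SYMBOL_SAMPLES = 480        # 10 ms per bit, a whole number of cycles of both tones
WORD_BITS = 17              # 86400 seconds per day fits in 17 bits


def seconds_to_bits(seconds_of_day):
    """Split seconds since midnight into bits, least significant first."""
    return [(seconds_of_day >> i) & 1 for i in range(WORD_BITS)]


def bits_to_seconds(bits):
    """Inverse of seconds_to_bits."""
    return sum(bit << i for i, bit in enumerate(bits))


def bfsk_modulate(bits):
    """Emit one sine burst per bit, keeping the phase continuous."""
    samples = []
    phase = 0.0
    for bit in bits:
        step = 2 * math.pi * (F1 if bit else F0) / SAMPLE_RATE
        for _ in range(SYMBOL_SAMPLES):
            samples.append(math.sin(phase))
            phase += step
    return samples


def tone_power(chunk, freq):
    """Energy of `chunk` at `freq`, by correlating with a sine and a cosine."""
    w = 2 * math.pi * freq / SAMPLE_RATE
    re = sum(s * math.cos(w * n) for n, s in enumerate(chunk))
    im = sum(s * math.sin(w * n) for n, s in enumerate(chunk))
    return re * re + im * im


def bfsk_demodulate(samples):
    """Decide each bit by comparing the energy at the two tone frequencies."""
    bits = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        chunk = samples[start:start + SYMBOL_SAMPLES]
        bits.append(1 if tone_power(chunk, F1) > tone_power(chunk, F0) else 0)
    return bits


# Round trip for 13:37:42
seconds = 13 * 3600 + 37 * 60 + 42
audio = bfsk_modulate(seconds_to_bits(seconds))
decoded = bits_to_seconds(bfsk_demodulate(audio))
```

With these assumed values the whole word lasts 170 ms, so it fits comfortably between two 1 PPS pulses. A real decoder would also have to find where the word starts in the recording and cope with noise, which this sketch ignores.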

Side note: someone more competent than me should have tackled this! I have NO formal computer science education except two introductory programming courses 40 years ago (Pascal and Fortran). But here we go...

Pros and cons:

- no jam sync required

- no settings: plug and go. It is frame-rate agnostic (DF, NDF? meh)

- no drift in GNSS mode (it's atomic)

- subframe precision (tests show files are synced within 83 μs, that's 0.003 frame!)

- low cost (20-30 USD per device)

- can be used with cameras lacking mic input (acoustic coupling was a pain in the ass to R&D, though)

- more resilient than NLE waveform analysis

- it's FOSS

but

- some assembly required

- not standard (it's a feature, not a bug)

- adequate GPS signal strength needed for wireless mode (not a problem for most residential buildings)

- CLI only, for now

- a one-guy-in-his-basement project (hopefully not for long)

- crappy code (but I'm working on it)

All three hardware/software components have been implemented and tested:

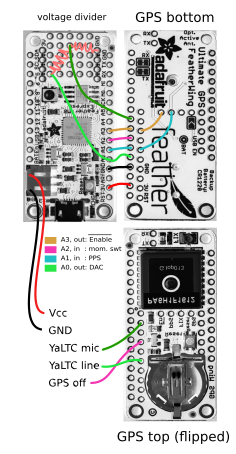

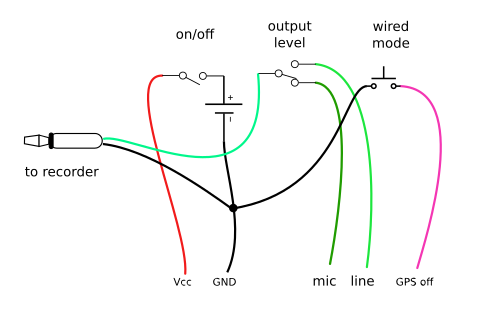

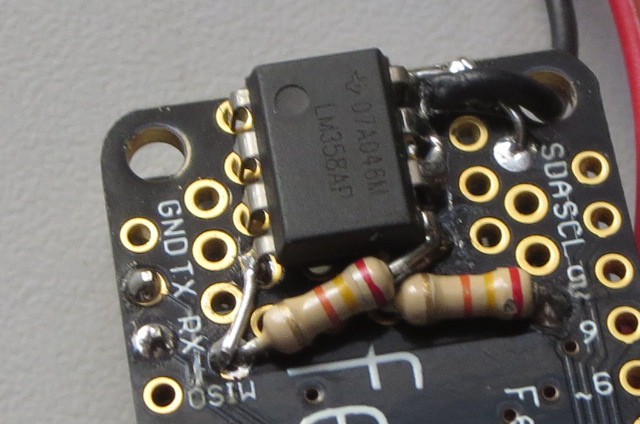

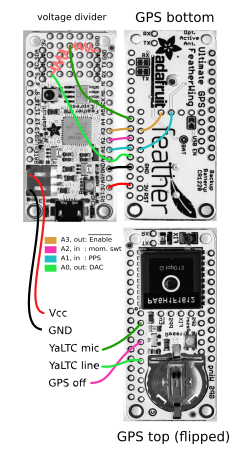

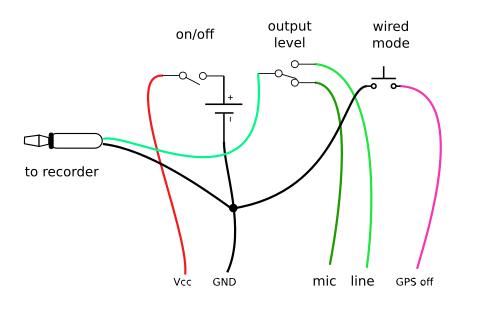

- two hardware dongles (SAMD21 based)

- firmware

- post-production analysis desktop software (proof-of-concept version)

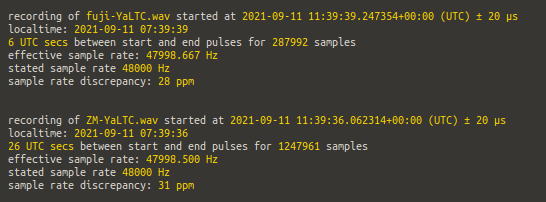

Here are the analysis results for two test files:

Watch the first complete automated audio/video merge here: Syncing in the kitchen. For multicam sync, I'll rely on Olive's ability to read OpenTimelineIO files.
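For readers curious what an automated merge could look like once both start times are known, here is a rough sketch that builds an FFmpeg command line from two decoded timestamps. It is not the invocation tictacsync.py actually uses; the function name, file names, and the choice to copy the video stream are assumptions. Only standard FFmpeg options appear (`-itsoffset`, `-map`, `-c:v copy`, `-shortest`).

```python
from datetime import datetime


def ffmpeg_merge_command(video_path, audio_path, video_start, audio_start, out_path):
    """Build (but do not run) an FFmpeg command that muxes an external
    audio clip onto a video, shifted by the difference of their start times.

    video_start / audio_start are the wall-clock times decoded from each
    file's sync track (hypothetical inputs for this sketch).
    """
    # Positive offset: the audio recorder started after the camera,
    # so the audio must be delayed by that amount.
    offset = (audio_start - video_start).total_seconds()
    return [
        "ffmpeg",
        "-i", video_path,
        "-itsoffset", f"{offset:.6f}",  # applies to the next input (the audio)
        "-i", audio_path,
        "-map", "0:v",                  # picture from the camera file
        "-map", "1:a",                  # sound from the external recorder
        "-c:v", "copy",                 # do not re-encode the video
        "-shortest",
        out_path,
    ]


cmd = ffmpeg_merge_command(
    "clip.mp4", "sound.wav",
    datetime(2023, 1, 1, 12, 0, 0),
    datetime(2023, 1, 1, 12, 0, 1, 500000),
    "merged.mp4",
)
```

The resulting list can be passed to `subprocess.run(cmd, check=True)`. When the audio started before the video, the offset is negative, and trimming the head of the audio (for example with `-ss` on that input) is probably a safer approach than a negative `-itsoffset`.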

Raymond Lutz

Fabien-Chouteau

E/S Pronk

Est
