Face tracking based on size alone is pretty bad & naive. It desperately needs a recognition step.

There is an older face detector using Haar cascades:

opencv/samples/python/facedetect.py

These guys used a Haar cascade with a dlib correlation tracker to match the most similar region across 2 frames:

https://www.guidodiepen.nl/2017/02/detecting-and-tracking-a-face-with-python-and-opencv/

These guys similarly went with the largest face when no previous face existed.
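The largest-face fallback is simple enough to show in a few lines. This is a minimal sketch, assuming each detection is an (x, y, w, h) box like the rectangles OpenCV's `detectMultiScale` returns; `largest_face` is a hypothetical helper, not code from this project or the linked article.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # x, y, w, h in pixels (assumed layout)


def largest_face(faces: Sequence[Box]) -> Optional[Box]:
    """Return the detection with the biggest area, or None if there are none."""
    # len() instead of truthiness so a numpy array of boxes also works
    if len(faces) == 0:
        return None
    return max(faces, key=lambda f: f[2] * f[3])


faces = [(10, 20, 40, 40), (200, 50, 90, 80), (120, 30, 60, 100)]
print(largest_face(faces))  # (200, 50, 90, 80): area 7200 beats 6000 and 1600
```

Nothing in this rule knows who the face belongs to, which is exactly why size alone is naive.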

Well, Haar was nowhere close & ran at 5fps instead of 7.8fps. The DNN obviously has both a speed & an accuracy advantage.

There was a brief test of taking the absolute difference between the current face & the previous frame's face. When the face rotated, the score of a match was about equal to the score of a mismatch. The problem with anything that compares 2 frames is reacquiring the right face after dropping a few frames. It always has to fall back to the largest face.
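A minimal sketch of that differencing score, assuming both face crops were already converted to grayscale & resized to the same shape (in OpenCV that would typically be `cv2.absdiff` followed by `cv2.mean`). The helper names are hypothetical and the pixel values are made up for illustration.

```python
from typing import List

Patch = List[List[int]]  # grayscale crop: rows of 0-255 pixel values


def mean_abs_diff(a: Patch, b: Patch) -> float:
    """Mean absolute pixel difference: 0 = identical, larger = less similar."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("patches must have the same shape")
    count = sum(len(row) for row in a)
    if count == 0:
        raise ValueError("empty patch")
    total = sum(abs(pa - pb) for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return total / count


def best_match(prev: Patch, candidates: List[Patch]) -> int:
    """Index of the candidate crop most similar to the previous frame's face."""
    scores = [mean_abs_diff(prev, c) for c in candidates]
    return scores.index(min(scores))


prev_face = [[10, 20], [30, 40]]
same_face = [[12, 18], [30, 44]]    # slight lighting change
other_face = [[200, 10], [90, 40]]
print(mean_abs_diff(prev_face, same_face))   # 2.0
print(mean_abs_diff(prev_face, other_face))  # 65.0
print(best_match(prev_face, [other_face, same_face]))  # 1
```

The weakness described above shows up directly: a rotated head moves pixels around as much as a different face does, so the two scores stop being separable.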

The Intel Movidius seems to be the only embedded GPU still in production. Intel bought Movidius in 2016. As is typical, they released a revised Neural Compute Stick 2 in 2018 & haven't done anything since then but rest in peace. It's bulky & expensive for what it is, and porting any vision model to it takes some doing. Multiple Movidius sticks can be plugged in for more cores.

Another idea is daisy-chaining 2 Raspberry Pis so one does face detection & the other does recognition. It would carry a latency penalty & take up a lot of space.

The next step might be letting it run the recognizer at 3fps & seeing what happens. The mane problem is the recognizer has to be limited to testing 1 face per frame to hit 3fps. Maybe it could use optical flow to track every face in the frame. The optical flow algorithm just assumes the face in the next frame closest to the previous face is the same face. It could use the optical flow data to run the recognizer on a different face in every frame until every face had a score. Then it could track the maximum-score face while doing another recognition pass, 1 face per frame.
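The round-robin idea above can be sketched as a small scheduler. This assumes a separate tracker already assigns a stable ID to each face, and `recognize(track_id) -> score` is a hypothetical hook standing in for the real recognizer; none of these names come from the project.

```python
from typing import Callable, Dict, List, Optional


class RoundRobinRecognizer:
    """Run the slow recognizer on 1 tracked face per frame and keep the
    latest score for every face that is still being tracked."""

    def __init__(self, recognize: Callable[[int], float]):
        # Hypothetical hook: higher score = more likely to be the lion
        self.recognize = recognize
        self.scores: Dict[int, float] = {}
        self.cursor = 0

    def step(self, track_ids: List[int]) -> Optional[int]:
        """Score one face this frame, then return the best-scoring track ID."""
        # Forget faces the tracker has lost
        self.scores = {t: s for t, s in self.scores.items() if t in track_ids}
        if not track_ids:
            return None
        ids = sorted(track_ids)
        tid = ids[self.cursor % len(ids)]  # next face in round-robin order
        self.cursor += 1
        self.scores[tid] = self.recognize(tid)
        return max(self.scores, key=self.scores.get)


# Made-up scores for 3 tracked faces; track 2 plays the lion
fake_scores = {1: 0.2, 2: 0.9, 3: 0.5}
rr = RoundRobinRecognizer(lambda tid: fake_scores[tid])
for frame in range(3):
    print(frame, rr.step([1, 2, 3]))
```

With N tracked faces & one recognizer call per frame, a full pass takes N frames, so at 3fps it takes N/3 seconds before the maximum-score face is known. The scheduler is only as good as the track IDs feeding it.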

Optical flow tracking is too error prone to reliably ensure the recognizer is applied to a different face in each frame. At 3fps, the time the recognizer needs to pick the maximum-score face might be the same time the naive algorithm takes to recover.

The mane problem is when the lion isn't detected in 1 frame while another face is. It would need a maximum change in position, beyond which it ignores any face & assumes the lion is in the same position until a certain time has passed. Then it would pick the largest face.
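A minimal sketch of that gating rule, assuming (x, y, w, h) boxes & distances measured between box centers. `max_jump` and `hold_frames` are hypothetical tuning values, not numbers from the project.

```python
import math
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # x, y, w, h


def center(b: Box) -> Tuple[float, float]:
    return (b[0] + b[2] / 2, b[1] + b[3] / 2)


def distance(a: Box, b: Box) -> float:
    (ax, ay), (bx, by) = center(a), center(b)
    return math.hypot(ax - bx, ay - by)


class GatedTracker:
    """Nearest-face tracking with a maximum jump & a hold timeout."""

    def __init__(self, max_jump: float = 80.0, hold_frames: int = 5):
        self.max_jump = max_jump        # hypothetical limit, pixels per frame
        self.hold_frames = hold_frames  # hypothetical timeout, in frames
        self.target: Optional[Box] = None
        self.missed = 0

    def update(self, faces: Sequence[Box]) -> Optional[Box]:
        if self.target is not None and faces:
            nearest = min(faces, key=lambda f: distance(f, self.target))
            if distance(nearest, self.target) <= self.max_jump:
                self.target, self.missed = nearest, 0
                return self.target
        if self.target is not None and self.missed < self.hold_frames:
            # Lion not found nearby: assume it is still where it was
            self.missed += 1
            return self.target
        # No target yet, or the hold expired: restart from the largest face
        self.target = max(faces, key=lambda f: f[2] * f[3]) if faces else None
        self.missed = 0
        return self.target


t = GatedTracker(max_jump=50, hold_frames=2)
print(t.update([(100, 100, 40, 40)]))  # no target yet: takes the largest face
print(t.update([(400, 100, 60, 60)]))  # jumped too far: holds the old box
```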

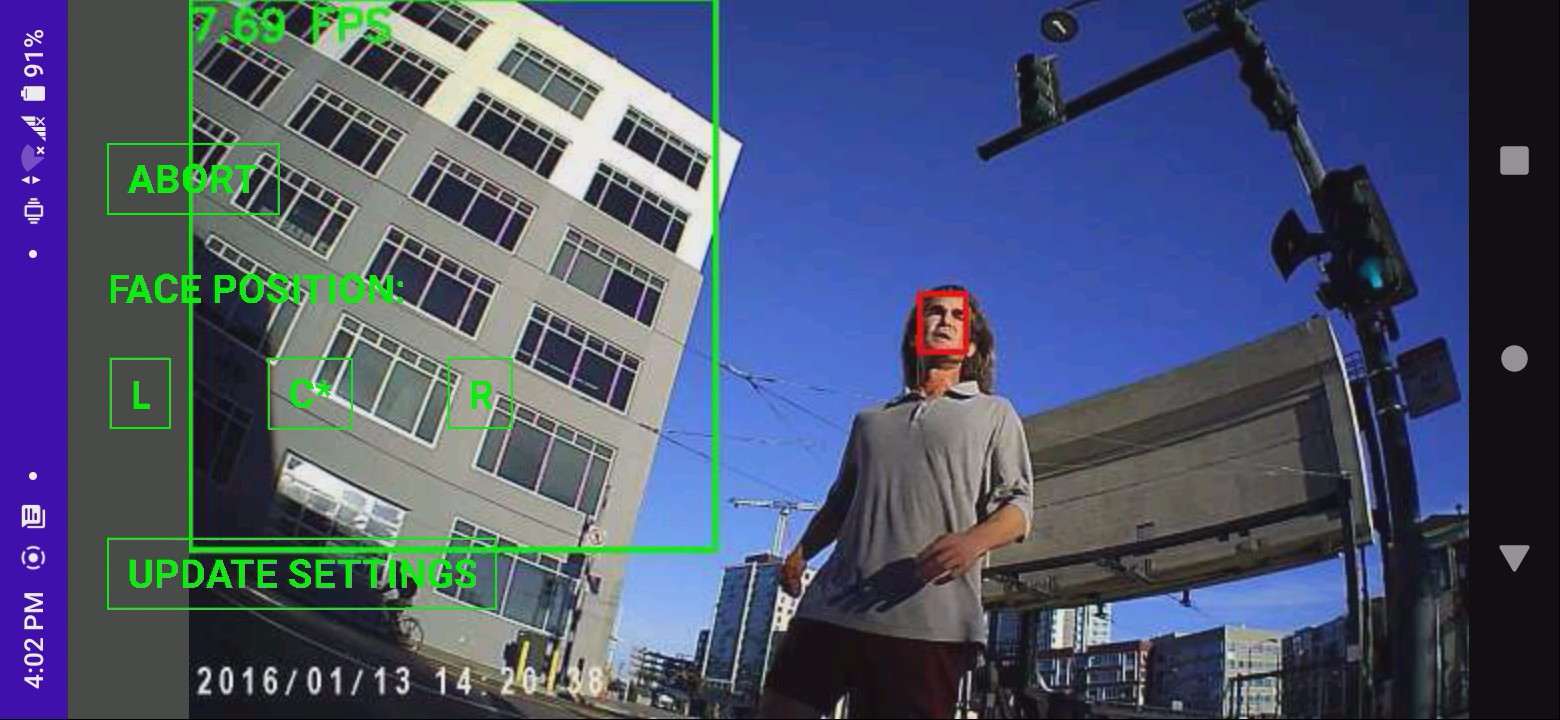

The only beneficial change so far was simplifying the optical flow to just track the nearest face instead of enforcing a maximum change in position, size & color. It only reverts to the largest face when no faces are in frame. Subjectively, this did a better job.
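The simplified rule can be sketched in one function. This is one reading of it, assuming (x, y, w, h) boxes compared by center distance & that an empty frame drops the target so the next detection starts over from the largest face; `pick_target` is a hypothetical name.

```python
import math
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # x, y, w, h


def center(b: Box) -> Tuple[float, float]:
    return (b[0] + b[2] / 2, b[1] + b[3] / 2)


def pick_target(prev: Optional[Box], faces: Sequence[Box]) -> Optional[Box]:
    """Follow the face nearest the previous target, with no limit on how far
    it moved or how much its size or color changed."""
    if not faces:
        return None  # nobody in frame: target dropped
    if prev is None:
        return max(faces, key=lambda f: f[2] * f[3])  # largest face
    px, py = center(prev)
    return min(faces, key=lambda f: math.hypot(center(f)[0] - px, center(f)[1] - py))


frames = [
    [(100, 100, 40, 40), (300, 100, 90, 90)],  # no target yet: takes the big face
    [(290, 110, 90, 90), (120, 100, 40, 40)],  # follows it to the nearest box
    [],                                        # empty frame: target dropped
    [(100, 100, 40, 40), (300, 100, 90, 90)],  # starts over from the largest
]
target = None
for faces in frames:
    target = pick_target(target, faces)
    print(target)
```

Dropping the position, size & color limits removes the hold/timeout state entirely, which leaves fewer ways for the tracker to get stuck on a stale box.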

Any further changes to the algorithm are probably a waste of time compared to waiting for better hardware to come along years from now & running a pose tracker + face tracker. Face tracking is used on autofocusing cameras because it's cheap.

2 hits where the lion was detected continuously.

2 misses where the lion wasn't detected at all.

Complete face tracked run

Face tracking failures

It continued to manely struggle with backlighting & Indian burial grounds, while at the same time the optical flow tweak might have saved a few shots. The keychain cam blacks out fast when the sun sets. It might benefit from an unstabilized GoPro + HDMI as the tracking cam, but that would be bulky, heavy & require another battery.

There might be some benefit to recording the face tracking stream on the Raspberry Pi instead of leaving the phone's screencap on. It would be another toggle on the phone app. The quality would be vastly better than the wifi stream, but it might slow things down. The screencap stops at 4.5GB, or around 50 minutes. This would be more applicable to better hardware.
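The screencap limit implies a bitrate, which gives a rough idea of how much storage a Pi-side recording at similar quality would need. This is back-of-the-envelope arithmetic assuming decimal gigabytes (10**9 bytes) & exactly 50 minutes; reading GB as GiB gives about 12.9 Mbit/s instead.

```python
# Screencap limit from the text: 4.5GB or around 50 minutes
limit_bytes = 4.5e9      # assumes decimal GB
duration_s = 50 * 60

bitrate_mbps = limit_bytes * 8 / duration_s / 1e6
print(f"implied screencap bitrate: {bitrate_mbps:.1f} Mbit/s")  # 12.0

# Storage per hour of recording at that same bitrate
gb_per_hour = bitrate_mbps * 1e6 * 3600 / 8 / 1e9
print(f"{gb_per_hour:.1f} GB per hour")  # 5.4
```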

lion mclionhead
