With Maelstrom, I want to give visitors the experience of seeing their own data "go viral" in the safe confines of a creepy exhibition--without seriously scaring them. You can see how, one sentence in, this project is already full of contradictions: Is it fun or creepy? Is their data at risk or not?

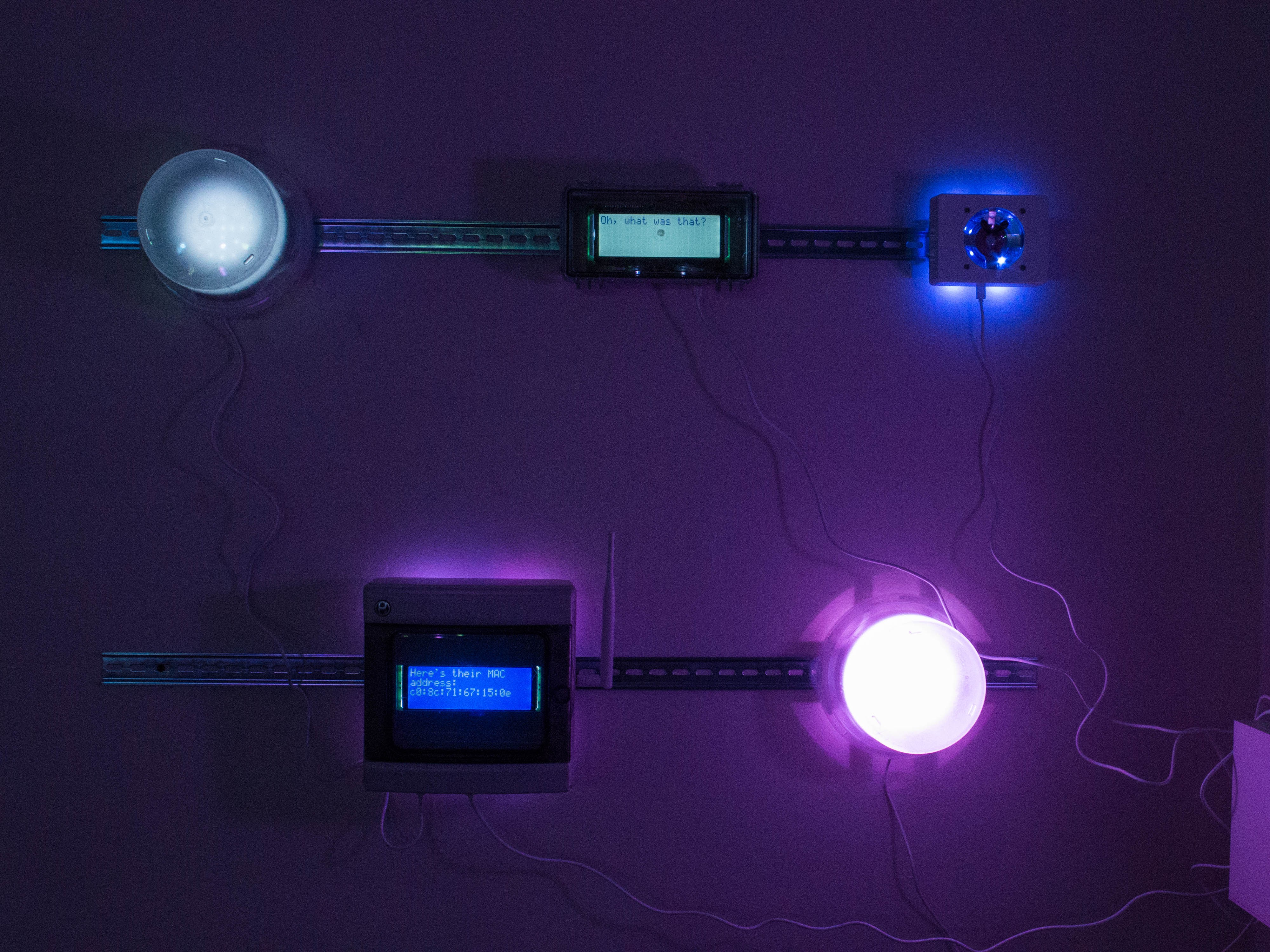

I felt it was important that the installation itself feel slightly overwhelming or stifling--like a "server room of the damned." This guided many of my aesthetic decisions, as well as one key architectural decision: it needed to feel like a lot of individual machines all around you, each commenting on you at a slightly-too-fast pace (mimicking users on a social media network).

I ended up using one computer per display. You might be wondering…

Why not use fewer computers?

One idea I considered early on was to have just a few computers, each with lots of outputs attached. It would be possible to put many displays on one machine over a bus like I2C or differential I2C, or even via USB-attached microcontrollers. I explored this option a bit--perhaps 5 or 10 machines could drive 40 displays, instead of my goal of 40 machines. The downsides of this approach are added complexity in three areas: software and overall behavior, power management, and installation.

Software and overall behavior:

Each machine would need to stagger the display of data among its attached displays--which is absolutely doable, but more complicated. The "phone tree" of radios would also play a smaller role in the installation's behavior (read more about the radios).
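As an illustration of what that staggering involves, here is a minimal Python sketch, assuming each display gets one update per fixed period and the host spreads those updates evenly across the period, with optional random jitter. The function `stagger_schedule` and its parameters are hypothetical, not taken from Maelstrom's code.

```python
import random
from typing import List, Optional, Tuple


def stagger_schedule(
    n_displays: int,
    period_s: float,
    cycles: int,
    jitter_s: float = 0.0,
    seed: Optional[int] = None,
) -> List[Tuple[float, int]]:
    """Return (time_s, display_index) update events, spread evenly within each period.

    Display i is offset by i * period_s / n_displays, plus optional random
    jitter so the updates don't look mechanically regular.
    """
    if n_displays < 1:
        raise ValueError("need at least one display")
    rng = random.Random(seed)
    spacing = period_s / n_displays
    events = []
    for cycle in range(cycles):
        for i in range(n_displays):
            t = cycle * period_s + i * spacing + rng.uniform(0.0, jitter_s)
            events.append((t, i))
    events.sort()  # one time-ordered queue for the host's single update loop
    return events
```

With four displays, a 2-second period, and no jitter, updates land at 0.0, 0.5, 1.0, and 1.5 seconds. Keeping the jitter smaller than the spacing preserves the display order within each cycle.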

Also, let's say a given display fails and needs to be replaced. How does the host machine know what devices are attached? To some extent it can look for them on an I2C or USB bus, but it's hard to distinguish between similar-looking but very different devices. (An HD44780 via a PCF8574T: is it 16x2? 20x4? 40x2? Is there a backlight attached?)
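To make the problem concrete, here is a minimal Python sketch with a simulated bus: a plain dict stands in for real I2C hardware, so no hardware library is assumed, and the names are hypothetical. A presence scan only learns which addresses acknowledge, and the PCF8574 is a simple I/O expander with no ID register, so two very different LCDs on identical backpacks look the same.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Hd44780Backpack:
    """What is physically attached: an HD44780 LCD behind a PCF8574T expander."""
    cols: int
    rows: int
    backlight: bool


# Simulated bus (hypothetical): 7-bit address -> attached device.
Bus = Dict[int, Hd44780Backpack]


def scan(bus: Bus) -> List[int]:
    """Return the addresses that respond in the non-reserved range 0x08-0x77.

    On real hardware this would be an ACK probe; it reveals presence, nothing more.
    """
    return [addr for addr in range(0x08, 0x78) if addr in bus]


machine_a: Bus = {0x27: Hd44780Backpack(cols=16, rows=2, backlight=True)}
machine_b: Bus = {0x27: Hd44780Backpack(cols=20, rows=4, backlight=False)}
```

Both `scan(machine_a)` and `scan(machine_b)` return `[0x27]`, even though one is a backlit 16x2 and the other an unlit 20x4. The geometry has to come from somewhere else.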

I would need to store configuration in one of two places. One option is inside the display devices themselves, by adding an adapter board with EEPROM memory between the machine and the display: added complexity. The other is centrally, in software/local storage or in EEPROM memory on the host board: possible to get "out of sync" with the physically attached devices.
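Whichever EEPROM holds it, the configuration could be a small fixed-size record per display. The 8-byte layout below (magic byte, version, type code, I2C address, columns, rows, flags, additive checksum) is entirely hypothetical; it is a sketch of the idea, not Maelstrom's actual format.

```python
import struct

# Hypothetical 8-byte descriptor, one per display:
# magic, format version, device type, I2C address, cols, rows, flags, checksum
MAGIC = 0xA5
VERSION = 1
BODY_FMT = "<7B"      # seven unsigned bytes; the checksum byte follows
TYPE_HD44780 = 1      # hypothetical type code
FLAG_BACKLIGHT = 0x01


def pack_descriptor(dev_type: int, addr: int, cols: int, rows: int, backlight: bool) -> bytes:
    """Encode one display's configuration for writing to EEPROM."""
    flags = FLAG_BACKLIGHT if backlight else 0
    body = struct.pack(BODY_FMT, MAGIC, VERSION, dev_type, addr, cols, rows, flags)
    return body + bytes([sum(body) & 0xFF])  # simple additive checksum


def unpack_descriptor(raw: bytes) -> dict:
    """Decode and validate a descriptor read back from EEPROM."""
    if len(raw) != 8:
        raise ValueError("descriptor must be 8 bytes")
    body, checksum = raw[:7], raw[7]
    if sum(body) & 0xFF != checksum:
        raise ValueError("checksum mismatch (blank or corrupted EEPROM?)")
    magic, version, dev_type, addr, cols, rows, flags = struct.unpack(BODY_FMT, body)
    if magic != MAGIC:
        raise ValueError("no descriptor present")
    return {
        "version": version,
        "type": dev_type,
        "addr": addr,
        "cols": cols,
        "rows": rows,
        "backlight": bool(flags & FLAG_BACKLIGHT),
    }
```

A blank EEPROM (all 0xFF) fails the checksum rather than decoding as a bogus display, which matters when a replacement part arrives unprogrammed.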

Some of the devices I wanted to use share the same I2C address by default, so I'd need to give each one a unique address--another failure point during installation. And some I2C devices' addresses aren't configurable at all, so there can be at most one per machine.
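For the PCF8574 family, the address comes from three pins, A2..A0, on top of a fixed base: 0x20-0x27 for the PCF8574 and 0x38-0x3F for the PCF8574A. Many LCD backpacks ship with all three pins pulled high, which gives 0x27, so two untouched backpacks collide. The helpers below are a hypothetical sketch of checking a planned layout for collisions before installation.

```python
from collections import Counter
from typing import Iterable, List

PCF8574_BASE = 0x20   # PCF8574: 0x20-0x27
PCF8574A_BASE = 0x38  # PCF8574A: 0x38-0x3F


def pcf8574_address(a2: int, a1: int, a0: int, variant_a: bool = False) -> int:
    """7-bit address from the A2..A0 pin levels (1 = pulled high)."""
    base = PCF8574A_BASE if variant_a else PCF8574_BASE
    return base | (a2 << 2) | (a1 << 1) | a0


def find_collisions(addresses: Iterable[int]) -> List[int]:
    """Return every address claimed by more than one device on the same bus."""
    return sorted(addr for addr, n in Counter(addresses).items() if n > 1)
```

For example, `find_collisions([pcf8574_address(1, 1, 1), pcf8574_address(1, 1, 1)])` reports `[0x27]`: both backpacks were left at their defaults.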

Now let's say a display device fails--perhaps it runs short on power, perhaps a cosmic ray hits it. On an I2C bus, one misbehaving device can freeze the entire bus. So the more output devices per machine, the more likely it is that all of that machine's outputs go dark at once.
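A rough way to quantify this, assuming each of the $n$ devices on a shared bus independently has probability $p$ of hanging the bus over some period (a simplifying assumption, not a measured figure), is:

$$
P(\text{bus hangs}) = 1 - (1 - p)^{n}
$$

With an illustrative $p = 0.01$, a machine with one display loses its output 1% of the time, while a machine with eight displays loses all eight about $1 - 0.99^{8} \approx 7.7\%$ of the time.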

Power management:

Many of the vintage displays I'm using require their own odd voltages, so a host machine with four displays might need to provide 3.3V, 5V, 6V, 9V, and 12V to its attached displays. I could distribute 5V and use local boost converters inside each display, but that leads to a large current rush at startup. I could add power sequencing to fix this, but that's more complicated still.
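In its simplest form, power sequencing means enabling the converters one at a time so their inrush peaks don't overlap. Here is a minimal Python sketch, assuming each rail is switched by a hypothetical `enable` callable (for example, a GPIO driving a converter's enable pin); the settle time is illustrative, not measured.

```python
import time
from typing import Callable, List, Sequence, Tuple


def sequence_power(
    rails: Sequence[Tuple[str, Callable[[], None]]],
    settle_s: float,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Enable each rail in turn, pausing settle_s between enables.

    Spacing the enables out means each boost converter's inrush peak has
    (hopefully) passed before the next one starts drawing current.
    """
    enabled = []
    for i, (name, enable) in enumerate(rails):
        if i > 0:
            sleep(settle_s)
        enable()
        enabled.append(name)
    return enabled
```

Passing `sleep` in as a parameter lets the sequence be tested without real delays. On hardware, the right settle time would have to come from measuring each converter.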

All these displays would pull a much larger current through the Maelstrom host board, leading to secondary problems: connector current ratings, larger PTC self-resetting "fuses," and so on.

Some of the outputs, like speakers/amplifiers and analog meters (driven by DACs), need clean power. Each additional device, especially one with a boost converter or switching regulator, makes the machine's power supply that much noisier. It's possible to fight the noise with local power filtering inside each device, but I hoped to stick with commercial modules, so I would have needed to buffer them with my own power filtration boards. In practice, this problem scaled quickly.

Installation complexity:

The last problem with the "multiple displays per machine" architecture was how to make it humane to use as an installer, with all this additional complexity to track through a chaotic few days of drilling, attaching, labeling, spackling, and so on.

If I have a single machine with an octopus of screens attached to it, I need to disconnect everything, number and label each piece, perhaps assign each one to a specific port on the host machine, and preconfigure the machine to know which displays are attached.

Why I chose self-enclosed machines instead

Keeping each Maelstrom node completely self-contained* makes the boring parts of keeping track of the individual nodes much easier. I just need to find the machine, attach it to the wall, and power it up.

*as much as possible; a few are "exploded views" of bare components attached to the wall.

I can store all of the configuration details for a machine's attached outputs in EEPROM memory on the node's single host board. The output devices themselves are installed inside the case. There is no external wiring to snag or catch when unpacking or installing the machines.

Each machine can deal with its own inrush current from boost converter power-up. Within a single, tightly controlled enclosure, it's easier to measure and adjust the power filtration on my host board.

The software can read everything it needs to know about the attached peripherals, configure critical system parameters, and compile the necessary Cython modules dynamically on startup. I can easily replace a host controller in case of a failure without worrying about any per-machine configuration stored in the controller's storage.
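Here is a minimal Python sketch of the "read everything, then configure" step, assuming the peripherals have already been decoded from the host board's EEPROM into simple records. The `Peripheral` record, the kind names, and the driver classes are all hypothetical. The Cython compilation step is not shown: Cython's `pyximport` is one real mechanism for compiling `.pyx` modules on import, but the post doesn't say which approach Maelstrom uses.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Peripheral:
    """One entry decoded from the host board's EEPROM (hypothetical format)."""
    kind: str
    options: Dict[str, int] = field(default_factory=dict)


class CharacterLcd:
    """Placeholder driver for an HD44780-style character display."""
    def __init__(self, cols: int, rows: int):
        self.cols, self.rows = cols, rows


class AnalogMeter:
    """Placeholder driver for a DAC-driven analog meter."""
    def __init__(self, full_scale: int):
        self.full_scale = full_scale


# Hypothetical registry: peripheral kind -> driver factory.
DRIVERS: Dict[str, Callable[..., object]] = {
    "hd44780": CharacterLcd,
    "meter": AnalogMeter,
}


def bring_up(peripherals: List[Peripheral]) -> List[object]:
    """Instantiate one driver per peripheral; fail loudly on anything unrecognized."""
    drivers = []
    for p in peripherals:
        factory = DRIVERS.get(p.kind)
        if factory is None:
            raise ValueError(f"no driver for peripheral kind {p.kind!r}")
        drivers.append(factory(**p.options))
    return drivers
```

Because everything machine-specific lives in the records, a replacement controller running this same code would come up with the same drivers.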

Getting this big decision made let me move on to deciding what kind of computers to use.

Chris Combs
