This project uses TensorFlow Lite running on an ESP32 to create a voice-controlled robot. It can respond to simple one-word commands: "Left", "Right", "Forward" and "Backward".

You can watch a detailed video of how it works here:

I trained a model using TensorFlow on Google's Speech Commands dataset. This has about 20 words in it, and I selected a very small subset: enough words to control a robot, but not so many that the model became unmanageable.

To generate the training data we load the WAV files in and extract spectrograms from each file.
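As a rough illustration, a spectrogram can be computed by slicing the audio into overlapping frames, applying a window to each frame and taking the magnitude of its discrete Fourier transform. This is a minimal sketch using only the Python standard library; the frame length, step size and Hann window are assumptions rather than the parameters used for this model, and in a real training pipeline you would more likely use `tf.signal.stft` than a naive DFT.

```python
import cmath
import math


def hann_window(n: int) -> list[float]:
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(samples: list[float], frame_length: int = 320,
                frame_step: int = 160) -> list[list[float]]:
    """Return one row of magnitudes (frame_length // 2 + 1 bins) per frame.

    The default sizes (20 ms frames, 10 ms step at an assumed 16 kHz sample
    rate) are illustrative. A naive O(n^2) DFT keeps the sketch dependency-free.
    """
    window = hann_window(frame_length)
    rows = []
    for start in range(0, len(samples) - frame_length + 1, frame_step):
        # Window the frame to reduce spectral leakage at its edges.
        frame = [s * w for s, w in zip(samples[start:start + frame_length], window)]
        row = []
        for k in range(frame_length // 2 + 1):  # non-negative frequency bins only
            acc = sum(x * cmath.exp(-2j * math.pi * k * t / frame_length)
                      for t, x in enumerate(frame))
            row.append(abs(acc))
        rows.append(row)
    return rows
```

A pure tone that falls exactly on a bin shows up as a peak in that bin in every frame, which is a quick sanity check for this kind of code.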

To get sufficient data for our command words, I've repeated the word samples multiple times, shifting the position of the audio and adding random noise. This gives our neural network more data to train on and should help it to generalise.
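A minimal sketch of this kind of augmentation follows. It assumes a zero-padded time shift (so each clip keeps its length) and uniform random noise; the actual shift range and noise distribution used for training aren't stated, so `max_shift` and `noise_level` are hypothetical parameters.

```python
import random


def augment(samples: list[float], max_shift: int, noise_level: float,
            rng: random.Random) -> list[float]:
    """Return a copy of `samples` shifted by a random offset, with noise added.

    Samples shifted past either end are dropped and the gap is filled with
    zeros. Noise is uniform in [-noise_level, noise_level].
    """
    shift = rng.randint(-max_shift, max_shift)
    n = len(samples)
    shifted = [0.0] * n
    for i, s in enumerate(samples):
        j = i + shift
        if 0 <= j < n:
            shifted[j] = s
    return [s + rng.uniform(-noise_level, noise_level) for s in shifted]


def augmented_copies(samples: list[float], repeats: int, max_shift: int,
                     noise_level: float, seed: int = 0) -> list[list[float]]:
    """Produce `repeats` differently shifted, differently noised copies of one clip."""
    rng = random.Random(seed)  # seeded so the training set is reproducible
    return [augment(samples, max_shift, noise_level, rng) for _ in range(repeats)]
```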

A couple of the words, "forward" and "backward", have fewer examples, so I've repeated these more often.
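One simple way to decide how often to repeat each word is to pick a target number of examples per word and divide. This is a sketch under that assumption; the helper name and the counts in the usage example are illustrative, not the dataset's real figures.

```python
import math


def repeats_per_word(example_counts: dict[str, int], target: int) -> dict[str, int]:
    """Number of (augmented) copies per original example so that every word
    ends up with at least `target` training examples. Rarer words get more."""
    return {word: max(1, math.ceil(target / count))
            for word, count in example_counts.items()}


# Illustrative counts only.
print(repeats_per_word({"left": 4000, "right": 4000,
                        "forward": 1500, "backward": 1600}, target=8000))
```

With these made-up counts, "left" and "right" are repeated twice while "forward" and "backward" are repeated five or six times.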

I've ended up with a fairly simple convolutional neural network: two convolution layers followed by a fully connected layer and then an output layer.

As we are trying to recognise multiple different words, the output layer uses the "softmax" activation function and we use "CategoricalCrossentropy" as our loss function.
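To make the pairing concrete: softmax turns the network's raw output scores into probabilities that sum to 1 across the words, and categorical cross-entropy is the negative log of the probability assigned to the correct word. This is a small pure-Python sketch of the two formulas, not the TensorFlow implementation; the clipping epsilon is an assumption added to avoid `log(0)`.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(one_hot: list[float], probs: list[float],
                             eps: float = 1e-7) -> float:
    """-sum(y * log(p)) with probabilities clipped into [eps, 1 - eps]."""
    return -sum(y * math.log(min(max(p, eps), 1 - eps))
                for y, p in zip(one_hot, probs))
```

With a one-hot target only the correct class contributes, so the loss falls towards zero as the model grows more confident in the right word. A completely undecided model over four classes scores `ln 4`.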

After training the model I ended up with just under 92% accuracy on the training data and just over 92% accuracy on the validation data. The test dataset gives us a similar level of performance.

Looking at the confusion matrix we can see that it's mostly misclassifying words as invalid. This is quite good for our use case as it should mean the robot errs on the side of false negatives instead of false positives.
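A minimal sketch of building a confusion matrix and checking where the mistakes go. Rows are the true word and columns the predicted word. The label list, including `_invalid` as the name of the "invalid" class, is hypothetical.

```python
from collections import Counter

# Hypothetical label set; "_invalid" stands in for the "invalid" class.
LABELS = ["forward", "backward", "left", "right", "_invalid"]


def confusion_matrix(true_labels, predicted_labels, labels=LABELS):
    """Count predictions: matrix[true_index][predicted_index]."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for truth, prediction in zip(true_labels, predicted_labels):
        matrix[index[truth]][index[prediction]] += 1
    return matrix


def errors_by_predicted_label(matrix, labels=LABELS):
    """Sum the off-diagonal cells of each column, i.e. which prediction absorbs the mistakes."""
    errors = Counter()
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            if i != j and count:
                errors[labels[j]] += count
    return errors
```

If most of the errors land in the `_invalid` column, the model errs towards doing nothing rather than moving the robot the wrong way.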

As the model doesn't appear to be overfitting, I've trained it on the complete dataset. This gives us a final accuracy of around 94%, and the confusion matrix looks much better. However, because the data used for evaluation is now part of the training data, some of this improvement may be due to overfitting.

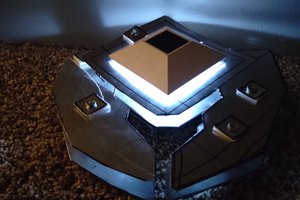

For the actual robot, I built a very simple two-wheeled robot. To drive the wheels I used two continuous rotation servos and a small power cell. It has quite a wide wheelbase, as the breadboard with the ESP32 on it is quite large.

To run the TensorFlow model on the ESP32 I'm using TensorFlow Lite. I've wrapped this in my own code to make it slightly easier to use.

To read audio we use I2S, which can read either from the built-in ADC (for analogue microphones) or directly from I2S digital microphones.

The command detector is driven by a task that waits for new audio samples to become available and then services the detector.

Our command detector rewinds the audio data by one second, gets the spectrogram and then runs the prediction.
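"Rewinding by one second" amounts to keeping the most recent second of audio in a circular buffer and reading it back oldest-first. Here is a sketch of that idea in Python; `AudioRingBuffer` is a hypothetical helper, not the project's C++ class, and the 16 kHz figure is an assumed sample rate.

```python
class AudioRingBuffer:
    """Circular buffer that always holds the most recent `capacity` samples.

    With capacity = one second of audio (e.g. 16000 samples at an assumed
    16 kHz), window() returns the audio 'rewound' by one second.
    """

    def __init__(self, capacity: int):
        self._data = [0] * capacity
        self._capacity = capacity
        self._write_pos = 0  # where the next sample goes; also the oldest sample

    def push(self, samples) -> None:
        """Append new samples, overwriting the oldest ones once the buffer is full."""
        for sample in samples:
            self._data[self._write_pos] = sample
            self._write_pos = (self._write_pos + 1) % self._capacity

    def window(self) -> list:
        """Return the buffered samples in time order, oldest first."""
        return self._data[self._write_pos:] + self._data[:self._write_pos]
```

The detector would then compute the spectrogram of `window()` and pass it to the model. Because the buffer is fixed-size, no memory is allocated per detection, which matters on a microcontroller.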

To improve the robustness of our detection we sample the prediction over multiple audio segments and also reject any detections that happen within one second of a previous detection.
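This sketch shows one plausible way to combine predictions and suppress repeats: it averages the last few probability vectors, accepts a command only when the averaged score clears a threshold, and then ignores detections for one second. The averaging, the threshold value and the `CommandSmoother` class are assumptions for illustration, not the project's exact logic.

```python
from collections import deque


class CommandSmoother:
    """Average predictions over several audio segments and debounce detections."""

    def __init__(self, labels, window=3, threshold=0.9, refractory_ms=1000,
                 ignore_label="_invalid"):
        self.labels = labels
        self.history = deque(maxlen=window)  # most recent probability vectors
        self.threshold = threshold
        self.refractory_ms = refractory_ms
        self.ignore_label = ignore_label     # hypothetical name of the "invalid" class
        self.last_detection_ms = None

    def update(self, probs, now_ms):
        """Feed one prediction; return a detected label or None."""
        self.history.append(probs)
        if len(self.history) < self.history.maxlen:
            return None  # not enough segments yet
        averaged = [sum(col) / len(self.history) for col in zip(*self.history)]
        best = max(range(len(averaged)), key=averaged.__getitem__)
        label = self.labels[best]
        if label == self.ignore_label or averaged[best] < self.threshold:
            return None
        if (self.last_detection_ms is not None
                and now_ms - self.last_detection_ms < self.refractory_ms):
            return None  # too soon after the previous detection
        self.last_detection_ms = now_ms
        return label
```

Averaging means a single confident but spurious segment can't trigger the robot on its own, and the refractory period stops one spoken word from being reported several times as it slides through consecutive windows.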

If we detect a command then we queue it up for processing by the command processor.

Our command processor runs a task that listens to this queue for commands.
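The detector and the processor form a classic producer/consumer pair joined by a queue. On the ESP32 this would presumably be a FreeRTOS queue between two tasks. The sketch below shows the same pattern with Python's `queue.Queue` and a thread. The `None` shutdown sentinel and `run_demo` exist only to make the example terminate.

```python
import queue
import threading

SHUTDOWN = None  # hypothetical sentinel used only to end the demo


def command_processor(commands: queue.Queue, handled: list) -> None:
    """Consumer task: block until a command arrives, then act on it."""
    while True:
        command = commands.get()  # blocks, like a task waiting on an RTOS queue
        if command is SHUTDOWN:
            break
        handled.append(command)  # stand-in for updating the motor PWM


def run_demo(detected_commands):
    commands: queue.Queue = queue.Queue()
    handled = []
    worker = threading.Thread(target=command_processor, args=(commands, handled))
    worker.start()
    for command in detected_commands:  # producer side: the command detector
        commands.put(command)
    commands.put(SHUTDOWN)
    worker.join()
    return handled
```

Decoupling the two means a slow motor update never delays audio processing: the detector just drops a command into the queue and carries on.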

When a command arrives it changes the PWM signal that is being sent to the motors to either stop them or set the required direction.

To move forward we drive both motors forward, for backwards we drive both motors backwards. For left, we reverse the left motor and drive the right motor forward and for right, we do the opposite, right motor reverse and left motor forward.
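The mapping above fits naturally in a lookup table of logical directions per motor, before any allowance for how each servo is mounted. In this sketch the `stop` entry and the fall-back to stopping for unknown commands are assumptions.

```python
# Logical drive direction per motor: +1 = forward, -1 = reverse, 0 = stopped.
# Tuples are (left motor, right motor). "stop" is a hypothetical extra command.
MOTOR_DIRECTIONS = {
    "forward": (+1, +1),
    "backward": (-1, -1),
    "left": (-1, +1),   # spin left: left wheel back, right wheel forward
    "right": (+1, -1),  # spin right: the mirror image of "left"
    "stop": (0, 0),
}


def motor_directions(command: str) -> tuple[int, int]:
    """Look up the (left, right) directions; unknown commands stop the robot."""
    return MOTOR_DIRECTIONS.get(command, (0, 0))
```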

With our continuous rotation servos, a pulse width of 1500 µs should hold them stopped; shorter pulses should reverse them and longer pulses should drive them forward.
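The servo responds to the pulse width, but a PWM peripheral is usually programmed with a duty count relative to its timer resolution: duty = pulse width / period × 2^bits. As a worked example, assuming the common 50 Hz servo frequency (a 20 ms period) and a 16-bit timer, 1500 µs is 7.5% of the period, or about 4915 counts. The frequency and resolution are assumptions, so check what your own PWM setup uses.

```python
def pulse_width_to_duty(pulse_us: float, frequency_hz: float = 50.0,
                        resolution_bits: int = 16) -> int:
    """Convert a servo pulse width in microseconds into a PWM duty count.

    duty = pulse_width / period * 2**resolution_bits, rounded to the nearest count.
    """
    period_us = 1_000_000 / frequency_hz
    return round(pulse_us / period_us * (1 << resolution_bits))


print(pulse_width_to_duty(1500))  # stop
print(pulse_width_to_duty(1000))  # typically full reverse
print(pulse_width_to_duty(2000))  # typically full forward
```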

I've slightly tweaked the right motor's forward value, as it was not turning as fast as the left motor and this caused the robot to veer off to one side.

Note that because the right motor is mounted upside down, to drive it forward we run it in reverse, and to drive it backwards we run it forward.
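Inverting the upside-down motor and trimming its speed can both live in a small per-motor calibration. In this sketch, `MotorCalibration`, the 500 µs offsets and the 520 µs trim are all made-up values for illustration. The trim is applied in the robot's frame of reference and the inversion afterwards, so "forward" always means "move the robot forward".

```python
from dataclasses import dataclass

STOP_US = 1500  # pulse width that should hold a continuous rotation servo still


@dataclass
class MotorCalibration:
    """Hypothetical per-motor settings; tune these for your own servos."""
    inverted: bool = False        # True for a motor mounted upside down
    forward_offset_us: int = 500  # distance from STOP_US when driving the robot forward
    reverse_offset_us: int = 500  # distance from STOP_US when driving the robot backward

    def pulse_for(self, direction: int) -> int:
        """Map a logical direction (+1 forward, -1 reverse, 0 stop) to a pulse width in microseconds."""
        if direction > 0:
            offset = self.forward_offset_us
        elif direction < 0:
            offset = -self.reverse_offset_us
        else:
            offset = 0
        if self.inverted:
            offset = -offset  # upside-down motor: robot-forward means running the servo in reverse
        return STOP_US + offset


LEFT = MotorCalibration()
RIGHT = MotorCalibration(inverted=True, forward_offset_us=520)  # made-up trim value
```

Here the right motor's forward pulse comes out at 980 µs, slightly further from the stop point than the left motor's 2000 µs, which is the kind of small adjustment used to stop the robot veering.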

You may need to calibrate your own motors to get the robot to go in a straight line.

It works reasonably well!

It does occasionally confuse words and...

Chris G
