The project is a web-based application written in HTML, CSS and TypeScript.

A demo is available HERE (tested on Chrome).

A pretrained neural network (MiDaS, by Intel), loaded in Python with PyTorch and PyTorch Hub, was used to predict the depth of each selected image. The resulting depth map is then reconstructed in 3D space using Three.js and GLSL.
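The README does not show how the depth map becomes geometry, so the following is only a minimal sketch of one common approach, assuming the MiDaS output has been exported from Python as a row-major array and is used to displace a plane. MiDaS predicts relative inverse depth (larger means closer), so the sketch min-max normalizes it and pushes vertices toward a camera placed on +z. `DepthMap`, `normalizeDepth`, `displaceGrid` and the shader uniforms `depthMap` and `depthScale` are hypothetical names. `depthScale` stands in for the depth the user changes with the mouse wheel. The CPU function mirrors the GLSL vertex shader so the math can be checked outside a browser.

```typescript
// Hypothetical sketch: displace a grid by a MiDaS-style depth map.

/** A depth map exported from the Python side, stored row-major. */
interface DepthMap {
  width: number;
  height: number;
  data: Float32Array; // width * height raw predictions
}

/** Min-max normalize raw predictions to [0, 1]. */
function normalizeDepth(map: DepthMap): Float32Array {
  let min = Infinity;
  let max = -Infinity;
  for (let i = 0; i < map.data.length; i++) {
    min = Math.min(min, map.data[i]);
    max = Math.max(max, map.data[i]);
  }
  const range = max - min || 1; // a flat map would otherwise divide by zero
  return map.data.map((v) => (v - min) / range);
}

/**
 * CPU reference: one vertex per pixel on a plane of height 1 and width
 * `aspect`, pushed toward a camera on +z by normalized depth * depthScale.
 */
function displaceGrid(map: DepthMap, depthScale: number): Float32Array {
  const depth = normalizeDepth(map);
  const aspect = map.width / map.height;
  const positions = new Float32Array(map.width * map.height * 3);
  for (let row = 0; row < map.height; row++) {
    for (let col = 0; col < map.width; col++) {
      const i = row * map.width + col;
      const u = map.width > 1 ? col / (map.width - 1) : 0.5;
      const v = map.height > 1 ? row / (map.height - 1) : 0.5;
      positions[i * 3] = (u - 0.5) * aspect;
      positions[i * 3 + 1] = 0.5 - v; // image row 0 is the top edge
      positions[i * 3 + 2] = depth[i] * depthScale;
    }
  }
  return positions;
}

// GPU version of the same displacement for a THREE.ShaderMaterial.
// `depthMap` and `depthScale` are hypothetical uniform names; `position`, `uv`,
// `projectionMatrix` and `modelViewMatrix` are supplied by Three.js.
const depthVertexShader = /* glsl */ `
  uniform sampler2D depthMap;
  uniform float depthScale;
  varying vec2 vUv;
  void main() {
    vUv = uv;
    float d = texture2D(depthMap, uv).r; // assumes the texture holds normalized depth
    vec3 displaced = position + vec3(0.0, 0.0, d * depthScale);
    gl_Position = projectionMatrix * modelViewMatrix * vec4(displaced, 1.0);
  }
`;
```

Doing the displacement in the vertex shader means a mouse-wheel change only needs to update one uniform rather than rebuild the geometry each frame.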

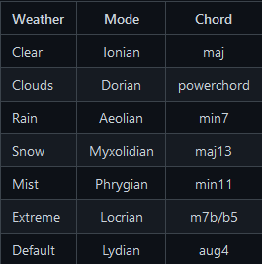

The user can move the camera with the mouse and change the depth with the mouse wheel. Selecting a city in the interface triggers a transition animation. On top of that, a genetic algorithm creates a constantly evolving melody over a pad. The mode, and the chord voicing of the pad, depend on the current weather conditions at that location, retrieved through the OpenWeatherMap API.
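The README does not specify how weather maps to music or how the genetic algorithm is set up, so the following is a hypothetical sketch rather than the project's code. It reads the condition group from `weather[0].main` in an OpenWeatherMap current-weather response (values such as "Clear", "Clouds" or "Rain"). From that it chooses a mode (the table is an assumption) and a seventh-chord pad voicing. It then evolves an 8-note melody using tournament selection, single-point crossover, point mutation and elitism. The fitness rules, note range, population size and all function names are illustrative.

```typescript
// Hypothetical sketch: weather -> mode -> pad voicing + GA-evolved melody.

/** The only part of the current-weather response this sketch reads. */
interface WeatherResponse {
  weather: { main: string }[];
}

// Modes as semitone offsets from the root.
const MODES = {
  ionian: [0, 2, 4, 5, 7, 9, 11],
  dorian: [0, 2, 3, 5, 7, 9, 10],
  phrygian: [0, 1, 3, 5, 7, 8, 10],
  lydian: [0, 2, 4, 6, 7, 9, 11],
  aeolian: [0, 2, 3, 5, 7, 8, 10],
};

/** Illustrative mapping; a missing condition is treated as "Clear". */
function modeForWeather(res: WeatherResponse): number[] {
  const condition = res.weather[0]?.main ?? "Clear";
  switch (condition) {
    case "Clear": return MODES.lydian;
    case "Clouds": return MODES.dorian;
    case "Rain":
    case "Drizzle": return MODES.aeolian;
    case "Thunderstorm": return MODES.phrygian;
    default: return MODES.ionian;
  }
}

/** Seventh-chord pad voicing: stack the 1st, 3rd, 5th and 7th mode degrees. */
function padVoicing(mode: number[], root: number): number[] {
  return [mode[0], mode[2], mode[4], mode[6]].map((step) => root + step);
}

/** Small seeded LCG so runs are reproducible. */
function makeRng(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state * 1664525 + 1013904223) >>> 0;
    return state / 4294967296;
  };
}

const MELODY_LENGTH = 8;
const LOW_NOTE = 60; // MIDI C4
const HIGH_NOTE = 72; // MIDI C5

function randomNote(rand: () => number): number {
  return LOW_NOTE + Math.floor(rand() * (HIGH_NOTE - LOW_NOTE + 1));
}

/** +2 per in-mode note, -1 per leap wider than a fifth, +2 for ending on the root. */
function fitness(melody: number[], mode: number[], root: number): number {
  let score = 0;
  for (let i = 0; i < melody.length; i++) {
    const degree = (((melody[i] - root) % 12) + 12) % 12;
    if (mode.indexOf(degree) !== -1) score += 2;
    if (i > 0 && Math.abs(melody[i] - melody[i - 1]) > 7) score -= 1;
  }
  if ((melody[melody.length - 1] - root) % 12 === 0) score += 2;
  return score;
}

function evolveMelody(mode: number[], root: number, generations: number, seed: number): number[] {
  const rand = makeRng(seed);
  const popSize = 20;
  const mutationRate = 0.1;
  const score = (m: number[]) => fitness(m, mode, root);
  const fittest = (pop: number[][]) => pop.reduce((best, m) => (score(m) > score(best) ? m : best));
  let population: number[][] = [];
  for (let p = 0; p < popSize; p++) {
    const melody: number[] = [];
    for (let i = 0; i < MELODY_LENGTH; i++) melody.push(randomNote(rand));
    population.push(melody);
  }
  // Tournament selection: the fitter of two random individuals.
  const pick = (): number[] => {
    const a = population[Math.floor(rand() * popSize)];
    const b = population[Math.floor(rand() * popSize)];
    return score(a) >= score(b) ? a : b;
  };
  for (let g = 0; g < generations; g++) {
    const next: number[][] = [fittest(population)]; // elitism: keep the best melody
    while (next.length < popSize) {
      const cut = 1 + Math.floor(rand() * (MELODY_LENGTH - 1)); // single-point crossover
      const child = pick().slice(0, cut).concat(pick().slice(cut));
      for (let i = 0; i < child.length; i++) {
        if (rand() < mutationRate) child[i] = randomNote(rand); // point mutation
      }
      next.push(child);
    }
    population = next;
  }
  return fittest(population);
}
```

Because of elitism, the best melody never gets worse from one generation to the next. For a "constantly evolving" effect, one option (again an assumption) is to keep the population alive and advance a single generation per bar instead of running many generations at once.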

Matteo Amerena

Yi-Wei Chen

Costin Stroie

Koen Hufkens