This sound chip was first produced in 1978, and was in arcade and pinball games, the Intellivision and Vectrex game consoles, and in sound cards for the Apple II and TRS-80 Color Computer.

How It Works

Library

A demonstration of the core functionalities can be found in main.py:

# Initialize sound object with pin definition. sound = GiSound(d0=8, d1=10, d2=12, d3=16, d4=18, d5=22, d6=24, d7=26, bc1=36, bdir=38, reset=40) # Set mixer; 1=0N, 0=OFF; (toneA, toneB, toneC, noiseA, noiseB, noiseC) sound.set_mixer(1, 1, 1, 0, 0, 0) # Set volume; 1=0N, 0=OFF; (volumeA, volumeB, volumeC) sound.set_volume(1, 1, 1) for i in range(4096): # Set tone value, 0-4096; (toneA, toneB, toneC) sound.set_tone(i, i+20, i+40) time.sleep(0.001) # Set noise, 0-31. sound.set_noise(2) sound.set_mixer(1, 1, 1, 1, 1, 1) sound.set_tone(200, 200, 200) # Define an envelope. sound.set_envelope_freq(10000) # 0-65535 sound.set_envelope_shape(0, 1, 1, 0) # 1=0N, 0=OFF; (continue, attack, alternate, hold) sound.enable_envelope(1, 1, 1) # 1=0N, 0=OFF; (chanA, chanB, chanC) time.sleep(2) # Turn off all sound output. sound.volume_off()

Further details are availble in the library.

Speech Synthesis

I'm sure there are better ways to go about this (e.g. Software Automatic Mouth), but I don't really understand them, so I said, "Dammit Jim, I'm a hacker, not a linguist" and came up with my own approach.

I first created a script that would systematically make each tone/noise combination that the AY-3-8910 can produce, and record each as a .wav file. Well, not quite everything possible; I only used 1 channel for tones (3 are available), and I didn't use any envelope functions. The number of conditions would have grown exponentially if I had done so, and I wasn't sure how much would be gained through their use.

Next, I recorded the target speech, "Greetings, Professor Falken." I split this into 30 millisecond segments. That seemed like a reasonable length to me for a minimal amount of sound that is perceivable, but I am making things up as I go along, so...

After this, I generated spectrograms for both the library of AY-3-8910 sounds, and the target sound clips. Finally, I created another script that compares each target sound to every available library sound, calculates the mean squared error of the difference, and then reports back the best match (i.e. lowest MSE).

This results in a sequence of tone/noise segments to run sequentially on the AY-3-8910 to reproduce the target speech. I did that here. If you don't have an AY-3-8910 sitting around, you can listen to a .wav of the result here. It's rough, to be sure, but it is distinguishable. And I don't want it to be too good, or it wouldn't be retro enough...at least that is what I like to tell myself. Seriously though, the first time I heard this I was very surprised that the beeps and hisses of the AY-3-8910 could be turned into speech at all and was quite pleased with the result.

While this approach only allows the chip to say one phrase, the next step is fairly obvious. I could take the same approach to generate sequences to reproduce all of the English phonemes. From there, I could just string together phonemes as needed to synthesize any arbitrary speech.

Media

YouTube:

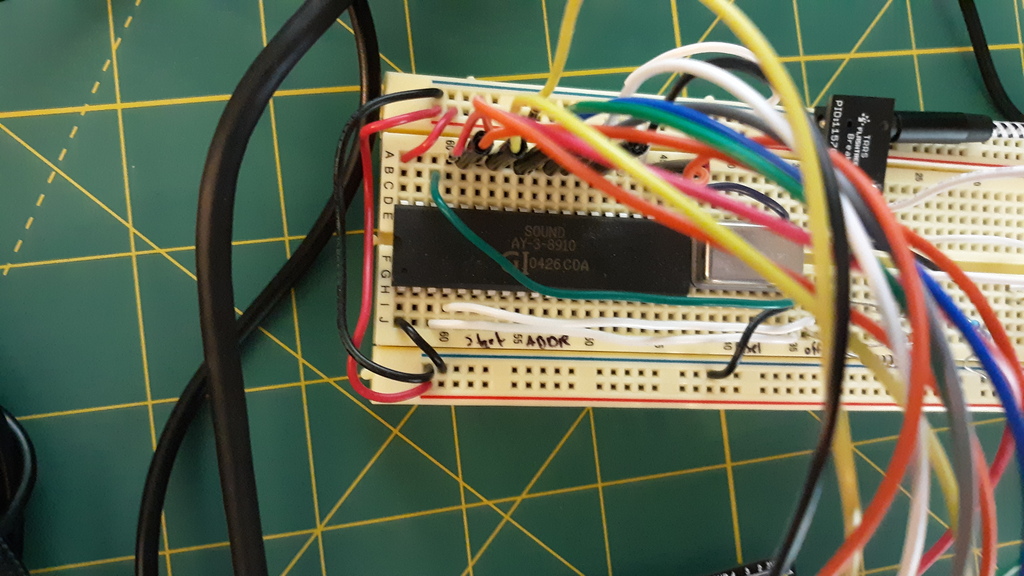

Bill of Materials

- 1 x Raspberry Pi 400, or similar

- 1 x AY-3-8910 sound generator

- 1 x 3.5mm jack to USB audio adapter

- 1 x Speaker (3.5mm jack input)

- 1 x 2 MHz crystal oscillator

- 1 x TRRS jack breakout board

- 2 x 1 nF capacitor

- 3 x 10K ohm resistor

- 1 x 1K ohm resistor

- 1 x breadboard

- miscellaneous wires

About the Author

Nick Bild

Nick Bild

Aspiring Roboticist

Aspiring Roboticist

Maybe there's a way to further improve the sound using a different mechanism.

Some guys got really good sounds out of a 80's sound chip using Viterbi coding:

https://www.msx.org/forum/development/msx-development/crystal-clean-pcm-8bit-samples-poor-psg