The goal for this experiment was to prove that the frame-separation and ghost-correction algorithm works. Here's the test image as taken with a DSLR. The camera exposure time (shutter open) was 15 seconds, which included a 4-second red flash, three seconds for me to rearrange the dice in the dark, a 1-second blue flash, another rearrangement, and a 1-second green flash. I know from using this camera for astrophotography that it has poor red sensitivity (it's practically blind to the H-alpha line at 656 nm), so I used more red light.

As you can see, the image is a jumble of the three scenes. These scenes won't make a particularly exciting animation, but they should prove that the algorithm works. The problem, of course, is cross-sensitivity between the r, g, and b pixels in the camera sensor and the r, g, and b LEDs: each pixel sees all three LEDs to some degree, which produces ghosts when you try to separate the frames.

Here are the raw red, green, and blue channels of the image (left, center, and right, respectively). The ghosting is clearly visible, especially in the green and blue images; in fact, in the green image it's difficult to tell which dice are the ghosts! It's present in the red, too, but you really have to look for it.

Now, here are the corrected images with the ghost-correction algorithm applied:

You can still see some minor ghosting in the green channel (ghosts of ghosts?), but overall the images are greatly improved: they'd certainly be good enough for a short animation, had they been taken of a moving object. There is also a scaling issue in my calibration algorithm that darkened the green image, but if I can't figure out the "correct" way to fix it, I can always manually adjust the image brightnesses to match (with very little guilt).
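
If the manual brightness tweak were ever automated, one simple option is to give each separated frame a single gain so that its mean matches a reference frame. This is a minimal sketch, not the method used here; `match_brightness` is a hypothetical helper, and it assumes the three scenes have similar average content (true for rearranged dice, much less so in general).

```python
import numpy as np

def match_brightness(frames, reference=0):
    """Scale each frame by one gain so its mean equals the reference frame's mean.

    Assumption: the scenes have similar average content, so any difference in
    mean brightness comes from the calibration scale rather than the scene.
    """
    target = float(np.mean(frames[reference]))
    matched = []
    for frame in frames:
        mean = float(np.mean(frame))
        if mean == 0.0:
            raise ValueError("cannot rescale an all-black frame")
        matched.append(np.asarray(frame, dtype=np.float64) * (target / mean))
    return matched

# Hypothetical example: the "green" frame came out at half brightness.
red = np.full((2, 2), 0.6)
green = np.full((2, 2), 0.3)
blue = np.full((2, 2), 0.6)
red, green, blue = match_brightness([red, green, blue])
```

A single gain per frame is the crudest possible correction; it cannot fix ghosting, only the overall level.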

Overall, I'm pretty excited by this result. I knew the math worked out, but seeing it work so well in practice is very satisfying.

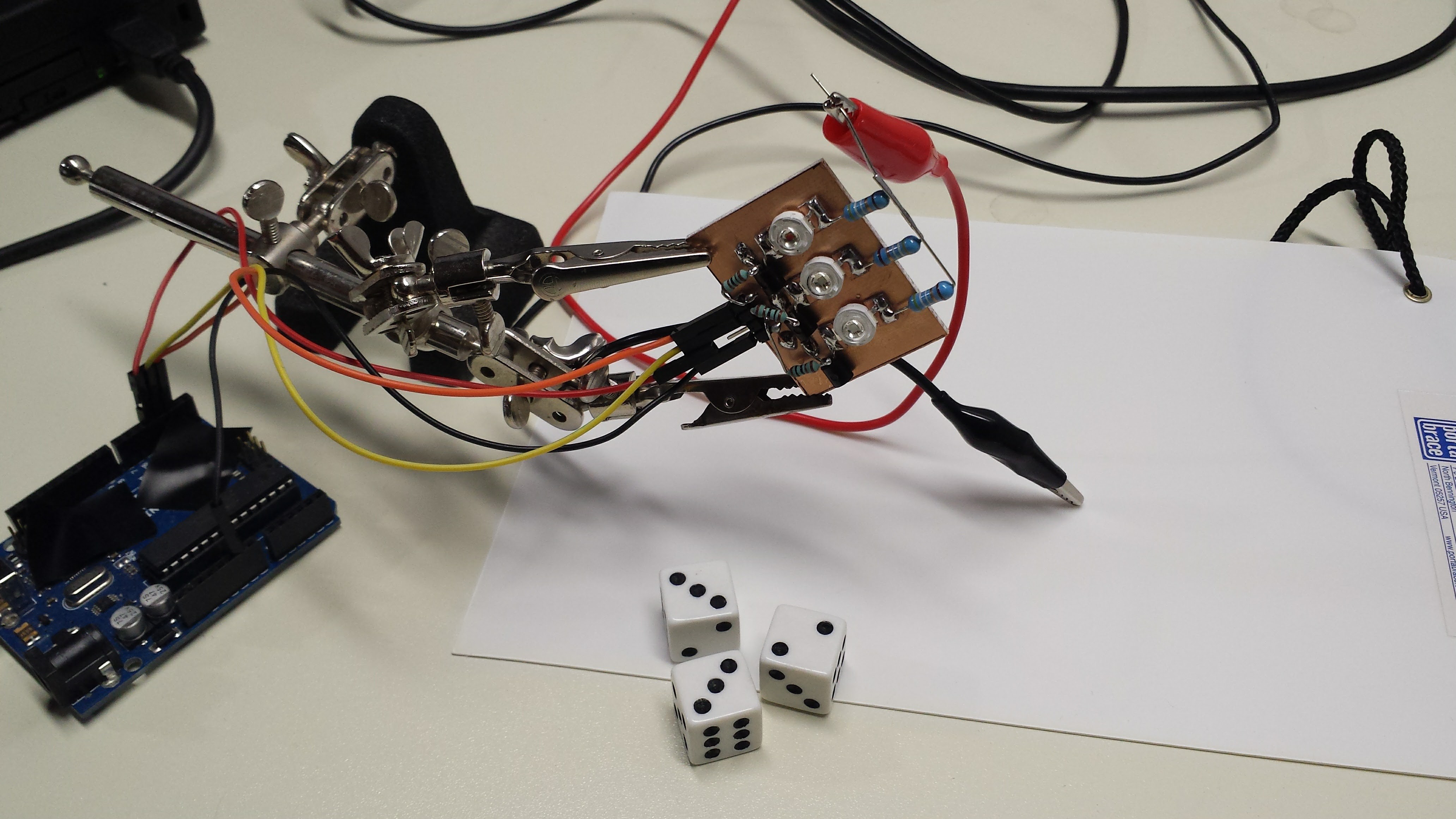

Here's the simple setup I used to capture the test images:

Ghost-Correction Algorithm

Those not interested in the mathematical details can stop reading now :-)

I used this simple model for image formation:

$$I = M S$$

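Here, I is any particular pixel in the RGB image, M is a 3×3 matrix giving the sensitivity of each camera channel to each LED, and S is a vector representing the brightness of that particular pixel in the three scenes. You can write this out in terms of the components (the first subscript of M names the camera channel, the second names the LED):

$$\begin{pmatrix} I_r \\ I_g \\ I_b \end{pmatrix} = \begin{pmatrix} M_{rr} & M_{rg} & M_{rb} \\ M_{gr} & M_{gg} & M_{gb} \\ M_{br} & M_{bg} & M_{bb} \end{pmatrix} \begin{pmatrix} S_r \\ S_g \\ S_b \end{pmatrix}$$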
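
The model is easy to play with numerically. The sketch below builds a synthetic exposure from three scene arrays and shows how a pixel that is bright in only one scene leaks into the other camera channels as a ghost. The matrix values are made up for illustration (they are not the ones measured for this log), and `compose_exposure` is a hypothetical helper name.

```python
import numpy as np

def compose_exposure(scenes, M):
    """Simulate one long exposure containing three LED-lit scenes.

    scenes: (H, W, 3) array; channel 0 is the scene under the red LED,
            1 under the green LED, 2 under the blue LED.
    M:      3x3 matrix; M[c, k] is camera channel c's response to LED k.
    Returns I with shape (H, W, 3), where I = M S at every pixel.
    """
    return np.einsum("ck,hwk->hwc", M, scenes)

# Made-up sensitivities for illustration only (not measured values).
M = np.array([[0.90, 0.05, 0.02],
              [0.10, 0.80, 0.30],
              [0.02, 0.15, 0.85]])

scenes = np.zeros((1, 2, 3))
scenes[0, 0, 2] = 1.0          # pixel (0, 0) is lit only in the blue-LED scene
I = compose_exposure(scenes, M)
# I[0, 0] equals M[:, 2]: besides blue, the green channel records 0.30,
# which is the kind of ghost visible in the raw channels.
```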

We measure I with the camera. If we have an estimate for M, we can reconstruct the individual components of S (in other words, the pixel values in the three scenes) by applying the inverse matrix:

$$S = M^{-1} I$$
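
Applied to a whole image, the inversion is one matrix product per pixel. Here is a minimal NumPy sketch, assuming the image is already a float array of linear values; `separate_frames` is a hypothetical name and the matrix is made up. The round trip through the forward model checks that the scenes come back exactly.

```python
import numpy as np

def separate_frames(image, M):
    """Recover the three LED scenes from one linear RGB exposure.

    image: float array (H, W, 3) of linear camera values.
    M:     3x3 sensitivity matrix; M[c, k] is camera channel c's response to LED k.
    Returns S with shape (H, W, 3), where S = M^-1 I at every pixel.
    """
    M_inv = np.linalg.inv(M)  # raises numpy.linalg.LinAlgError if M is singular
    return np.einsum("kc,hwc->hwk", M_inv, image)

# Round trip on synthetic data with a made-up matrix (not measured values).
M = np.array([[0.90, 0.05, 0.02],
              [0.10, 0.80, 0.30],
              [0.02, 0.15, 0.85]])
rng = np.random.default_rng(0)
scenes = rng.uniform(0.0, 1.0, size=(4, 5, 3))
image = np.einsum("ck,hwk->hwc", M, scenes)  # forward model I = M S
recovered = separate_frames(image, M)
print(np.allclose(recovered, scenes))  # True
```

On real data, noise can push a few recovered values slightly negative or past the maximum, so clipping to the valid range afterwards is a reasonable (assumed) final step.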

To estimate M, three separate images were taken of a photographic white balance card, one illuminated with each of the LEDs:

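For calibration, I used only pixels whose maximum component was between 100 and 254. This rejects dark pixels, where noise dominates, as well as pixels clipped at the 8-bit ceiling of 255. Each image gives an estimate for one column of the matrix M. For example, the red image has S = (Sr, 0, 0), so:

$$I = M \begin{pmatrix} S_r \\ 0 \\ 0 \end{pmatrix} = S_r \, \mathbf{m}_r$$

where $\mathbf{m}_r = (M_{rr}, M_{gr}, M_{br})^T$ is the first column of M.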

so we can estimate the first column of M, $\mathbf{m}_r$, by:

$$\mathbf{m}_r = \frac{1}{S_r} I$$

We don't actually know Sr, but since we're only interested in the ratios of the color sensitivities, we can arbitrarily set Sr to 1 (likewise with Sg and Sb). Of course, this is where my image brightness issue comes from: each estimated column is off by the card's unknown brightness under that LED, so the corresponding separated frame comes out scaled by the reciprocal of that factor. It doesn't seem like a huge problem, though. With the three images, we can estimate the three columns of M, and then invert the matrix for the correction algorithm. If the matrix ends up singular, you've got some bad data or a really terrible camera...
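
The calibration steps can be sketched in NumPy as follows. Both helper names are hypothetical. Averaging the selected pixels and treating the 100 to 254 bounds as inclusive are assumptions. The condition-number threshold that flags a near-singular M is also arbitrary.

```python
import numpy as np

def estimate_column(card, lo=100, hi=254):
    """Estimate one column of M from a white-card shot lit by a single LED.

    card: (H, W, 3) array of linear 8-bit values.
    Keeps pixels whose largest component lies in [lo, hi] (bounds assumed
    inclusive), then averages them. With S set to 1, each kept pixel is
    itself an estimate of the column; averaging is an assumption.
    """
    pixels = np.asarray(card, dtype=np.float64).reshape(-1, 3)
    peak = pixels.max(axis=1)
    kept = pixels[(peak >= lo) & (peak <= hi)]
    if kept.shape[0] == 0:
        raise ValueError("no pixels fall inside the calibration range")
    return kept.mean(axis=0)

def estimate_M(red_card, green_card, blue_card, max_cond=1e6):
    """Stack the three column estimates into M and sanity-check it."""
    M = np.column_stack([estimate_column(c) for c in (red_card, green_card, blue_card)])
    # A huge condition number means M is (nearly) singular: bad data or a
    # really terrible camera. The threshold is an arbitrary assumption.
    if np.linalg.cond(M) > max_cond:
        raise ValueError("sensitivity matrix is ill-conditioned")
    return M

# Synthetic cards built from a made-up matrix (not measured values).
true_M = np.array([[0.90, 0.05, 0.02],
                   [0.10, 0.80, 0.30],
                   [0.02, 0.15, 0.85]])
cards = []
for k in range(3):
    card = np.zeros((4, 4, 3))          # dark pixels: rejected by the lower bound
    card[:2] = 200.0 * true_M[:, k]     # lit card region under LED k
    card[3, 3] = 255.0                  # clipped pixel: rejected by the upper bound
    cards.append(card)
M_est = estimate_M(*cards)
# M_est equals 200 * true_M: each column carries the unknown card brightness.
```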

As an example, here's the estimated matrix M for the test images in this log:

You can see the cross-sensitivities in the off-diagonal components, especially between the green and blue channels.

Caveats

If you try this algorithm, make sure you have linear RGB pixel values. You probably don't. Trying this algorithm on images in a gamma-corrected color space like sRGB is a waste of time; it just won't work well. If you must, you can apply the inverse gamma transformation to get back to a linear space (at the cost of some precision). A better approach is to capture images in raw mode, if your camera supports it. I captured these images with a Canon EOS 10D (which I've been using since 2003); it has a 12-bit ADC. I used Dave Coffin's outstanding dcraw program to decode the RAW image format into a linear space. Unfortunately, the Python library I'm using (PIL) doesn't support images with 16-bit components, so I'm stuck with 8-bit precision.
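
For 8-bit sRGB images, the inverse transformation mentioned above is the standard piecewise sRGB curve. This minimal sketch assumes the file really is plain sRGB. In-camera JPEGs usually add their own tone curve on top, so the result only approximates the linear values, and 8-bit input still limits precision. `srgb_to_linear` is a hypothetical helper name.

```python
import numpy as np

def srgb_to_linear(values_8bit):
    """Undo the standard sRGB transfer curve (IEC 61966-2-1).

    values_8bit: array-like of sRGB-encoded values in 0..255.
    Returns linear values in 0..1.
    """
    c = np.asarray(values_8bit, dtype=np.float64) / 255.0
    # Linear segment near black, power-law segment elsewhere.
    return np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)

lin = srgb_to_linear(np.array([0, 128, 255]))
# Mid-gray 128 maps to roughly 0.216, not 0.5: sRGB values are far from linear.
```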

I'll probably end up rewriting this code in C++ anyway, at which point I can use my old libraries for 16-bit images. I'm eager to see whether the added precision improves the correction algorithm.

Another trick is to avoid mixing the channels during demosaicing. The pixels inside the camera are typically arranged in a Bayer pattern, which interleaves separate red-, green-, and blue-sensitive pixels. To create an image with all three components at every pixel, software within the camera interpolates the missing values, and in doing so it irreversibly mixes the three frames in my system. Luckily, dcraw has an option to interpolate the color channels independently, which greatly improves the result.
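
A blunt alternative that keeps the channels apart is to skip interpolation altogether and pull each color out of the raw mosaic at half resolution. This is a swapped-in technique, not the dcraw option used here. It assumes an RGGB layout with even image dimensions, so check your camera's actual pattern before relying on it. `split_rggb` is a hypothetical name. Averaging the two green sites only ever combines green with green.

```python
import numpy as np

def split_rggb(mosaic):
    """Split a raw Bayer mosaic into half-resolution R, G, B planes.

    Assumes an RGGB layout: R at (0, 0), G at (0, 1) and (1, 0), B at (1, 1)
    in every 2x2 cell, and even height and width. No value from one color
    is ever used to fill in another, so the channels stay separate.
    """
    m = np.asarray(mosaic, dtype=np.float64)
    r = m[0::2, 0::2]
    g = (m[0::2, 1::2] + m[1::2, 0::2]) / 2.0   # average the two green sites
    b = m[1::2, 1::2]
    return np.stack([r, g, b], axis=-1)

mosaic = np.arange(16).reshape(4, 4)   # stand-in for raw sensor data
planes = split_rggb(mosaic)            # shape (2, 2, 3)
```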

To decode the CRW files from the camera into linear images, I used this command line:

```
dcraw -W -g 1 1 -o 0 -f
```

EDIT 20161111

As I was falling asleep after writing this log, I realized what this algorithm means in terms of color science. I'm not a color scientist, but I've ended up dabbling in the field professionally for a long time. Anyway, multiplying by M^-1 can be seen as transforming the image into a color space where the LEDs are the three primaries. In this space, light from the red LED has the coordinates (1, 0, 0), and so on. This interpretation might provide clues about a more robust way to estimate M.
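
The color-space reading can be checked numerically. Each column of M is the camera's view of one LED on its own, so M^-1 should map the red LED's response to (1, 0, 0), and so on for green and blue. The matrix here is again a made-up example, and `to_led_primaries` is a hypothetical helper.

```python
import numpy as np

# Hypothetical sensitivity matrix (camera channel x LED), not measured data.
M = np.array([[0.90, 0.05, 0.02],
              [0.10, 0.80, 0.30],
              [0.02, 0.15, 0.85]])

def to_led_primaries(rgb, M):
    """Express a linear camera RGB triple in the LED-primary color space."""
    return np.linalg.solve(M, np.asarray(rgb, dtype=np.float64))

red_led = M[:, 0]                        # camera response to the red LED alone
coords = to_led_primaries(red_led, M)    # approximately (1, 0, 0)
# A mix of half-strength red and double-strength blue light:
mix = to_led_primaries(M @ np.array([0.5, 0.0, 2.0]), M)  # approximately (0.5, 0, 2)
```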

You may have noticed that my test scenes are of black-and-white objects. This sidesteps some thorny issues. Colored objects reflect the three LEDs differently, so their appearance changes from frame to frame. Because each LED emits over a band of wavelengths rather than at a single wavelength, colored objects will also degrade the performance of ghost correction. Single-wavelength sources (hmmm, lasers) wouldn't have this issue. Additionally, any fluorescence in the objects (especially an issue for the blue LED) will cause uncorrectable ghosting. Even within these limitations, I think some interesting brief animations can be captured.

Ted Yapo
