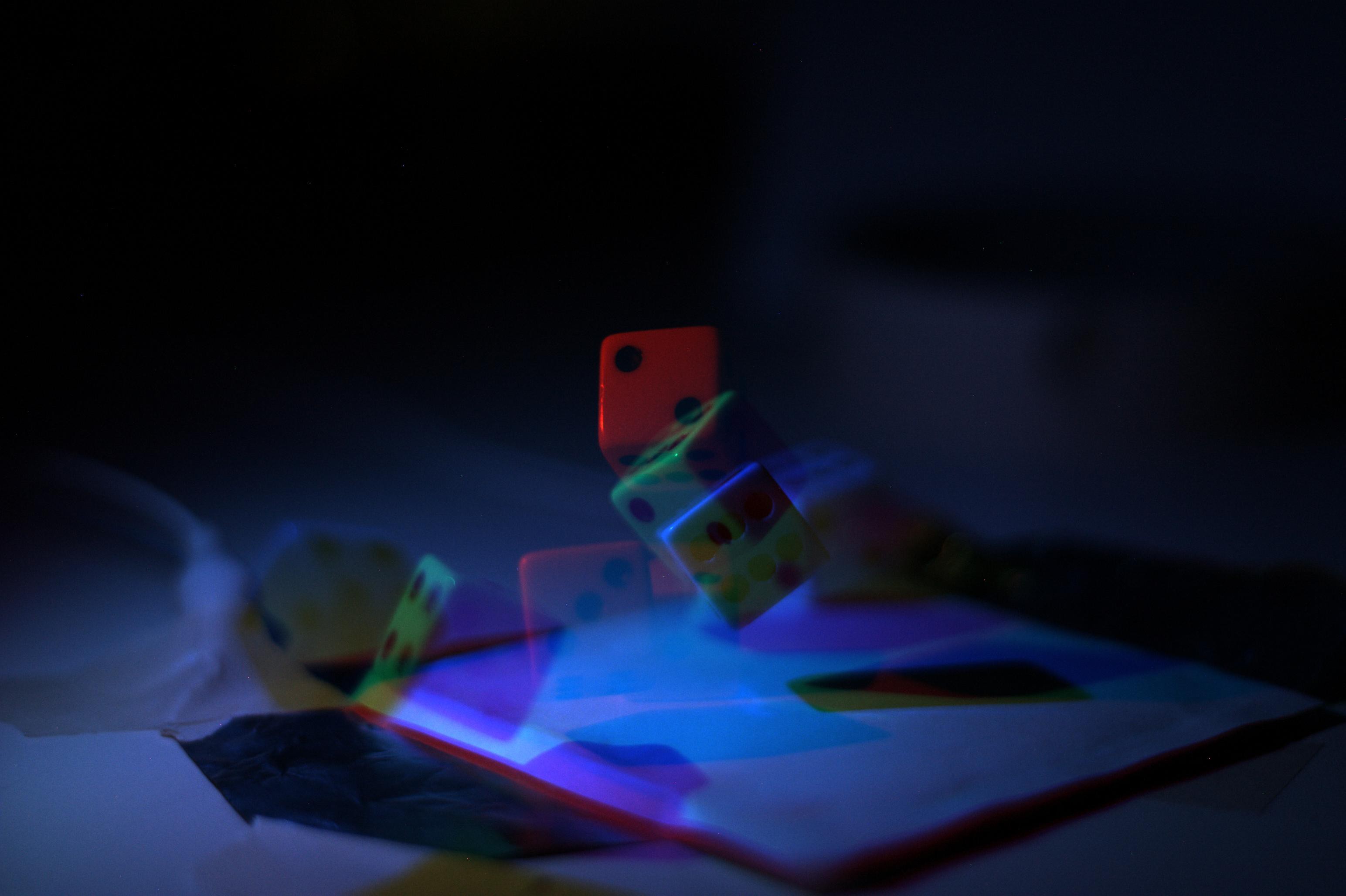

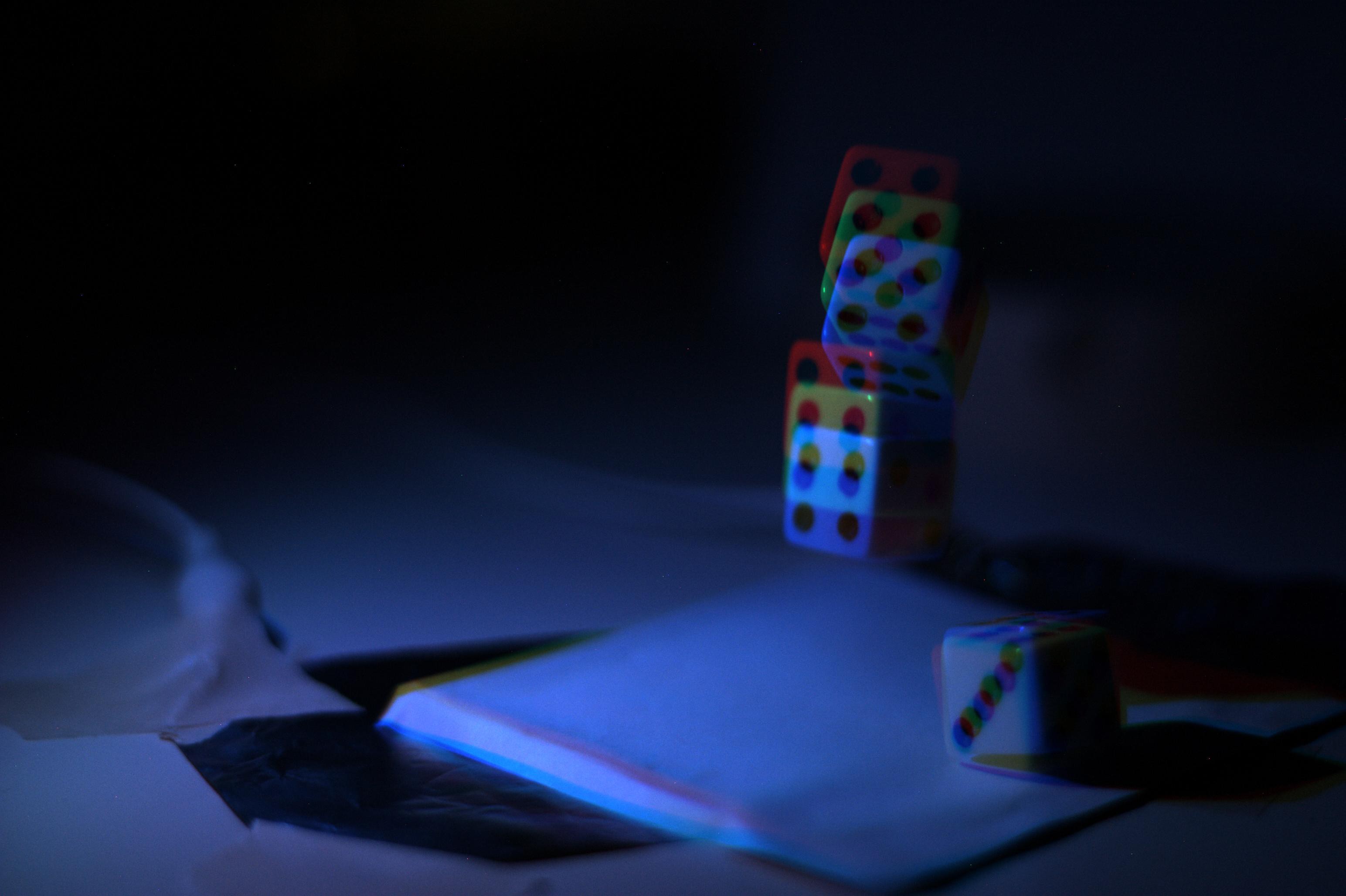

It turns out that you can make some 3-frame animations of rolling dice with just the 3W red, green, and blue LEDs driven with normal BJTs and 1k resistors from an Arduino. I used two pieces of aluminum foil as a trigger: the falling die presses one against the other, closing the circuit. It's a pain in the ass to adjust, but I got it to work OK for some tests.

The flash sequence starts when the die hits the table, so this sequence is of the subsequent bounce. I experimented with 1 ms flashes, then moved to 200 us. From these experiments, I think if I can get 100x more light than I'm getting from these single-chip LEDs (driven below their rated current), I should be able to take some initial bullet photographs. More would be better :-)

What's Wrong?

There are a few issues I have been able to identify so far:

- Some pixels (especially in the blue channel) are saturated at 255. The ghosting-correction algorithm fails for these pixels. I think the only solution is to ensure no pixels in the image are saturated in any channel. The saturated areas in the above image are easy to spot.

- The brightnesses of the three channels don't match. I applied some hard-coded corrections to these animations, but I think I need to write an automatic brightness adjuster to eliminate this annoying effect.

- The ghost-correction calibration has to be done at the same LED current used for the actual flash. I just used my original test calibration for this sequence, and it doesn't work that well. The problem is that the LED colors shift somewhat (blue shift) at different currents. To get the best images, a calibration will need to be done with the same duration and current flashes used for the photograph.
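The three issues above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used for these images: it assumes the ghost correction is a per-pixel 3x3 linear unmixing, that the data is 8-bit, and that matching means over unsaturated pixels is a reasonable brightness adjuster. The `CROSSTALK` values and all function names are made up for the example; a real matrix would have to be measured at the same flash current and duration as the photograph.

```python
import numpy as np

FULL_SCALE = 255  # clipping level for 8-bit data (assumption)

# Hypothetical calibration matrix: column j is the camera's (R, G, B)
# response to LED j alone. These numbers are placeholders, not measurements.
CROSSTALK = np.array([
    [0.90, 0.10, 0.02],
    [0.08, 0.80, 0.15],
    [0.02, 0.10, 0.83],
])

def saturated_mask(rgb):
    """True where any channel of a pixel is clipped at full scale."""
    return (rgb >= FULL_SCALE).any(axis=-1)

def unmix(rgb, crosstalk=CROSSTALK):
    """Recover one frame per LED by inverting the linear crosstalk model."""
    inv = np.linalg.inv(crosstalk)
    # camera = crosstalk @ led, so led = inv @ camera, applied to every pixel
    return rgb.astype(float) @ inv.T

def match_brightness(frames, valid):
    """Scale each frame so its mean over valid pixels equals the common mean."""
    means = frames[valid].mean(axis=0)  # one mean per frame
    gains = means.mean() / means        # assumes no frame is completely dark
    return frames * gains

# Example on a random 8-bit image; clipped pixels are left out of the statistics.
rgb = np.random.default_rng(0).integers(0, 256, size=(8, 8, 3))
mask = saturated_mask(rgb)
frames = match_brightness(unmix(rgb), ~mask)
```

A clipped pixel no longer obeys the linear model, which is why the mask is computed on the camera data before unmixing and why those pixels are excluded when the gains are estimated.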

There are obviously issues to fix, but it seems like this is going to work. If I can get 1 us flashes bright enough, that's the equivalent of 1 million non-overlapping frames per second (for a 3-frame burst).
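The frame-rate figure is just the reciprocal of the flash length, assuming the three flashes fire back to back with no dead time between them. A quick check, with a helper name (`equivalent_fps`) invented for the example:

```python
def equivalent_fps(flash_us):
    """Frame rate implied by back-to-back flashes of the given length (microseconds)."""
    return 1_000_000 / flash_us

# Flash lengths mentioned in this log: 1 ms, 200 us, and the 1 us goal.
for flash_us in (1000, 200, 1):
    print(f"{flash_us} us flashes -> {equivalent_fps(flash_us):,.0f} frames/s")
```

Under the same assumption, the whole 3-frame burst lasts three flash lengths, so 3 us at the 1 us goal.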

Gallery

Here are some other test images, shown along with the original RGB frame from the camera:

Ted Yapo


Discussions


I wish I knew more about the science behind this type of photography, but my coding skills are limited to the "olden" days of assembly language and Visual Basic. Perhaps your algorithm can address the Bayer filter directly, since the ratio is 50% green, 25% red, and 25% blue?


Yep, I'm using software that gives me the raw values off the sensor in the Bayer pattern - it turns out to be important. Some of the de-mosaicing algorithms traditionally used to interpolate pixels from the RGBG pattern do screwy things to the data. I'd much rather handle this myself.
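As a rough illustration of what handling the raw mosaic directly can look like, the sketch below pulls each color site out of the raw array with plain slicing, so no values are interpolated. It assumes a 2x2 tile laid out as R, G on the first row and G, B on the second; the actual layout depends on the sensor, and the function name `split_bayer` is made up for the example.

```python
import numpy as np

def split_bayer(raw):
    """Split a raw Bayer mosaic into four quarter-resolution planes.

    Assumes an RGGB tile (R G / G B); swap the offsets for other layouts.
    The sensor values are returned unchanged, with no interpolation.
    """
    return {
        "R": raw[0::2, 0::2],
        "G1": raw[0::2, 1::2],
        "G2": raw[1::2, 0::2],
        "B": raw[1::2, 1::2],
    }

# A tiny 4x4 mosaic just to show the indexing.
raw = np.arange(16).reshape(4, 4)
planes = split_bayer(raw)
```

Keeping the two green sites separate avoids deciding how to combine them, at the cost of carrying four planes instead of three.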


Hey Ted, very cool! Now I understand what you are trying to do here. You're right about the blue spectrum causing saturation problems; remember that these are much shorter wavelengths and are separated by sub-spectral lines: violet, indigo, and blue. Using an amber filter (for reducing blue haze) will tame the blue spectral features as far as the saturation is concerned.

Now, the LEDs are a separate problem. They are not perfect, and no two will ever respond the same due to manufacturing defects, so I would suggest a linear array of some kind so the chopping can be precisely calculated, then implemented.


Yes, using colored filters to clean up the LED spectra is an interesting idea - it would eliminate some ghosts due to off-white objects. Even better would be some filters to clean up the camera response, but that's a much harder problem because the Bayer mask is on the sensor. I'd need some 3-peaked interference filters, which would have to be custom made.

And arrays of LEDs will pose their own problems, due to manufacturing spread as you mention. Overall, though, if the light from different red lights (for example) is well-mixed over the scene, the de-ghosting algorithm should handle it OK. The problems come if "red" light in one part of the scene is different from "red" light in another.

It'll never be perfect, and there will be some visible ghosts, but short of some very, very expensive equipment, I don't think there are many other ways to capture images (even if only a few) this rapidly.


Nice !


can see the saturation, as you pointed out... but perty durn slick nonetheless.
