Last year, I made a computer head helmet. Rather than building an entirely new costume this year, I am instead improving this preexisting costume. Before I get ahead of myself, I should start by documenting this preexisting design a bit.

Physical Construction

The frame of the costume is an old CRT display that has been gutted and cleaned. From there, I made three key modifications. First, I sawed a hole in the bottom of the CRT case and lined its edge with pipe insulation for comfort. The second modification is a hard hat, which allows the costume to be worn as a helmet. I was lazy, so the hard hat was literally epoxied to the CRT case. Not clean, but it works.

The third modification is a screen. For this, an acrylic one-way mirror was cut to size and glued into place.

Electrical Construction

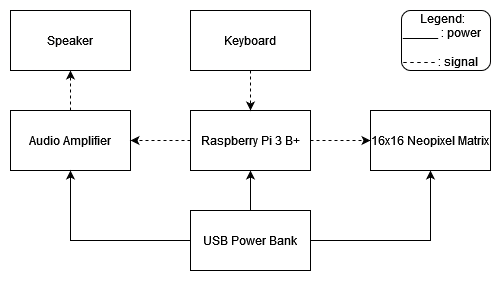

The electronics for this project were fairly simple, as shown below:

The Raspberry Pi had two functions. First, it controlled the Neopixel matrix to display an animated eye in green. Second, it spoke. Phrases were typed on the keyboard, and Espeak was used to say them aloud through the audio amplifier and speaker.
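Driving a matrix from the Pi means turning each 2D frame into a flat list of pixel colors along the Neopixel strip. The sketch below shows one way to do that. It is not the code from the repo, and it assumes things the post doesn't state: the matrix is wired in a serpentine (zigzag) order starting at the top-left, and each frame is a list of rows where 1 means "on".

```python
# Hypothetical sketch: map a 2D on/off eye frame onto a Neopixel strip.
# Assumptions (not from the original project): serpentine wiring starting
# at the top-left, and frames stored as lists of rows of 0/1 values.

from typing import List, Tuple

Color = Tuple[int, int, int]

GREEN: Color = (0, 255, 0)  # eye color as (R, G, B)
OFF: Color = (0, 0, 0)


def xy_to_index(x: int, y: int, width: int) -> int:
    """Return the strip index of pixel (x, y) on a serpentine-wired matrix."""
    if y % 2 == 0:
        # Even rows run left to right.
        return y * width + x
    # Odd rows run right to left.
    return y * width + (width - 1 - x)


def frame_to_strip(frame: List[List[int]], color: Color = GREEN) -> List[Color]:
    """Convert a 2D on/off frame into a flat list of strip colors."""
    height = len(frame)
    width = len(frame[0])
    strip = [OFF] * (width * height)
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            strip[xy_to_index(x, y, width)] = color if value else OFF
    return strip


if __name__ == "__main__":
    tiny_frame = [
        [1, 0, 0],
        [0, 1, 1],
    ]
    print(frame_to_strip(tiny_frame))
    # → [(0, 255, 0), (0, 0, 0), (0, 0, 0), (0, 255, 0), (0, 255, 0), (0, 0, 0)]
```

On real hardware, each color in the resulting list would then be written to the strip with whatever Neopixel library the Pi uses. That step is left out here so the mapping logic can be checked without the hardware.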

Software

The software was similarly simple. The code is in the first commit to main in my git repo (https://github.com/cogFrog/computerHead), but I will briefly explain it here. Two separate scripts run at the same time. The first script, testNeopixel.py, drives the Neopixel matrix. It uses four .gifs as four frames of animation (eye looking far left, eye looking left, eye looking left while blinking, and eye closed looking left). Flipping these four frames gives a total of eight frames of animation. A state machine that randomly moves from each state to one of its connected states brings it all to life.
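The frame flipping and random state machine can be sketched in a few lines. This is an illustration, not the contents of testNeopixel.py. The state names and the specific transitions (for example, that "left" can jump straight to "right") are hypothetical choices, since the post doesn't list which states connect.

```python
import random
from typing import Dict, List, Optional

# Hypothetical transition graph for the four base (left-looking) frames.
# Which frames connect to which is an assumption for illustration.
BASE_TRANSITIONS: Dict[str, List[str]] = {
    "far_left": ["left"],
    "left": ["far_left", "blink_left", "right"],
    "blink_left": ["closed_left"],
    "closed_left": ["left"],
}


def flip_frame(frame: List[List[int]]) -> List[List[int]]:
    """Mirror a frame horizontally to turn a left-looking eye into a right-looking one."""
    return [list(reversed(row)) for row in frame]


def mirror_name(name: str) -> str:
    """Swap 'left' for 'right' (and vice versa) in a state name."""
    if "left" in name:
        return name.replace("left", "right")
    return name.replace("right", "left")


def build_transitions(base: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Add mirrored copies of every state, giving eight states in total."""
    transitions = dict(base)
    for state, targets in base.items():
        transitions[mirror_name(state)] = [mirror_name(t) for t in targets]
    return transitions


def run_animation(
    transitions: Dict[str, List[str]],
    start: str,
    steps: int,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Walk the state machine, picking a random connected state at each step."""
    rng = rng or random.Random()
    state = start
    sequence = [state]
    for _ in range(steps):
        state = rng.choice(transitions[state])
        sequence.append(state)
    return sequence


if __name__ == "__main__":
    states = build_transitions(BASE_TRANSITIONS)
    print(run_animation(states, "left", 10, random.Random(42)))
```

In a real display loop, each state would be shown on the matrix and held for a short (possibly random) delay before the next transition. Here the sequence is just returned so the logic can be checked without hardware.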

The other script, testSpeech.py, simply has Espeak say whatever the user types into the terminal. Unfortunately, there is no screen that lets the user see what they are typing, so I have to type carefully and hope I don't make any mistakes.
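A minimal version of the speech loop might look like the sketch below. It assumes the `espeak` command-line tool is installed and accepts the text to speak as an argument. It is not the repo's testSpeech.py. Blank lines are skipped, which is a small guard (not in the original) against an accidental Enter press when typing blind.

```python
import subprocess
from typing import Callable, Iterable, Iterator


def espeak_say(phrase: str) -> None:
    """Speak a phrase by calling the espeak command-line tool (assumed installed)."""
    subprocess.run(["espeak", phrase], check=False)


def speech_loop(lines: Iterable[str], say: Callable[[str], None] = espeak_say) -> int:
    """Speak each non-empty line and return how many phrases were spoken."""
    spoken = 0
    for line in lines:
        phrase = line.strip()
        if not phrase:
            continue  # stay silent on blank lines
        say(phrase)
        spoken += 1
    return spoken


def terminal_lines() -> Iterator[str]:
    """Yield lines typed at the terminal until Ctrl-D or Ctrl-C."""
    while True:
        try:
            yield input()  # no prompt, since there is no screen to show it
        except (EOFError, KeyboardInterrupt):
            return


if __name__ == "__main__":
    speech_loop(terminal_lines())
```

The `say` parameter keeps the speaking step swappable, so the loop can be tested by collecting phrases in a list instead of playing audio.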

Conclusion

That's it for now while I work on getting Mozilla's DeepSpeech working on my newly acquired Raspberry Pi 4. With any luck, updates will come soon and I can get this done before Halloween hits!
