Use case

Let's see how the device works and how the different actors interact with it.

The first step is to enter the data into the system. We have developed an Alexa Skill that launches a Python function, which stores the data (medicine name and frequency) in a database. This way the caregiver can talk to Alexa and update the information about the patient; a later section gives more details. A more professional way is to connect the health IT system to ours through HL7, a standard for communication between health systems. We can import this information as HL7 messages: OMP^O09 (Treatment Order), SIU^S12 for the doctor appointment, and ORU for the medical test result. With Mirth Connect (an open-source program that can manage these messages), we can configure a channel that stores the name of the medicine and the frequency of taking it in the database. But we prefer the maker way.
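To make the HL7 route more concrete, here is a minimal Python sketch of how the medicine name and frequency could be pulled out of an OMP^O09 message. It is not the Mirth Connect channel itself. The sample message, the sending and receiving application names, and the choice of reading the name from RXO-1 and the frequency from TQ1-3 are assumptions made for illustration; a real hospital feed may place the data elsewhere.

```python
def parse_hl7(message):
    """Split an HL7 v2 message into {segment_id: [field lists]}."""
    segments = {}
    # HL7 v2 separates segments with carriage returns; accept newlines too.
    for line in message.replace("\n", "\r").split("\r"):
        line = line.strip()
        if not line:
            continue
        fields = line.split("|")
        segments.setdefault(fields[0], []).append(fields)
    return segments


def extract_order(message):
    """Return message type, medicine name and frequency from a treatment order."""
    segments = parse_hl7(message)
    msh = segments["MSH"][0]
    # After splitting on "|", MSH-9 (message type) sits at index 8,
    # because MSH-1 is the field separator itself.
    message_type = msh[8]
    # RXO-1 is a coded element: identifier^text^coding system.
    code = segments["RXO"][0][1].split("^")
    medicine = code[1] if len(code) > 1 else code[0]
    # TQ1-3 carries the repeat pattern, for example Q8H (every 8 hours).
    frequency = segments["TQ1"][0][3].split("^")[0]
    return {"type": message_type, "medicine": medicine, "frequency": frequency}


# Hypothetical sample message, written only for this example.
SAMPLE = "\r".join([
    "MSH|^~\\&|HIS|HOSPITAL|CURAMSENES|HOME|202101010800||OMP^O09|MSG0001|P|2.5",
    "PID|1||12345||DOE^JOHN",
    "ORC|NW|ORD001",
    "TQ1|1||Q8H",
    "RXO|IBU600^Ibuprofen 600 mg^LOCAL",
])

order = extract_order(SAMPLE)
print(order)
```

The resulting dictionary holds the two values the text says are stored in the database: the medicine name and how often to take it.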

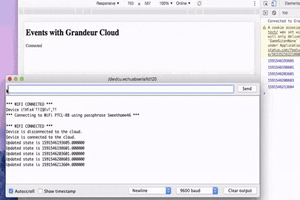

We manage the events with AWS EventBridge, which fires some Lambda functions, csDispensation01 and csDispensation002. Each one looks in the database, generates a JSON message for each item pending in that hour, and sends it to the AWS IoT MQTT queues we have set up. The MQTT topic comes from the patient table in the database.
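The core of such a dispensation function can be sketched as follows. This is not the code of csDispensation01; the row layout, the field names, and the topic string are hypothetical. The publish step is passed in as a callable so the sketch runs without AWS; inside a Lambda it could wrap the `publish` call of the boto3 `iot-data` client.

```python
import json


def build_messages(pending_rows, hour):
    """Build one (topic, payload) pair per dose scheduled for the given hour."""
    messages = []
    for row in pending_rows:
        if row["hour"] != hour:
            continue
        payload = json.dumps({"medicine": row["medicine"], "hour": row["hour"]})
        messages.append((row["topic"], payload))
    return messages


def dispatch(pending_rows, hour, publish):
    """Send every pending message through the supplied publish(topic, payload)."""
    messages = build_messages(pending_rows, hour)
    for topic, payload in messages:
        publish(topic, payload)
    return len(messages)


# Hypothetical rows, as they might come from joining the medication
# and patient tables (the topic is read from the patient record).
rows = [
    {"medicine": "Ibuprofen", "hour": 8, "topic": "curamsenes/patient1"},
    {"medicine": "Omeprazole", "hour": 8, "topic": "curamsenes/patient1"},
    {"medicine": "Paracetamol", "hour": 20, "topic": "curamsenes/patient1"},
]

sent = []  # stands in for the MQTT broker in this example
count = dispatch(rows, 8, lambda topic, payload: sent.append((topic, payload)))
print(count, sent)
```

Keeping the message building separate from the publishing makes the hourly selection easy to test without a device or a broker.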

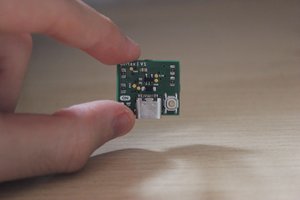

The AWS IoT EduKit reads these MQTT messages. I started from the Cloud Connected Blinky sample, added a few more tasks, and stored some messages on an SD card so I can play them when I need to interact with people. We use blinking LEDs to draw the attention of the person who has to take the medication. The color changes when a period of time passes without interaction from the patient. The name of the medicine is written on the screen; we can show ten different medicines. When the patient takes the medicines, he touches the screen, and the system updates the state of the medicine and clears the screen.
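The reminder behavior described above can be modeled in a few lines of Python. This is only a model of the logic, not the device firmware. The colors and the time thresholds are assumptions, since the text does not give them; only the limit of ten medicines comes from the description.

```python
MAX_MEDICINES = 10  # the screen shows at most ten medicines (from the text)

# Hypothetical thresholds (seconds) and colors; the real values are not given.
ESCALATION = [(0, "green"), (300, "yellow"), (900, "red")]


def led_color(seconds_waiting):
    """Pick the LED color for the time elapsed without patient interaction."""
    color = ESCALATION[0][1]
    for threshold, name in ESCALATION:
        if seconds_waiting >= threshold:
            color = name
    return color


class Reminder:
    """Tracks the medicines on screen and when the current reminder started."""

    def __init__(self):
        self.medicines = []
        self.started_at = None

    def on_message(self, medicine, now):
        """Handle an incoming reminder; reject it if the screen is full."""
        if len(self.medicines) >= MAX_MEDICINES:
            return False
        if not self.medicines:
            self.started_at = now
        self.medicines.append(medicine)
        return True

    def color(self, now):
        """Return the LED color, or None when nothing is pending."""
        if not self.medicines:
            return None
        return led_color(now - self.started_at)

    def on_touch(self):
        """Patient confirms: return the medicines taken and clear the screen."""
        taken, self.medicines, self.started_at = self.medicines, [], None
        return taken


reminder = Reminder()
reminder.on_message("Ibuprofen", now=0)
print(reminder.color(now=10), reminder.color(now=400), reminder.color(now=1000))
print(reminder.on_touch(), reminder.color(now=1000))
```

The list returned by `on_touch` is what the system would use to update the state of each medicine after the patient confirms.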

Another daily event (AWS EventBridge) fires another Lambda function, csNotification02, which looks in the database for medicines not taken in the last 24 hours and sends a message to the medical caregiver's phone using AWS SNS.
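A sketch of that daily check, under assumptions: the dose records are plain dictionaries with a hypothetical `taken` flag (the real table uses a status field), and the patient name is invented. Sending the text is left out; in a Lambda the resulting message could be handed to the `publish` call of the boto3 SNS client.

```python
from datetime import datetime, timedelta


def missed_doses(doses, now, window_hours=24):
    """Return doses sent within the window that are not marked as taken."""
    cutoff = now - timedelta(hours=window_hours)
    return [d for d in doses if cutoff <= d["sent_at"] <= now and not d["taken"]]


def build_alert(patient_name, missed):
    """Compose the caregiver message, or None if nothing was missed."""
    if not missed:
        return None
    names = ", ".join(sorted({d["medicine"] for d in missed}))
    return f"{patient_name} has not taken: {names}"


# Hypothetical dose records for one patient.
now = datetime(2021, 1, 2, 9, 0)
doses = [
    {"medicine": "Ibuprofen", "sent_at": datetime(2021, 1, 1, 20, 0), "taken": False},
    {"medicine": "Omeprazole", "sent_at": datetime(2021, 1, 2, 8, 0), "taken": True},
    {"medicine": "Paracetamol", "sent_at": datetime(2020, 12, 30, 8, 0), "taken": False},
]

alert = build_alert("Patient 1", missed_doses(doses, now))
print(alert)
```

Doses older than the window are ignored here, so the caregiver is only warned about the last day.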

Prescription Medicine, Alexa Skill

We store the medicines that CuramSenes will remind the patient to take in a MariaDB table. As mentioned before, the professional way (for several patients, connected directly to the hospital) is to use HL7. But for now, I have developed a more maker way: an Alexa Skill.

We started from the Hello World sample in Python. Some parts of the code are the original. I have changed the name and the invocation phrase to "new medicine". For now it is OK, but it is not a self-explanatory name.

I have used two new slot types. For the time, I use the Amazon built-in implementation. For the medicine, I have created a new one with the names of the medicines I use in the sample. But we can populate this list with a Vademecum-like list.

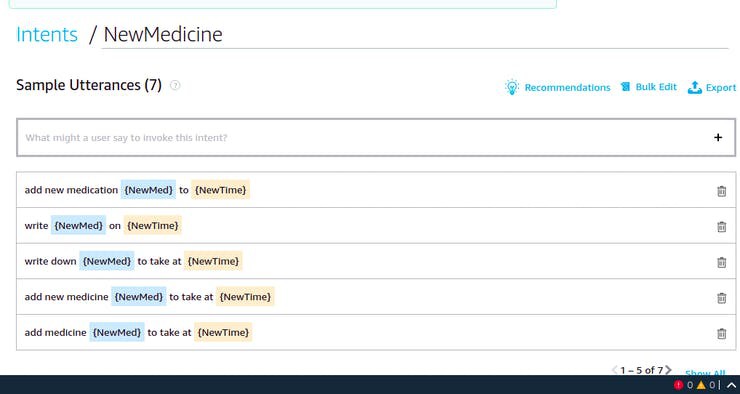

I have also added a new intent, NewMedicine, with the phrases that can invoke it.

When somebody says one of these phrases, it launches a Python function that connects to the database and stores the information there. The code is in GitHub:
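As an illustration of that step (not the code in the repository), the sketch below reads the slot values from an Alexa IntentRequest and stores them. The slot names `medicine` and `time`, the table name `medication`, and its columns are assumptions. An in-memory SQLite database stands in for MariaDB so the example runs anywhere.

```python
import sqlite3


def slots_from_request(event):
    """Extract the intent name and slot values from an Alexa request."""
    request = event["request"]
    if request["type"] != "IntentRequest":
        return None, {}
    intent = request["intent"]
    slots = {name: slot.get("value") for name, slot in intent.get("slots", {}).items()}
    return intent["name"], slots


def store_medicine(conn, event):
    """Insert a row when the NewMedicine intent arrives; return True if stored."""
    name, slots = slots_from_request(event)
    if name != "NewMedicine" or not slots.get("medicine") or not slots.get("time"):
        return False
    conn.execute(
        "INSERT INTO medication (medicine, take_at) VALUES (?, ?)",
        (slots["medicine"], slots["time"]),
    )
    conn.commit()
    return True


# In-memory SQLite as a stand-in for the MariaDB table (hypothetical columns).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE medication (medicine TEXT, take_at TEXT)")

# Hypothetical request, trimmed to the parts the function reads.
event = {
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "NewMedicine",
            "slots": {
                "medicine": {"name": "medicine", "value": "ibuprofen"},
                "time": {"name": "time", "value": "08:00"},
            },
        },
    }
}

stored = store_medicine(conn, event)
print(stored, conn.execute("SELECT medicine, take_at FROM medication").fetchall())
```

Using query parameters instead of string formatting keeps spoken input from being interpreted as SQL.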

This is a sample interaction:

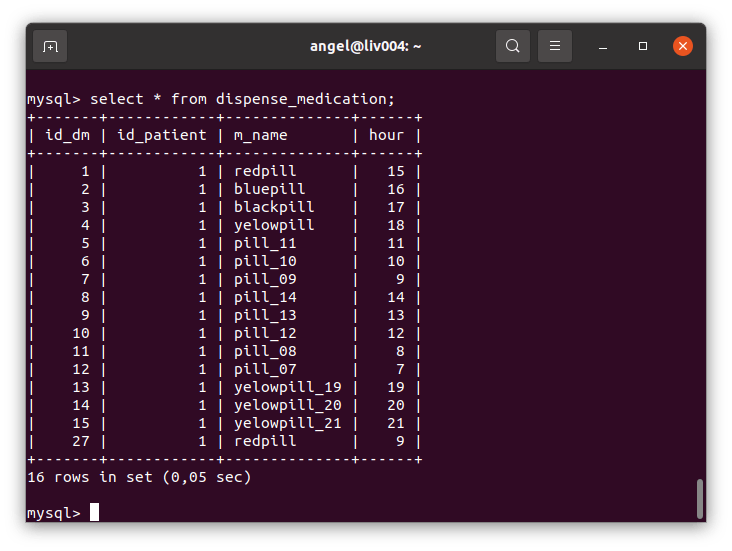

And the table has one more element...

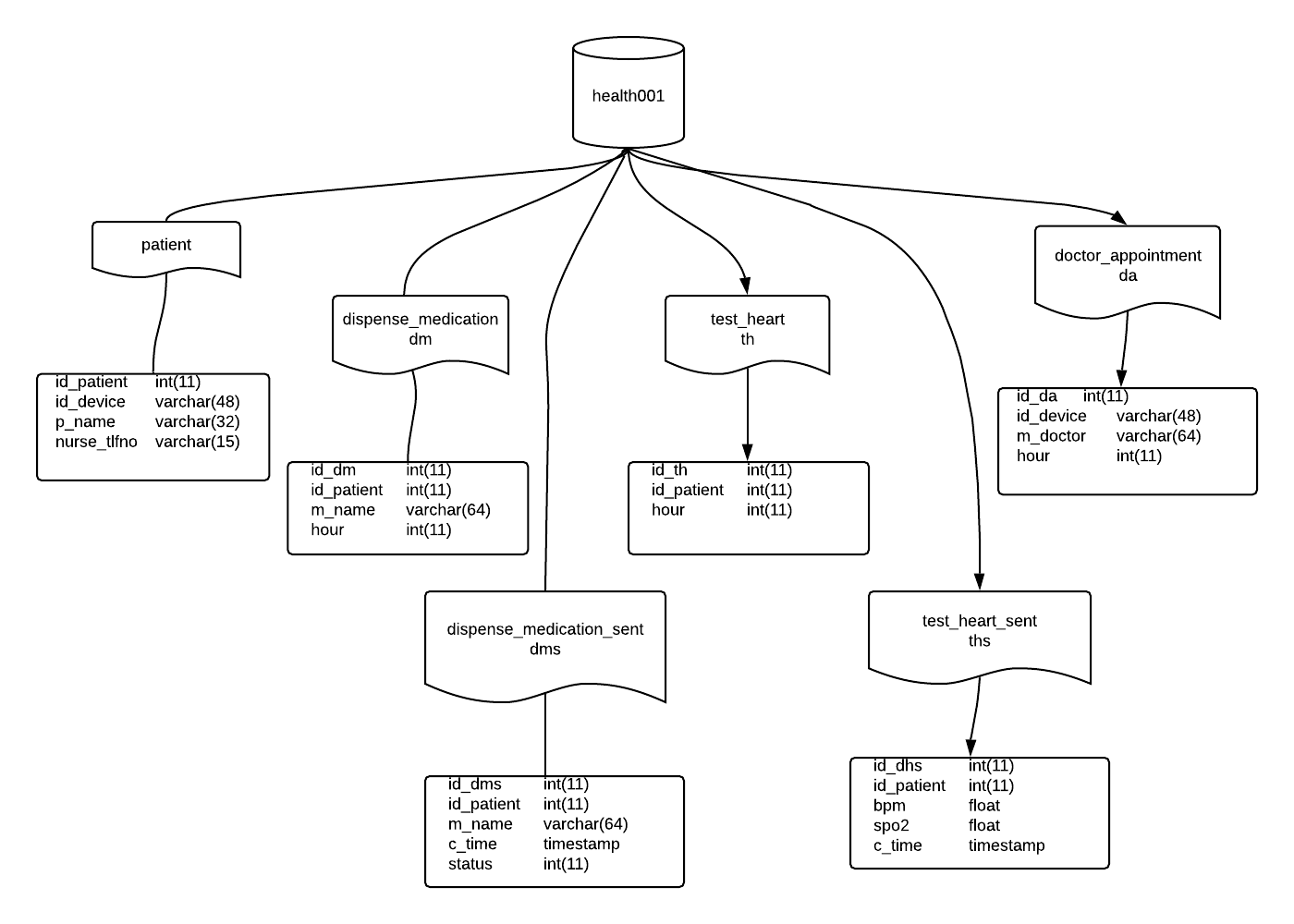

Data model

It is a very simple data model; we will complete it when we add improvements.

There are six tables:

- patient, which has the data for each patient; in this sample, we have only one.

- dispensa_medication, where we store the medicines and the time at which our patient has to take them.

- dispense_medication_sent, where we add a record for each medicine dose, with its status.

- test_heart, which stores the time at which we ask the patient to check his heart rate and O2 saturation.

- test_heart_sent, which stores the measurements of the check.

- doctor appointment, where we store the appointment with the doctor, so we can send a warning.
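To show how these tables might relate, here is a rough schema sketch. Only the table names come from the list above (with `doctor_appointment` written with an underscore); every column and foreign key is an assumption, and SQLite is used in place of MariaDB so the sketch can be run directly.

```python
import sqlite3

# Hypothetical columns; only the table names come from the project description.
SCHEMA = """
CREATE TABLE patient (
    id INTEGER PRIMARY KEY, name TEXT, mqtt_topic TEXT
);
CREATE TABLE dispensa_medication (
    id INTEGER PRIMARY KEY,
    patient_id INTEGER REFERENCES patient(id),
    medicine TEXT,
    take_at TEXT
);
CREATE TABLE dispense_medication_sent (
    id INTEGER PRIMARY KEY,
    medication_id INTEGER REFERENCES dispensa_medication(id),
    sent_at TEXT,
    status TEXT
);
CREATE TABLE test_heart (
    id INTEGER PRIMARY KEY,
    patient_id INTEGER REFERENCES patient(id),
    ask_at TEXT
);
CREATE TABLE test_heart_sent (
    id INTEGER PRIMARY KEY,
    test_id INTEGER REFERENCES test_heart(id),
    heart_rate INTEGER,
    spo2 INTEGER
);
CREATE TABLE doctor_appointment (
    id INTEGER PRIMARY KEY,
    patient_id INTEGER REFERENCES patient(id),
    appointment_at TEXT
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
tables = sorted(
    row[0] for row in conn.execute("SELECT name FROM sqlite_master WHERE type='table'")
)
print(tables)
```

The pattern is the same for medicines and heart checks: one table holds the schedule and a second "sent" table holds what happened for each occurrence.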

One relevant detail is the status of the medicine in the dms table. The statuses are:...

Angel Cabello
