I've managed to pull down data samples, label and sort them, and upload them to an S3 bucket. With the data available, the next step is to generate the mel-spectrograms. I'll be using librosa to create each spectrogram and save it to a file, which ends up in a bucket for training.

To do this I've created a processing job that goes through each sample and generates the spectrogram:

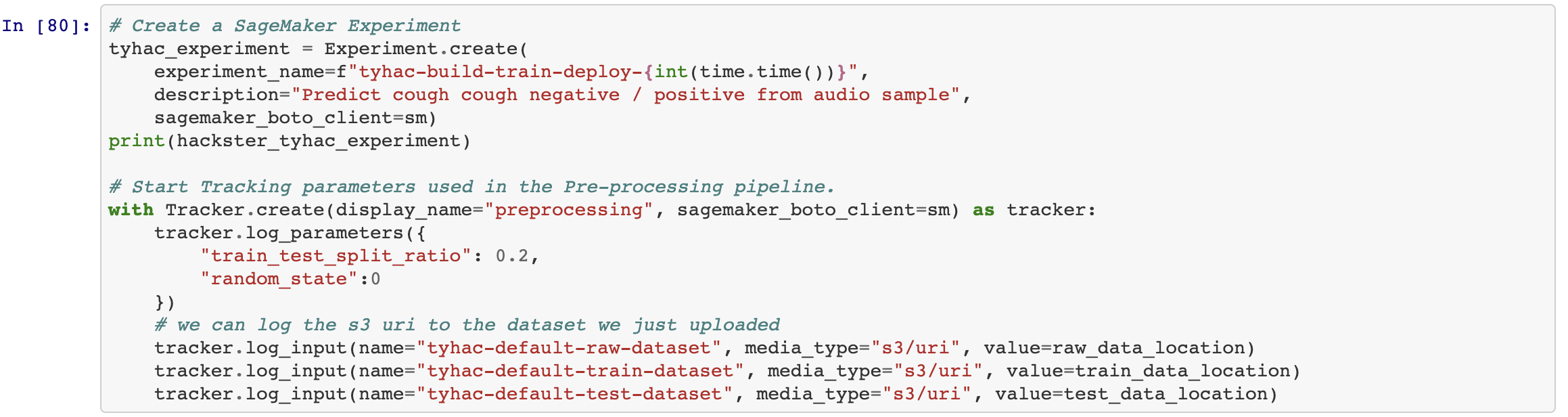
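For readers who can't see the screenshot, here is a minimal sketch of what the per-sample step could look like. It is not the exact job code: the `spectrogram_key` and `wav_to_mel_db` helpers, the folder-per-label key layout, the `.npy` output format and the `sr`/`n_mels` defaults are all assumptions made for illustration. The librosa calls themselves (`librosa.load`, `librosa.feature.melspectrogram`, `librosa.power_to_db`) are the standard ones.

```python
from pathlib import PurePosixPath


def spectrogram_key(sample_key, out_prefix="spectrograms"):
    """Map an audio object key to the key of its spectrogram file.

    Assumes a hypothetical layout of <prefix>/<label>/<name>.wav, so the
    label folder is carried over to the output key.
    """
    path = PurePosixPath(sample_key)
    return str(PurePosixPath(out_prefix) / path.parent.name / (path.stem + ".npy"))


def wav_to_mel_db(path, sr=22050, n_mels=128):
    """Load one audio file and return its mel-spectrogram in decibels."""
    # Imported here so the key helper above works without librosa installed.
    import librosa
    import numpy as np

    # Resample to a fixed rate so every spectrogram has the same frequency axis.
    y, sr = librosa.load(path, sr=sr)
    mel = librosa.feature.melspectrogram(y=y, sr=sr, n_mels=n_mels)
    # Convert power to a log (dB) scale, relative to the loudest bin.
    return librosa.power_to_db(mel, ref=np.max)
```

The resulting array could then be written with `numpy.save` and uploaded under the key returned by `spectrogram_key`.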

We can also see here that the data is split into training and validation sets. From my reading, it's not a good idea to run training and validation on the same data set, because the validation scores then only show how well the model has memorised that data. With the split, validation is only run on "unseen" data, which should give a more honest picture of the results.
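A split like this can be sketched in a few lines. The version below is an illustration only: the `split_train_validation` name, the 80/20 ratio, the fixed seed and the choice to split each label separately (so both sets keep the same class balance) are assumptions, not necessarily what the processing job does.

```python
import random


def split_train_validation(samples, validation_fraction=0.2, seed=42):
    """Split (key, label) pairs into train and validation lists.

    Each label is shuffled and split on its own, so the validation set
    keeps roughly the same class balance as the training set.
    """
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    by_label = {}
    for key, label in samples:
        by_label.setdefault(label, []).append((key, label))

    train, validation = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Hold out at least one sample per label for validation.
        n_val = max(1, round(len(items) * validation_fraction))
        validation.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, validation
```

The important property is that no sample appears in both lists, so the validation metrics are always computed on data the model has not been fitted to.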

Training

I'm using TensorFlow for the training. A separate training job is submitted to SageMaker and the results are saved to S3. The initial training results were pretty bad, and I was unable to really do anything with the model. I tried various hyperparameters, many data preprocessing tweaks and verification steps, all without any luck.

Pre train loss: 0.6990411281585693 Acc: 0.5088862180709839

Epoch 1/200

93/93 - 79s - loss: 0.6429 - auc: 0.5088 - val_loss: 0.5533 - val_auc: 0.4560

Epoch 2/200

93/93 - 78s - loss: 0.5998 - auc: 0.4971 - val_loss: 0.6015 - val_auc: 0.5000

Epoch 3/200

93/93 - 77s - loss: 0.6017 - auc: 0.4744 - val_loss: 0.5525 - val_auc: 0.4427

Epoch 4/200

93/93 - 76s - loss: 0.5788 - auc: 0.5350 - val_loss: 0.5710 - val_auc: 0.4553

Epoch 5/200

93/93 - 77s - loss: 0.5807 - auc: 0.5307 - val_loss: 0.5465 - val_auc: 0.4724

Epoch 6/200

93/93 - 77s - loss: 0.5366 - auc: 0.5319 - val_loss: 0.5375 - val_auc: 0.4668

Epoch 7/200

93/93 - 77s - loss: 0.5437 - auc: 0.5724 - val_loss: 0.5726 - val_auc: 0.5067

Epoch 8/200

93/93 - 76s - loss: 1.2623 - auc: 0.5143 - val_loss: 0.6618 - val_auc: 0.5000

Epoch 9/200

93/93 - 77s - loss: 0.6556 - auc: 0.5066 - val_loss: 0.6495 - val_auc: 0.5000

Epoch 10/200

93/93 - 76s - loss: 0.6440 - auc: 0.5041 - val_loss: 0.6380 - val_auc: 0.5000

Epoch 11/200

93/93 - 76s - loss: 0.6334 - auc: 0.5327 - val_loss: 0.6285 - val_auc: 0.5000

Epoch 12/200

93/93 - 77s - loss: 0.6243 - auc: 0.4078 - val_loss: 0.6197 - val_auc: 0.5000

Epoch 13/200

93/93 - 76s - loss: 0.6156 - auc: 0.5306 - val_loss: 0.6113 - val_auc: 0.5000

Epoch 14/200

93/93 - 77s - loss: 0.6081 - auc: 0.4703 - val_loss: 0.6042 - val_auc: 0.5000

Epoch 15/200

93/93 - 77s - loss: 0.6011 - auc: 0.4222 - val_loss: 0.5975 - val_auc: 0.5000

Epoch 16/200

93/93 - 77s - loss: 0.5948 - auc: 0.4536 - val_loss: 0.5911 - val_auc: 0.5000

24/24 - 15s - loss: 0.5339 - auc: 0.4871

Post train loss: 0.5339470505714417 Acc: 0.48713698983192444

To make matters worse, I continued to research how to test models and what metrics to use to make sure I didn't rely on false numbers. The models I trained would consistently achieve a ROC AUC of 0.5, which is no better than random guessing.
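To make the 0.5 figure concrete: ROC AUC can be read as the probability that a randomly chosen positive sample gets a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition in plain Python. It is for illustration only (the `roc_auc` helper is not part of the training code, and Keras approximates AUC with a fixed set of thresholds instead), but it shows why a model that gives every sample the same score lands on exactly 0.5.

```python
def roc_auc(labels, scores):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: iterable of 0/1 ground-truth values.
    scores: iterable of model scores, higher meaning "more positive".
    Ties count as half a correct pair.
    """
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")

    correct = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(positives) * len(negatives))


# A perfect ranking, and a model that outputs the same score for everything.
perfect = roc_auc([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])   # 1.0
constant = roc_auc([0, 1, 0, 1], [0.5, 0.5, 0.5, 0.5])  # 0.5
```

A `val_auc` stuck at exactly 0.5000, as in the log above from epoch 8 onwards, is consistent with the model having collapsed to a near-constant output, although the log alone doesn't prove that this is what happened.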

Deploying an endpoint

Because I was using TensorFlow, deploying the endpoint was easy. The endpoint is used for inference. We can see from the training above that the model was no good; this is the output of a custom script I wrote just to test known labels using the endpoint:

Testing endpoint ...

begin positive sample run

negative: 1.25%

negative: 0.16%

positive: 99.82%

negative: 7.50%

negative: 0.40%

begin negative sample run

negative: 1.28%

negative: 0.19%

negative: 40.46%

negative: 29.63%

negative: 0.51%
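A test run like the one above can be sketched as follows. This is a hypothetical harness, not the actual script: the `run_labelled_samples` name, the 0.5 threshold and the idea of passing in a `predict` callable (standing in for whatever code calls the endpoint and returns the positive-class probability) are all assumptions.

```python
def run_labelled_samples(predict, samples, threshold=0.5):
    """Score samples with known labels and report how many were classified correctly.

    predict: callable taking one sample and returning the probability of
             the positive class (a stand-in for the real endpoint call).
    samples: list of (sample, expected_label) pairs, where expected_label
             is "positive" or "negative".
    Returns (report_lines, accuracy).
    """
    lines = []
    correct = 0
    for sample, expected in samples:
        probability = predict(sample)
        predicted = "positive" if probability >= threshold else "negative"
        if predicted == expected:
            correct += 1
        lines.append(f"{predicted}: {probability * 100:.2f}%")
    return lines, correct / len(samples)


# Usage with a stub in place of the endpoint: each "sample" is just its own score.
report, accuracy = run_labelled_samples(
    lambda score: score,
    [(0.9982, "positive"), (0.0125, "positive")],
)
```

Returning the accuracy alongside the printed lines makes it easier to compare runs than reading the percentages by eye.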

mick
