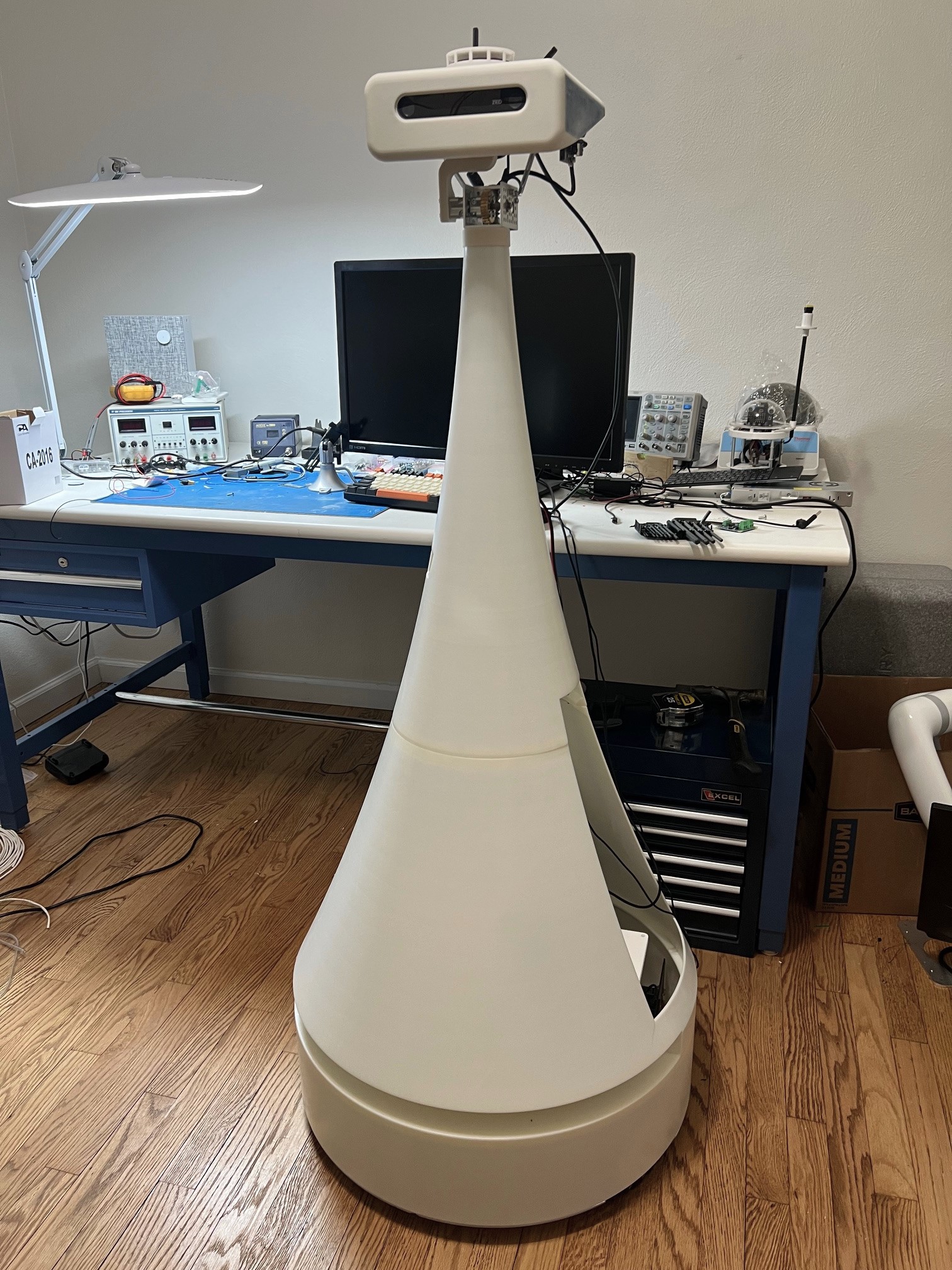

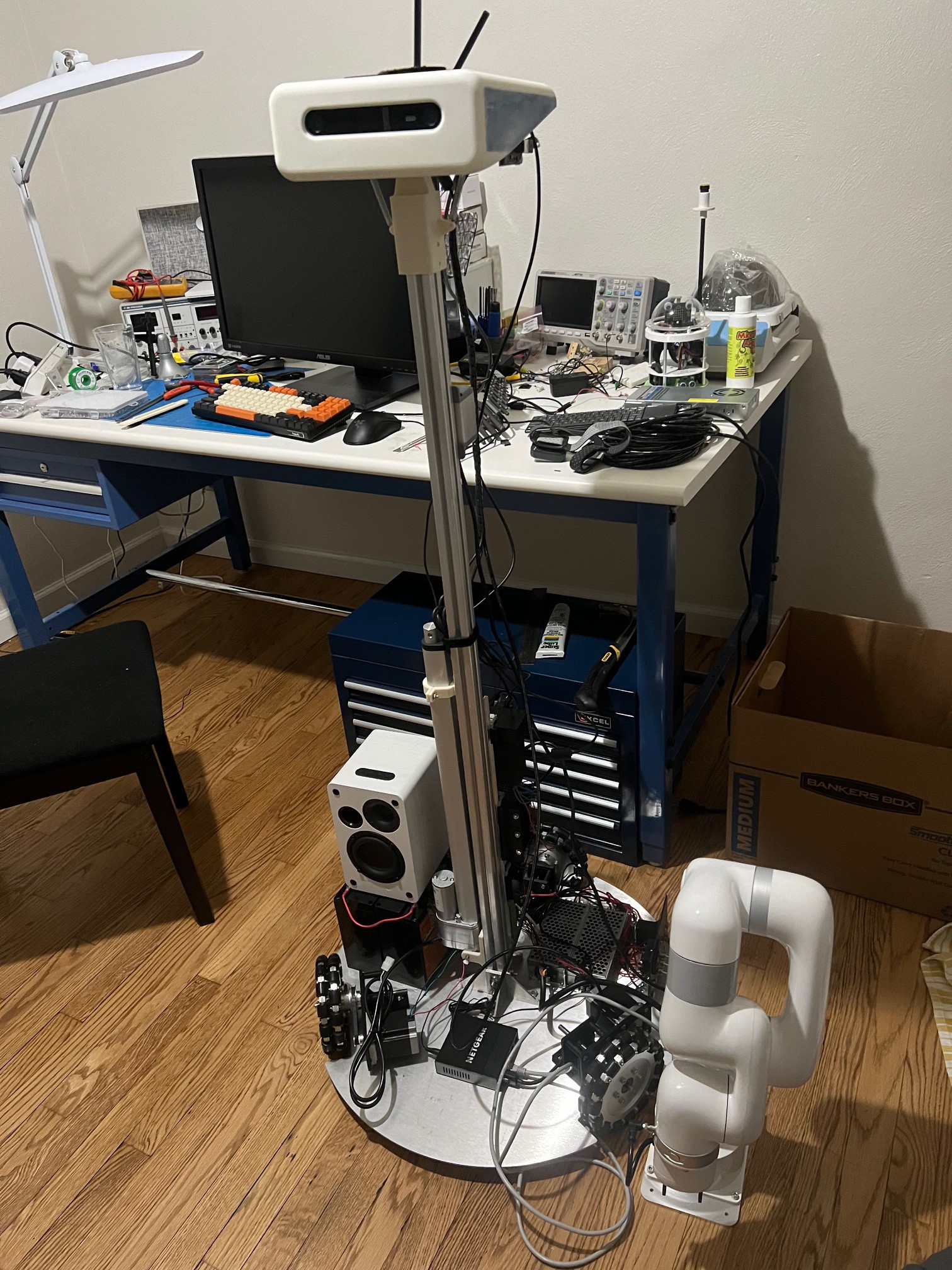

There is a lot to document for this build, so it will likely come in stages. First off, I have always dreamed of having a home robot that could do simple to moderate tasks. The largest problem has been price. To me, bringing down the price of robotics should always be the goal, as this will allow more people to enjoy the benefits.

For this build I'm attempting to keep the parts list at ~5k USD. That is a lot for most people, and it would put a retail version at around 17k USD. Robotics is still an expensive hobby, and with that in mind this will be a development prototype. I will, however, be selecting and designing components with best value, availability, and cost reduction in mind.

Current envisioned tasks for the robot:

- Read a physical book (with my now 8-year-old flipping the pages)

- Wipe down a table

- Pick up items on the floor and place them in a basket. (requested by the wife...)

- Bring a drink from one location to another

- Serve wine/champagne for a party environment

- Greet people at the door

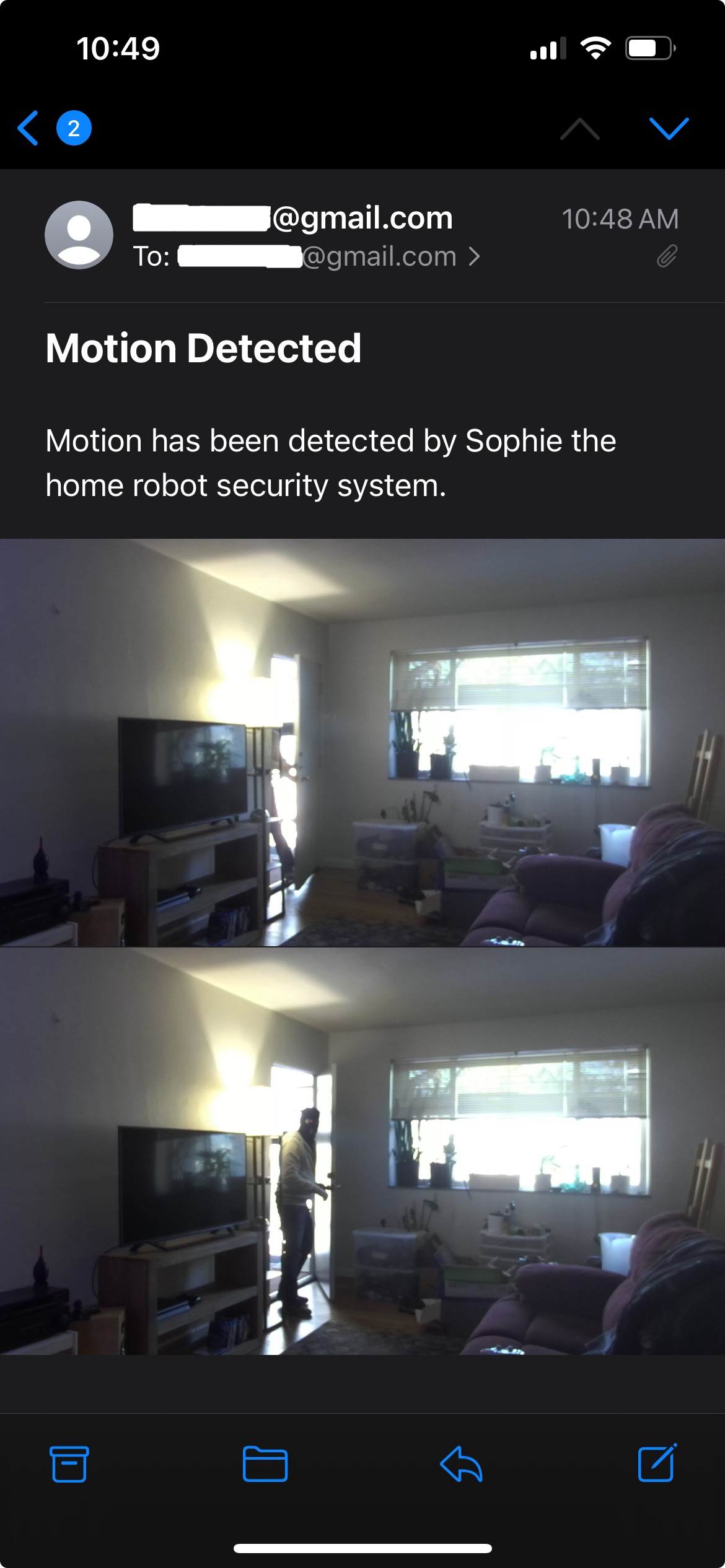

These are rather high-level tasks. Some lower-level (ish) ones would be to use OpenCV to recognize faces and assign names to them, find a wall power socket to self-charge, and report the weather and the current temperature in the room. I may move up to the newer ZED 2 when I have a chance. I like that they have started building sensors into the camera, which I should be able to monitor and report with text to speech, and the IMU should give a better idea of the current head position/localization.
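As a rough sketch of the "assign names to faces" half of that idea: assume some upstream step (OpenCV or otherwise, not shown here) turns each detected face into a fixed-length feature vector. The `FaceRegistry` class, its method names, and the `max_distance` threshold below are all hypothetical and only illustrate nearest-match naming. This is not a tested recognition pipeline.

```python
import math
from typing import List, Optional, Sequence, Tuple


class FaceRegistry:
    """Hypothetical helper: maps known face feature vectors to names."""

    def __init__(self, max_distance: float = 0.6) -> None:
        # Assumed threshold; a real value depends on how the vectors are produced.
        self.max_distance = max_distance
        self._known: List[Tuple[str, Tuple[float, ...]]] = []

    def enroll(self, name: str, vector: Sequence[float]) -> None:
        """Store one reference vector under a name."""
        self._known.append((name, tuple(vector)))

    def identify(self, vector: Sequence[float]) -> Optional[str]:
        """Return the closest enrolled name, or None if nothing is close enough."""
        best_name: Optional[str] = None
        best_dist = math.inf
        for name, known in self._known:
            dist = math.dist(known, vector)  # Euclidean distance
            if dist < best_dist:
                best_name, best_dist = name, dist
        return best_name if best_dist <= self.max_distance else None


if __name__ == "__main__":
    registry = FaceRegistry(max_distance=0.5)
    registry.enroll("person_a", [0.0, 0.0])
    registry.enroll("person_b", [1.0, 1.0])
    print(registry.identify([0.1, 0.0]))
    print(registry.identify([5.0, 5.0]))
```

Returning `None` for an unknown face leaves room for the robot to ask for a name and enroll it, which fits the door-greeting task.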

These tasks will take a lot of engineering and programming to achieve, though I have ideas on ways to reduce the programming side with hardware solutions for some.

The recent cost reduction in high-performance robot arms has made the project possible. The following robot arm was selected based on cost, number of axes, collaborative ability (safety), repeatability, and a smaller built-in control box. https://www.kickstarter.com/projects/ufactory/ufactory-lite-6-most-affordable-collaborative-robot-arm

Apollo Timbers

Paul Crouch

Tim Wilkinson

Roald Lemmens

Keith Elliott
