Features

- real-time convolution reverb

- 150+ impulse responses

- 2 to 4 parallel channels on the Jetson Nano

- per-channel impulse response selection

- per-channel predelay selection

- per-channel, separate dry and wet levels

- per-channel, separate dry and wet panning

- interpolation between impulse responses, with a configurable interpolation time

- configurable MIDI mapping
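To make the first and last-but-one features concrete, here is a minimal CPU-only sketch in C. It is not the project's code: the function names are hypothetical, linear blending of impulse response coefficients is an assumed interpolation scheme, and the direct-form convolution shown here costs one multiply per input sample per IR tap, so a real-time GPU implementation would more likely use FFT-based (partitioned) convolution. The sketch only shows what "convolve with an impulse response" and "interpolate over a set time" mean.

```c
#include <assert.h>
#include <stddef.h>

/* Linearly blend two impulse responses of equal length (hypothetical scheme):
   t = 0 gives ir_old, t = 1 gives ir_new. */
static void blend_ir(const float *ir_old, const float *ir_new, float *ir_out,
                     size_t ir_len, float t)
{
    assert(t >= 0.0f && t <= 1.0f);
    for (size_t k = 0; k < ir_len; k++)
        ir_out[k] = (1.0f - t) * ir_old[k] + t * ir_new[k];
}

/* Advance the blend factor by one block so that a full transition takes
   interp_seconds (hypothetical parameters). Clamps at 1. */
static float advance_blend(float t, size_t block_len, float interp_seconds,
                           float sample_rate)
{
    if (interp_seconds <= 0.0f)
        return 1.0f; /* zero interpolation time: switch immediately */
    t += (float)block_len / (interp_seconds * sample_rate);
    return t > 1.0f ? 1.0f : t;
}

/* Direct-form convolution of one block: y[i] = sum_k h[k] * x[i - k].
   history[j] holds the input sample j + 1 steps before the current block
   (length ir_len - 1, zero-initialised), so the reverb tail carries over
   block boundaries. */
static void convolve_block(const float *x, size_t n, const float *h,
                           size_t ir_len, float *history, float *y)
{
    for (size_t i = 0; i < n; i++) {
        float acc = 0.0f;
        for (size_t k = 0; k < ir_len; k++) {
            float xs = (k <= i) ? x[i - k] : history[k - i - 1];
            acc += h[k] * xs;
        }
        y[i] = acc;
    }
    /* Shift the newest inputs into history, highest index first, so that
       history[j - n] is read before it is overwritten. */
    for (size_t j = ir_len - 1; j-- > 0;)
        history[j] = (j >= n) ? history[j - n] : x[n - 1 - j];
}
```

For a fixed blend factor, blending the coefficients gives the same result as crossfading the outputs of the two convolutions, because convolution is linear in the impulse response.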

Prerequisites

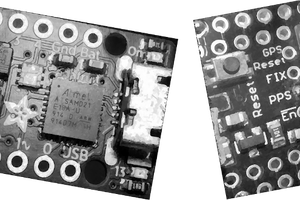

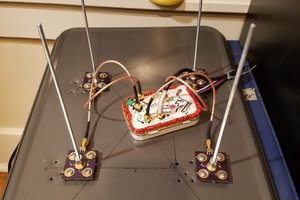

- NVIDIA Jetson (Nano, Xavier NX, AGX Xavier) or Desktop / Laptop with NVIDIA GPU

- External sound card (required for Jetson, optional for other setups). This project has been tested with a Focusrite Scarlett 2i2 and a Roland TR6S (which also presents itself as a sound card).

- MIDI controller (optional; a software controller also works). This project was tested with a Novation Launch Control, so settings.txt includes mappings for that controller.

- Some dark shades to look cool while you're convolving those beats...

Setting up JACK

Install jackd (and probably qjackctl) using your package manager. Try to find your sound device. Nope... Reboot a few times and cross your fingers. Get loads of xruns. Cry a little and smack it with a hammer. Your JACK setup should now magically work.

The Jetson kernel ships without ALSA sequencer support, so start JACK's ALSA backend with -Xnone (no MIDI driver). The convolution code talks to raw MIDI devices directly, without going through JACK.
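Reading raw MIDI means the program receives a plain byte stream and has to decode messages itself. The following is a minimal, hypothetical C parser (not the project's own code) that extracts Control Change messages, honours running status, and skips real-time bytes such as MIDI clock. In practice the bytes would come from `read()` on an ALSA raw MIDI device node such as `/dev/snd/midiC1D0`; the card and device numbers there are an assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* A decoded Control Change message. */
typedef struct {
    int channel;    /* MIDI channel, 0..15 */
    int controller; /* CC number, 0..127 */
    int value;      /* CC value, 0..127 */
} cc_event;

/* Parser state; zero-initialise before use. */
typedef struct {
    uint8_t status;  /* running status byte, 0 if none */
    uint8_t data[2]; /* data bytes of the message in progress */
    int count;       /* number of data bytes collected so far */
} midi_parser;

/* Feed one byte. Returns 1 and fills *ev when a complete Control Change
   message has arrived; returns 0 otherwise. */
static int midi_parse_byte(midi_parser *p, uint8_t b, cc_event *ev)
{
    assert(p != NULL && ev != NULL);
    if (b >= 0xF8)      /* real-time bytes may appear anywhere: ignore */
        return 0;
    if (b & 0x80) {     /* status byte */
        /* channel messages set running status; system common/SysEx clear it */
        p->status = (b < 0xF0) ? b : 0;
        p->count = 0;
        return 0;
    }
    if (p->status == 0) /* data byte without a valid status (e.g. SysEx payload) */
        return 0;
    p->data[p->count++] = b;
    uint8_t type = p->status & 0xF0;
    /* Program Change and Channel Pressure carry one data byte, the rest two */
    int needed = (type == 0xC0 || type == 0xD0) ? 1 : 2;
    if (p->count < needed)
        return 0;
    p->count = 0;       /* keep status: running status lets senders omit it */
    if (type != 0xB0)   /* only Control Change is reported */
        return 0;
    ev->channel = p->status & 0x0F;
    ev->controller = p->data[0];
    ev->value = p->data[1];
    return 1;
}
```

Running status matters for knob controllers, which often send a stream of CC messages with the status byte sent only once.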

Building the source code

https://github.com/limitz/cuda-audio

MIDI Controls

There are 8 MIDI controls per channel. I've mapped them to 8 knobs and switch between reverb channels by changing the controller's MIDI channel. You can also map a single knob to multiple channels to keep them in sync. This is particularly useful with a stereo input: map everything except the pan controls to the same knobs, then pan the dry signals (and optionally the wet signals) to left and right.
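One way to picture this mapping is a lookup table in which each entry ties one knob (MIDI channel plus CC number) to one control on one reverb channel; listing the same knob twice keeps two channels in sync. This C sketch is hypothetical: the struct layout, the `CTL_*` enum names, the limit of four reverb channels, and scaling CC values to 0..1 are all assumptions, not the format of settings.txt.

```c
#include <assert.h>
#include <stddef.h>

/* The eight per-channel controls (hypothetical names). */
enum control {
    CTL_SELECT, CTL_PREDELAY, CTL_DRY, CTL_WET,
    CTL_SPEED, CTL_DRY_PAN, CTL_WET_PAN, CTL_LEVEL, NUM_CONTROLS
};

#define NUM_REVERB_CHANNELS 4 /* assumption: up to 4 channels */

/* One mapping entry: knob (MIDI channel + CC) -> control on a reverb channel. */
typedef struct {
    int midi_channel, cc, reverb_channel;
    enum control ctl;
} mapping;

/* Apply a CC message to every matching entry, scaling 0..127 to 0..1.
   Returns how many entries matched, so one knob can drive several channels. */
static int apply_cc(const mapping *map, size_t n_map, int midi_channel,
                    int cc, int value,
                    float params[NUM_REVERB_CHANNELS][NUM_CONTROLS])
{
    int hits = 0;
    for (size_t i = 0; i < n_map; i++) {
        if (map[i].midi_channel == midi_channel && map[i].cc == cc) {
            assert(map[i].reverb_channel >= 0 &&
                   map[i].reverb_channel < NUM_REVERB_CHANNELS);
            params[map[i].reverb_channel][map[i].ctl] = value / 127.0f;
            hits++;
        }
    }
    return hits;
}
```

For the stereo setup described above, every control except the two pan controls would get one entry per channel with the same knob.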

- SELECT - selects the impulse response to convolve with. The switch from the previous impulse response is interpolated at the rate set by SPEED.

- PREDELAY - adds a delay at the start of the wet signal. Changes are not interpolated (for now), so adjusting it while playing live will cause clicks.

- DRY - dry signal level (the unaltered original signal)

- WET - wet signal level (the convolved signal, delayed by PREDELAY)

- SPEED - interpolation speed between impulse responses when a new one is selected

- DRY PAN - pan (left to right) of the dry signal in the output mix

- WET PAN - pan (left to right) of the wet signal in the output mix

- LEVEL - level of the output mix (dry + wet), but not of the residual
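The signal flow these controls describe can be sketched per sample: the convolved signal passes through a PREDELAY delay line, DRY and WET scale the two signals, the pan controls split each one between left and right, and LEVEL scales the sum. This C sketch is hypothetical: parameters normalised to 0..1, a linear pan law (equal-power panning is a common alternative), and the 48000-sample predelay limit are assumptions, and the residual mentioned under LEVEL is not modelled.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PREDELAY 48000 /* hypothetical limit: 1 s at 48 kHz */

/* Circular delay line for the wet signal; zero-initialise before use. */
typedef struct {
    float buf[MAX_PREDELAY];
    size_t pos; /* next write position */
} delay_line;

/* Write one sample and return the sample written `delay` samples earlier. */
static float delay_process(delay_line *d, float in, size_t delay)
{
    assert(delay < MAX_PREDELAY);
    d->buf[d->pos] = in;
    size_t rd = (d->pos + MAX_PREDELAY - delay) % MAX_PREDELAY;
    float out = d->buf[rd];
    d->pos = (d->pos + 1) % MAX_PREDELAY;
    return out;
}

/* Per-channel mix settings, 0..1 (pan: 0 = left, 1 = right). */
typedef struct {
    float dry, wet, dry_pan, wet_pan, level;
} mix_params;

/* Add one channel's contribution to the stereo output bus (linear pan law). */
static void mix_sample(const mix_params *p, float dry_in, float wet_in,
                       float *left, float *right)
{
    float d = dry_in * p->dry;
    float w = wet_in * p->wet;
    *left  += p->level * (d * (1.0f - p->dry_pan) + w * (1.0f - p->wet_pan));
    *right += p->level * (d * p->dry_pan + w * p->wet_pan);
}
```

Because `mix_sample` accumulates into the output, calling it once per reverb channel sums all channels onto the same stereo bus. Changing the delay length abruptly makes the read position jump, which is consistent with the clicks noted under PREDELAY.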

E/S Pronk

Raymond Lutz

Dan Julio

Patrick

Martin

I'm very interested in the work done, but what's the point of using advanced math reverb when the Nvidia board has enough RAM and the reverb can be done arithmetically?