Livestock and Pet Geofencing

An autonomous system that monitors animals within a zone.

Team: SoFlo Primary Key

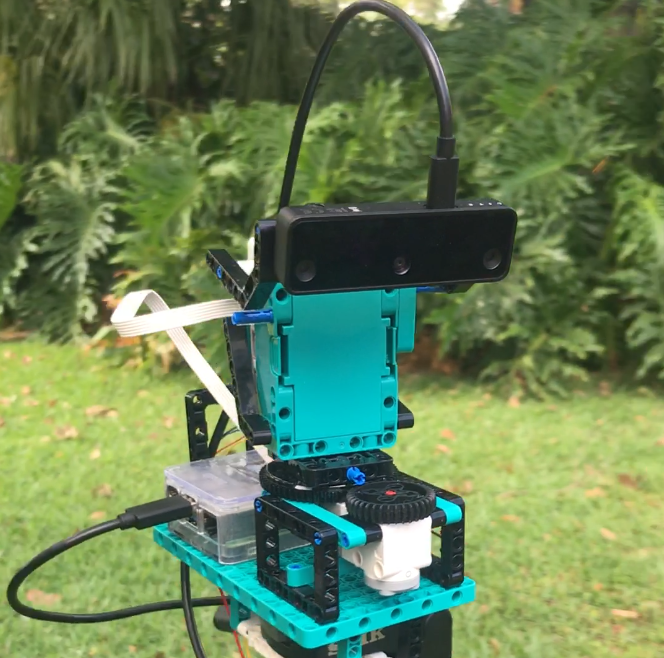

We are finalists in the OpenCV Spatial AI Contest. We will post information and updates about our project here as we approach the contest submission deadline of April 1, 2022. We will be using the OAK-D-Lite camera and the LEGO Mindstorms Robot Inventor kit.

Problem Statement

Monitoring livestock and pets within a zone can be a struggle. A traditional fence can break without the owner knowing that their animals are loose. Loose animals can lead to property damage, loss of the animal, panic, and heartbreak. We propose an autonomous system that monitors animals and sends a notification when an animal crosses the boundary of a predetermined zone.

This system consists of an autonomous module and a base station. The autonomous module consists of the OAK-D-Lite camera, a pan-and-tilt base, and a Raspberry Pi with GPS, IMU, and LoRa. Ideally, the autonomous module is suspended from a zipline trolley, which lets the system monitor a much larger zone. For smaller zones, the system can instead be fixed atop a good vantage point.

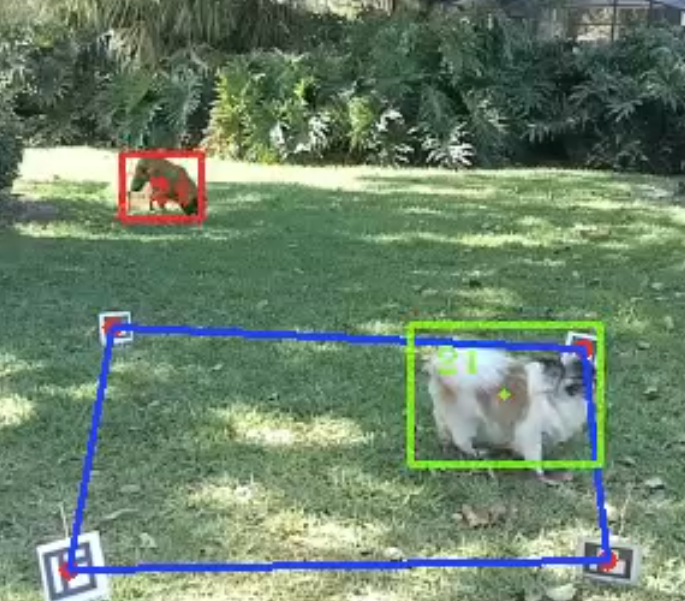

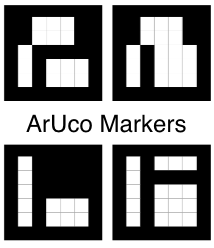

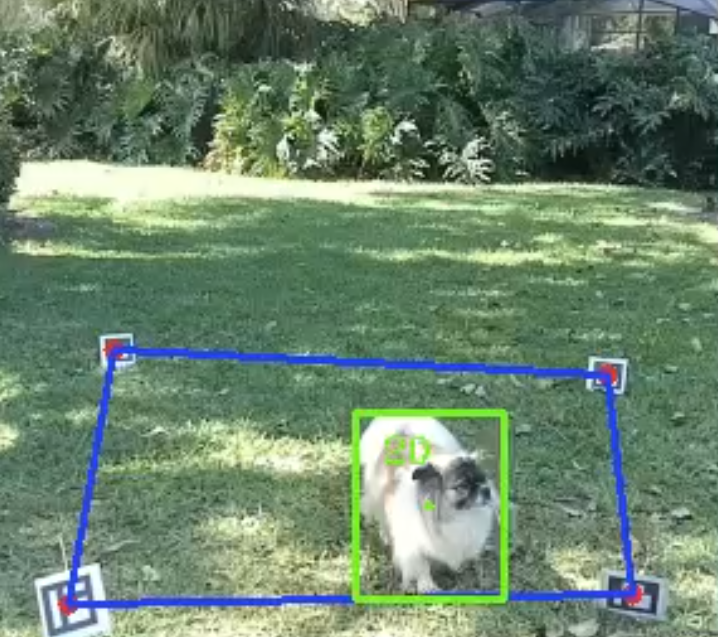

The base station is used to set the boundary of the zone and to relay LoRa notifications from the autonomous module to a wireless local area network. The user can then receive the notifications on a mobile device. The user may set up the zone either by entering GPS coordinates or by printing ArUco markers and placing them at the vertices of the zone. The ArUco markers are needed only during setup and can be removed afterward.
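To illustrate how a zone entered as GPS coordinates could be checked against an animal's position, here is a minimal sketch using the standard ray-casting point-in-polygon test. It is not the project's actual code: the function name `point_in_zone`, the `LatLon` type, and the paddock coordinates are all hypothetical, and the sketch assumes the zone is small enough to treat latitude and longitude as flat x/y coordinates.

```python
from typing import List, Tuple

LatLon = Tuple[float, float]  # (latitude, longitude) in decimal degrees


def point_in_zone(point: LatLon, vertices: List[LatLon]) -> bool:
    """Ray-casting test: True if `point` lies inside the polygon `vertices`.

    Assumption: the zone is small, so lat/lon are treated as planar x/y.
    """
    lat, lon = point
    inside = False
    n = len(vertices)
    for i in range(n):
        lat1, lon1 = vertices[i]
        lat2, lon2 = vertices[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude at which this edge crosses the point's latitude.
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross_lon:
                inside = not inside  # each crossing flips inside/outside
    return inside


# Hypothetical square paddock; the coordinates are invented for illustration.
zone = [
    (26.1000, -80.2000),
    (26.1000, -80.1990),
    (26.1010, -80.1990),
    (26.1010, -80.2000),
]

print(point_in_zone((26.1005, -80.1995), zone))  # animal inside the paddock
print(point_in_zone((26.1020, -80.1995), zone))  # animal north of the paddock
```

The same test would work on pixel coordinates if the vertices came from detected marker positions rather than typed-in GPS values.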

The out-of-zone detection pipeline detects the presence of an animal, classifies its species, tracks it, and compares its present location to the zone’s mask. The animal classification stage is optional and is utilized by users who want to detect unwanted animals.
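The final stage, comparing an animal's location to the zone's mask, can be sketched with plain bit-wise operations. The example below is only an illustration under stated assumptions, not the project's implementation: masks are stored as one Python integer per image row (bit `x` set means pixel `(x, y)` belongs to the mask), both the zone and the animal are simplified to rectangles, and the helper names `rect_mask` and `fraction_outside` are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive


def rect_mask(height: int, box: Box) -> List[int]:
    """Row-wise bit mask: bit x of row y is 1 when pixel (x, y) is in `box`."""
    x0, y0, x1, y1 = box
    row_bits = ((1 << (x1 - x0)) - 1) << x0
    return [row_bits if y0 <= y < y1 else 0 for y in range(height)]


def fraction_outside(zone: List[int], animal: List[int]) -> float:
    """Share of the animal's pixels that fall outside the zone mask."""
    total = sum(bin(a).count("1") for a in animal)
    # `a & ~z` keeps only the animal bits that are NOT set in the zone row.
    outside = sum(bin(a & ~z).count("1") for a, z in zip(animal, zone))
    return outside / total if total else 0.0


# Hypothetical 8-row frame; the zone covers x in [2, 12) and y in [1, 7).
zone_mask = rect_mask(8, (2, 1, 12, 7))

inside_box = rect_mask(8, (4, 2, 6, 4))       # fully inside the zone
straddling_box = rect_mask(8, (10, 2, 14, 4))  # half over the right edge

print(fraction_outside(zone_mask, inside_box))      # 0.0
print(fraction_outside(zone_mask, straddling_box))  # 0.5
```

A notification could then be triggered when the fraction exceeds some threshold; the right threshold is a tuning choice this sketch leaves open.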

| Stage | Method |
|---|---|
| Animal Detection | Microsoft CameraTrap Project |
| Animal Classification | ResNetST-101 |
| ID Tracking | Centroid-Tracking |
| Out of Zone Detection | Bit-wise Comparison |
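As a rough illustration of the ID tracking stage, the sketch below shows one simple form of centroid tracking: each known ID is matched greedily to the nearest detection centroid in the next frame. The `CentroidTracker` class and its `max_distance` parameter are hypothetical names chosen for this example. Unlike fuller trackers, it drops an ID as soon as it goes unmatched for a single frame.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) centroid of a detection, in pixels


class CentroidTracker:
    """Greedy nearest-centroid tracker (illustrative sketch only)."""

    def __init__(self, max_distance: float = 50.0):
        self.max_distance = max_distance  # farthest jump allowed per frame
        self.next_id = 0
        self.objects: Dict[int, Point] = {}

    def update(self, centroids: List[Point]) -> Dict[int, Point]:
        """Match this frame's centroids to known IDs and return id -> centroid."""
        unmatched = list(centroids)
        updated: Dict[int, Point] = {}
        for obj_id, prev in self.objects.items():
            if not unmatched:
                break
            nearest = min(unmatched, key=lambda c: math.dist(prev, c))
            if math.dist(prev, nearest) <= self.max_distance:
                updated[obj_id] = nearest
                unmatched.remove(nearest)
        # Any centroid left over is treated as a newly seen animal.
        for c in unmatched:
            updated[self.next_id] = c
            self.next_id += 1
        # IDs that found no match are dropped here (no grace period).
        self.objects = updated
        return dict(updated)


tracker = CentroidTracker(max_distance=30)
print(tracker.update([(10, 10), (100, 100)]))  # two new animals: IDs 0 and 1
print(tracker.update([(104, 98), (12, 14)]))   # same IDs follow the movement
print(tracker.update([(13, 15), (300, 300)]))  # ID 1 is lost, ID 2 appears
```

Keeping a stable ID per animal is what lets the out-of-zone check report which animal crossed the boundary rather than just that some detection did.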

JT

Anand Uthaman

Yuta Suito
